How to use the GPT-3 language model

5th June 2022

I ran a Twitter poll the other day asking if people had tried GPT-3 and why or why not. The winning option, by quite a long way, was “No, I don’t know how to”. So here’s how to try it out, for free, without needing to write any code.

You don’t need to use the API to try out GPT-3

I think a big reason people have been put off trying out GPT-3 is that OpenAI market it as the OpenAI API. This sounds like something that’s going to require quite a bit of work to get started with.

But access to the API includes access to the GPT-3 playground, which is an interface that is incredibly easy to use. You get a text box, you type things in it, you press the “Submit” button. That’s all you need to know.

How to sign up

To try out GPT-3 for free you need three things: an email address, a phone number that can receive SMS messages, and a location in one of the countries and regions that OpenAI supports.

- Create an account at https://openai.com/join/, either with an email address and password or by signing up with your Google or Microsoft account

- Verify your email address (click the link in the email they send you)

- Enter your phone number and wait for their text

- Enter the code that they texted to you

New accounts get $18 of credit for the API, which expires after three months. Each query should cost single-digit cents to execute, so you can do a lot of experimentation without needing to spend any money.
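To put that credit in perspective, here’s a rough back-of-the-envelope sketch. It assumes a flat, hypothetical cost per query in whole cents; real usage is billed by the amount of text processed (tokens), so actual costs will vary with the length of your prompt and the response.

```python
# Rough budget sketch: how many queries does the free credit cover?
# Assumption: every query costs the same flat amount in whole cents.
# Real API usage is billed by tokens, so this is only an estimate.

FREE_CREDIT_CENTS = 1800  # $18 of starting credit, expressed in cents


def queries_affordable(credit_cents: int, cost_per_query_cents: int) -> int:
    """Return how many whole queries the credit covers at a flat per-query cost."""
    if cost_per_query_cents <= 0:
        raise ValueError("cost per query must be a positive number of cents")
    # Integer division: a partial query doesn't count
    return credit_cents // cost_per_query_cents


if __name__ == "__main__":
    # "Single-digit cents" spans 1 to 9 cents per query
    for cost in (1, 5, 9):
        n = queries_affordable(FREE_CREDIT_CENTS, cost)
        print(f"{cost} cent(s) per query: about {n} queries")
```

Even at the expensive end of that range the credit works out to around 200 queries, which is plenty for trying things out.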

How to use the playground

Once you’ve activated your account, head straight to the Playground:

https://beta.openai.com/playground

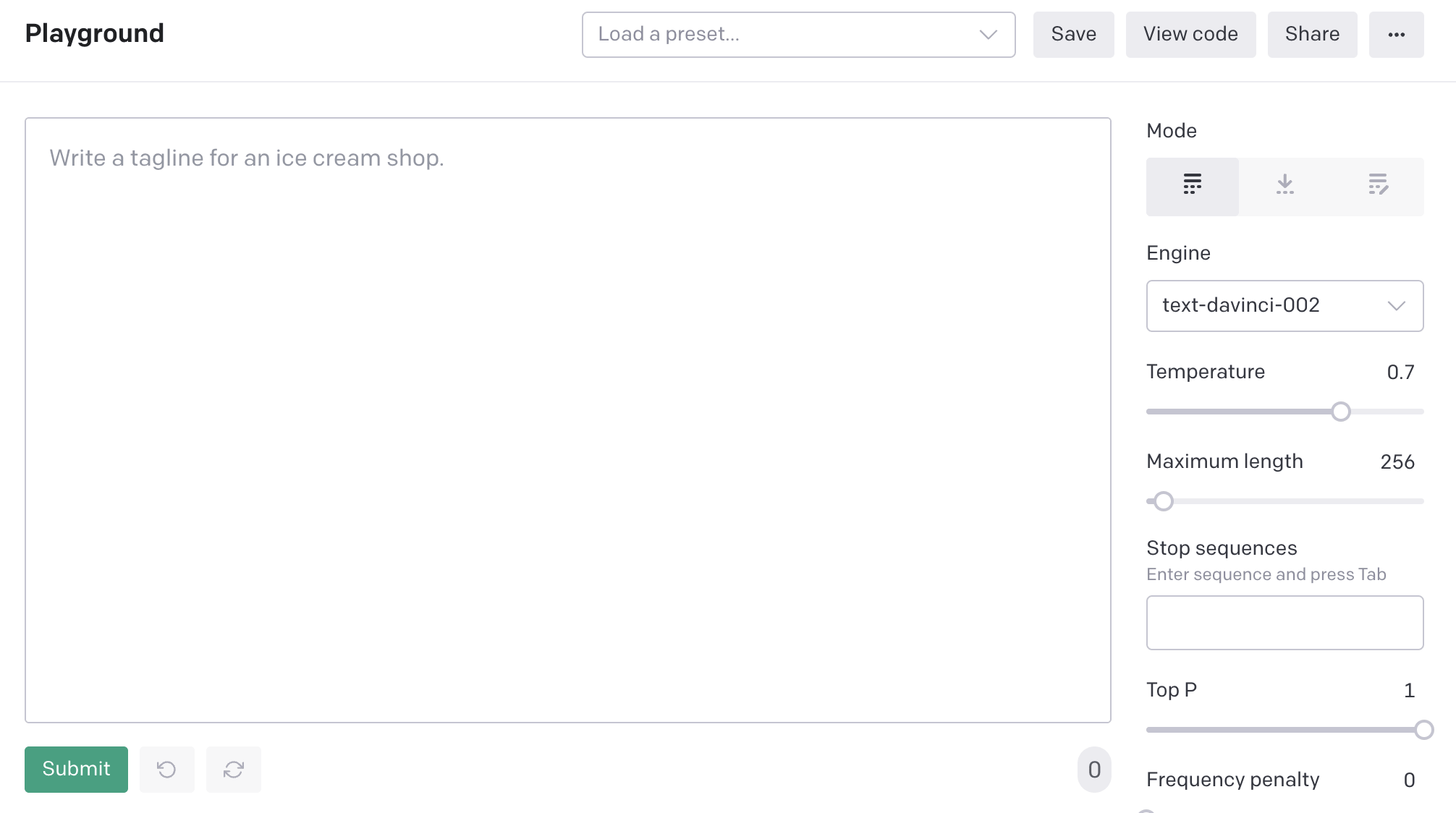

The interface looks like this (it works great on mobile too):

The only parts of this interface that matter are the text box and the Submit button. The right-hand panels can be used to control some settings, but the default settings work extremely well: I’ve been playing with GPT-3 for months and 99% of my queries used those defaults.

Now you can just type stuff into the box and hit that “Submit” button.

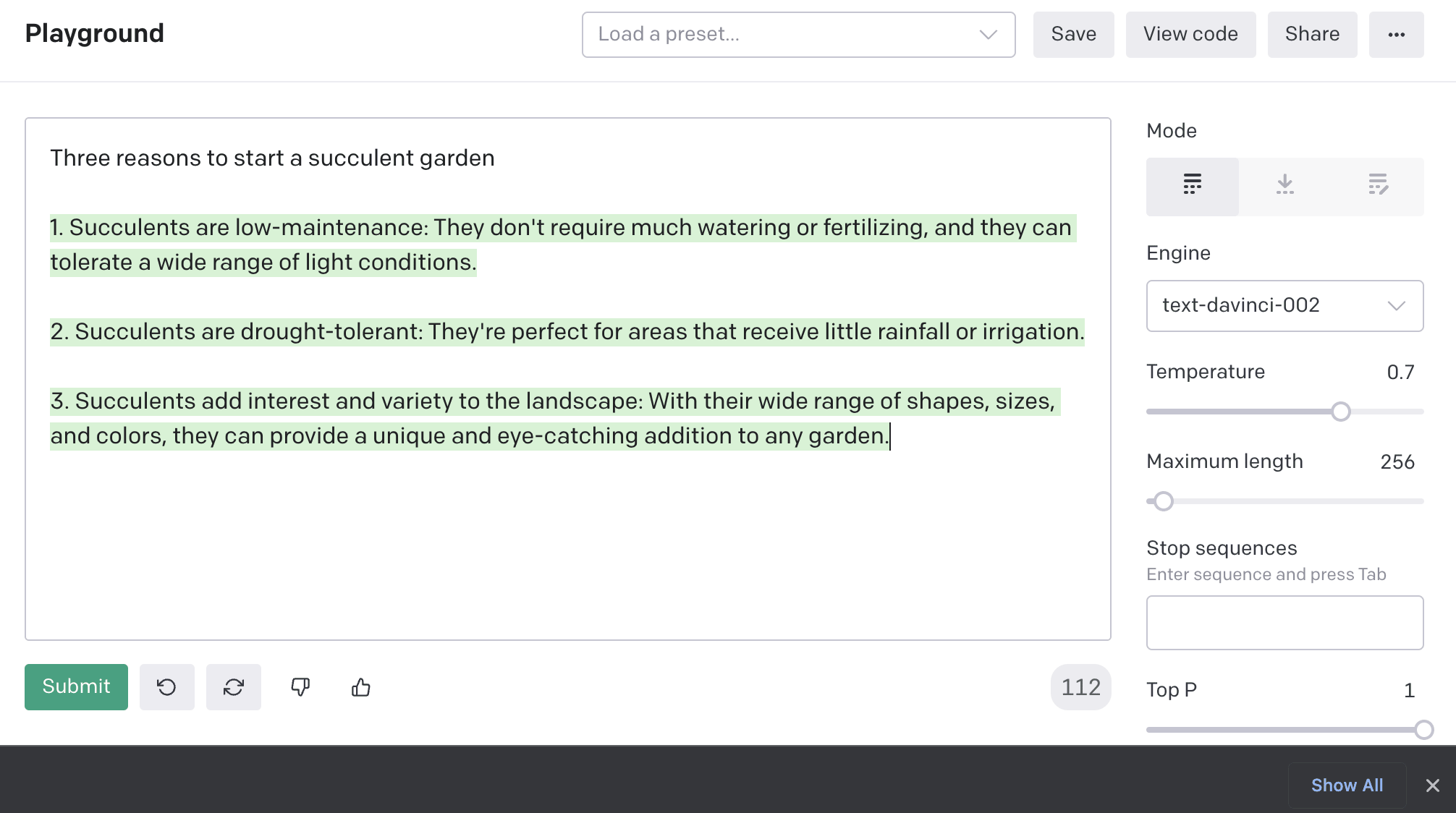

Try this one to get you started:

Three reasons to start a succulent garden

Prompt engineering

The text that you entered there is called a “prompt”. Everything about working with GPT-3 is prompt engineering—trying different prompts, and iterating on specific prompts to see what kind of results you can get.

It’s a programming activity that actually feels a lot more like spellcasting. It’s almost impossible to reason about: I imagine even the creators of GPT-3 could not explain to you why certain prompts produce great results while others do not.

It’s also absurdly good fun.

Adding more to the generated text

GPT-3 will often let you hit the Submit button more than once to keep extending the output, especially if your prompt has scope to keep growing in length. “Tell me an ongoing saga about a pelican fighting a cheesecake” is a good example.

Each additional click of “Submit” costs more credit.

You can also add your own text anywhere in the GPT-3 output, or at the end. You can use this to prompt for more output, or ask for clarification. I like saying “Now add a twist” to story prompts to see what it comes up with.

Further reading

- OpenAI’s examples are an encyclopedia of interesting things you can do with prompts

- A Datasette tutorial written by GPT-3 describes my experiments getting GPT-3 to write a tutorial for my Datasette project

- Using GPT-3 to explain how code works shows how I use GPT-3 to get explanations of unfamiliar source code

- How GPT3 Works—Visualizations and Animations is a great explanation of how GPT-3 works, illustrated with animations

- OpenAI GPT-3: Everything You Need to Know offers a good overview of GPT-3

- GPT-3 as a muse: generating lyrics by Vincent Bons walks through some advanced GPT-3 fine-tuning techniques to get it to output usable song lyrics inspired by the styles of various existing artists
