Tuesday, 13th August 2024

mlx-whisper


Apple's MLX framework for running GPU-accelerated machine learning models on Apple Silicon keeps growing new examples. mlx-whisper is a Python package for running OpenAI's Whisper speech-to-text model. It's really easy to use:

pip install mlx-whisper

Then in a Python console:

>>> import mlx_whisper

>>> result = mlx_whisper.transcribe(

... "/tmp/recording.mp3",

... path_or_hf_repo="mlx-community/distil-whisper-large-v3")

.gitattributes: 100%|███████████| 1.52k/1.52k [00:00<00:00, 4.46MB/s]

config.json: 100%|██████████████| 268/268 [00:00<00:00, 843kB/s]

README.md: 100%|████████████████| 332/332 [00:00<00:00, 1.95MB/s]

Fetching 4 files: 50%|████▌ | 2/4 [00:01<00:01, 1.26it/s]

weights.npz: 63%|██████████ ▎ | 944M/1.51G [02:41<02:15, 4.17MB/s]

>>> result.keys()

dict_keys(['text', 'segments', 'language'])

>>> result['language']

'en'

>>> len(result['text'])

100105

>>> print(result['text'][:3000])

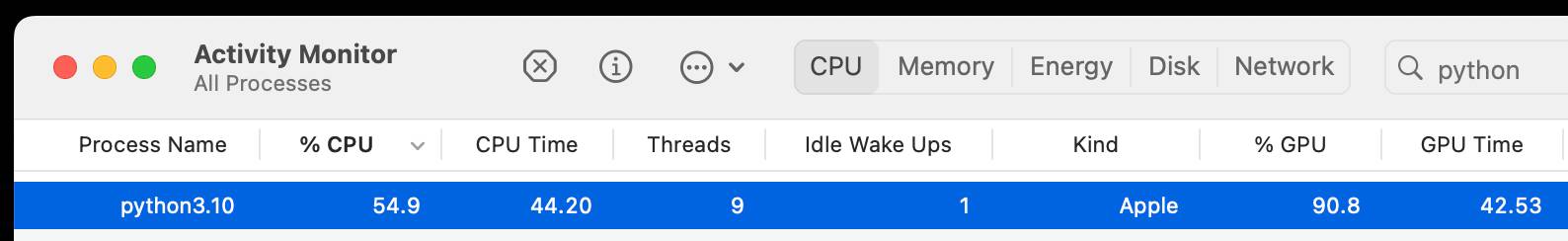

This is so exciting. I have to tell you, first of all ...Here's Activity Monitor confirming that the Python process is using the GPU for the transcription:

This example downloaded a 1.5GB model from Hugging Face and stashed it in my ~/.cache/huggingface/hub/models--mlx-community--distil-whisper-large-v3 folder.

Calling .transcribe(filepath) without the path_or_hf_repo argument uses the much smaller (74.4 MB) whisper-tiny-mlx model.
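
The result dictionary above has a segments key alongside text and language. Here's a minimal sketch for turning those segments into timestamped lines. It assumes each segment is a dict with start and end times in seconds plus a text field, which is how Whisper-style output is usually shaped but isn't shown in the session above. The helper names format_timestamp and segments_to_lines are made up for this example.

```python
def format_timestamp(seconds):
    """Render a time offset in seconds as HH:MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def segments_to_lines(result):
    """Build one timestamped line per segment.

    Assumes each segment has 'start', 'end' (seconds) and 'text' keys.
    """
    lines = []
    for seg in result.get("segments", []):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"[{start} -> {end}] {seg['text'].strip()}")
    return lines


# Stand-in data shaped like the assumed output, so this runs without a model
sample = {
    "text": " Hello there. General Kenobi.",
    "language": "en",
    "segments": [
        {"start": 0.0, "end": 2.5, "text": " Hello there."},
        {"start": 2.5, "end": 4.0, "text": " General Kenobi."},
    ],
}
for line in segments_to_lines(sample):
    print(line)
```

With a real transcription you would pass the result of mlx_whisper.transcribe(...) in place of sample.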

A few people asked how this compares to whisper.cpp. Bill Mill compared the two and found mlx-whisper to be about 3x faster on an M1 Max.

Update: this note from Josh Marshall:

That '3x' comparison isn't fair; completely different models. I ran a test (14" M1 Pro) with the full (non-distilled) large-v2 model quantised to 8 bit (which is my pick), and whisper.cpp was 1m vs 1m36 for mlx-whisper.

I've now done a better test, using the MLK audio, multiple runs and 2 models (distil-large-v3, large-v2-8bit)... and mlx-whisper is indeed 30-40% faster
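
If you want to run this kind of comparison yourself, here's a rough sketch of a timing helper that repeats a call several times and reports the median. It isn't the method used in either test above; time_runs is a hypothetical helper, and the default of three runs is an arbitrary choice.

```python
import statistics
import time


def time_runs(fn, runs=3):
    """Call fn() `runs` times and summarise the wall-clock durations."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(durations),
        "min_s": min(durations),
    }


# Cheap stand-in workload so the sketch runs anywhere
summary = time_runs(lambda: sum(range(100_000)), runs=5)
print(summary)
```

To time a transcription, pass something like lambda: mlx_whisper.transcribe("/tmp/recording.mp3", path_or_hf_repo="mlx-community/distil-whisper-large-v3") as fn. The first run may include the model download, so it's worth doing a warm-up call before measuring.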

Help wanted: AI designers. Nick Hobbs:

LLMs feel like genuine magic. Yet, somehow we haven’t been able to use this amazing new wand to churn out amazing new products. This is puzzling.

Why is it proving so difficult to build mass-market appeal products on top of this weird and powerful new substrate?

Nick thinks we need a new discipline - an AI designer (which feels to me like the design counterpart to an AI engineer). Here's Nick's list of skills they need to develop:

- Just like designers have to know their users, this new person needs to know the new alien they’re partnering with. That means they need to be just as obsessed about hanging out with models as they are with talking to users.

- The only way to really understand how we want the model to behave in our application is to build a bunch of prototypes that demonstrate different model behaviors. This — and a need to have good intuition for the possible — means this person needs enough technical fluency to look kind of like an engineer.

- Each of the behaviors you’re trying to design have near limitless possibility that you have to wrangle into a single, shippable product, and there’s little to no prior art to draft off of. That means this person needs experience facing the kind of “blank page” existential ambiguity that founders encounter.

New Django {% querystring %} template tag. Django 5.1 came out last week and includes a neat new template tag which solves a problem I've faced a bunch of times in the past.

{% querystring color="red" size="S" %}

Adds ?color=red&size=S to the current URL - keeping any other existing parameters and replacing the current value for color or size if it's already set.

{% querystring color=None %}

Removes the ?color= parameter if it is currently set.

If the value passed is a list it will append ?color=red&color=blue for as many items as exist in the list.

You can access values in variables and you can also assign the result to a new template variable rather than outputting it directly to the page:

{% querystring page=page.next_page_number as next_page %}
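
To make the set, remove and list rules described above concrete, here's a small standard-library sketch that mimics them on a plain query string. It is not Django's implementation, and the function name querystring is just borrowed from the tag for readability. Returning an empty string when no parameters remain is a choice made for this sketch, not a claim about what the real tag emits.

```python
from urllib.parse import parse_qs, urlencode


def querystring(current_query, **changes):
    """Apply querystring-tag-style changes to an existing query string."""
    params = parse_qs(current_query.lstrip("?"), keep_blank_values=True)
    for key, value in changes.items():
        if value is None:
            # None removes the parameter if it is currently set
            params.pop(key, None)
        elif isinstance(value, (list, tuple)):
            # A list becomes one key=value pair per item
            params[key] = [str(item) for item in value]
        else:
            # Anything else replaces the current value
            params[key] = [str(value)]
    encoded = urlencode(params, doseq=True)
    return f"?{encoded}" if encoded else ""


print(querystring("?page=2&color=blue", color="red", size="S"))
print(querystring("?color=red&page=2", color=None))
print(querystring("", color=["red", "blue"]))
```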

Other things that caught my eye in Django 5.1:

- PostgreSQL connection pools.

- The new LoginRequiredMiddleware for making every page in an application require login.

- The SQLite database backend now accepts init_command for setting things like PRAGMA cache_size=2000 on new connections.

- SQLite can also be passed "transaction_mode": "IMMEDIATE" to configure the behaviour of transactions.
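
Here's a sketch of how those two SQLite options might look in a settings.py file, assuming they go in the OPTIONS dictionary of the database configuration. The db.sqlite3 filename is a placeholder.

```python
# settings.py fragment (sketch): SQLite options new in Django 5.1
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.sqlite3",
        "NAME": "db.sqlite3",  # placeholder path
        "OPTIONS": {
            # Executed each time a new connection is opened
            "init_command": "PRAGMA cache_size=2000;",
            # Begin transactions in IMMEDIATE mode
            "transaction_mode": "IMMEDIATE",
        },
    }
}
```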