Video scraping: extracting JSON data from a 35 second screen capture for less than 1/10th of a cent

17th October 2024

The other day I found myself needing to add up some numeric values that were scattered across twelve different emails.

I didn’t particularly feel like copying and pasting all of the numbers out one at a time, so I decided to try something different: could I record a screen capture while browsing around my Gmail account and then extract the numbers from that video using Google Gemini?

This turned out to work incredibly well.

AI Studio and QuickTime

I recorded the video using QuickTime Player on my Mac: File -> New Screen Recording. I dragged a box around a portion of my screen containing my Gmail account, then clicked on each of the emails in turn, pausing for a couple of seconds on each one.

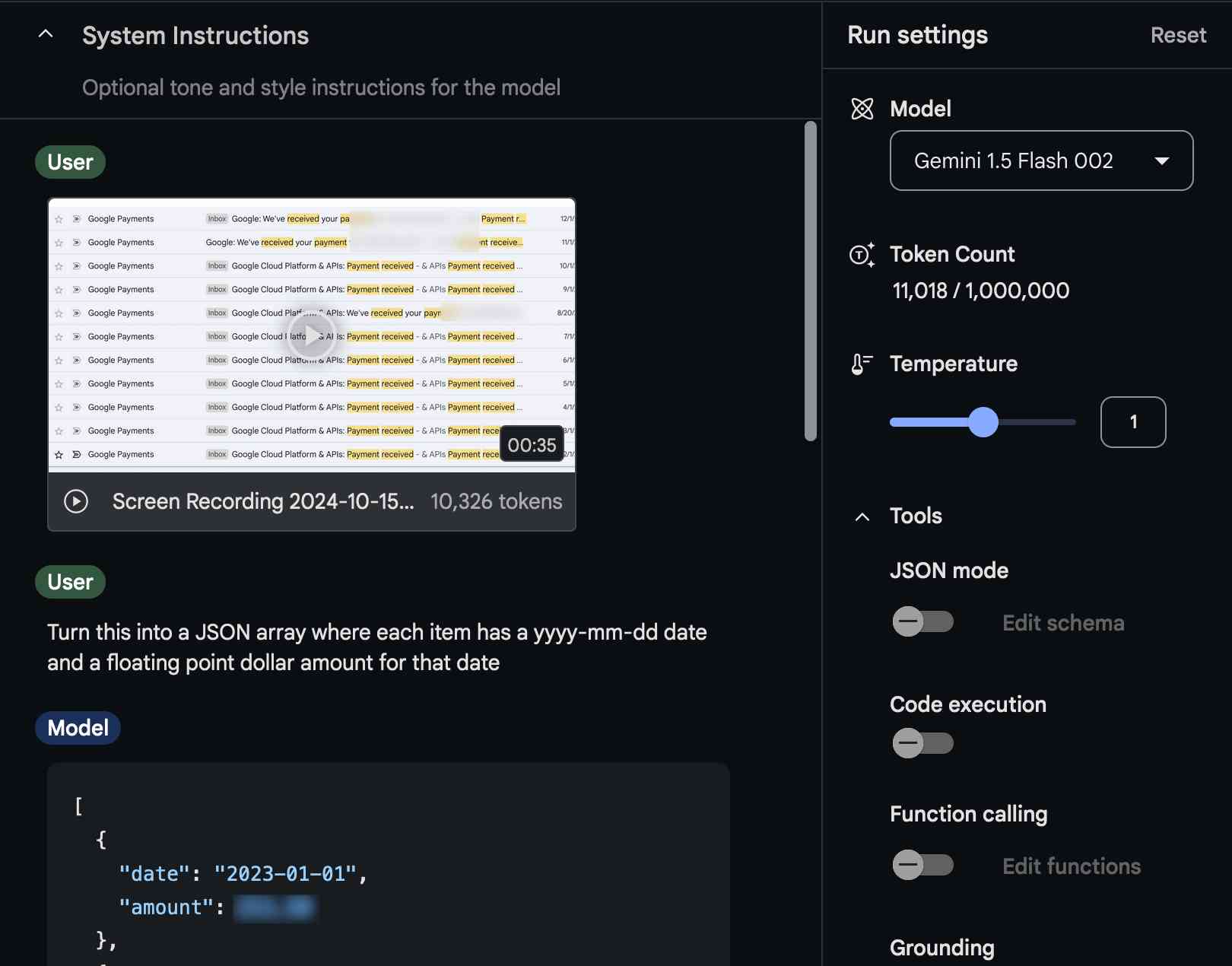

I uploaded the resulting file directly into Google’s AI Studio tool and prompted the following:

Turn this into a JSON array where each item has a yyyy-mm-dd date and a floating point dollar amount for that date

... and it worked. It spat out a JSON array like this:

    [
      {
        "date": "2023-01-01",
        "amount": 2...
      },
      ...
    ]

I wanted to paste that into Numbers, so I followed up with:

turn that into copy-pastable csv

Which gave me back the same data formatted as CSV.
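Since the original goal was to add up the amounts, the same JSON can also be totalled and converted to CSV locally. The following is a minimal Python sketch of that step, not what was actually run: the sample records are invented for illustration (the real output is elided above), and the names `total_amount` and `to_csv` are hypothetical.

```python
import csv
import io
import json

# Hypothetical sample in the same shape as the model's output;
# these dates and amounts are invented for illustration.
SAMPLE_JSON = """
[
  {"date": "2023-01-01", "amount": 12.50},
  {"date": "2023-02-01", "amount": 7.25},
  {"date": "2023-03-01", "amount": 30.00}
]
"""


def total_amount(records):
    """Add up the "amount" field across all records."""
    return sum(float(r["amount"]) for r in records)


def to_csv(records):
    """Render the records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["date", "amount"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


records = json.loads(SAMPLE_JSON)
print(total_amount(records))
print(to_csv(records))
```

Doing the sum in code rather than asking the model for a total keeps the arithmetic deterministic; the model is only trusted with the transcription step.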

You should never trust these things not to make mistakes, so I re-watched the 35 second video and manually checked the numbers. It got everything right.

I had intended to use Gemini 1.5 Pro, aka Google’s best model... but it turns out I forgot to select the model and I’d actually run the entire process using the much less expensive Gemini 1.5 Flash 002.

How much did it cost?

According to AI Studio I used 11,018 tokens, of which 10,326 were for the video.

Gemini 1.5 Flash charges $0.075/1 million tokens (the price dropped in August).

11018/1000000 = 0.011018

0.011018 * $0.075 = $0.00082635

So this entire exercise should have cost me just under 1/10th of a cent!
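The same arithmetic as a small Python sketch, using the token count and price quoted above. It assumes, as the calculation above does, that every token is billed at the one flat rate; the function name is hypothetical.

```python
def cost_in_dollars(tokens, price_per_million):
    """Cost of `tokens` tokens at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


TOKENS = 11_018            # total reported by AI Studio
PRICE_PER_MILLION = 0.075  # dollars per 1 million tokens

dollars = cost_in_dollars(TOKENS, PRICE_PER_MILLION)
cents = dollars * 100  # dollars to cents: multiply by 100

print(f"${dollars:.8f}")
print(f"{cents:.6f} cents")
```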

And in fact, it was free. Google AI Studio currently “remains free of charge regardless of if you set up billing across all supported regions”. I believe that means they can train on your data though, which is not the case for their paid APIs.

The alternatives aren’t actually that great

Let’s consider the alternatives here.

- I could have clicked through the emails and copied out the data manually one at a time. This is error prone and kind of boring. For twelve emails it would have been OK, but for a hundred it would have been a real pain.

- Accessing my Gmail data programmatically. This seems to get harder every year. It’s still possible to access it via IMAP right now if you set up a dedicated app password, but that’s a whole lot of work for a one-off scraping task. The official API is no fun at all.

- Some kind of browser automation (Playwright or similar) that can click through my Gmail account for me. Even with an LLM to help write the code this is still a lot more work, and it doesn’t help deal with formatting differences in emails either—I’d have to solve the email parsing step separately.

- Using some kind of much more sophisticated pre-existing AI tool that has access to my email. A separate Google product also called Gemini can do this if you grant it access, but my results with that so far haven’t been particularly great. AI tools are inherently unpredictable. I’m also nervous about giving any tool full access to my email account due to the risk from things like prompt injection.

Video scraping is really powerful

The great thing about this video scraping technique is that it works with anything that you can see on your screen... and it puts you in total control of what you end up exposing to the AI model.

There’s no level of website authentication or anti-scraping technology that can stop me from recording a video of my screen while I manually click around inside a web application.

The results I get depend entirely on how thoughtful I was about how I positioned my screen capture area and how I clicked around.

There is no setup cost for this at all—sign into a site, hit record, browse around a bit and then dump the video into Gemini.

And the cost is so low that I had to re-run my calculations three times to make sure I hadn’t made a mistake.

I expect I’ll be using this technique a whole lot more in the future. It also has applications in the data journalism world, which frequently involves the need to scrape data from sources that really don’t want to be scraped.

A note on reliability

Added 22nd December 2024. As with anything involving LLMs, it’s worth noting that you cannot trust these models to return exactly correct results with 100% reliability. I verified the results here manually through eyeball comparison of the JSON to the underlying video, but in a larger project this may not be feasible. Consider spot-checks or other strategies for double-checking the results, especially if mistakes could have meaningful real-world impact.
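One possible shape for those checks, as a hedged Python sketch: a structural pass that flags records that are obviously malformed, plus a random sample to compare against the video by hand. The function names and the sample records are hypothetical. Note that the structural pass cannot catch a plausible-looking but wrong number, which is what the manual sample is for.

```python
import random
from datetime import datetime


def find_invalid(records):
    """Return the records that fail basic shape checks."""
    invalid = []
    for record in records:
        try:
            # Raises ValueError if the string cannot be parsed
            # as a year-month-day date (e.g. February 30th).
            datetime.strptime(record["date"], "%Y-%m-%d")
            amount = record["amount"]
            if isinstance(amount, bool) or not isinstance(amount, (int, float)):
                raise ValueError("amount is not a number")
        except (KeyError, TypeError, ValueError):
            invalid.append(record)
    return invalid


def pick_spot_checks(records, k, seed=0):
    """Choose up to k records at random to verify by hand."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


# Invented records for illustration only.
sample_records = [
    {"date": "2023-01-01", "amount": 12.5},
    {"date": "2023-02-30", "amount": 7.25},  # impossible date
    {"date": "2023-03-01", "amount": "30"},  # amount is a string
]

print(find_invalid(sample_records))
print(pick_spot_checks(sample_records, k=2))
```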

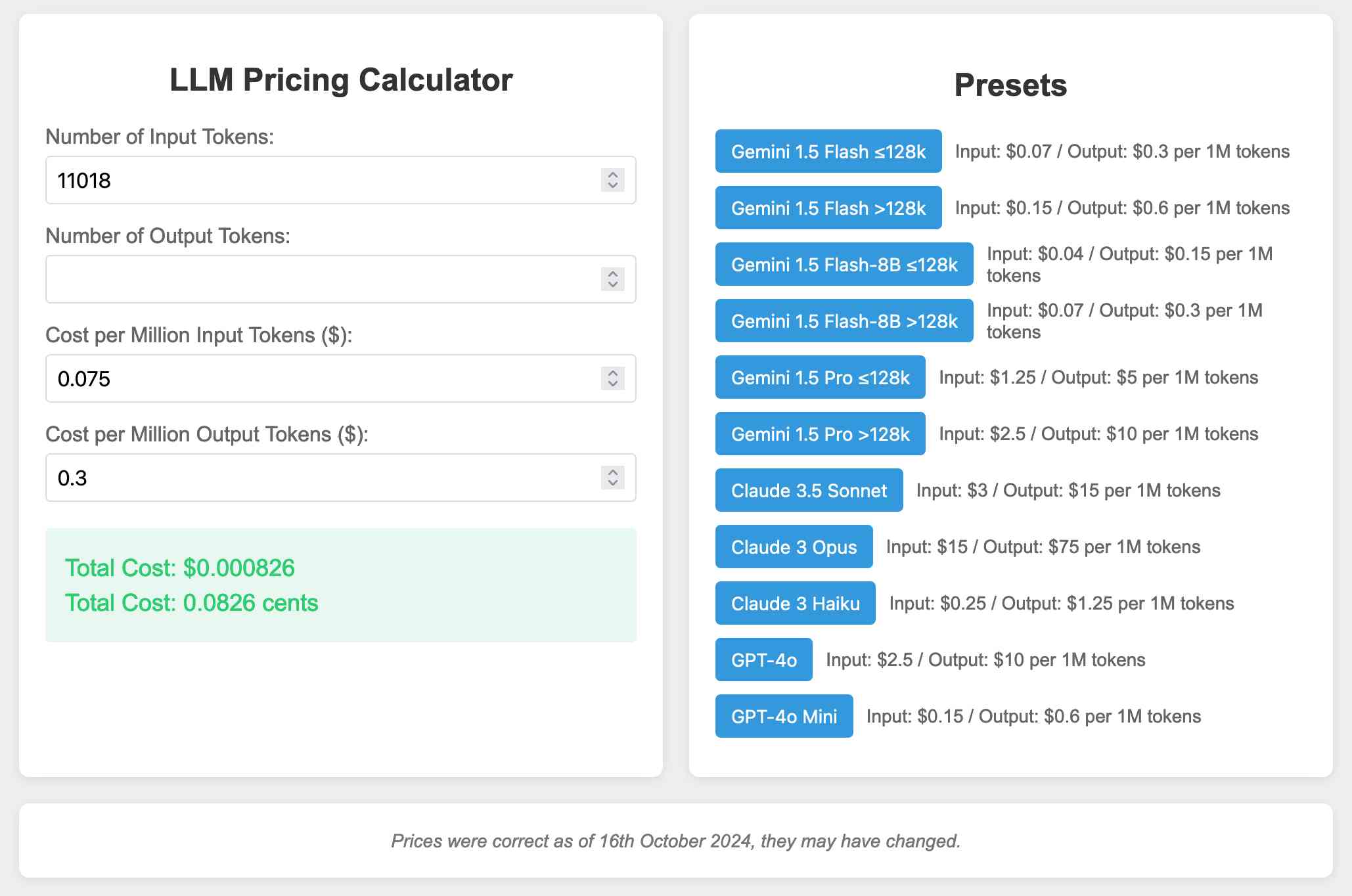

Bonus: An LLM pricing calculator

In writing up this experiment I got fed up of having to manually calculate token prices. I actually usually outsource that to ChatGPT Code Interpreter, but I’ve caught it messing up the conversion from dollars to cents once or twice so I always have to double-check its work.

So I got Claude 3.5 Sonnet with Claude Artifacts to build me this pricing calculator tool (source code here):

You can set the input/output token prices by hand, or click one of the preset buttons to pre-fill it with the prices for different existing models (as of 16th October 2024; I won’t promise that I’ll promptly update them in the future!)
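The core calculation behind a tool like this is small. Here is a rough Python sketch of it, not the code Claude produced: the `Pricing` class and `estimate_cost` function are hypothetical names, and the prices and token counts in the example are placeholders rather than any real model’s rates.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    input_per_million: float   # dollars per 1M input tokens
    output_per_million: float  # dollars per 1M output tokens


def estimate_cost(pricing, input_tokens, output_tokens):
    """Return (dollars, cents) for a single request."""
    dollars = (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )
    return dollars, dollars * 100


# Placeholder prices and token counts, not any real model's rates.
example = Pricing(input_per_million=1.00, output_per_million=4.00)
dollars, cents = estimate_cost(example, input_tokens=10_000, output_tokens=500)
print(f"${dollars:.6f} ({cents:.4f} cents)")
```

Returning both dollars and cents from one function keeps the unit conversion in a single place, which is the step that tends to go wrong when done by hand.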

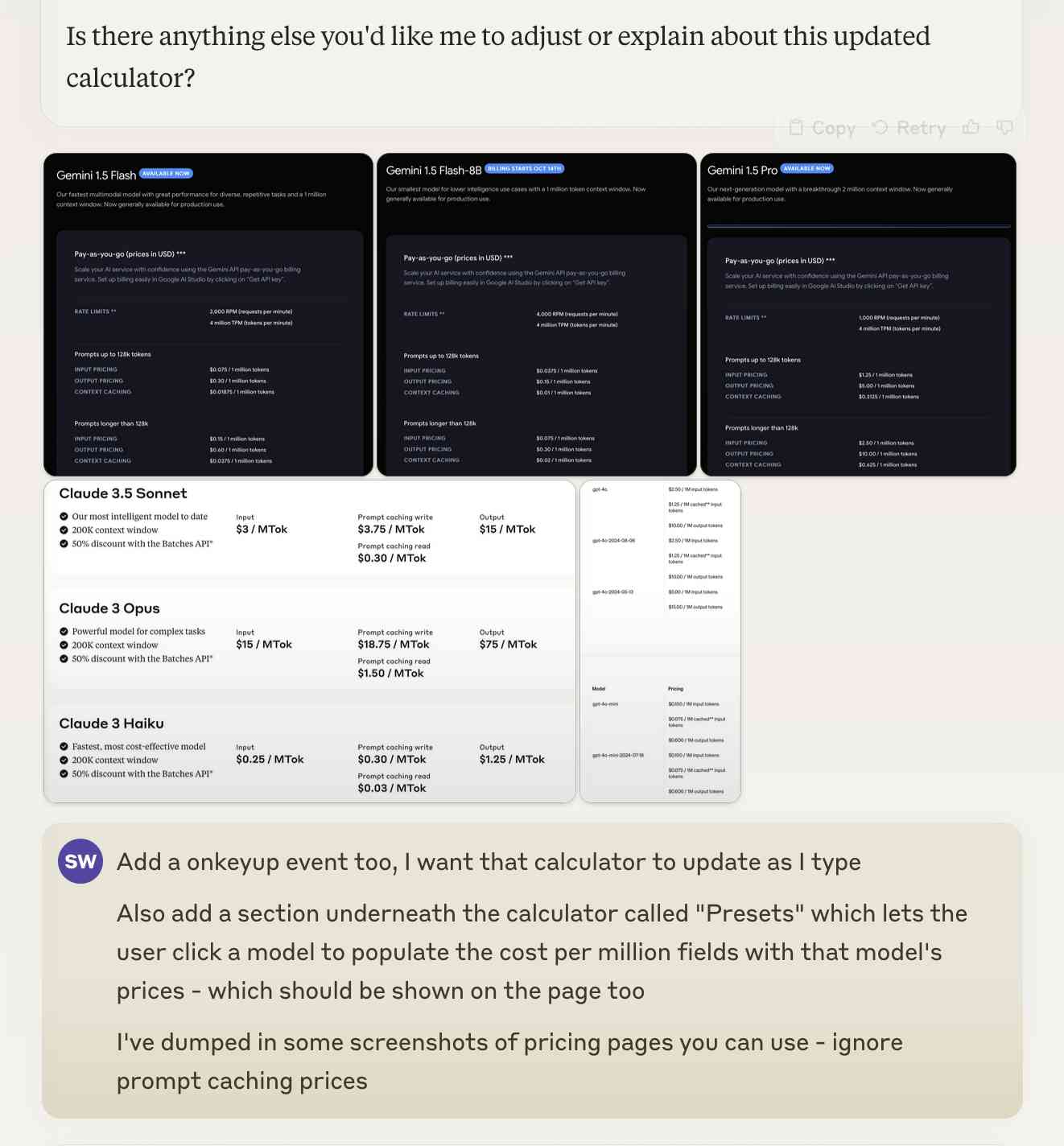

The entire thing was written by Claude. Here’s the full conversation transcript—we spent 19 minutes iterating on it through 10 different versions.

Rather than hunt down all of those prices myself, I took screenshots of the pricing pages for each of the model providers and dumped those directly into the Claude conversation.
