Wednesday, 2nd October 2024

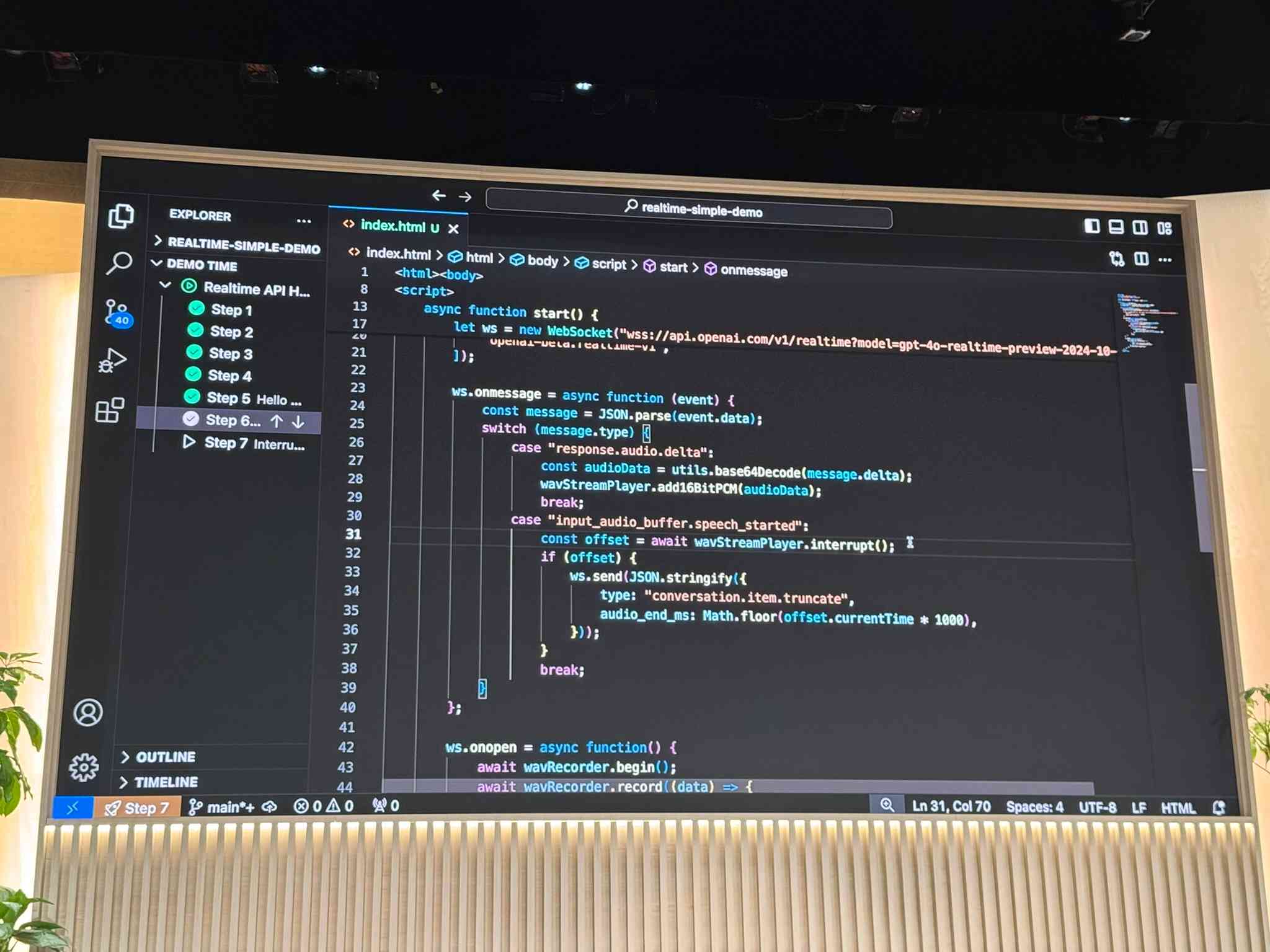

Building an automatically updating live blog in Django. Here's an extended write-up of how I implemented the live blog feature I used for my coverage of OpenAI DevDay yesterday. I built the first version using Claude while waiting for the keynote to start, then upgraded it during the lunch break with the help of GPT-4o to add sort options and incremental fetching of new updates.

Ethical Applications of AI to Public Sector Problems. Jacob Kaplan-Moss developed this model a few years ago (before the generative AI rush) while working with public-sector startups and is publishing it now. He starts by outright dismissing the snake-oil infested field of “predictive” models:

It’s not ethical to predict social outcomes — and it’s probably not possible. Nearly everyone claiming to be able to do this is lying: their algorithms do not, in fact, make predictions that are any better than guesswork. […] Organizations acting in the public good should avoid this area like the plague, and call bullshit on anyone making claims of an ability to predict social behavior.

Jacob then differentiates assistive AI and automated AI. Assistive AI helps human operators process and consume information, while leaving the human to take action on it. Automated AI acts upon that information without human oversight.

His conclusion: yes to assistive AI, and no to automated AI:

All too often, AI algorithms encode human bias. And in the public sector, failure carries real life or death consequences. In the private sector, companies can decide that a certain failure rate is OK and let the algorithm do its thing. But when citizens interact with their governments, they have an expectation of fairness, which, because AI judgement will always be available, it cannot offer.

On Mastodon I said to Jacob:

I’m heavily opposed to anything where decisions with consequences are outsourced to AI, which I think fits your model very well

(somewhat ironic that I wrote this message from the passenger seat of my first ever Waymo trip, and this weird car is making extremely consequential decisions dozens of times a second!)

Which sparked an interesting conversation about why life-or-death decisions made by self-driving cars feel different from decisions about social services. My take on that:

I think it’s about judgement: the decisions I care about are far more deep and non-deterministic than “should I drive forward or stop”.

Where there’s moral ambiguity, I want a human to own the decision both so there’s a chance for empathy, and also for someone to own the accountability for the choice.

That idea of ownership and accountability for decision making feels critical to me. A giant black box of matrix multiplication cannot take accountability for “decisions” that it makes.

OpenAI DevDay: Let’s build developer tools, not digital God

I had a fun time live blogging OpenAI DevDay yesterday—I’ve now shared notes about the live blogging system I threw other in a hurry on the day (with assistance from Claude and GPT-4o). Now that the smoke has settled a little, here are my impressions from the event.

[... 2,090 words]