Tuesday, 10th September 2024

Telling the AI to "make it better" after getting a result is just a folk method of getting an LLM to do Chain of Thought, which is why it works so well.

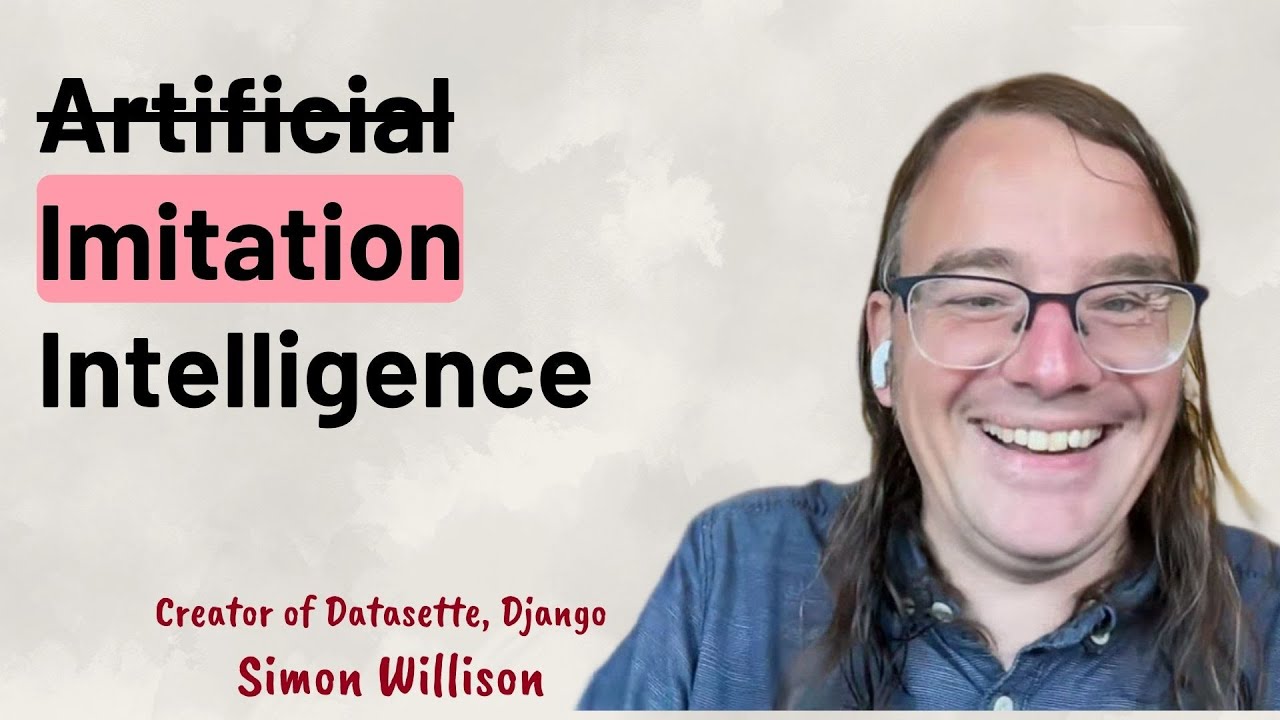

Notes from my appearance on the Software Misadventures Podcast

I was a guest on Ronak Nathani and Guang Yang’s Software Misadventures Podcast, which interviews seasoned software engineers about their careers so far and their misadventures along the way. Here’s the episode: LLMs are like your weird, over-confident intern | Simon Willison (Datasette).

[... 1,740 words]