12th July 2025 - Link Blog

Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity (via) METR - for Model Evaluation & Threat Research - are a non-profit research institute founded by Beth Barnes, a former alignment researcher at OpenAI (see Wikipedia). They've previously contributed to system cards for OpenAI and Anthropic, but this new research represents a slightly different direction for them:

We conduct a randomized controlled trial (RCT) to understand how early-2025 AI tools affect the productivity of experienced open-source developers working on their own repositories. Surprisingly, we find that when developers use AI tools, they take 19% longer than without—AI makes them slower.

The full paper (PDF) has a lot of details that are missing from the linked summary.

METR recruited 16 experienced open source developers for their study, with varying levels of exposure to LLM tools. They then assigned them tasks from their own open source projects, randomly assigning whether AI was allowed or not allowed for each of those tasks.

They found a surprising difference between developer estimates and actual completion times:

After completing the study, developers estimate that allowing AI reduced completion time by 20%. Surprisingly, we find that allowing AI actually increases completion time by 19%—AI tooling slowed developers down.

I shared my initial intuition about this paper on Hacker News the other day:

My personal theory is that getting a significant productivity boost from LLM assistance and AI tools has a much steeper learning curve than most people expect.

This study had 16 participants, with a mix of previous exposure to AI tools - 56% of them had never used Cursor before, and the study was mainly about Cursor.

They then had those 16 participants work on issues (about 15 each), where each issue was randomly assigned a "you can use AI" v.s. "you can't use AI" rule.

So each developer worked on a mix of AI-tasks and no-AI-tasks during the study.

A quarter of the participants saw increased performance, 3/4 saw reduced performance.

One of the top performers for AI was also someone with the most previous Cursor experience. The paper acknowledges that here:

However, we see positive speedup for the one developer who has more than 50 hours of Cursor experience, so it's plausible that there is a high skill ceiling for using Cursor, such that developers with significant experience see positive speedup.

My intuition here is that this study mainly demonstrated that the learning curve on AI-assisted development is high enough that asking developers to bake it into their existing workflows reduces their performance while they climb that learing curve.

I got an insightful reply there from Nate Rush, one of the authors of the study, which included these notes:

- Some prior studies that find speedup do so with developers that have similar (or less!) experience with the tools they use. In other words, the "steep learning curve" theory doesn't differentially explain our results vs. other results.

- Prior to the study, 90+% of developers had reasonable experience prompting LLMs. Before we found slowdown, this was the only concern that most external reviewers had about experience was about prompting -- as prompting was considered the primary skill. In general, the standard wisdom was/is Cursor is very easy to pick up if you're used to VSCode, which most developers used prior to the study.

- Imagine all these developers had a TON of AI experience. One thing this might do is make them worse programmers when not using AI (relatable, at least for me), which in turn would raise the speedup we find (but not because AI was better, but just because with AI is much worse). In other words, we're sorta in between a rock and a hard place here -- it's just plain hard to figure out what the right baseline should be!

- We shared information on developer prior experience with expert forecasters. Even with this information, forecasters were still dramatically over-optimistic about speedup.

- As you say, it's totally possible that there is a long-tail of skills to using these tools -- things you only pick up and realize after hundreds of hours of usage. Our study doesn't really speak to this. I'd be excited for future literature to explore this more.

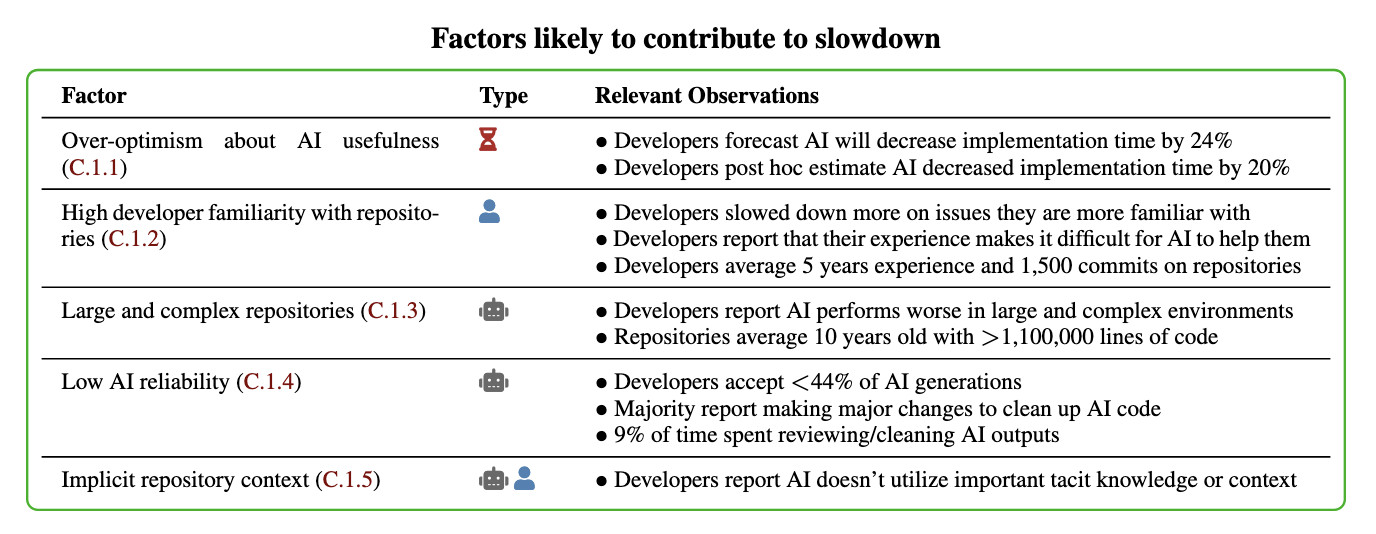

In general, these results being surprising makes it easy to read the paper, find one factor that resonates, and conclude "ah, this one factor probably just explains slowdown." My guess: there is no one factor -- there's a bunch of factors that contribute to this result -- at least 5 seem likely, and at least 9 we can't rule out (see the factors table on page 11).

Here's their table of the most likely factors:

I think Nate's right that jumping straight to a conclusion about a single factor is a shallow and unproductive way to think about this report.

That said, I can't resist the temptation to do exactly that! The factor that stands out most to me is that these developers were all working in repositories they have a deep understanding of already, presumably on non-trivial issues since any trivial issues are likely to have been resolved in the past.

I think this is a really interesting paper. Measuring developer productivity is notoriously difficult. I hope this paper inspires more work with a similar level of detail to analyzing how professional programmers spend their time:

To compare how developers spend their time with and without AI assistance, we manually label a subset of 128 screen recordings with fine-grained activity labels, totaling 143 hours of video.

Recent articles

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026