10 posts tagged “francois-chollet”

2025

To really understand a concept, you have to "invent" it yourself in some capacity. Understanding doesn't come from passive content consumption. It is always self-built. It is an active, high-agency, self-directed process of creating and debugging your own mental models.

I don't think people really appreciate how simple ARC-AGI-1 was, and what solving it really means.

It was designed as the simplest, most basic assessment of fluid intelligence possible. Failure to pass signifies a near-total inability to adapt or problem-solve in unfamiliar situations.

Passing it means your system exhibits non-zero fluid intelligence -- you're finally looking at something that isn't pure memorized skill. But it says rather little about how intelligent your system is, or how close to human intelligence it is.

2024

What's holding back research isn't a lack of verbose, low-signal, high-noise papers. Using LLMs to automatically generate 100x more of those will not accelerate science, it will slow it down.

— François Chollet, 12th May 2024

OpenAI o3 breakthrough high score on ARC-AGI-PUB. François Chollet is the co-founder of the ARC Prize and had advance access to today's o3 results. His article here is the most insightful coverage I've seen of o3, going beyond just the benchmark results to talk about what this all means for the field in general.

One fascinating detail: it cost $6,677 to run o3 in "high efficiency" mode against the 400 public ARC-AGI puzzles for a score of 82.8%, and an undisclosed amount of money to run the "low efficiency" mode model to score 91.5%. A note says:

o3 high-compute costs not available as pricing and feature availability is still TBD. The amount of compute was roughly 172x the low-compute configuration.

So we can get a ballpark estimate here in that 172 * $6,677 = $1,148,444!
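That ballpark figure can be reproduced with a few lines of Python. This is only a sketch: it assumes the "high efficiency" run is the low-compute configuration the note refers to, and that cost scales linearly with compute, neither of which is confirmed by the published numbers. The variable and function names are my own.

```python
# Figures quoted in the post: cost of the lower-compute run, the compute
# multiplier from the note, and the number of public ARC-AGI puzzles.
LOW_COMPUTE_COST_USD = 6_677
COMPUTE_MULTIPLIER = 172
PUBLIC_TASKS = 400


def estimate_high_compute_cost(low_cost, multiplier):
    """Assumption: cost grows linearly with compute (not confirmed)."""
    return low_cost * multiplier


estimated_total = estimate_high_compute_cost(LOW_COMPUTE_COST_USD, COMPUTE_MULTIPLIER)
per_task_low = LOW_COMPUTE_COST_USD / PUBLIC_TASKS
per_task_high = estimated_total / PUBLIC_TASKS

print(f"Estimated high-compute total: ${estimated_total:,}")
print(f"Per task (low compute): ${per_task_low:,.2f}")
print(f"Per task (high compute, estimated): ${per_task_high:,.2f}")
```

Dividing by the 400 puzzles gives a per-task view of the same estimate, which is the scale the "thousands of dollars" per task remark refers to.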

Here's how François explains the likely mechanisms behind o3, which reminds me of how a brute-force chess computer might work.

For now, we can only speculate about the exact specifics of how o3 works. But o3's core mechanism appears to be natural language program search and execution within token space – at test time, the model searches over the space of possible Chains of Thought (CoTs) describing the steps required to solve the task, in a fashion perhaps not too dissimilar to AlphaZero-style Monte-Carlo tree search. In the case of o3, the search is presumably guided by some kind of evaluator model. To note, Demis Hassabis hinted back in a June 2023 interview that DeepMind had been researching this very idea – this line of work has been a long time coming.

So while single-generation LLMs struggle with novelty, o3 overcomes this by generating and executing its own programs, where the program itself (the CoT) becomes the artifact of knowledge recombination. Although this is not the only viable approach to test-time knowledge recombination (you could also do test-time training, or search in latent space), it represents the current state-of-the-art as per these new ARC-AGI numbers.

Effectively, o3 represents a form of deep learning-guided program search. The model does test-time search over a space of "programs" (in this case, natural language programs – the space of CoTs that describe the steps to solve the task at hand), guided by a deep learning prior (the base LLM). The reason why solving a single ARC-AGI task can end up taking up tens of millions of tokens and cost thousands of dollars is because this search process has to explore an enormous number of paths through program space – including backtracking.
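To make the idea of evaluator-guided search over a space of programs a little more concrete, here is a toy sketch. It is not how o3 works (nobody outside OpenAI knows the specifics): the "programs" are short sequences of made-up arithmetic steps, and the evaluator is a hand-written distance function standing in for a learned model. All names here are hypothetical.

```python
import heapq
from itertools import count

# Hypothetical primitive steps; a "program" is a tuple of step names.
STEPS = {
    "add1": lambda x: x + 1,
    "double": lambda x: x * 2,
    "sub3": lambda x: x - 3,
}


def run(program, value):
    """Execute a program (sequence of step names) on a starting value."""
    for name in program:
        value = STEPS[name](value)
    return value


def evaluator(value, target):
    """Stand-in for a learned evaluator: lower score means more promising."""
    return abs(target - value)


def guided_search(start, target, max_expansions=10_000):
    """Best-first search: always expand the candidate the evaluator likes most."""
    tie = count()  # tie-breaker so the heap never compares programs directly
    frontier = [(evaluator(start, target), next(tie), start, ())]
    seen = {start}
    expansions = 0
    while frontier and expansions < max_expansions:
        _, _, value, program = heapq.heappop(frontier)
        if value == target:
            return program, expansions
        expansions += 1
        for name, step in STEPS.items():
            nxt = step(value)
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(
                    frontier,
                    (evaluator(nxt, target), next(tie), nxt, program + (name,)),
                )
    return None, expansions


program, expansions = guided_search(start=3, target=50)
print(program, expansions)
```

The frontier keeps every unexplored candidate, so when a promising path dead-ends the search falls back to an earlier one. That is the backtracking the quote mentions, and it is why the number of explored paths (and the cost) can balloon on harder tasks.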

I'm not sure if o3 (and o1 and similar models) even qualifies as an LLM any more - there's clearly a whole lot more going on here than just next-token prediction.

On the question of whether o3 should qualify as AGI (whatever that might mean):

Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don't think o3 is AGI yet. o3 still fails on some very easy tasks, indicating fundamental differences with human intelligence.

Furthermore, early data points suggest that the upcoming ARC-AGI-2 benchmark will still pose a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a smart human would still be able to score over 95% with no training).

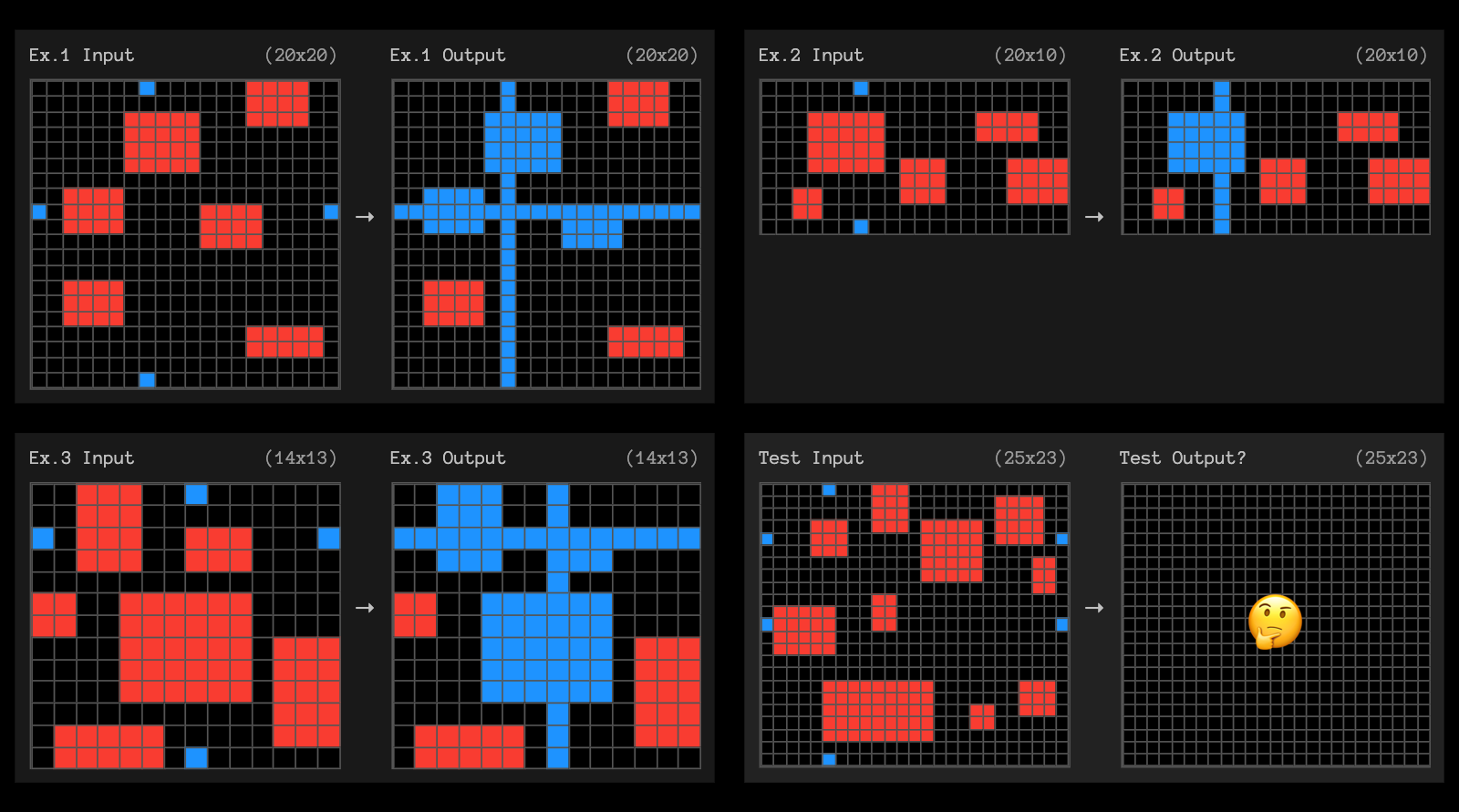

The post finishes with examples of the puzzles that o3 didn't manage to solve, including this one which reassured me that I can still solve at least some puzzles that couldn't be handled with thousands of dollars of GPU compute!

OpenAI's new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

This is a surprising and important step-function increase in AI capabilities, showing novel task adaptation ability never seen before in the GPT-family models. For context, ARC-AGI-1 took 4 years to go from 0% with GPT-3 in 2020 to 5% in 2024 with GPT-4o. All intuition about AI capabilities will need to get updated for o3.

— François Chollet, Co-founder, ARC Prize

A common misconception about Transformers is to believe that they're a sequence-processing architecture. They're not.

They're a set-processing architecture. Transformers are 100% order-agnostic (which was the big innovation compared to RNNs, back in late 2016 -- you compute the full matrix of pairwise token interactions instead of processing one token at a time).

The way you add order awareness in a Transformer is at the feature level. You literally add to your token embeddings a position embedding / encoding that corresponds to its place in a sequence. The architecture itself just treats the input tokens as a set.
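The order-agnostic claim can be checked with a small pure-Python sketch of single-head self-attention. This is an illustration under simplifying assumptions (one head, no masking, random weights, and an arbitrary sine-based position encoding I made up for the demo), not a real Transformer implementation.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention over all token pairs."""
    q, k, v = matmul(tokens, wq), matmul(tokens, wk), matmul(tokens, wv)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    return matmul([softmax(r) for r in scores], v)


def close(a, b, tol=1e-9):
    return all(abs(x - y) < tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def rand_matrix(rows, cols):
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
dim, n = 4, 5
tokens = rand_matrix(n, dim)
wq, wk, wv = rand_matrix(dim, dim), rand_matrix(dim, dim), rand_matrix(dim, dim)

perm = [3, 0, 4, 1, 2]
shuffled = [tokens[i] for i in perm]

# Without positions: shuffling the input rows only shuffles the output rows.
out = self_attention(tokens, wq, wk, wv)
out_shuffled = self_attention(shuffled, wq, wk, wv)
order_agnostic = close([out[i] for i in perm], out_shuffled)

# Illustrative additive position encoding, tied to the slot, not the token.
positions = [[math.sin((p + 1) * (d + 1)) for d in range(dim)] for p in range(n)]


def add_positions(toks):
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(toks, positions)]


out_pos = self_attention(add_positions(tokens), wq, wk, wv)
out_pos_shuffled = self_attention(add_positions(shuffled), wq, wk, wv)
order_aware = not close([out_pos[i] for i in perm], out_pos_shuffled)

print(order_agnostic, order_aware)
```

The first check shows the attention layer treating its input as a set: reordering tokens just reorders the outputs. The second shows that order sensitivity only appears once position information is added to the features themselves.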

Reality is that LLMs are not AGI -- they're a big curve fit to a very large dataset. They work via memorization and interpolation. But that interpolative curve can be tremendously useful, if you want to automate a known task that's a match for its training data distribution.

Memorization works, as long as you don't need to adapt to novelty. You don't need intelligence to achieve usefulness across a set of known, fixed scenarios.

2023

Two things in AI may need regulation: reckless deployment of certain potentially harmful AI applications (same as any software really), and monopolistic behavior on the part of certain LLM providers. The technology itself doesn't need regulation anymore than databases or transistors. [...] Putting size/compute caps on deep learning models is akin to putting size caps on databases or transistor count caps on electronics. It's pointless and it won't age well.

If a LLM is like a database of millions of vector programs, then a prompt is like a search query in that database [...] this “program database” is continuous and interpolative — it’s not a discrete set of programs. This means that a slightly different prompt, like “Lyrically rephrase this text in the style of x” would still have pointed to a very similar location in program space, resulting in a program that would behave pretty closely but not quite identically. [...] Prompt engineering is the process of searching through program space to find the program that empirically seems to perform best on your target task.

2018

Without deep understanding of the basic tools needed to build and train new algorithms, he says, researchers creating AIs resort to hearsay, like medieval alchemists. "People gravitate around cargo-cult practices," relying on "folklore and magic spells," adds François Chollet, a computer scientist at Google in Mountain View, California.