19 posts tagged “moderation”

2025

I strongly suspect that Market Research Future, or a subcontractor, is conducting an automated spam campaign which uses a Large Language Model to evaluate a Mastodon instance, submit a plausible application for an account, and to post slop which links to Market Research Future reports. [...]

I don’t know how to run a community forum in this future. I do not have the time or emotional energy to screen out regular attacks by Large Language Models, with the knowledge that making the wrong decision costs a real human being their connection to a niche community.

— Aphyr, The Future of Forums is Lies, I Guess

I’ve disabled the pending geoblock of the UK because I now think the risks of the Online Safety Act to this site are low enough to change strategies to only geoblock if directly threatened by the regulator. [...]

It is not possible for a hobby site to comply with the Online Safety Act. The OSA is written to censor huge commercial sites with professional legal teams, and even understanding one's obligations under the regulations is an enormous project requiring expensive legal advice.

The law is 250 pages and the mandatory "guidance" from Ofcom is more than 3,000 pages of dense, cross-referenced UK-flavoured legalese. To find all the guidance you'll have to start here, click through to each of the 36 pages listed, and expand each page's collapsible sections that might have links to other pages and documents. (Though I can't be sure that leads to all their guidance, and note you'll have to check back regularly for planned updates.)

— Peter Bhat Harkins, site administrator, lobste.rs

2024

Private School Labeler on Bluesky. I am utterly delighted by this subversive use of Bluesky's labels feature, which allows you to subscribe to a custom application that then adds visible labels to profiles.

The feature was designed for moderation, but this labeler subverts it by displaying labels on accounts belonging to British public figures showing which expensive private school they went to and what the current fees are for that school.

Here's what it looks like on an account - tapping the label brings up the information about the fees:

These labels are only visible to users who have deliberately subscribed to the labeler. Unsurprisingly, some of those labeled aren't too happy about it!

In response to a comment about attending on a scholarship, the label creator said:

I'm explicit with the labeller that scholarship pupils, grant pupils, etc, are still included - because it's the later effects that are useful context - students from these schools get a leg up and a degree of privilege, which contributes eg to the overrepresentation in British media/politics

On the one hand, there are clearly opportunities for abuse here. But given the opt-in nature of the labelers, this doesn't feel hugely different to someone creating a separate webpage full of information about Bluesky profiles.

I'm intrigued by the possibilities of labelers. There's a list of others on bluesky-labelers.io, including another brilliant hack: Bookmarks, which lets you "report" a post to the labeler and then displays those reported posts in a custom feed - providing a private bookmarks feature that Bluesky itself currently lacks.

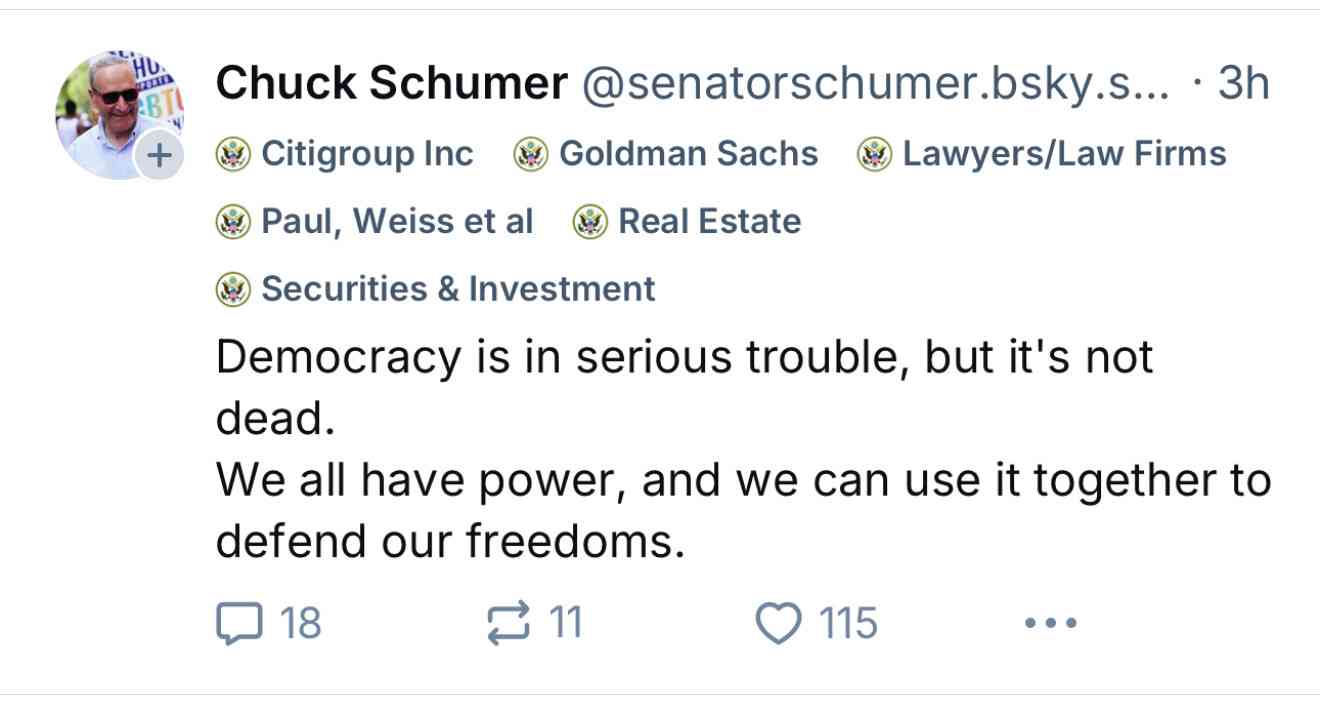

Update: @us-gov-funding.bsky.social is the inevitable labeler for US politicians showing which companies and industries are their top donors, built by Andrew Lisowski (source code here) using data sourced from OpenSecrets. Here's what it looks like on this post:

We had to exclude [dead] and eventually even just [flagged] posts from the public API because many third-party clients and sites were displaying them as if they were regular posts. […]

IMO this issue is existential for HN. We've spent years and so much energy trying to find a balance between openness and human decency, a task which oscillates between barely-possible and simply-doomed, so the idea that anybody anywhere sees anything labeled "Hacker News" that pours all the toxic waste back into the ecosystem is physically painful to me.

— dang
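What dang describes comes down to filtering moderated items out before they reach the public API, so third-party clients never receive them. Here is a minimal sketch in Python. It assumes a hypothetical `Item` record with `dead` and `flagged` booleans. Those field names are illustrative and are not taken from HN's actual code or API.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Item:
    # Hypothetical item record; field names are illustrative only.
    id: int
    text: str
    dead: bool = False
    flagged: bool = False


def public_items(items: Iterable[Item]) -> List[Item]:
    """Return only the items that are safe to expose through a public API.

    [dead] and [flagged] posts are dropped entirely rather than marked,
    so a client that ignores the markers cannot show them as regular posts.
    """
    return [item for item in items if not (item.dead or item.flagged)]


feed = [Item(1, "regular"), Item(2, "killed", dead=True), Item(3, "flagged", flagged=True)]
print([item.id for item in public_items(feed)])
```

Dropping items at the API layer avoids depending on every downstream client to honour a `dead` or `flagged` flag. That dependency is exactly what failed in the quote above.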

Spam, and its cousins like content marketing, could kill HN if it became orders of magnitude greater—but from my perspective, it isn't the hardest problem on HN. [...]

By far the harder problem, from my perspective, is low-quality comments, and I don't mean by bad actors—the community is pretty good about flagging and reporting those; I mean lame and/or mean comments by otherwise good users who don't intend to and don't realize they're doing that.

— dang

2023

I remember that they [Ev and Biz at Twitter in 2008] very firmly believed spam was a concern, but, “we don’t think it's ever going to be a real problem because you can choose who you follow.” And this was one of my first moments thinking, “Oh, you sweet summer child.” Because once you have a big enough user base, once you have enough people on a platform, once the likelihood of profit becomes high enough, you’re going to have spammers.

2022

The essential truth of every social network is that the product is content moderation, and everyone hates the people who decide how content moderation works. Content moderation is what Twitter makes — it is the thing that defines the user experience.

Welcome to hell, Elon (via) If you only read one thing about the Elon acquisition of Twitter, make it this, by Nilay Patel. Outstanding insights into what it actually takes to run a commercial social media service.

2020

“I Have Blood on My Hands”: A Whistleblower Says Facebook Ignored Global Political Manipulation (via) Sophie Zhang worked as the data scientist for the Facebook Site Integrity fake engagement team. She gave up her severance package in order to speak out internally about what she saw there, and someone leaked her memo to BuzzFeed News. It’s a hell of a story: she saw bots and coordinated manual accounts used to influence politics in countries all around the world, and found herself constantly making moderation decisions that had lasting political impact. “With no oversight whatsoever, I was left in a situation where I was trusted with immense influence in my spare time.” This sounds like a nightmare—imagine taking on responsibility for protecting democracy in so many different places.

I’ve found, in my 20 years of running the site, that whenever you ban an ironic Nazi, suddenly they become actual Nazis

2019

Lots of people calling for more aggressive moderation seem to imagine that if they yell enough the companies have a thoughtful, unbiased and nuance-understanding HAL 9000 they can deploy. It’s really more like the Censorship DMV.

In January, Facebook distributes a policy update stating that moderators should take into account recent romantic upheaval when evaluating posts that express hatred toward a gender. “I hate all men” has always violated the policy. But “I just broke up with my boyfriend, and I hate all men” no longer does.

2007

How to Moderate a Panel. By Derek Powazek. I tried to follow this advice a couple of days ago.

Social whitelisting with OpenID

A key feature of OpenID is that it provides a globally unique identifier for every user, no matter what site or service they are using on the Web.

[... 502 words]

2005

Making Light: Virtual panel participation. Excellent thinking on the subject of online community moderation.

2004

Flagging issues on craigslist (via) Moderation in a high traffic environment.

2003

Managing Social Software

Moderation is a topic that goes hand in hand with online communities, but despite being a highly complex matter it is rarely given the coverage it deserves. That’s all set to change now thanks to Tom Coates’ excellent new blog, Everything in Moderation. The site’s topic is “creative ways to manage online communities and user-generated content”, and the content posted so far easily lives up to that claim. Of particular interest are the introductory post, the definitions of the four principal types of moderation and a fascinating entry about using stealth moderation tactics to deter abusive posters. Definitely one for the blogroll (at least once it starts pinging blo.gs).

Opening times for online forums?

Here’s something I’ve never seen before. The BBC’s Neighbours messageboard currently has a note up saying “This messageboard is currently closed”, with a link to the opening times: 9am until 10pm weekdays, opening 10am at weekends. You can still read the forums but you can’t post anything. This is obviously a moderation tactic to ensure there is always an administrator available to delete offensive material should any be posted—I’m writing about it here because I’ve never seen this approach used before.
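The BBC's opening-times rule is simple enough to express as a check that runs before accepting a new post. Here is a minimal sketch in Python. The 9am to 10pm weekday hours and the 10am weekend opening come from the note quoted above. The note does not state a weekend closing time, so this sketch assumes 10pm. The function name `posting_open` is hypothetical.

```python
from datetime import datetime, time

# Weekday hours from the BBC note: 9am until 10pm.
WEEKDAY_HOURS = (time(9, 0), time(22, 0))
# Weekends open at 10am; the 10pm close is an assumption, not from the note.
WEEKEND_HOURS = (time(10, 0), time(22, 0))


def posting_open(now: datetime) -> bool:
    """Return True if new posts are accepted at the given local time.

    Reading the board is always allowed; only posting is gated.
    """
    # datetime.weekday(): Monday is 0, Saturday is 5, Sunday is 6.
    opens, closes = WEEKEND_HOURS if now.weekday() >= 5 else WEEKDAY_HOURS
    return opens <= now.time() < closes
```

Gating only the write path matches the behaviour described: visitors can still read the forums outside opening hours, but they cannot post anything while no moderator is on duty.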

[... 158 words]

Battling comment spam

It’s a sad state of affairs when you come back to your blog after a week elsewhere and have to add another 56 domains to your blacklist. I’m actually getting more comment spam than legitimate comments now—this is becoming more than just a minor nuisance. I’m considering a number of improvements, including adding a moderation queue to comments on entries posted more than a month ago, disabling the comment form if the referral is a search engine (as per Russell Beattie’s suggestion) and adding some kind of wildcard support to the blacklist file.
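The three improvements listed above can be combined into a single comment-handling check. Here is a minimal sketch in Python, not the blog's actual code. The blacklist patterns, the search-engine host markers, and every function name are hypothetical. "More than a month" is approximated as 30 days. Wildcard support uses the standard library's `fnmatch.fnmatchcase`.

```python
from datetime import datetime, timedelta
from fnmatch import fnmatchcase
from typing import Iterable, Optional
from urllib.parse import urlparse

# Hypothetical blacklist entries; "*" matches any run of characters.
BLACKLIST = ["*.casino-example.com", "cheap-pills-*.example"]

# Hypothetical, deliberately crude markers for search-engine referrers.
SEARCH_ENGINE_MARKERS = ("google.", "bing.", "yahoo.")

# "More than a month" approximated as 30 days.
MODERATION_AGE = timedelta(days=30)


def domain_blacklisted(url: str) -> bool:
    """True if the URL's host matches any wildcard pattern in the blacklist."""
    host = urlparse(url).hostname or ""  # hostname is already lowercased
    return any(fnmatchcase(host, pattern) for pattern in BLACKLIST)


def show_comment_form(referrer: Optional[str]) -> bool:
    """Hide the comment form for visitors who arrived from a search engine."""
    if not referrer:
        return True
    host = urlparse(referrer).hostname or ""
    return not any(marker in host for marker in SEARCH_ENGINE_MARKERS)


def classify_comment(urls: Iterable[str], entry_posted: datetime, now: datetime) -> str:
    """Return "reject", "queue" or "publish" for a submitted comment."""
    if any(domain_blacklisted(url) for url in urls):
        return "reject"
    if now - entry_posted > MODERATION_AGE:
        # Comments on older entries wait in a moderation queue.
        return "queue"
    return "publish"
```

Checking the blacklist first means known spam is rejected outright instead of adding to the moderation queue. Wildcard patterns also let one entry cover many throwaway subdomains, which is the kind of growth that adding 56 domains after a week describes.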

[... 114 words]