5 posts tagged “notebooklm”

Google's NotebookLM "personalized research assistant".

2024

When we started working on what became NotebookLM in the summer of 2022, we could fit about 1,500 words in the context window. Now we can fit up to 1.5 million words. (And using various other tricks, effectively fit 25 million words.) The emergence of long context models is, I believe, the single most unappreciated AI development of the past two years, at least among the general public. It radically transforms the utility of these models in terms of actual, practical applications.

New in NotebookLM: Customizing your Audio Overviews. The most requested feature for Google's NotebookLM "audio overviews" (aka automatically generated podcast conversations) has been the ability to provide direction to those artificial podcast hosts - setting their expertise level or asking them to focus on specific topics.

Today's update adds exactly that:

Now you can provide instructions before you generate a "Deep Dive" Audio Overview. For example, you can focus on specific topics or adjust the expertise level to suit your audience. Think of it like slipping the AI hosts a quick note right before they go on the air, which will change how they cover your material.

I pasted in a link to my post about video scraping and prompted it like this:

You are both pelicans who work as data journalist at a pelican news service. Discuss this from the perspective of pelican data journalists, being sure to inject as many pelican related anecdotes as possible

Here's the resulting 7m40s MP3, and the transcript.

It starts off strong!

You ever find yourself wading through mountains of data trying to pluck out the juicy bits? It's like hunting for a single shrimp in a whole kelp forest, am I right?

Then later:

Think of those facial recognition systems they have for humans. We could have something similar for our finned friends. Although, gotta say, the ethical implications of that kind of tech are a whole other kettle of fish. We pelicans gotta use these tools responsibly and be transparent about it.

And when brainstorming some potential use-cases:

Imagine a pelican citizen journalist being able to analyze footage of a local council meeting, you know, really hold those pelicans in power accountable, or a pelican historian using video scraping to analyze old film reels, uncovering lost details about our pelican ancestors.

Plus this delightful conclusion:

The future of data journalism is looking brighter than a school of silversides reflecting the morning sun. Until next time, keep those wings spread, those eyes sharp, and those minds open. There's a whole ocean of data out there just waiting to be explored.

And yes, people on Reddit have got them to swear.

I listened to the whole 15-minute podcast this morning. It was, indeed, surprisingly effective. It remains somewhere in the uncanny valley, but not at all in a creepy way. Just more in a “this is a bit vapid and phony” way. [...] But ultimately the conversation has all the flavor of a bowl of unseasoned white rice.

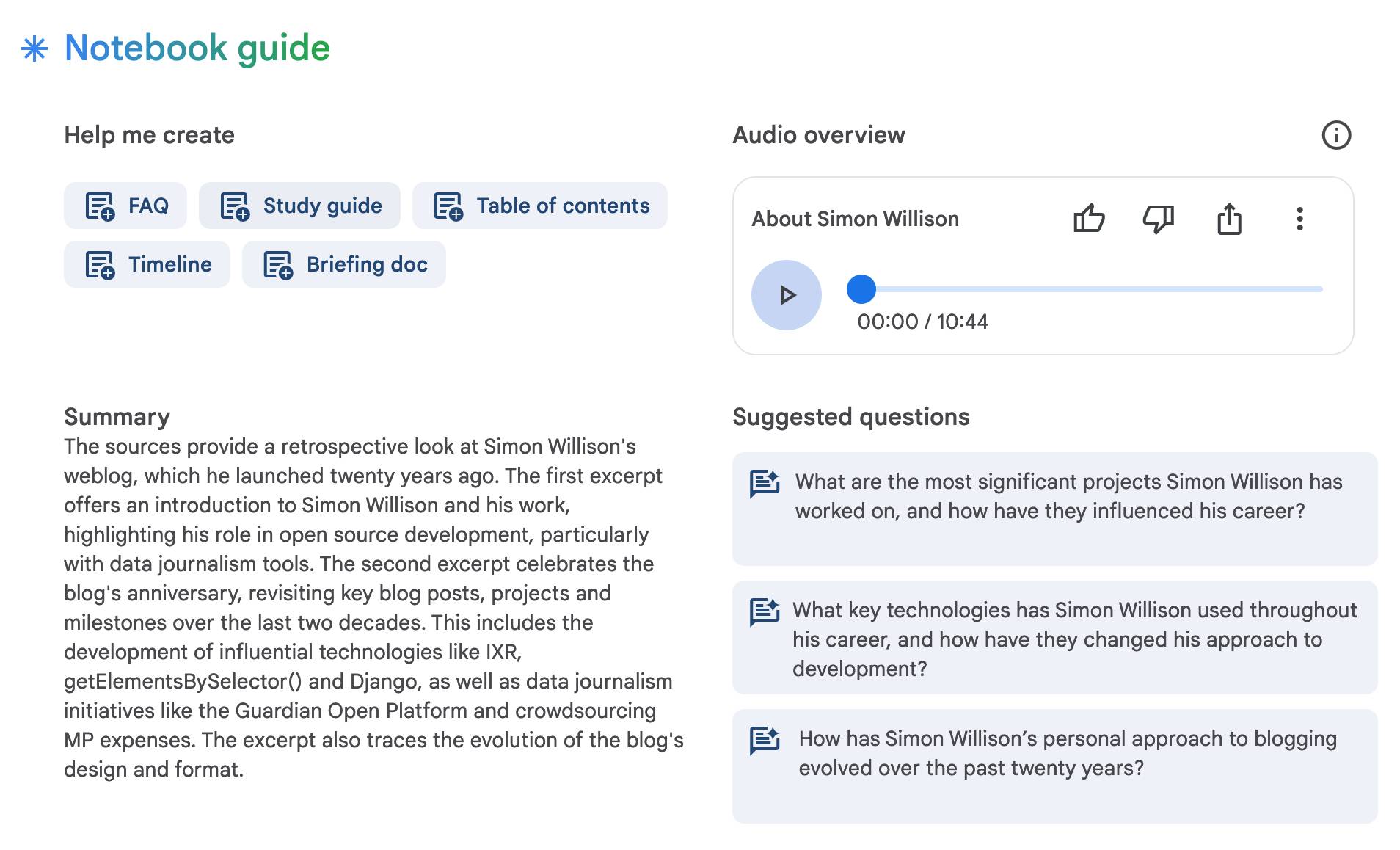

NotebookLM’s automatically generated podcasts are surprisingly effective

Audio Overview is a fun new feature of Google’s NotebookLM which is getting a lot of attention right now. It generates a one-off custom podcast against content you provide, where two AI hosts start up a “deep dive” discussion about the collected content. These last around ten minutes and are very podcast, with an astonishingly convincing audio back-and-forth conversation.

[... 1,489 words]Google NotebookLM Data Exfiltration (via) NotebookLM is a Google Labs product that lets you store information as sources (mainly text files in PDF) and then ask questions against those sources—effectively an interface for building your own custom RAG (Retrieval Augmented Generation) chatbots.

Unsurprisingly for anything that allows LLMs to interact with untrusted documents, it’s susceptible to prompt injection.

Johann Rehberger found some classic prompt injection exfiltration attacks: you can create source documents with instructions that cause the chatbot to load a Markdown image that leaks other private data to an external domain as data passed in the query string.

Johann reported this privately in the December but the problem has not yet been addressed. UPDATE: The NotebookLM team deployed a fix for this on 18th April.

A good rule of thumb is that any time you let LLMs see untrusted tokens there is a risk of an attack like this, so you should be very careful to avoid exfiltration vectors like Markdown images or even outbound links.