8 posts tagged “trust”

2024

Thoughts on the WWDC 2024 keynote on Apple Intelligence

Today’s WWDC keynote finally revealed Apple’s new set of AI features. The AI section (Apple are calling it Apple Intelligence) started over an hour into the keynote—this link jumps straight to that point in the archived YouTube livestream, or you can watch it embedded here:

[... 855 words]

Update on the Recall preview feature for Copilot+ PCs (via) This feels like a very good call to me: in response to widespread criticism Microsoft are making Recall an opt-in feature (during system onboarding), adding encryption to the database and search index beyond just disk encryption and requiring Windows Hello face scanning to access the search feature.

In fact, Microsoft goes so far as to promise that it cannot see the data collected by Windows Recall, that it can't train any of its AI models on your data, and that it definitely can't sell that data to advertisers. All of this is true, but that doesn't mean people believe Microsoft when it says these things. In fact, many have jumped to the conclusion that even if it's true today, it won't be true in the future.

2023

The AI trust crisis

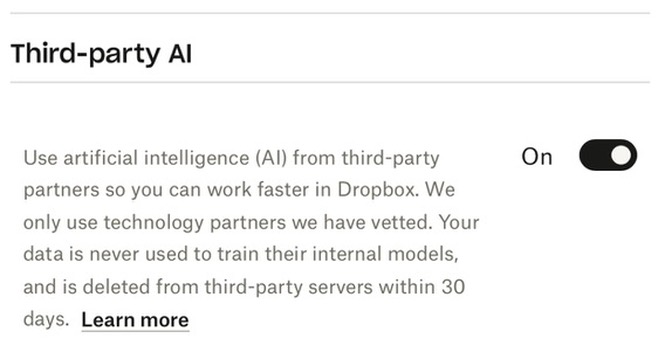

Dropbox added some new AI features. In the past couple of days these have attracted a firestorm of criticism. Benj Edwards rounds it up in “Dropbox spooks users with new AI features that send data to OpenAI when used”.

[... 1,733 words]

AI and Trust. Barnstormer of an essay by Bruce Schneier about AI and trust. It’s worth spending some time with this; it’s hard to extract the highlights since there are so many of them.

A key idea is that we are predisposed to trust AI chat interfaces because they imitate humans, which means we are highly susceptible to profit-seeking biases baked into them.

Bruce suggests that what’s needed is public models, backed by government funds: “A public model is a model built by the public for the public. It requires political accountability, not just market accountability.”

Can We Trust Search Engines with Generative AI? A Closer Look at Bing’s Accuracy for News Queries (via) Computational journalism professor Nick Diakopoulos takes a deeper dive into the quality of the summarizations provided by AI-assisted Bing. His findings are troubling: for news queries, which are a great test for AI summarization since they include recent information that may have sparse or conflicting stories, Bing confidently produces answers with important errors, for example claiming the Ohio train derailment happened on February 9th when it actually happened on February 3rd.

2007

Wikipedia trust colouring (with demo) (via) “The text background of Wikipedia articles is colored according to a value of trust, computed from the reputation of the authors who contributed the text, as well as those who edited the text.”

An OpenID is not an account!

I’m excited to see that OpenID has finally started to gain serious traction outside of the Identity community. Understandably, misconceptions about OpenID continue to crop up. The one I want to address in this entry is the idea that an OpenID can be used as a replacement for a regular user account.

[... 601 words]