Datasette 0.59: The annotated release notes

19th October 2021

Datasette 0.59 is out, with a miscellaneous grab-bag of improvements. Here are the annotated release notes.

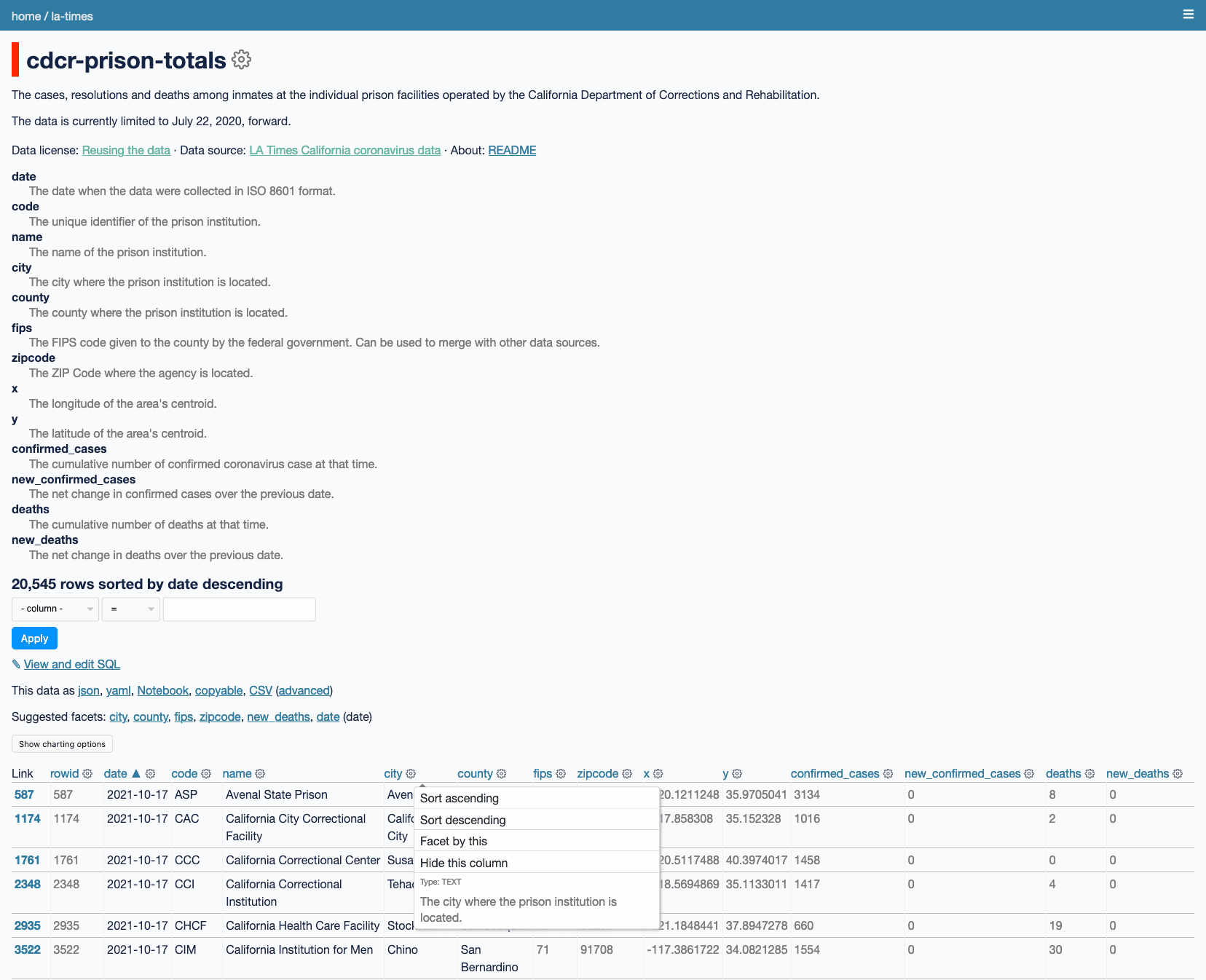

Column metadata

Columns can now have associated metadata descriptions in

metadata.json, see Column descriptions. (#942)

I’ve been wanting this for ages. Tables consist of columns, and column names very rarely give you enough information to truly understand the associated data. You can now drop extra column definitions into your metadata.json like so:

{

"databases": {

"la-times": {

"tables": {

"cdph-county-cases-deaths": {

"columns": {

"county": "The name of the county where the agency is based.",

"fips": "The FIPS code given to the county by the federal government. Can be used to merge with other data sources.",

"date": "The date when the data were retrieved in ISO 8601 format.",

"confirmed_cases": "The cumulative number of coronavirus cases that were confirmed as of that time. This is sometimes called the episode date by other sources.",

"reported_cases": "The cumulative number of coronavirus cases that were reported as of that time. This reflects when cases were first announced by the state.",

"probable_cases": "The cumulative number of probable coronavirus cases that were confirmed as of that time. This reflects the results of antigen tests, a rapid testing technique different from the standard test.",

"reported_and_probable_cases": "The cumulative number of reported and probable coronavirus cases as of that time.",

"reported_deaths": "The cumulative number of deaths reported at that time."

}

}

}

}

}

}The LA Times publish a meticulous data dictionary for the 46 CSV and GeoJSON files that they maintain tracking the pandemic in their california-coronavirus-data GitHub repository.

To demonstrate the new column metadata feature, I wrote a script that converts their Markdown data dictionary into Datasette’s metadata format and publishes it along with imported CSV data from their repository.

You can explore the result at covid-19.datasettes.com/la-times—here’s their cdcr-prison-totals table tracking Covid cases in prisons operated by the California Department of Corrections and Rehabilitation.

register_commands() plugin hook

New register_commands() plugin hook allows plugins to register additional Datasette CLI commands, e.g.

datasette mycommand file.db. (#1449)

I originally built this because I thought I would need it for Datasette Desktop, then found it wasn’t necessary for that project after all.

I held off on implementing this for quite a while on the basis that plugins which needed their own CLI interface could implement one entirely separately as a Click-based CLI app—dogsheep-beta implements this pattern, offering both a dogsheep-beta index command and registering itself as a Datasette plugin.

The problem with plugins implementing their own separate CLI commands is that users then need to understand where they have been installed. Datasette is designed to work well with virtual environments, but if a plugin is installed into a virtual environment I can’t guarantee that any CLI tools it includes will execute when the user types their name.

Now that plugins can register datasette subcommand subcommands this problem has a solution: provided users can run datasette they’ll also be able to run CLI commands provided by plugins, without needing to understand how to modify their path.

I expect this to be particularly useful for Datasette installed through Homebrew, which invisibly sets up its own virtual environment into which plugins can be installed using datasette install plugin-name.

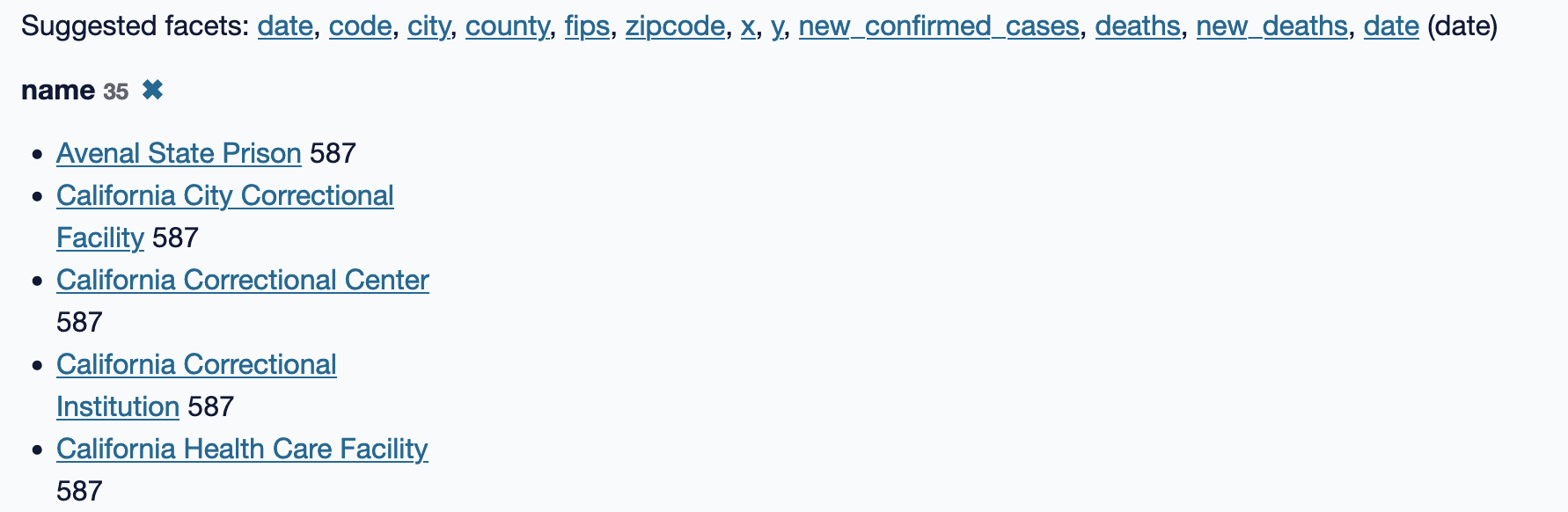

Count unique facet values with ?_facet_size=max

Adding

?_facet_size=maxto a table page now shows the number of unique values in each facet. (#1423)

When I’m using facets to explore data, I’m often interested in how many values are available in the facet—particularly if I’m faceting by a column such as country or state.

I added a ... link to show the maximim number of facets in Datasette 0.57. Clicking that link adds ?_facet_size=max to the URL, which now also adds a numeric count of the number of distinct facet values.

Here’s an example using that Californian prison data.

Upgrading httpx

Upgraded dependency httpx 0.20—the undocumented

allow_redirects=parameter to datasette.client is nowfollow_redirects=, and defaults toFalsewhere it previously defaulted toTrue. (#1488)

A while ago Tom Christie requested feedback on how httpx should handle redirects.

The requests library that inspired it automatically follows 301 and 302 redirects unless you explicitly tell it not to.

I’ve been caught out by this many times in the past—it’s not default behaviour that I want from my HTTP client library—so I chimed in as favouring a change in behaviour. I also suggested that follow_redirects=True would be a better term for it than allow_redirects=True.

Tom made that change for httpx 1.0, and then back-ported it for version 0.20—after all, pre-1.0 you’re allowed to make breaking changes like this.

... and Datasette broke, hard! Datasette embeds httpx pretty deeply inside itself, and the breaking change caused all kinds of errors and test failures.

This was the final push I needed to get 0.59 released.

(I just found out this also broke the Homebrew tests for Datasette, as those relied on datasette --get '/:memory:.json?sql=select+3*5' automatically following the redirect to /_memory.json?sql=select+3*5 instead.)

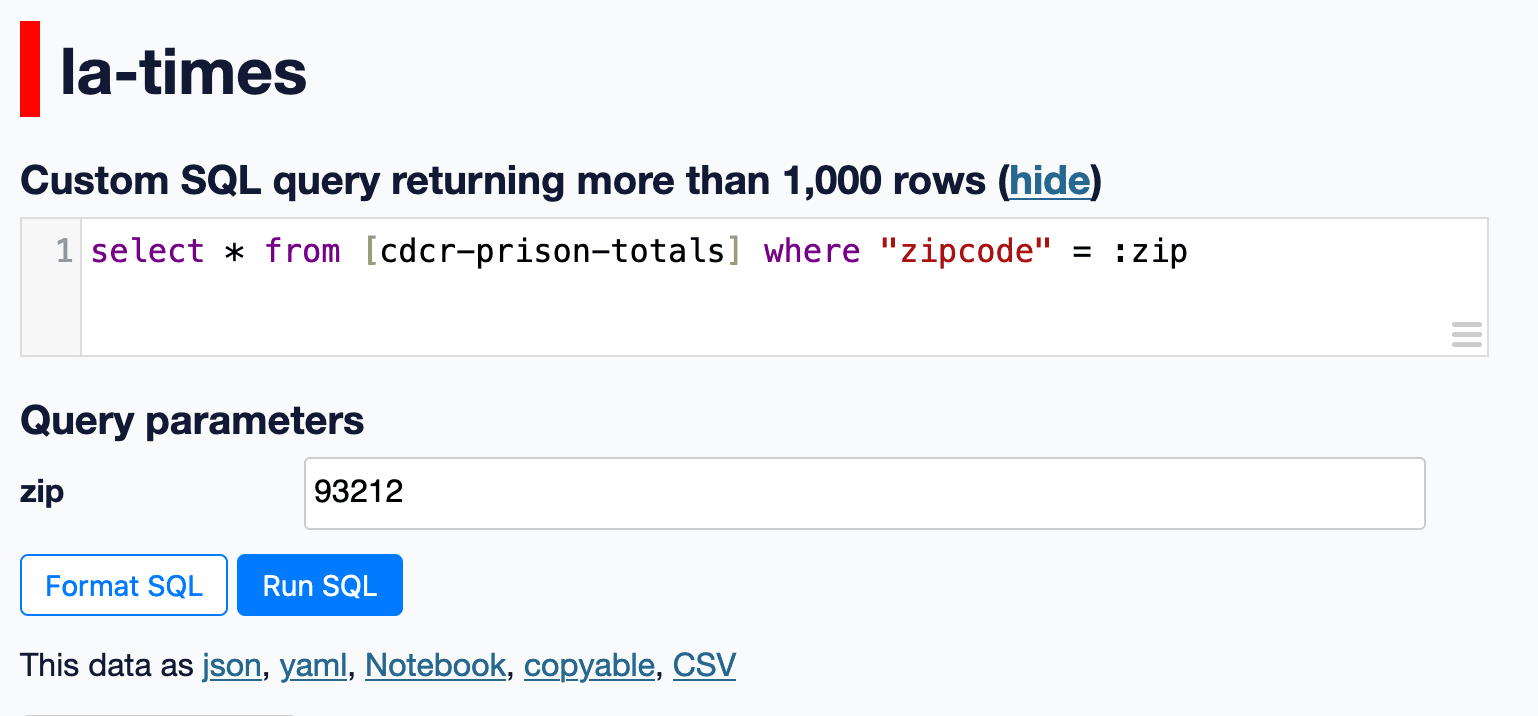

Code that figures out which named parameters a SQL query takes in order to display form fields for them is no longer confused by strings that contain colon characters. (#1421)

One of my favourite obscure features of Datasette is the way it can take a SQL query such as the following:

select * from [cdcr-prison-totals]

where "zipcode" = :zipAnd extract out that :zip parameter and turn it into an HTML form field, as seen here:

This used to use a regular expression, which meant that it could be confused by additional colons—the following SQL query for example:

select * from content

where created_time = '07:00'

and author = :authorI thought that solving this properly would require embedding a full SQLite-compatible SQL parser in Datasette.

Then I realized SQLite’s explain output included exactly the data I needed, for example:

| addr | opcode | p1 | p2 | p3 | p4 | p5 | comment |

|---|---|---|---|---|---|---|---|

| 0 | Init | 0 | 10 | 0 | 0 | ||

| 1 | OpenRead | 0 | 42 | 0 | 2 | 0 | |

| 2 | Rewind | 0 | 9 | 0 | 0 | ||

| 3 | Column | 0 | 1 | 1 | 0 | ||

| 4 | Ne | 2 | 8 | 1 | BINARY-8 | 82 | |

| 5 | Rowid | 0 | 3 | 0 | 0 | ||

| 6 | Column | 0 | 1 | 4 | 0 | ||

| 7 | ResultRow | 3 | 2 | 0 | 0 | ||

| 8 | Next | 0 | 3 | 0 | 1 | ||

| 9 | Halt | 0 | 0 | 0 | 0 | ||

| 10 | Transaction | 0 | 0 | 35 | 0 | 1 | |

| 11 | Variable | 1 | 2 | 0 | :name | 0 | |

| 12 | Goto | 0 | 1 | 0 | 0 |

The trick then is to run an explain, then find any rows with an opcode of Variable and read the p4 register to find out the name of those variables.

This is risky, since SQLite makes no promises about the stability of the explain output—but it’s such a useful trick that I’m now contemplating building an automated test suite around it such that if a future SQLite release breaks things I will at least know about it promptly.

Everything else

The

--corsoption now causes Datasette to return theAccess-Control-Allow-Headers: Authorizationheader, in addition toAccess-Control-Allow-Origin: *. (#1467)

This was a feature request from users of the datasette-auth-tokens plugin.

Renamed

--help-configoption to--help-settings. (#1431)

Part of my continuing goal to free up the term “config” to mean plugin configuration (which is currently mixed up with Datasette’s metadata concept) rather than meaning the options that can be passed to the Datasette CLI tool (now called settings).

datasette.databasesproperty is now a documented API. (#1443)

I’ve got into the habit of documenting any Datasette internals that I use in a plugin. In this case I needed it for datasette-block-robots.

The

base.htmltemplate now wraps everything other than the<footer>in a<div class="not-footer">element, to help with advanced CSS customization. (#1446)

I made this change so that Datasette Desktop could more easily implement a sticky footer that stuck to the bottom of the application window no matter how short or tall it was.

The render_cell() plugin hook can now return an awaitable function. This means the hook can execute SQL queries. (#1425)

Another example of the await me maybe pattern in action.

register_routes(datasette) plugin hook now accepts an optional

datasetteargument. (#1404)

This means plugins can conditionally register routes based on plugin configuration.

New

hide_sqlcanned query option for defaulting to hiding the SQL query used by a canned query, see Additional canned query options. (#1422)

The goal of canned queries is to provide an interface for people who don’t know SQL to execute queries written by other people, potentially providing their own inputs using the :parameter mechanism described above.

If it’s being used for that, there’s not much to be gained from making them scroll past the SQL query first!

Adding "hide_sql": true to the canned query configuration now defaults to hiding the query for them—though they can still click “Show SQL” to see it.

New

--cpuoption for datasette publish cloudrun. (#1420)

Google Cloud Run recently added the ability to specify 1, 2 or 4 vCPUs when running a deploy.

If Rich is installed in the same virtual environment as Datasette, it will be used to provide enhanced display of error tracebacks on the console. (#1416)

Rich is Will McGugan’s phenomenal Python library for building beautiful console interfaces. One of the many tricks up its sleeve is improved display of exceptions, including more detailed tracebacks and local variables. Datasette now takes advantage of this if Rich is installed in the same virtual environment.

datasette.utilsparse_metadata(content) function, used by the new datasette-remote-metadata plugin, is now a documented API. (#1405)

Another API that became documented after I used it in a plugin.

The datasette-remote-metadata plugin is pretty neat.

I sometimes find myself working on projects where I’m deploying a large database file—1 or 2 GB—to Google Cloud Run. Each deploy can take several minutes.

If I want to tweak a canned query or a few lines of text in the metadata for that deployment, it can be frustrating to have to push an entirely new deploy just to make those changes.

The remote metadata plugin allows me to host the metadata at a separate URL, which I can then update without needing a full deploy of the underlying database files.

The last two were simple bug fixes:

Releases this week

-

datasette-publish-vercel: 0.11—(17 releases total)—2021-10-18

Datasette plugin for publishing data using Vercel -

datasette-statistics: 0.2—(3 releases total)—2021-10-15

SQL statistics functions for Datasette -

datasette-auth-tokens: 0.3—(7 releases total)—2021-10-15

Datasette plugin for authenticating access using API tokens -

datasette: 0.59—(98 releases total)—2021-10-14

An open source multi-tool for exploring and publishing data

TIL this week

More recent articles

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026

- Deep Blue - 15th February 2026