Stable Diffusion is a really big deal

29th August 2022

If you haven’t been paying attention to what’s going on with Stable Diffusion, you really should be.

Stable Diffusion is a new “text-to-image diffusion model” that was released to the public by Stability.ai six days ago, on August 22nd.

It’s similar to models like OpenAI’s DALL-E, but with one crucial difference: Stability.ai released the whole thing.

You can try it out online at beta.dreamstudio.ai (currently for free). Type in a text prompt and the model will generate an image.

You can download and run the model on your own computer (if you have a powerful enough graphics card). Here’s an FAQ on how to do that.

You can use it for commercial and non-commercial purposes, under the terms of the CreativeML Open RAIL-M license, which lists usage restrictions that include not using it to break applicable laws, generate false information, discriminate against individuals or provide medical advice.

In just a few days, there has been an explosion of innovation around it. The things people are building are absolutely astonishing.

I’ve been tracking the r/StableDiffusion subreddit and following Stability.ai founder Emad Mostaque on Twitter.

img2img

Generating images from text is one thing, but generating images from other images is a whole new ballgame.

My favourite example so far comes from Reddit user argaman123. They created this image:

And added this prompt (or "something along those lines"):

A distant futuristic city full of tall buildings inside a huge transparent glass dome, In the middle of a barren desert full of large dunes, Sun rays, Artstation, Dark sky full of stars with a shiny sun, Massive scale, Fog, Highly detailed, Cinematic, Colorful

The model produced the following two images:

These are amazing. In my previous experiments with DALL-E I’ve tried to recreate photographs I have taken, but getting the exact composition I wanted has always proved impossible using just text. With this new capability I feel like I could get the AI to do pretty much exactly what I have in my mind.

Imagine having an on-demand concept artist that can generate anything you can imagine, and can iterate with you towards your ideal result. For free (or at least for very cheap).

You can run this today on your own computer, if you can figure out how to set it up. You can try it in your browser using Replicate or Hugging Face. This capability is apparently coming to the DreamStudio interface next week.

There’s so much more going on.

stable-diffusion-webui is an open source UI that you can run on your own machine, and it provides a powerful interface to the model. Here’s a Twitter thread showing what it can do.

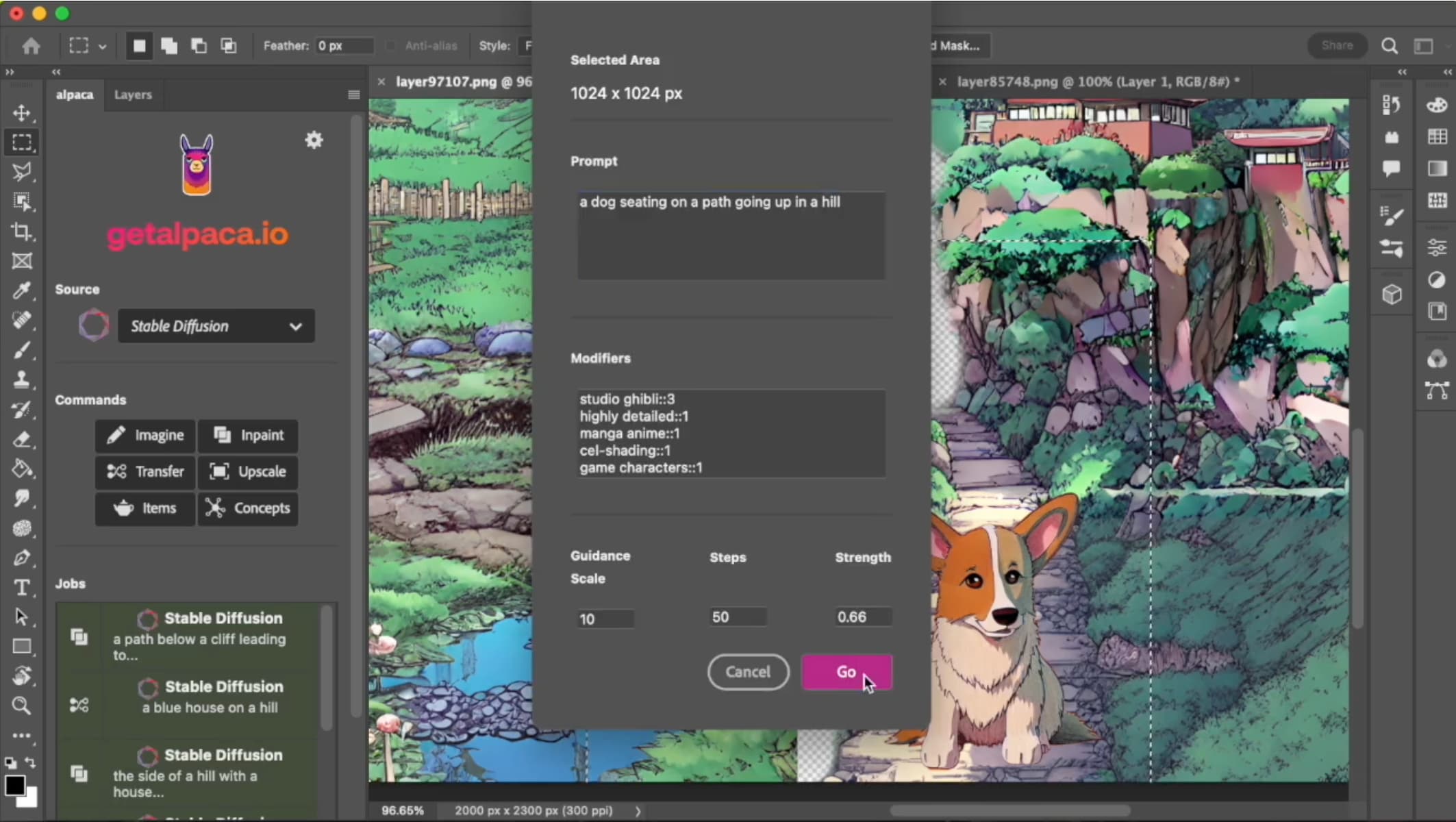

Reddit user alpacaAI shared a video demo of a Photoshop plugin they are developing which has to be seen to be believed. They have a registration form up on getalpaca.io for people who want to try it out once it’s ready.

Reddit user Hoppss ran a 2D animated clip from Disney’s Aladdin through img2img frame-by-frame, using the following parameters:

--prompt "3D render" --strength 0.15 --seed 82345912 --n_samples 1 --ddim_steps 100 --n_iter 1 --scale 30.0 --skip_grid

The result was a 3D animated video. Not a great quality one, but pretty stunning for a shell script and a two-word prompt!
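To make that frame-by-frame idea concrete, here is a minimal sketch of how such a batch could be assembled. It is not Hoppss’s actual script, which the post doesn’t show. The parameters are the ones quoted above; the script path `scripts/img2img.py`, the `--init-img` and `--outdir` flags, and the `frames/` directory are assumptions about a typical local setup. The code only builds the command lines and never runs the model.

```python
import shlex
from pathlib import Path

# Parameters exactly as quoted in the post.
PARAMS = [
    "--prompt", "3D render",
    "--strength", "0.15",
    "--seed", "82345912",
    "--n_samples", "1",
    "--ddim_steps", "100",
    "--n_iter", "1",
    "--scale", "30.0",
    "--skip_grid",
]


def build_commands(frame_paths, script="scripts/img2img.py", outdir="out"):
    """Return one argv list per frame, in sorted frame order.

    `script`, `--init-img` and `--outdir` are assumptions about the local
    img2img setup; nothing is executed here.
    """
    commands = []
    for frame in sorted(str(p) for p in frame_paths):
        commands.append(
            ["python", script, "--init-img", frame, "--outdir", outdir, *PARAMS]
        )
    return commands


if __name__ == "__main__":
    # Hypothetical frame files extracted from a video clip.
    frames = [Path("frames/0002.png"), Path("frames/0001.png")]
    for argv in build_commands(frames):
        print(shlex.join(argv))
```

Reusing the same seed and a low strength for every frame is presumably what keeps consecutive frames looking similar. Each list could then be passed to `subprocess.run`, and the output frames stitched back into a video with a separate tool.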

The best description I’ve seen so far of an iterative process to build up an image using Stable Diffusion comes from Andy Salerno: 4.2 Gigabytes, or: How to Draw Anything.

Ben Firshman has published detailed instructions on how to Run Stable Diffusion on your M1 Mac’s GPU.

And there’s so much more to come

All of this happened in just six days since the model release. Emad Mostaque on Twitter:

We use as much compute as stable diffusion used every 36 hours for our upcoming open source models

This made me think of Google’s Parti paper, which included a demonstration that showed that once the model was scaled to 20bn parameters it could generate images with correctly spelled text!

Ethics: will you be an AI vegan?

I’m finding the ethics of all of this extremely difficult.

Stable Diffusion has been trained on millions of copyrighted images scraped from the web.

The Stable Diffusion v1 Model Card has the full details, but the short version is that it uses LAION-5B (5.85 billion image-text pairs) and its laion-aesthetics v2 5+ subset (which I think is ~600M pairs filtered for aesthetics). These images were scraped from the web.

I’m not qualified to speak to the legality of this. I’m personally more concerned with the morality.

The final model is, I believe, around 4.2GB of data: a binary blob of floating point numbers. The fact that it can compress such an enormous quantity of visual information into such a small space is itself a fascinating detail.

As such, each image in the training set contributes only a tiny amount of information—a few tweaks to some numeric weights spread across the entire network.
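A rough back-of-the-envelope calculation shows just how tiny. This sketch divides the approximate model size by the training set sizes mentioned in this post. Treating 4.2GB as 4.2 × 10⁹ bytes is an assumption, and the ratio is only an average. It says nothing about how information is actually distributed across the weights.

```python
# "around 4.2GB", treated as decimal gigabytes (an assumption).
MODEL_BYTES = 4.2e9

# Training set sizes as quoted elsewhere in this post.
TRAINING_SET_SIZES = {
    "laion-aesthetics v2 5+ (~600M pairs)": 600e6,
    "2.3 billion images (from the 30th August update)": 2.3e9,
    "LAION-5B (5.85 billion pairs)": 5.85e9,
}


def bytes_per_image(model_bytes, image_count):
    """Average number of model bytes per training image (a naive ratio)."""
    return model_bytes / image_count


if __name__ == "__main__":
    for label, count in TRAINING_SET_SIZES.items():
        ratio = bytes_per_image(MODEL_BYTES, count)
        print(f"{label}: {ratio:.2f} bytes per image")
```

Whichever figure you pick, the average works out to somewhere between under one byte and about seven bytes of model per training image.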

But... the people who created these images did not give their consent. And the model can be seen as a direct threat to their livelihoods. No-one expected creative AIs to come for the artist jobs first, but here we are!

I’m still thinking through this, and I’m eager to consume more commentary about it. But my current mental model is to think about this in terms of veganism, as an analogy for people making their own personal ethical decisions.

I know many vegans. They have access to the same information as I do about the treatment of animals, and they have made informed decisions about their lifestyle, which I fully respect.

I myself remain a meat-eater.

There will be many people who will decide that the AI models trained on copyrighted images are incompatible with their values. I understand and respect that decision.

But when I look at that img2img example of the futuristic city in the dome, I can’t resist imagining what I could do with that capability.

If someone were to create a vegan model, trained entirely on out-of-copyright images, I would be delighted to promote it and try it out. If its results were good enough, I might even switch to it entirely.

Understanding the training data

Update: 30th August 2022. Andy Baio and I worked together on a deep dive into the training data behind Stable Diffusion. Andy wrote up some of our findings in Exploring 12 Million of the 2.3 Billion Images Used to Train Stable Diffusion’s Image Generator.

Indistinguishable from magic

Just a few months ago, if I’d seen someone on a fictional TV show using an interface like that Photoshop plugin I’d have grumbled about how that was a step too far even by the standards of American network TV dramas.

Science fiction is real now. Machine learning generative models are here, and the rate at which they are improving is unreal. It’s worth paying real attention to what they can do and how they are developing.

I’m tweeting about this stuff a lot these days. Follow @simonw on Twitter for more.
