s3-ocr: Extract text from PDF files stored in an S3 bucket

30th June 2022

I’ve released s3-ocr, a new tool that runs Amazon’s Textract OCR text extraction against PDF files in an S3 bucket, then writes the resulting text out to a SQLite database with full-text search configured so you can run searches against the extracted data.

You can search through a demo of 697 pages of OCR’d text at s3-ocr-demo.datasette.io/pages/pages.

Textract works extremely well: it handles dodgy scanned PDFs full of typewritten code and reads handwritten text better than I can! It charges $1.50 per thousand pages processed.
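To put that price in context, here is a small sketch of the arithmetic using the page counts mentioned in this post. The rate is the one quoted above; the function name is made up for illustration, and current AWS pricing may differ.

```python
# Rate quoted in this post: $1.50 per 1,000 pages processed.
# Treat this as an assumption and check current AWS pricing before relying on it.
PRICE_PER_THOUSAND_PAGES = 1.50


def estimate_cost(pages):
    """Return the estimated Textract cost in dollars for a number of pages."""
    return pages * PRICE_PER_THOUSAND_PAGES / 1000


# The demo (697 pages) and the larger archive run (around 7,000 pages)
for pages in (697, 7000):
    print(f"{pages} pages: about ${estimate_cost(pages):.2f}")
```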

Why I built this

My initial need for this is a collaboration I have running with the San Francisco Microscopy Society. They’ve been digitizing their archives—which stretch back to 1870!—and were looking for help turning the digital scans into something more useful.

The archives are full of hand-written and type-written notes, scanned and stored as PDFs.

I decided to wrap my work up as a tool because I’m sure there are a LOT of organizations out there with a giant bucket of PDF files that would benefit from being able to easily run OCR and turn the results into a searchable database.

Running Textract directly against large numbers of files is somewhat inconvenient (here’s my earlier TIL about it). s3-ocr is my attempt to make it easier.

Tutorial: How I built that demo

The demo instance uses three PDFs from the Library of Congress Harry Houdini Collection on the Internet Archive:

- The unmasking of Robert-Houdin from 1908

- The practical magician and ventriloquist’s guide: a practical manual of fireside magic and conjuring illusions: containing also complete instructions for acquiring & practising the art of ventriloquism from 1876

- Latest magic, being original conjuring tricks from 1918

I started by downloading PDFs of those three files.

Then I installed the two tools I needed:

pip install s3-ocr s3-credentials

I used my s3-credentials tool to create a new S3 bucket and credentials with the ability to write files to it, with the new --statement option (which I released today) to add textract permissions to the generated credentials:

s3-credentials create s3-ocr-demo --statement '{

"Effect": "Allow",

"Action": "textract:*",

"Resource": "*"

}' --create-bucket > ocr.json

(Note that you don’t need to use s3-credentials at all if you have AWS credentials configured on your machine with root access to your account—just leave off the -a ocr.json options in the following examples.)

s3-ocr-demo is now a bucket I can use for the demo. ocr.json contains JSON with an access key and secret key for an IAM user account that can interact with that bucket, and also has permission to access the AWS Textract APIs.

I uploaded my three PDFs to the bucket:

s3-credentials put-object s3-ocr-demo latestmagicbeing00hoff.pdf latestmagicbeing00hoff.pdf -a ocr.json

s3-credentials put-object s3-ocr-demo practicalmagicia00harr.pdf practicalmagicia00harr.pdf -a ocr.json

s3-credentials put-object s3-ocr-demo unmaskingrobert00houdgoog.pdf unmaskingrobert00houdgoog.pdf -a ocr.json

(I often use Transmit as a GUI for this kind of operation.)

Then I kicked off OCR jobs against every PDF file in the bucket:

% s3-ocr start s3-ocr-demo --all -a ocr.json

Found 0 files with .s3-ocr.json out of 3 PDFs

Starting OCR for latestmagicbeing00hoff.pdf, Job ID: f66bc2d00fb75d1c42d1f829e5b6788891f9799fda404c4550580959f65a5402

Starting OCR for practicalmagicia00harr.pdf, Job ID: ef085728135d524a39bc037ad6f7253284b1fdbeb728dddcfbb260778d902b55

Starting OCR for unmaskingrobert00houdgoog.pdf, Job ID: 93bd46f02eb099eca369c41e384836d2bd3199b95d415c0257ef3fa3602cbef9

The --all option scans for any file with a .pdf extension. You can pass explicit file names instead if you just want to process one or two files at a time.

This returns straight away, but the OCR process itself can take several minutes depending on the size of the files.

The job IDs can be used to inspect the progress of each task like so:

% s3-ocr inspect-job f66bc2d00fb75d1c42d1f829e5b6788891f9799fda404c4550580959f65a5402

{

"DocumentMetadata": {

"Pages": 244

},

"JobStatus": "SUCCEEDED",

"DetectDocumentTextModelVersion": "1.0"

}

Once the job completed, I could preview the text extracted from the PDF like so:

% s3-ocr text s3-ocr-demo latestmagicbeing00hoff.pdf

111

.

116

LATEST MAGIC

BEING

ORIGINAL CONJURING TRICKS

INVENTED AND ARRANGED

BY

PROFESSOR HOFFMANN

(ANGELO LEWIS, M.A.)

Author of "Modern Magic," etc.

WITH NUMEROUS ILLUSTRATIONS

FIRST EDITION

NEW YORK

SPON & CHAMBERLAIN, 120 LIBERTY ST.

...

To create a SQLite database with a table containing rows for every page of scanned text, I ran this command:

% s3-ocr index s3-ocr-demo pages.db -a ocr.json

Fetching job details [####################################] 100%

Populating pages table [####--------------------------------] 13% 00:00:34
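For a sense of what "populating a pages table" involves, here is a rough sketch of turning Textract output into one text value per page. It relies on the documented Textract response shape, where each entry in "Blocks" has a "BlockType" and, for LINE blocks, "Text" and "Page" fields. The function name is hypothetical, and this is not necessarily how s3-ocr itself does it.

```python
from collections import defaultdict


def pages_from_blocks(blocks):
    """Group Textract LINE blocks by page number and join them into page text.

    Returns a dict mapping page number to the text of that page.
    """
    lines_by_page = defaultdict(list)
    for block in blocks:
        # PAGE and WORD blocks are skipped: LINE blocks already carry the text
        if block.get("BlockType") == "LINE":
            # Assumption: fall back to page 1 if no Page field is present
            lines_by_page[block.get("Page", 1)].append(block["Text"])
    return {
        page: "\n".join(lines)
        for page, lines in sorted(lines_by_page.items())
    }
```

Each key and value in the returned dictionary could then become one row in a table of pages.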

I then published the resulting pages.db SQLite database using Datasette—you can explore it here.
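If you would rather query the text from Python than through Datasette, SQLite's FTS5 extension can do the searching. The sketch below builds its own small in-memory table so it runs anywhere FTS5 is available. The table name pages_fts and the columns path, page and text are assumptions for illustration, and the real schema of pages.db may differ.

```python
import sqlite3


def build_search_db(rows):
    """Create an in-memory FTS5 table from (path, page, text) tuples."""
    db = sqlite3.connect(":memory:")
    # path and page are stored but not indexed; only text is searchable
    db.execute(
        "CREATE VIRTUAL TABLE pages_fts USING fts5("
        "path UNINDEXED, page UNINDEXED, text)"
    )
    db.executemany("INSERT INTO pages_fts VALUES (?, ?, ?)", rows)
    return db


def search(db, query):
    """Return (path, page) pairs for pages matching an FTS5 query, best first."""
    return db.execute(
        "SELECT path, page FROM pages_fts WHERE pages_fts MATCH ? ORDER BY rank",
        (query,),
    ).fetchall()


db = build_search_db([
    ("latestmagicbeing00hoff.pdf", 1, "LATEST MAGIC BEING ORIGINAL CONJURING TRICKS"),
    ("practicalmagicia00harr.pdf", 5, "acquiring the art of ventriloquism"),
])
print(search(db, "conjuring"))
```

The default FTS5 tokenizer is case-insensitive for ASCII text, so a lowercase query matches the uppercase title page.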

How s3-ocr works

s3-ocr works by calling Amazon’s S3 and Textract APIs.

Textract only works against PDF files in asynchronous mode: you call an API endpoint to tell it “start running OCR against this PDF file in this S3 bucket”, then wait for it to finish—which can take several minutes.

It defaults to storing the OCR results in its own storage, expiring after seven days. You can instead tell it to store them in your own S3 bucket—I use that option in s3-ocr.
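Here is a minimal sketch of that start-then-wait pattern using the boto3 Textract client methods start_document_text_detection and get_document_text_detection. The helper names, the polling interval and the textract-output prefix are assumptions for illustration rather than s3-ocr's actual code. The client is passed in as an argument, so you would create it yourself with boto3.client("textract").

```python
import time


def start_ocr(textract, bucket, key, output_prefix="textract-output"):
    """Start an asynchronous text detection job and return its job ID."""
    response = textract.start_document_text_detection(
        DocumentLocation={"S3Object": {"Bucket": bucket, "Name": key}},
        # Write results to our own bucket rather than Textract's storage
        OutputConfig={"S3Bucket": bucket, "S3Prefix": output_prefix},
    )
    return response["JobId"]


def wait_for_job(textract, job_id, poll_seconds=10, sleep=time.sleep):
    """Poll until the job is no longer IN_PROGRESS and return its final status."""
    while True:
        response = textract.get_document_text_detection(JobId=job_id)
        status = response["JobStatus"]
        if status != "IN_PROGRESS":
            # SUCCEEDED, FAILED or PARTIAL_SUCCESS
            return status
        sleep(poll_seconds)
```

Taking the client and the sleep function as parameters keeps the helpers easy to exercise without touching AWS.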

A design challenge I faced was that I wanted to make the command restartable and resumable: if the user cancelled the task, I wanted to be able to pick up from where it had got to. I also wanted to be able to run it again after adding more PDFs to the bucket without repeating work for the previously processed files.

I also needed to persist those job IDs: Textract writes the OCR results to keys in the bucket called textract-output/JOB_ID/1-?—but there’s no indication as to which PDF file the results correspond to.

My solution is to write tiny extra JSON files to the bucket when the OCR job is first started.

If you have a file called latestmagicbeing00hoff.pdf the start command will create a new file called latestmagicbeing00hoff.pdf.s3-ocr.json with the following content:

{

"job_id": "f66bc2d00fb75d1c42d1f829e5b6788891f9799fda404c4550580959f65a5402",

"etag": "\"d79af487579dcbbef26c9b3be763eb5e-2\""

}

This associates the job ID with the PDF file. It also records the original ETag of the PDF file, so that in the future I can implement a system that can re-run OCR if the PDF has been updated.
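Writing such a marker file might look roughly like the sketch below. It uses the boto3 S3 client methods head_object (to read the PDF's current ETag) and put_object (to write the JSON). The function names are hypothetical and this is not taken from the s3-ocr source. The client is passed in, for example one created with boto3.client("s3").

```python
import json

SIDECAR_SUFFIX = ".s3-ocr.json"


def sidecar_key(pdf_key):
    """Return the key of the marker file that sits next to a PDF."""
    return pdf_key + SIDECAR_SUFFIX


def write_sidecar(s3, bucket, pdf_key, job_id):
    """Record the Textract job ID and the PDF's ETag next to the PDF."""
    # S3 returns the ETag wrapped in double quotes, which is why the
    # stored JSON value contains escaped quote characters
    etag = s3.head_object(Bucket=bucket, Key=pdf_key)["ETag"]
    body = json.dumps({"job_id": job_id, "etag": etag}, indent=2)
    key = sidecar_key(pdf_key)
    s3.put_object(Bucket=bucket, Key=key, Body=body.encode("utf-8"))
    return key
```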

The existence of these files lets me do two things:

- If you run s3-ocr start s3-ocr-demo --all it can avoid re-submitting PDF files that have already been sent for OCR, by checking for the existence of the .s3-ocr.json file.

- When you later ask for the results of the OCR it can use these files to associate the PDF with the results.
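The first of those checks can be sketched as a pure function over a listing of bucket keys. The function name is hypothetical and the real tool may do this differently. The idea is simply that a PDF needs OCR only if no matching .s3-ocr.json key exists.

```python
def pdfs_needing_ocr(keys):
    """Return the PDF keys that have no .s3-ocr.json marker file yet."""
    keys = set(keys)
    pdfs = sorted(k for k in keys if k.endswith(".pdf"))
    return [k for k in pdfs if k + ".s3-ocr.json" not in keys]


bucket_keys = [
    "latestmagicbeing00hoff.pdf",
    "latestmagicbeing00hoff.pdf.s3-ocr.json",
    "practicalmagicia00harr.pdf",
]
# Only the PDF without a marker file is left to process
print(pdfs_needing_ocr(bucket_keys))
```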

Scattering .s3-ocr.json files all over the place feels a little messy, so I have an open issue considering moving them all to a s3-ocr/ prefix in the bucket instead.

Try it and let me know what you think

This is a brand new project, but I think it’s ready for other people to start trying it out.

I ran it against around 7,000 pages from 531 PDF files in the San Francisco Microscopy Society archive and it seemed to work well!

If you try this out and it works (or it doesn’t work) please let me know via Twitter or GitHub.

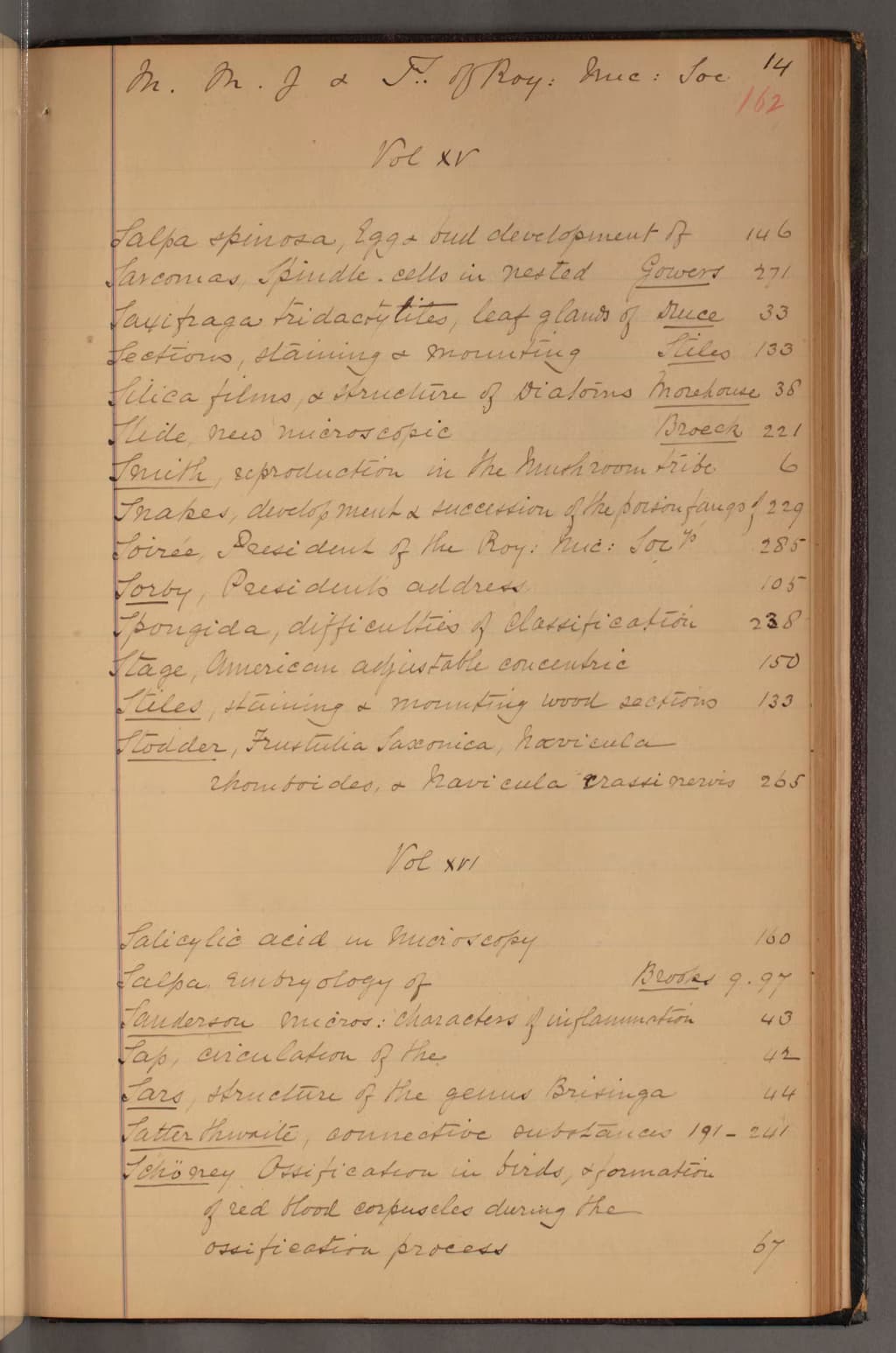

A challenging example page

Here’s one of the more challenging pages I processed using Textract:

Here’s the result:

In. In J a ... the Joe 14 162 Volxv Lalpa spinosa, Eggt bud development. of 146 Farcomas spindle. cells in nested gowers 271 Fayigaga tridactylites, leaf glaur of ruce 33 staining & mounting Stiles 133 tilica films, a structure of Diatoins morehouse 38 thile new microscopic Broeck 22 / Smith reproduction in the huntroom tribe 6 Trakes, develop mouht succession of the porsion tango/229 Soirce President of the Roy: truc: Soo 285 forby, Presidents address 105 pongida, difficulties of classification 238 tage, american adjustable concentric 150 ttlese staining & mountring wood sections 133 Stodder, Frustulia Iasconica, havicula chomboides, & havi cula crassinervis 265 Vol XVI falicylic acid u movorcopy 160 falpar enctry ology of Brooke 9.97 Sanderson micros: characters If inflammation 43 tap, circulation of the 42 Jars, structure of the genus Brisinga 44 latter throvite connective substances 191- 241 Jehorey Cessification in birds, formation of ed blood corpuseles during the ossification process by

Releases this week

- s3-ocr: 0.4 (4 releases total), 2022-06-30. Tools for running OCR against files stored in S3

- s3-credentials: 0.12 (12 releases total), 2022-06-30. A tool for creating credentials for accessing S3 buckets

- datasette-scale-to-zero: 0.1.2 (3 releases total), 2022-06-23. Quit Datasette if it has not received traffic for a specified time period

