AI-enhanced development makes me more ambitious with my projects

27th March 2023

The thing I’m most excited about in our weird new AI-enhanced reality is the way it allows me to be more ambitious with my projects.

As an experienced developer, I find that ChatGPT (and GitHub Copilot) save me an enormous amount of “figuring things out” time. For everything from writing a for loop in Bash to remembering how to make a cross-domain CORS request in JavaScript, I don’t even need to look things up any more: I can just prompt it and get the right answer 80% of the time.

This doesn’t just make me more productive: it lowers my bar for when a project is worth investing time in at all.

In the past I’ve had plenty of ideas for projects which I’ve ruled out because they would take a day—or days—of work to get to a point where they’re useful. I have enough other stuff to build already!

But if ChatGPT can drop that down to an hour or less, those projects can suddenly become viable.

Which means I’m building all sorts of weird and interesting little things that previously I wouldn’t have invested the time in.

I’ll describe my latest one of these mini-projects in detail.

Using ChatGPT to build a system to archive ChatGPT messages

I use ChatGPT a lot, and I want my own archive of conversations I’ve had with it.

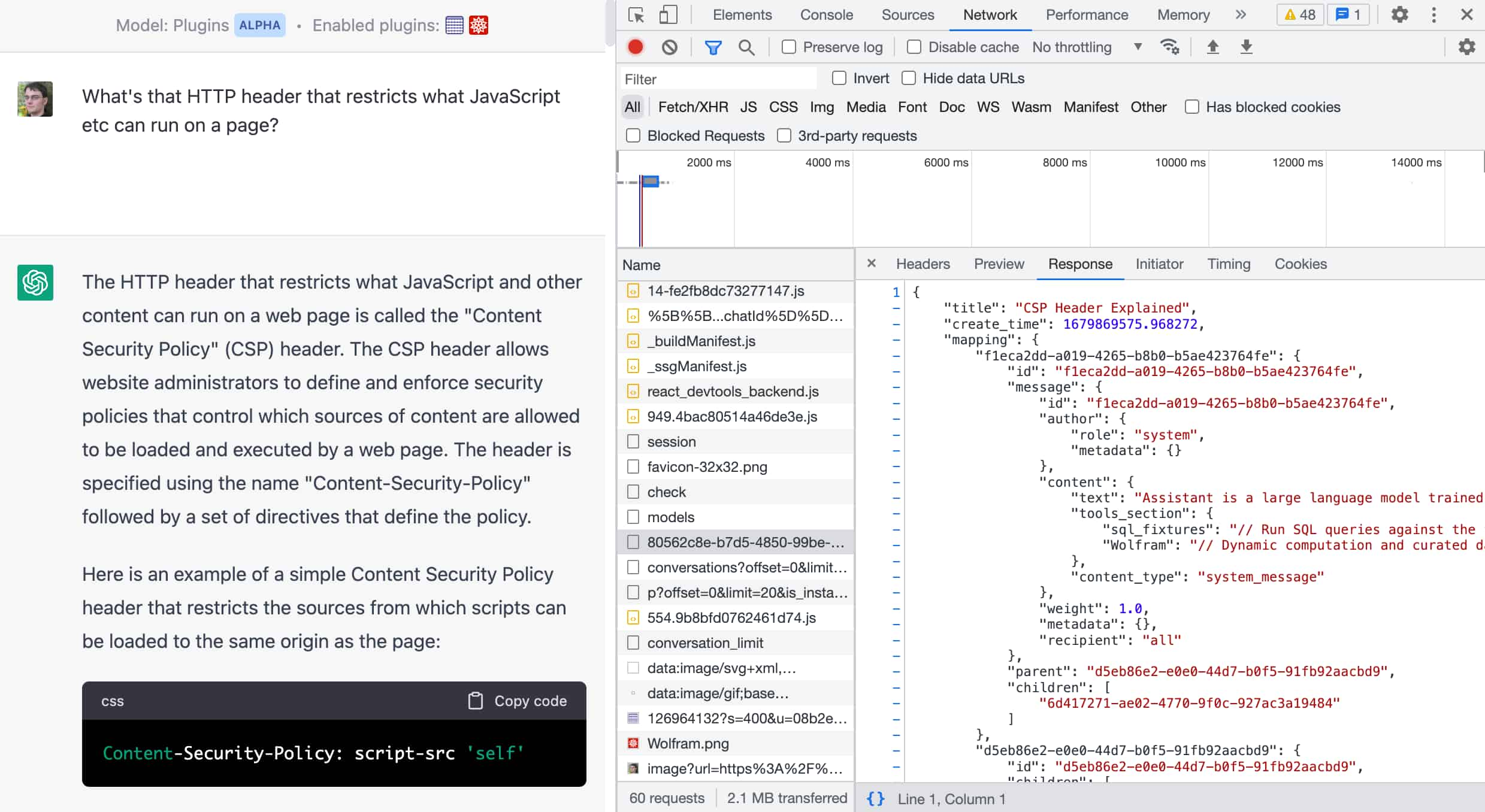

There’s no API for retrieving previous conversations at the moment, but if you open up the browser DevTools and watch the network tab you can see it loading JSON any time you navigate to an older conversation.

This gave me an idea for a solution...

What if I could run some JavaScript on the ChatGPT site that grabbed that JSON data and sent it off to my own server?

I couldn’t find the raw data in JavaScript variables available to the console, so I came up with an alternative approach: could I patch the default fetch() function to intercept calls made by the ChatGPT code to that endpoint, and capture the data that way?

Normally this is where the project would end. Figuring out how to patch fetch() like that is non-trivial—where I’m using the software engineer’s definition of “non-trivial” as meaning “I don’t know how to do that off the top of my head”. I’d have to do some thinking and research! I’m already tempted to drop this idea and work on something else.

But now I have ChatGPT... so I prompted it:

Write a new JavaScript fetch() function which calls the old one but also saves the JSON that is retrieved—if it is used to retrieve JSON—in a window.fetchedData object

It gave me some code, but it wasn’t quite what I wanted. So I added a follow-up prompt:

No I need to replace the old window.fetch() function entirely—I want to patch it so that I can intercept calls made by other existing code

And it produced the following:

    // Define a global object to store fetched JSON data
    window.fetchedData = {};

    // Store a reference to the original fetch function
    const originalFetch = window.fetch;

    // Define a new fetch function that wraps the original fetch
    window.fetch = async function(url, options) {
      try {
        // Call the original fetch function
        const response = await originalFetch(url, options);

        // Check if the response has a JSON content type
        const contentType = response.headers.get('content-type');
        if (contentType && contentType.includes('application/json')) {
          // If the response is JSON, clone the response so we can read it twice
          const responseClone = response.clone();
          // Parse the JSON data and save it to the fetchedData object
          const jsonData = await responseClone.json();
          window.fetchedData[url] = jsonData;
        }

        // Return the original response
        return response;
      } catch (error) {
        // Handle any errors that occur during the fetch
        console.error('Error fetching and saving JSON:', error);
        throw error;
      }
    };

That’s exactly what I wanted!

I pasted it into the DevTools console on the ChatGPT site and clicked on some links. It worked as intended.

Here’s that full ChatGPT transcript that got me to this point.

So now I have a prototype! I’ve proved to myself that I can intercept the JSON data fetched by ChatGPT’s own web application code.
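The same wrapping technique can be exercised outside the browser. The sketch below is a minimal illustration rather than the code from the transcript: `patchFetch`, `makeFakeResponse` and `fakeWindow` are hypothetical names introduced here, and the stub response only imitates the parts of the Fetch API that the wrapper touches (`headers.get`, `clone` and `json`).

```javascript
// Hypothetical helper: wrap target.fetch so that JSON responses are also
// handed to onJson. Returns a function that restores the original fetch.
function patchFetch(target, onJson) {
  const originalFetch = target.fetch;
  target.fetch = async function (url, options) {
    const response = await originalFetch.call(target, url, options);
    const contentType = response.headers.get("content-type");
    if (contentType && contentType.includes("application/json")) {
      // Clone so the calling code can still read the body itself
      const data = await response.clone().json();
      // Assumes url is a string or URL object, not a Request
      onJson(String(url), data);
    }
    return response;
  };
  return function unpatch() {
    target.fetch = originalFetch;
  };
}

// Stub standing in for a browser Response (an assumption, for demonstration only)
function makeFakeResponse(body, contentType) {
  return {
    headers: {
      get: (name) => (name.toLowerCase() === "content-type" ? contentType : null),
    },
    clone() {
      return makeFakeResponse(body, contentType);
    },
    async json() {
      return JSON.parse(body);
    },
  };
}

// Stub standing in for window, with a fetch that always returns the same JSON
const fakeWindow = {
  fetch: async (url) => makeFakeResponse('{"title": "Example"}', "application/json"),
};

const fetchedData = {};
const unpatch = patchFetch(fakeWindow, (url, data) => {
  fetchedData[url] = data;
});
```

In a browser, `patchFetch(window, ...)` would play the same role, and calling the returned `unpatch()` puts the original function back.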

I only wanted to run my code on requests that matched https://chat.openai.com/backend-api/conversation/...—I could write a regex for that, but I’d have to remember to escape the necessary characters. ChatGPT did that for me too:

    const pattern = /^https:\/\/chat\.openai\.com\/backend-api\/conversation\//;

So now I have the key building blocks I need for my solution: I can intercept JSON fetches and then filter to just the data from the endpoint I care about.
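As a quick check of that filter, the sketch below wraps the pattern in a small helper. The capture group and the name `conversationIdFromUrl` are additions for illustration, and the IDs in the example URLs are made up.

```javascript
// The same pattern, with a capture group added for the part after /conversation/
const conversationPattern =
  /^https:\/\/chat\.openai\.com\/backend-api\/conversation\/(.*)/;

// Hypothetical helper: return the conversation ID, or null if the URL is not
// a conversation endpoint
function conversationIdFromUrl(url) {
  const match = String(url).match(conversationPattern);
  return match ? match[1] : null;
}

console.log(
  conversationIdFromUrl("https://chat.openai.com/backend-api/conversation/abc-123")
); // prints "abc-123"
console.log(
  conversationIdFromUrl("https://chat.openai.com/backend-api/models")
); // prints null
```

Because the pattern requires a slash after `conversation`, a URL such as `/backend-api/conversations?offset=0` is not matched.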

But I need CORS support

My plan was to have my intercepting fetch() call POST the JSON data to my own Datasette Cloud instance, similar to how I record scraped Hacker News listings as described in this post about Datasette’s new write API.

One big problem: this means that code running on the chat.openai.com domain needs to POST JSON to another server. And that means the other server needs to be serving CORS headers.

Datasette Cloud doesn’t (yet) support CORS—and I wasn’t about to implement a new production feature there just so I could solve a problem for this prototype.

What I really needed was some kind of CORS proxy... a URL running somewhere which forwards traffic on to Datasette Cloud but adds CORS headers to enable it to be called from elsewhere.
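To make that requirement concrete, here is a rough sketch of the response headers such a proxy would need to send so that a page on chat.openai.com can POST JSON with an Authorization header. The function name `corsHeadersFor` and the allow-list are hypothetical; the header names themselves are the standard CORS ones.

```javascript
// Origins allowed to call the proxy (an assumption for this sketch)
const ALLOWED_ORIGINS = ["https://chat.openai.com"];

// Hypothetical helper: build the CORS response headers for a request Origin.
// Returns an empty object when the origin is not on the allow-list.
function corsHeadersFor(origin) {
  if (!ALLOWED_ORIGINS.includes(origin)) {
    return {};
  }
  return {
    "Access-Control-Allow-Origin": origin,
    // A JSON Content-Type or an Authorization header triggers a preflight
    // OPTIONS request, so both are listed explicitly here
    "Access-Control-Allow-Headers": "Authorization, Content-Type",
    "Access-Control-Allow-Methods": "GET, POST, OPTIONS",
    // Tell caches that the response depends on the request's Origin
    "Vary": "Origin",
  };
}

console.log(corsHeadersFor("https://chat.openai.com"));
```

A proxy would attach these headers both to the preflight response and to the forwarded response itself.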

This represents another potential blocking point: do I really want to implement an entire proxy web application just for this little project?

Here’s my next ChatGPT prompt:

Write a web app in python Starlette which has CORS enabled—including for the auth header—and forwards all incoming requests to any path to another server host specified in an environment variable

I like Starlette and I know it has CORS support and is great for writing proxies. I was hopeful that GPT-4 had seen its documentation before the September 2021 cut-off date.

ChatGPT wrote me some very solid code! It used the python-dotenv package though, and I didn’t feel like using that dependency. So I added:

Rewrite that to use os.environ

The exception handling looked wrong to me, so I said:

Catch httpx.HTTPError as the only exception you care about

And after just those three prompts it produced the following:

    import os
    from starlette.applications import Starlette
    from starlette.middleware import Middleware
    from starlette.middleware.cors import CORSMiddleware
    from starlette.routing import Route
    from starlette.requests import Request
    from starlette.responses import JSONResponse
    import httpx

    TARGET_SERVER_HOST = os.environ.get("TARGET_SERVER_HOST")

    middleware = [
        Middleware(
            CORSMiddleware,
            allow_origins=["*"],
            allow_credentials=True,
            allow_methods=["*"],
            allow_headers=["*"],
        )
    ]

    app = Starlette(middleware=middleware)


    async def forward_request(request: Request):
        try:
            async with httpx.AsyncClient() as client:
                target_url = f"{TARGET_SERVER_HOST}{request.url.path}"
                method = request.method
                headers = dict(request.headers)
                if "host" in headers:
                    del headers["host"]
                if request.method in ["POST", "PUT", "PATCH"]:
                    request_body = await request.json()
                else:
                    request_body = None

                response = await client.request(
                    method, target_url, headers=headers, json=request_body
                )
                return JSONResponse(response.json(), status_code=response.status_code)
        except httpx.HTTPError as e:
            return JSONResponse({"error": str(e)}, status_code=500)


    app.add_route("/{path:path}", forward_request, methods=["GET", "POST", "PUT", "PATCH", "DELETE"])

    if __name__ == "__main__":
        import uvicorn

        uvicorn.run(app, host="0.0.0.0", port=8000)

I tested this locally... and spotted a bug. It would fail with a 500 error if the incoming request included a content-length header. I fixed that myself by adding this code:

    if "content-length" in headers:
        del headers["content-length"]
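The same idea works as a standalone sketch, written in JavaScript to match the browser-side code in this post: drop any headers that describe the incoming request rather than the forwarded one. `headersToForward` and the `DROPPED` list are hypothetical names. I haven't established why the stale content-length caused the 500. One plausible explanation, offered only as an assumption, is that the proxy re-encodes the JSON body, so the original length may no longer match.

```javascript
// Headers that should not be copied from the incoming request (assumed list)
const DROPPED = new Set(["host", "content-length"]);

// Hypothetical helper: copy headers, skipping the ones in DROPPED.
// Header names are compared case-insensitively.
function headersToForward(incoming) {
  const result = {};
  for (const [name, value] of Object.entries(incoming)) {
    if (!DROPPED.has(name.toLowerCase())) {
      result[name] = value;
    }
  }
  return result;
}

console.log(
  headersToForward({
    Host: "proxy.example.com",
    "Content-Length": "42",
    Authorization: "Bearer dstok_my-token-here",
    "Content-Type": "application/json",
  })
);
```

Only the Authorization and Content-Type headers survive, leaving the HTTP client free to compute a fresh content-length for the body it actually sends.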

My finished code is here. Here’s the ChatGPT transcript.

I deployed this to Vercel using the method described in this TIL—and now I had a working proxy server.

Creating the tables and a token

ChatGPT had got me a long way. The rest of my implementation was now a small enough lift that I could quickly finish it by myself.

I created two tables in my Datasette Cloud instance by executing the following SQL (using the datasette-write plugin):

    create table chatgpt_conversation (
      id text primary key,
      title text,
      create_time float,
      moderation_results text,
      current_node text,
      plugin_ids text
    );

    create table chatgpt_message (
      id text primary key,
      conversation_id text references chatgpt_conversation(id),
      author_role text,
      author_metadata text,
      create_time float,
      content text,
      end_turn integer,
      weight float,
      metadata text,
      recipient text
    );

Then I made myself a Datasette API token with permission to insert-row and update-row just for those two tables, using the new finely grained permissions feature in the 1.0 alpha series.

The last step was to combine this all together into a fetch() function that did the right thing. I wrote this code by hand, using the ChatGPT prototype as a starting point:

    const TOKEN = "dstok_my-token-here";

    // Store a reference to the original fetch function
    window.originalFetch = window.fetch;

    // Define a new fetch function that wraps the original fetch
    window.fetch = async function (url, options) {
      try {
        // Call the original fetch function
        const response = await originalFetch(url, options);

        // Check if the response has a JSON content type
        const contentType = response.headers.get("content-type");
        if (contentType && contentType.includes("application/json")) {
          // If the response is JSON, clone the response so we can read it twice
          const responseClone = response.clone();
          // Parse the JSON data from the cloned response
          const jsonData = await responseClone.json();
          // NOW: if url for https://chat.openai.com/backend-api/conversation/...
          // do something very special with it
          const pattern =
            /^https:\/\/chat\.openai\.com\/backend-api\/conversation\/(.*)/;
          const match = url.match(pattern);
          if (match) {
            const conversationId = match[1];
            console.log("conversationId", conversationId);
            console.log("jsonData", jsonData);
            const conversation = {
              id: conversationId,
              title: jsonData.title,
              create_time: jsonData.create_time,
              moderation_results: JSON.stringify(jsonData.moderation_results),
              current_node: jsonData.current_node,
              plugin_ids: JSON.stringify(jsonData.plugin_ids),
            };
            fetch(
              "https://starlette-cors-proxy-simonw-datasette.vercel.app/data/chatgpt_conversation/-/insert",
              {
                method: "POST",
                headers: {
                  "Content-Type": "application/json",
                  Authorization: `Bearer ${TOKEN}`,
                },
                mode: "cors",
                body: JSON.stringify({
                  row: conversation,
                  replace: true,
                }),
              }
            )
              .then((d) => d.json())
              .then((d) => console.log("d", d));
            const messages = Object.values(jsonData.mapping)
              .filter((m) => m.message)
              .map((message) => {
                const m = message.message;
                let content = "";
                if (m.content) {
                  if (m.content.text) {
                    content = m.content.text;
                  } else {
                    content = m.content.parts.join("\n");
                  }
                }
                return {
                  id: m.id,
                  conversation_id: conversationId,
                  author_role: m.author ? m.author.role : null,
                  author_metadata: JSON.stringify(
                    m.author ? m.author.metadata : {}
                  ),
                  create_time: m.create_time,
                  content: content,
                  end_turn: m.end_turn,
                  weight: m.weight,
                  metadata: JSON.stringify(m.metadata),
                  recipient: m.recipient,
                };
              });
            fetch(
              "https://starlette-cors-proxy-simonw-datasette.vercel.app/data/chatgpt_message/-/insert",
              {
                method: "POST",
                headers: {
                  "Content-Type": "application/json",
                  Authorization: `Bearer ${TOKEN}`,
                },
                mode: "cors",
                body: JSON.stringify({
                  rows: messages,
                  replace: true,
                }),
              }
            )
              .then((d) => d.json())
              .then((d) => console.log("d", d));
          }
        }

        // Return the original response
        return response;
      } catch (error) {
        // Handle any errors that occur during the fetch
        console.error("Error fetching and saving JSON:", error);
        throw error;
      }
    };

The fiddly bit here was writing the JavaScript that reshaped the ChatGPT JSON into the rows: [array-of-objects] format needed by the Datasette JSON APIs. I could probably have gotten ChatGPT to help with that, but in this case I pasted the SQL schema into a comment and let GitHub Copilot auto-complete parts of the JavaScript for me as I typed it.
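That reshaping step can also be pulled out into a pure function, which makes it easier to test away from the browser. This is a sketch based on the code above, not a replacement for it: `toDatasetteRows` is a hypothetical name, and the sample payload is invented, shaped only after the fields the code reads.

```javascript
// Hypothetical helper: turn one conversation payload into rows for the two tables
function toDatasetteRows(conversationId, jsonData) {
  const conversation = {
    id: conversationId,
    title: jsonData.title,
    create_time: jsonData.create_time,
    moderation_results: JSON.stringify(jsonData.moderation_results),
    current_node: jsonData.current_node,
    plugin_ids: JSON.stringify(jsonData.plugin_ids),
  };
  const messages = Object.values(jsonData.mapping || {})
    .filter((node) => node.message) // some nodes carry no message
    .map((node) => {
      const m = node.message;
      let content = "";
      if (m.content) {
        content = m.content.text
          ? m.content.text
          : (m.content.parts || []).join("\n");
      }
      return {
        id: m.id,
        conversation_id: conversationId,
        author_role: m.author ? m.author.role : null,
        author_metadata: JSON.stringify(m.author ? m.author.metadata : {}),
        create_time: m.create_time,
        content: content,
        end_turn: m.end_turn,
        weight: m.weight,
        metadata: JSON.stringify(m.metadata),
        recipient: m.recipient,
      };
    });
  return { conversation, messages };
}

// Invented sample payload (not real ChatGPT data)
const sample = {
  title: "Example chat",
  create_time: 1679900000.5,
  moderation_results: [],
  current_node: "node-2",
  mapping: {
    "node-1": { id: "node-1", message: null },
    "node-2": {
      id: "node-2",
      message: {
        id: "msg-1",
        author: { role: "user", metadata: {} },
        create_time: 1679900001,
        content: { content_type: "text", parts: ["Hello", "world"] },
        end_turn: null,
        weight: 1,
        metadata: {},
        recipient: "all",
      },
    },
  },
};

const rows = toDatasetteRows("abc-123", sample);
console.log(JSON.stringify({ rows: rows.messages, replace: true }, null, 2));
```

The object logged at the end has the same `{ rows: [...], replace: true }` shape as the body of the second `fetch()` call.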

And it works

Now I can paste the above block of code into the browser console on chat.openai.com and any time I click on one of my older conversations in the sidebar the fetch() will be intercepted and the JSON data will be saved to my Datasette Cloud instance.

It’s a lot more than just this project

This ChatGPT archiving problem is just one example from the past few months of things I’ve built that I wouldn’t have tackled without AI assistance.

It took me longer to write this up than it did to implement the entire project from start to finish!

When evaluating if a new technology is worth learning and adopting, I have two criteria:

- Does this let me build things that would have been impossible to build without it?

- Can this reduce the effort required for some projects such that they tip over from “not worth it” to “worth it” and I end up building them?

Large language models like GPT-3, GPT-4, LLaMA and Claude clearly meet both of those criteria, and their impact on point two keeps on getting stronger for me.

Some more examples

Here are a few more examples of projects I’ve worked on recently that wouldn’t have happened without at least some level of AI assistance:

- I used ChatGPT to generate the OpenAPI schema I needed to build the datasette-chatgpt-plugin plugin, allowing human language questions in ChatGPT to be answered by SQL queries executed against Datasette.

- Using ChatGPT to write AppleScript describes how I used ChatGPT to finally figure out enough AppleScript to liberate my notes data, resulting in building apple-notes-to-sqlite.

- datasette-paste-table isn’t in a usable state yet, but I built the first interactive prototype for that using ChatGPT.

- Convert git log output to JSON using jq is something I figured out using ChatGPT (transcript here).

- Learning Rust with ChatGPT, Copilot and Advent of Code describes one of my earlier efforts to use ChatGPT to help learn a completely new (to me) programming language.

- Reading thermometer temperatures over time from a video describes a project I built using ffmpeg and Google Cloud Vision.

- Interactive row selection prototype with Datasette explains a more complex HTML and JavaScript UI prototype I worked on.
