Thursday, 13th February 2025

URL-addressable Pyodide Python environments

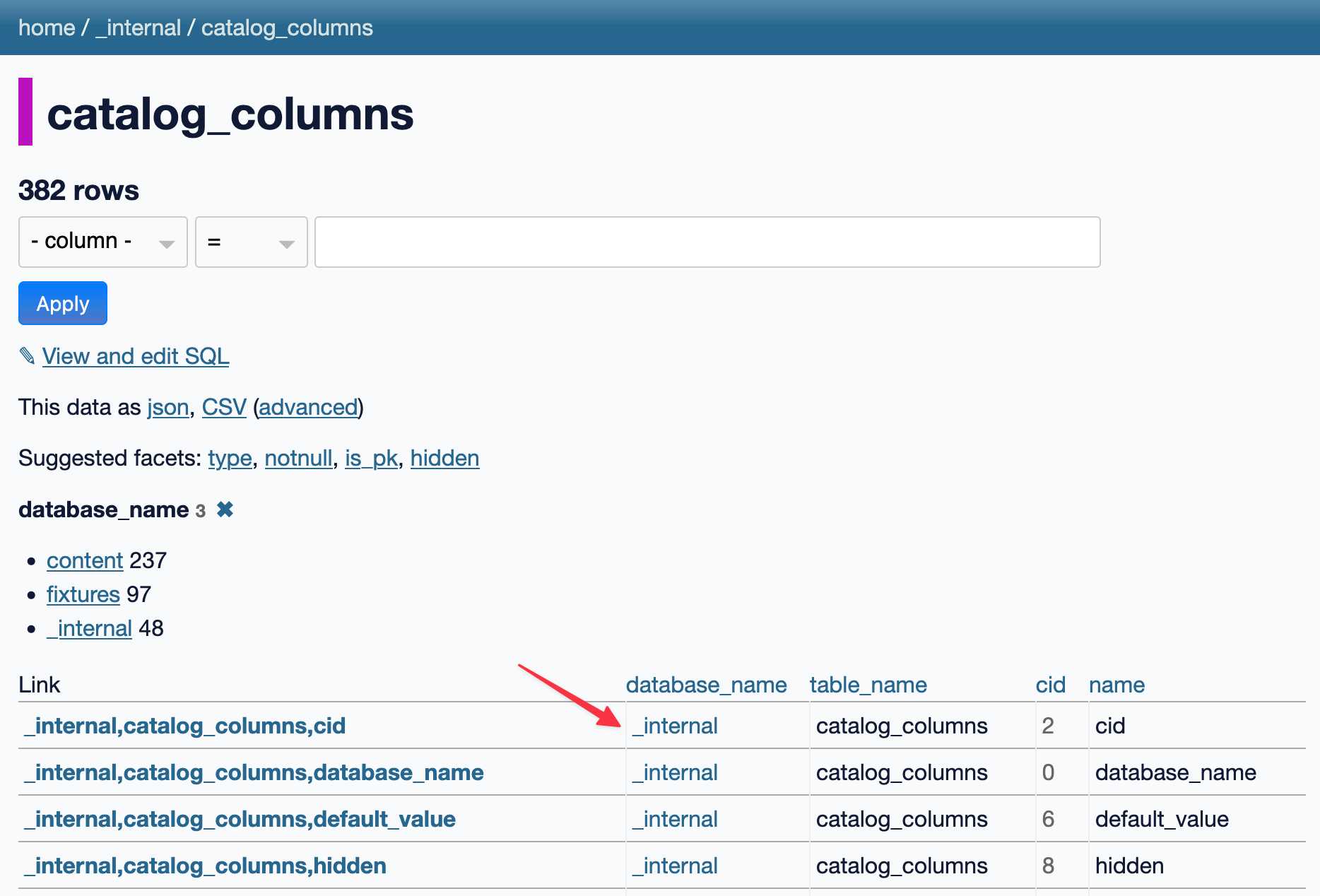

This evening I spotted an obscure bug in Datasette, using Datasette Lite. I figure it’s a good opportunity to highlight how useful it is to have a URL-addressable Python environment, powered by Pyodide and WebAssembly.

[... 1,905 words]

python-build-standalone now has Python 3.14.0a5. Exciting news from Charlie Marsh:

We just shipped the latest Python 3.14 alpha (3.14.0a5) to uv and python-build-standalone. This is the first release that includes the tail-calling interpreter.

Our initial benchmarks show a ~20-30% performance improvement across CPython.

This optimization was first discussed in faster-cpython in January 2024. Ken Jin landed it earlier this month and it was included in the 3.14.0a5 release. The alpha release notes say:

A new type of interpreter based on tail calls has been added to CPython. For certain newer compilers, this interpreter provides significantly better performance. Preliminary numbers on our machines suggest anywhere from -3% to 30% faster Python code, and a geometric mean of 9-15% faster on pyperformance depending on platform and architecture. The baseline is Python 3.14 built with Clang 19 without this new interpreter.

This interpreter currently only works with Clang 19 and newer on x86-64 and AArch64 architectures. However, we expect that a future release of GCC will support this as well.

Including this in python-build-standalone means it's now trivial to try out via uv. I upgraded to the latest uv like this:

pip install -U uv

Then ran uv python list to see the available versions:

cpython-3.14.0a5+freethreaded-macos-aarch64-none <download available>

cpython-3.14.0a5-macos-aarch64-none <download available>

cpython-3.13.2+freethreaded-macos-aarch64-none <download available>

cpython-3.13.2-macos-aarch64-none <download available>

cpython-3.13.1-macos-aarch64-none /opt/homebrew/opt/python@3.13/bin/python3.13 -> ../Frameworks/Python.framework/Versions/3.13/bin/python3.13

I downloaded the new alpha like this:

uv python install cpython-3.14.0a5

And tried it out like so:

uv run --python 3.14.0a5 python

The Astral team have been using Ken's bm_pystones.py benchmark script. I grabbed a copy like this:

wget 'https://gist.githubusercontent.com/Fidget-Spinner/e7bf204bf605680b0fc1540fe3777acf/raw/fa85c0f3464021a683245f075505860db5e8ba6b/bm_pystones.py'

And ran it with uv:

uv run --python 3.14.0a5 bm_pystones.py

Giving:

Pystone(1.1) time for 50000 passes = 0.0511138

This machine benchmarks at 978209 pystones/second
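As a quick sanity check, the second figure is just the pass count divided by the elapsed time. Here's a minimal sketch of that arithmetic using the two numbers printed above (the variable names are mine, not taken from bm_pystones.py):

```python
# Values copied from the benchmark output above.
passes = 50000
elapsed_seconds = 0.0511138

# Throughput is simply passes per second.
pystones_per_second = passes / elapsed_seconds
print(f"This machine benchmarks at {pystones_per_second:.0f} pystones/second")
```

The script itself works from the unrounded timing, so a reconstruction from the printed value could in principle be off by one in the last digit.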

Inspired by Charlie's example I decided to try the hyperfine benchmarking tool, which can run multiple commands to statistically compare their performance. I came up with this recipe:

brew install hyperfine

hyperfine \
  "uv run --python 3.14.0a5 bm_pystones.py" \
  "uv run --python 3.13 bm_pystones.py" \
  -n tail-calling \
  -n baseline \
  --warmup 10

![Running that command produced: Benchmark 1: tail-calling Time (mean ± σ): 71.5 ms ± 0.9 ms [User: 65.3 ms, System: 5.0 ms] Range (min … max): 69.7 ms … 73.1 ms 40 runs Benchmark 2: baseline Time (mean ± σ): 79.7 ms ± 0.9 ms [User: 73.9 ms, System: 4.5 ms] Range (min … max): 78.5 ms … 82.3 ms 36 runs Summary tail-calling ran 1.12 ± 0.02 times faster than baseline](https://static.simonwillison.net/static/2025/hyperfine-uv.jpg)

So 3.14.0a5 scored 1.12 times faster than 3.13 on the benchmark (on my extremely overloaded M2 MacBook Pro).
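The summary line can be roughly reproduced from the two means and standard deviations in the hyperfine output. This is a sketch that assumes ordinary first-order error propagation for a ratio of two independent measurements; I haven't checked that hyperfine computes its ± figure exactly this way.

```python
import math

# Means and standard deviations (in ms) copied from the hyperfine output above.
tail_mean, tail_sd = 71.5, 0.9
base_mean, base_sd = 79.7, 0.9

# How many times faster the tail-calling run was than the baseline.
ratio = base_mean / tail_mean

# First-order error propagation for a ratio of two independent quantities.
ratio_sd = ratio * math.sqrt((tail_sd / tail_mean) ** 2 + (base_sd / base_mean) ** 2)

print(f"{ratio:.2f} ± {ratio_sd:.2f}")
```

From these rounded inputs the ratio comes out at about 1.11 rather than 1.12, presumably because hyperfine works from the unrounded timings. Either way it sits comfortably inside the reported ± 0.02.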

shot-scraper 1.6 with support for HTTP Archives. New release of my shot-scraper CLI tool for taking screenshots and scraping web pages.

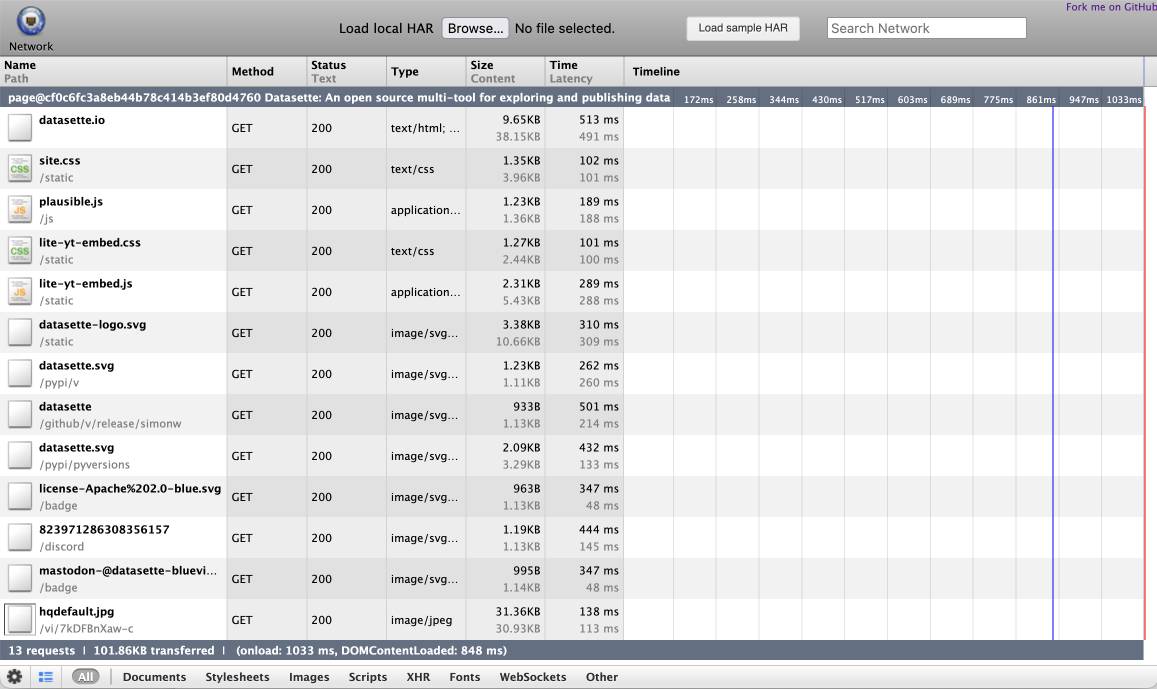

The big new feature is HTTP Archive (HAR) support. The new shot-scraper har command creates an archive of a page and all of its dependencies like this:

shot-scraper har https://datasette.io/

This produces a datasette-io.har file (currently 163KB) which is JSON representing the full set of requests used to render that page. Here's a copy of that file. You can visualize that here using ericduran.github.io/chromeHAR.

That JSON includes full copies of all of the responses, base64 encoded if they are binary files such as images.
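To give a feel for that structure, here's a small sketch that walks the entries in a HAR and decodes each response body. It relies only on fields from the HAR 1.2 format (log.entries, request.url, response.status, response.content). The summarize_har name and the tiny sample archive are made up for illustration.

```python
import base64


def summarize_har(har):
    """Return (status, mime type, url, body bytes) for each entry in a HAR dict."""
    rows = []
    for entry in har["log"]["entries"]:
        content = entry["response"].get("content", {})
        text = content.get("text", "")
        # Binary bodies are stored as base64 and flagged with "encoding".
        if content.get("encoding") == "base64":
            body = base64.b64decode(text)
        else:
            body = text.encode("utf-8")
        rows.append(
            (
                entry["response"]["status"],
                content.get("mimeType", ""),
                entry["request"]["url"],
                body,
            )
        )
    return rows


# A tiny hand-written HAR, standing in for a real file such as datasette-io.har.
sample_har = {
    "log": {
        "entries": [
            {
                "request": {"url": "https://example.com/"},
                "response": {
                    "status": 200,
                    "content": {"mimeType": "text/html", "text": "<h1>Hi</h1>"},
                },
            },
            {
                "request": {"url": "https://example.com/pixel.png"},
                "response": {
                    "status": 200,
                    "content": {
                        "mimeType": "image/png",
                        "encoding": "base64",
                        "text": base64.b64encode(b"\x89PNG").decode("ascii"),
                    },
                },
            },
        ]
    }
}

for status, mime, url, body in summarize_har(sample_har):
    print(status, mime, url, len(body), "bytes")
```

For a real archive you would load the file with json.load() and pass the resulting dictionary to the same function.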

You can add the --zip flag to instead get a datasette-io.har.zip file, containing JSON data in har.har but with the response bodies saved as separate files in that archive.

The shot-scraper multi command lets you run shot-scraper against multiple URLs in sequence, specified using a YAML file. That command now takes a --har option (or --har-zip or --har-file name-of-file), described in the documentation, which will produce a HAR at the same time as taking the screenshots.

Shots are usually defined in YAML that looks like this:

- output: example.com.png
  url: http://www.example.com/
- output: w3c.org.png
  url: https://www.w3.org/

You can now omit the output: keys and generate a HAR file without taking any screenshots at all:

- url: http://www.example.com/
- url: https://www.w3.org/

Run like this:

shot-scraper multi shots.yml --har

Which outputs:

Skipping screenshot of 'https://www.example.com/'

Skipping screenshot of 'https://www.w3.org/'

Wrote to HAR file: trace.har

shot-scraper is built on top of Playwright, and the new features use the browser.new_context(record_har_path=...) parameter.
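Here's a minimal sketch of that Playwright parameter used directly from Python. This is not shot-scraper's actual implementation. The record_har function name and the default file name are my own, and it assumes you have run pip install playwright followed by playwright install chromium.

```python
def record_har(url, har_path="trace.har"):
    """Load url in headless Chromium and write a HAR of its requests to har_path."""
    # Imported here so this file can be loaded without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch()
        # record_har_path tells Playwright to capture network traffic for this context.
        context = browser.new_context(record_har_path=har_path)
        page = context.new_page()
        page.goto(url)
        # The HAR file is only written to disk when the context is closed.
        context.close()
        browser.close()
    return har_path
```

Calling record_har("https://datasette.io/") should leave a trace.har file in the current directory. Closing the context is the important step, since Playwright only saves the archive at that point.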