Initial impressions of GPT-4.5

27th February 2025

GPT-4.5 is out today as a “research preview”—it’s available to OpenAI Pro ($200/month) customers and to developers with an API key. OpenAI also published a GPT-4.5 system card.

I’ve added it to LLM so you can run it like this:

    llm -m gpt-4.5-preview 'impress me'

It’s very expensive right now: currently $75.00 per million input tokens and $150/million for output! For comparison, o1 is $15/$60 and GPT-4o is $2.50/$10. GPT-4o mini is $0.15/$0.60 making OpenAI’s least expensive model 500x cheaper than GPT-4.5 for input and 250x cheaper for output!
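The ratios quoted above follow directly from the listed prices. Here is a minimal Python sketch that recomputes them, using only the per-million-token prices from this post. The dictionary layout and the `price_ratio` helper are illustrative names of my own, not part of any OpenAI or LLM API.

```python
# Prices in USD per million tokens, as quoted in this post (February 2025).
PRICES = {
    "gpt-4.5-preview": {"input": 75.00, "output": 150.00},
    "o1": {"input": 15.00, "output": 60.00},
    "gpt-4o": {"input": 2.50, "output": 10.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}


def price_ratio(expensive: str, cheap: str, kind: str) -> float:
    """Return how many times more `expensive` costs than `cheap`.

    `kind` is either "input" or "output".
    """
    return PRICES[expensive][kind] / PRICES[cheap][kind]


# Compare GPT-4.5 against each of the other models mentioned.
for model in ("o1", "gpt-4o", "gpt-4o-mini"):
    for kind in ("input", "output"):
        ratio = price_ratio("gpt-4.5-preview", model, kind)
        print(f"GPT-4.5 vs {model} ({kind}): {ratio:.1f}x")
```

Keeping the prices in one table makes it easy to update the comparison if OpenAI changes them.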

As far as I can tell almost all of its key characteristics are the same as GPT-4o: it has the same 128,000 context length, handles the same inputs (text and image) and even has the same training cut-off date of October 2023.

So what’s it better at? According to OpenAI’s blog post:

Combining deep understanding of the world with improved collaboration results in a model that integrates ideas naturally in warm and intuitive conversations that are more attuned to human collaboration. GPT‑4.5 has a better understanding of what humans mean and interprets subtle cues or implicit expectations with greater nuance and “EQ”. GPT‑4.5 also shows stronger aesthetic intuition and creativity. It excels at helping with writing and design.

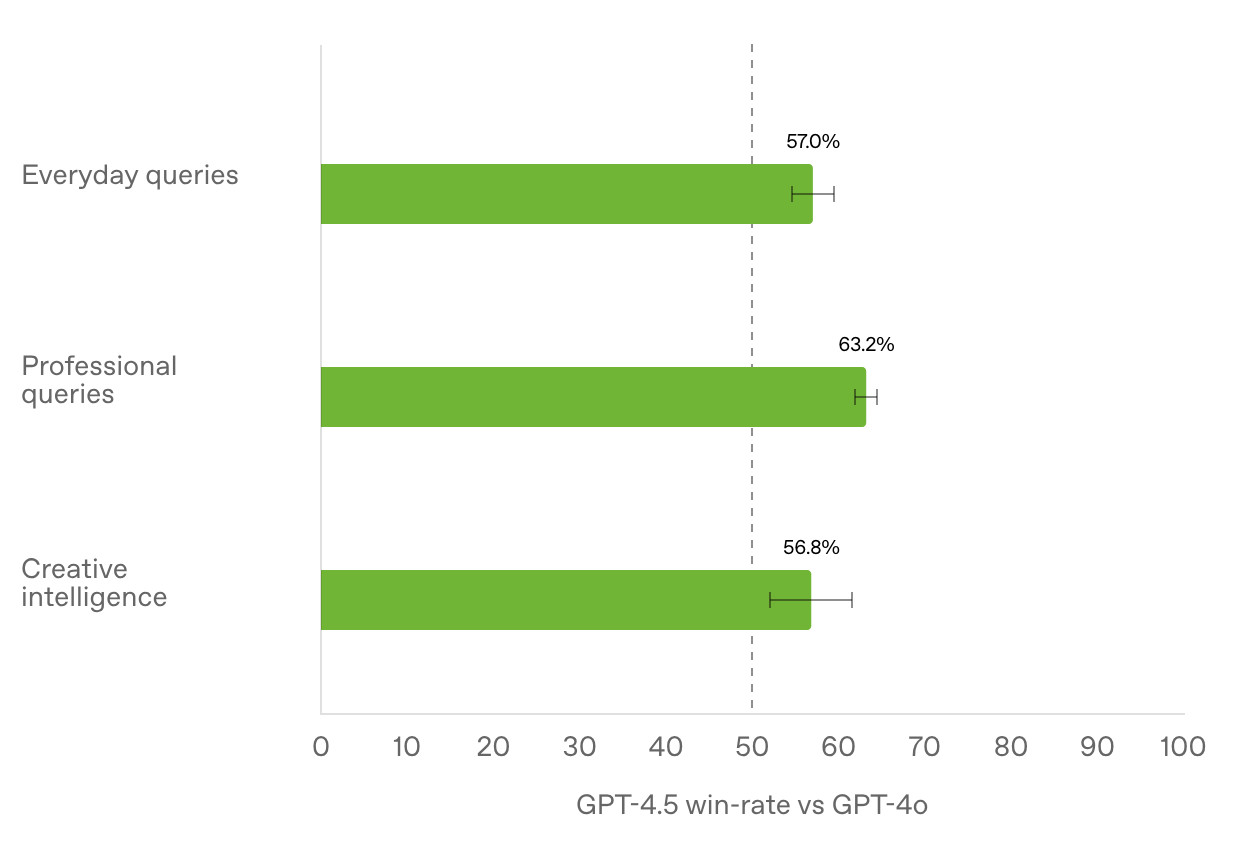

They include this chart of win-rates against GPT-4o, where it wins between 56.8% and 63.2% of the time for different classes of query:

They also report a SimpleQA hallucination rate of 37.1%—a big improvement on GPT-4o (61.8%) and o3-mini (80.3%) but not much better than o1 (44%). The coding benchmarks all appear to score similarly to o3-mini.

Paul Gauthier reports a score of 45% on Aider’s polyglot coding benchmark—below DeepSeek V3 (48%), Sonnet 3.7 (60% without thinking, 65% with thinking) and o3-mini (60.4%) but significantly ahead of GPT-4o (23.1%).

OpenAI don’t seem to have enormous confidence in the model themselves:

GPT‑4.5 is a very large and compute-intensive model, making it more expensive than and not a replacement for GPT‑4o. Because of this, we’re evaluating whether to continue serving it in the API long-term as we balance supporting current capabilities with building future models.

It drew me this for “Generate an SVG of a pelican riding a bicycle”:

Accessed via the API the model feels weirdly slow—here’s an animation showing how that pelican was rendered—the full response took 112 seconds!

OpenAI’s Rapha Gontijo Lopes calls this “(probably) the largest model in the world”—evidently the problem with large models is that they are a whole lot slower than their smaller alternatives!

Andrej Karpathy has published some notes on the new model, where he highlights that the improvements are limited considering the 10x increase in training compute compared to GPT-4:

I remember being a part of a hackathon trying to find concrete prompts where GPT4 outperformed 3.5. They definitely existed, but clear and concrete “slam dunk” examples were difficult to find. [...] So it is with that expectation that I went into testing GPT4.5, which I had access to for a few days, and which saw 10X more pretraining compute than GPT4. And I feel like, once again, I’m in the same hackathon 2 years ago. Everything is a little bit better and it’s awesome, but also not exactly in ways that are trivial to point to.

Andrej is also running a fun vibes-based polling evaluation comparing output from GPT-4.5 and GPT-4o. Update: GPT-4o won 4/5 rounds!

There’s an extensive thread about GPT-4.5 on Hacker News. When it hit 324 comments I ran a summary of it using GPT-4.5 itself with this script:

    hn-summary.sh 43197872 -m gpt-4.5-preview

Here’s the result, which took 154 seconds to generate and cost $2.11 (25797 input tokens and 1225 output tokens, price calculated using my LLM pricing calculator).
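The cost of that run can be roughly reproduced from the token counts and the GPT-4.5 prices quoted earlier in this post. This is a minimal sketch, assuming the 1,225 figure is the output token count. The `request_cost` function is a hypothetical helper, not the code behind the pricing calculator.

```python
def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Return the cost in USD of one request, given per-million-token prices."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


# Token counts from the Hacker News summary run; prices are GPT-4.5's
# $75 (input) and $150 (output) per million tokens.
cost = request_cost(25_797, 1_225, 75.00, 150.00)
print(f"${cost:.4f}")
```

This comes to a little under $2.12, with almost all of it spent on input tokens. The small gap from the $2.11 reported above is presumably down to how the calculator rounds.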

For comparison, I ran the same prompt against GPT-4o, GPT-4o Mini, Claude 3.7 Sonnet, Claude 3.5 Haiku, Gemini 2.0 Flash, Gemini 2.0 Flash Lite and Gemini 2.0 Pro.
