Monday, 2nd June 2025

My constant struggle is how to convince them that getting an education in the humanities is not about regurgitating ideas/knowledge that already exist. It’s about generating new knowledge, striving for creative insights, and having thoughts that haven’t been had before. I don’t want you to learn facts. I want you to think. To notice. To question. To reconsider. To challenge. Students don’t yet get that ChatGPT only rearranges preexisting ideas, whether they are accurate or not.

And even if the information was guaranteed to be accurate, they’re not learning anything by plugging a prompt in and turning in the resulting paper. They’ve bypassed the entire process of learning.

claude-trace. I've been thinking for a while that it would be interesting to run some kind of HTTP proxy against the Claude Code CLI app to intercept its API traffic and take a peek at how it works.

Mario Zechner just published a really nice version of that. It works by monkey-patching global.fetch and the Node HTTP library and then running Claude Code using Node with an extra --require interceptor-loader.js option to inject the patches.

Provided you have Claude Code installed and configured already, an easy way to run it is via npx like this:

npx @mariozechner/claude-trace --include-all-requests

I tried it just now and it logs request/response pairs to a .claude-trace folder, as both jsonl files and HTML.
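To make the monkey-patching idea concrete, here's a minimal sketch of how wrapping global.fetch can capture request/response pairs. This is not claude-trace's actual code: the function name installFetchLogger, the captured array and the file names in the comments are all made up for illustration, and it skips the Node HTTP library patching entirely. The only real pieces are the fetch API itself and Node's --require flag.

```javascript
// Hypothetical sketch of a fetch interceptor. NOT claude-trace's real code.
// A file like this could be preloaded with Node's --require flag, e.g.:
//   node --require ./fetch-logger.js some-cli.js
// (fetch-logger.js and some-cli.js are made-up names.)

const captured = []; // in-memory log; a real tool would write to disk instead

function installFetchLogger(target = globalThis) {
  const originalFetch = target.fetch;

  target.fetch = async function patchedFetch(input, init) {
    // input may be a string, a URL object or a Request object
    const url = typeof input === "string" ? input : input.url ?? String(input);
    const method = init?.method ?? input.method ?? "GET";
    const response = await originalFetch.call(target, input, init);

    // Read a clone in the background, so the caller still receives an
    // unread body and is not blocked while a streaming response finishes.
    response
      .clone()
      .text()
      .then((body) => {
        captured.push({ url, method, status: response.status, body });
      })
      .catch(() => {}); // logging should never break the real request

    return response;
  };

  // Return an undo function that puts the original fetch back.
  return () => {
    target.fetch = originalFetch;
  };
}

installFetchLogger();
```

Reading from response.clone() rather than the response itself matters here: a body can only be consumed once, so logging the original would leave the calling code with nothing to read.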

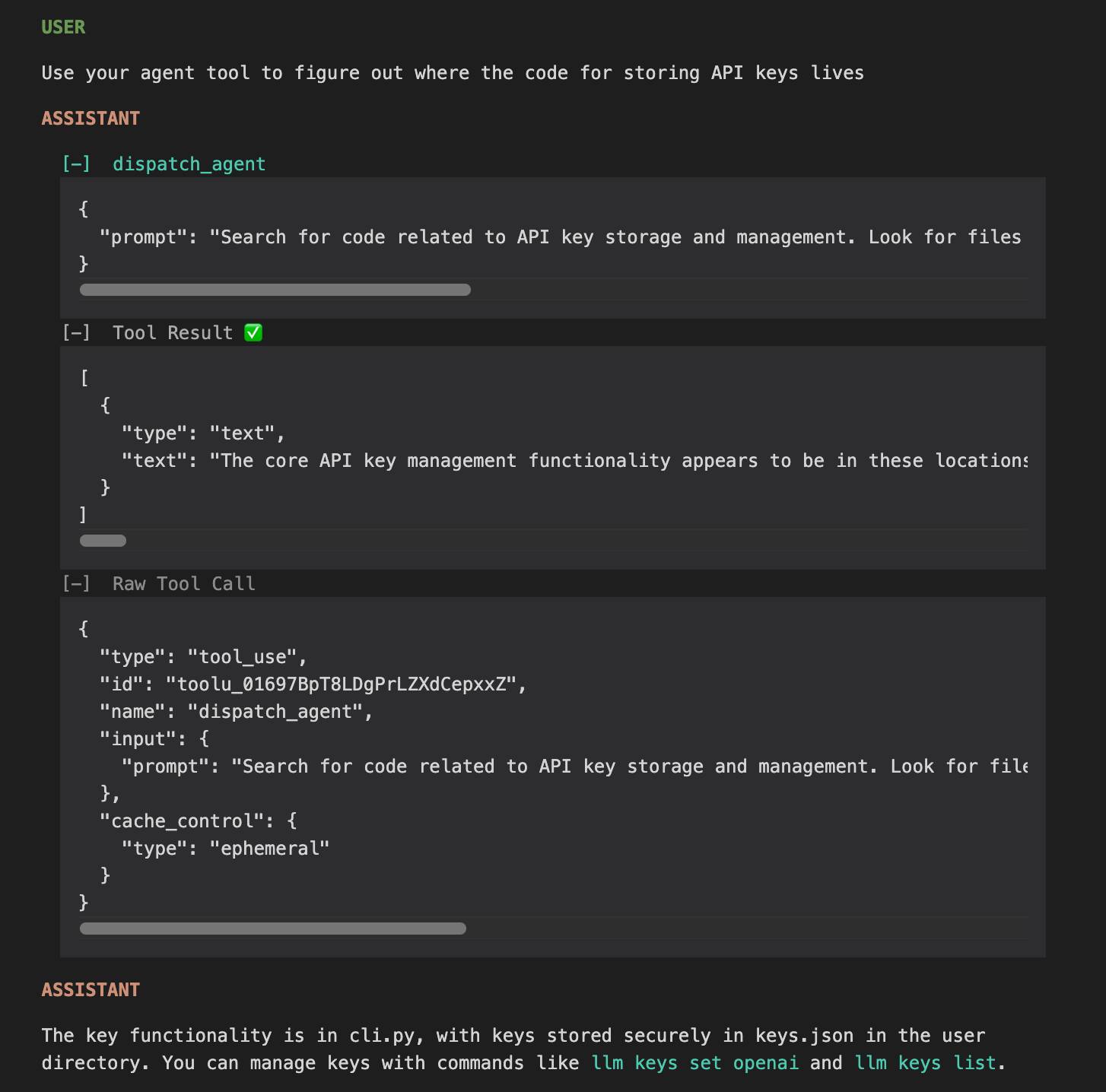

The HTML interface is really nice. Here's an example trace - I started everything running in my llm checkout and asked Claude to "tell me about this software" and then "Use your agent tool to figure out where the code for storing API keys lives".

I specifically requested the "agent" tool here because I noticed a tool called dispatch_agent among the tool definitions, with this description (emphasis mine):

Launch a new agent that has access to the following tools: GlobTool, GrepTool, LS, View, ReadNotebook. When you are searching for a keyword or file and are not confident that you will find the right match on the first try, use the Agent tool to perform the search for you. For example:

- If you are searching for a keyword like "config" or "logger", the Agent tool is appropriate

- If you want to read a specific file path, use the View or GlobTool tool instead of the Agent tool, to find the match more quickly

- If you are searching for a specific class definition like "class Foo", use the GlobTool tool instead, to find the match more quickly

Usage notes:

- Launch multiple agents concurrently whenever possible, to maximize performance; to do that, use a single message with multiple tool uses

- When the agent is done, it will return a single message back to you. The result returned by the agent is not visible to the user. To show the user the result, you should send a text message back to the user with a concise summary of the result.

- Each agent invocation is stateless. You will not be able to send additional messages to the agent, nor will the agent be able to communicate with you outside of its final report. Therefore, your prompt should contain a highly detailed task description for the agent to perform autonomously and you should specify exactly what information the agent should return back to you in its final and only message to you.

- The agent's outputs should generally be trusted

- IMPORTANT: The agent can not use Bash, Replace, Edit, NotebookEditCell, so can not modify files. If you want to use these tools, use them directly instead of going through the agent.

I'd heard that Claude Code uses the LLMs-calling-other-LLMs pattern - one of the reasons it can burn through tokens so fast! It was interesting to see how this works under the hood - it's a tool call which is designed to be used concurrently (by triggering multiple tool uses at once).
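The concurrent, stateless shape described in that tool definition can be sketched in a few lines. This is only an illustration of the pattern, not how Claude Code implements it: dispatchAgents and fakeAgent are hypothetical names, and fakeAgent stands in for what would really be a separate model call.

```javascript
// Hypothetical sketch of the fan-out pattern. Not Claude Code's implementation.
// Each agent gets one self-contained prompt and returns one final report.
async function dispatchAgents(prompts, runAgent) {
  // map() starts every agent straight away; Promise.all waits for all of them.
  const reports = await Promise.all(prompts.map((prompt) => runAgent(prompt)));
  return prompts.map((prompt, i) => ({ prompt, report: reports[i] }));
}

// Stand-in for a real model call (an assumption for this example).
async function fakeAgent(prompt) {
  return `report for: ${prompt}`;
}

dispatchAgents(
  ["find where API keys are stored", "find the config loader"],
  fakeAgent
).then((results) => console.log(results));
```

Because each invocation is stateless, every prompt has to carry the full task description, and each extra agent is another full round of tokens.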

Anthropic have deliberately chosen not to publish any of the prompts used by Claude Code. As with other hidden system prompts, the prompts themselves mainly act as a missing manual for understanding exactly what these tools can do for you and how they work.

Directive prologues and JavaScript dark matter. Tom MacWright does some archaeology and describes the three different magic comment formats that can affect how JavaScript/TypeScript files are processed:

- "a directive"; is a directive prologue, most commonly seen with "use strict";.

- /** @aPragma */ is a pragma for a transpiler, often used for /** @jsx h */.

- //# aMagicComment is usually used for source maps - //# sourceMappingURL=<url> - but also just got used by V8 for their new explicit compile hints feature.
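Here's a small sketch showing all three forms in one file. The map file name example.js.map is made up, and the new Function() calls are just my way of demonstrating that a directive only counts when it comes before any other statement in the body. The pragma and the magic comment are ordinary comments as far as the running code is concerned.

```javascript
/** @jsx h */
// ^ Pragma: a block comment that a transpiler may read. It does nothing at runtime.

// Directive prologue: a string-literal statement that only counts when it
// comes before any other statement in a script or function body.
const inPrologue = new Function('"use strict"; return this === undefined;');
const tooLate = new Function('var x = 1; "use strict"; return this === undefined;');

console.log(inPrologue()); // true: strict mode is on, so `this` is undefined
console.log(tooLate());    // false: the string is just an ignored expression

// Magic comment: read by tooling such as debuggers, ignored by the code itself.
// example.js.map is a hypothetical file name.
//# sourceMappingURL=example.js.map
```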

It took me a few days to build the library [cloudflare/workers-oauth-provider] with AI.

I estimate it would have taken a few weeks, maybe months to write by hand.

That said, this is a pretty ideal use case: implementing a well-known standard on a well-known platform with a clear API spec.

In my attempts to make changes to the Workers Runtime itself using AI, I've generally not felt like it saved much time. Though, people who don't know the codebase as well as I do have reported it helped them a lot.

I have found AI incredibly useful when I jump into other people's complex codebases, that I'm not familiar with. I now feel like I'm comfortable doing that, since AI can help me find my way around very quickly, whereas previously I generally shied away from jumping in and would instead try to get someone on the team to make whatever change I needed.

— Kenton Varda, in a Hacker News comment

My AI Skeptic Friends Are All Nuts. Thomas Ptacek's frustrated tone throughout this piece perfectly captures how it feels sometimes to be an experienced programmer trying to argue that "LLMs are actually really useful" in many corners of the internet.

Some of the smartest people I know share a bone-deep belief that AI is a fad — the next iteration of NFT mania. I’ve been reluctant to push back on them, because, well, they’re smarter than me. But their arguments are unserious, and worth confronting. Extraordinarily talented people are doing work that LLMs already do better, out of spite. [...]

You’ve always been responsible for what you merge to main. You were five years ago. And you are tomorrow, whether or not you use an LLM. [...]

Reading other people’s code is part of the job. If you can’t metabolize the boring, repetitive code an LLM generates: skills issue! How are you handling the chaos human developers turn out on a deadline?

And on the threat of AI taking jobs from engineers (with a link to an old comment of mine):

So does open source. We used to pay good money for databases.

We're a field premised on automating other people's jobs away. "Productivity gains," say the economists. You get what that means, right? Fewer people doing the same stuff. Talked to a travel agent lately? Or a floor broker? Or a record store clerk? Or a darkroom tech?

The post has already attracted 695 comments on Hacker News in just two hours, which feels like some kind of record even by the usual standards of fights about AI on the internet.

Update: Thomas, another hundred or so comments later:

A lot of people are misunderstanding the goal of the post, which is not necessarily to persuade them, but rather to disrupt a static, unproductive equilibrium of uninformed arguments about how this stuff works. The commentary I've read today has to my mind vindicated that premise.