7th May 2025

Create and edit images with Gemini 2.0 in preview. Gemini 2.0 Flash has had image generation capabilities for a while, and they're now available via the paid Gemini API, at 3.9 cents per generated image.

According to the API documentation you need to use the new gemini-2.0-flash-preview-image-generation model ID and specify {"responseModalities":["TEXT","IMAGE"]} as part of your request.

Here's an example that calls the API using curl (fetching the Gemini API key with the llm keys get gemini command):

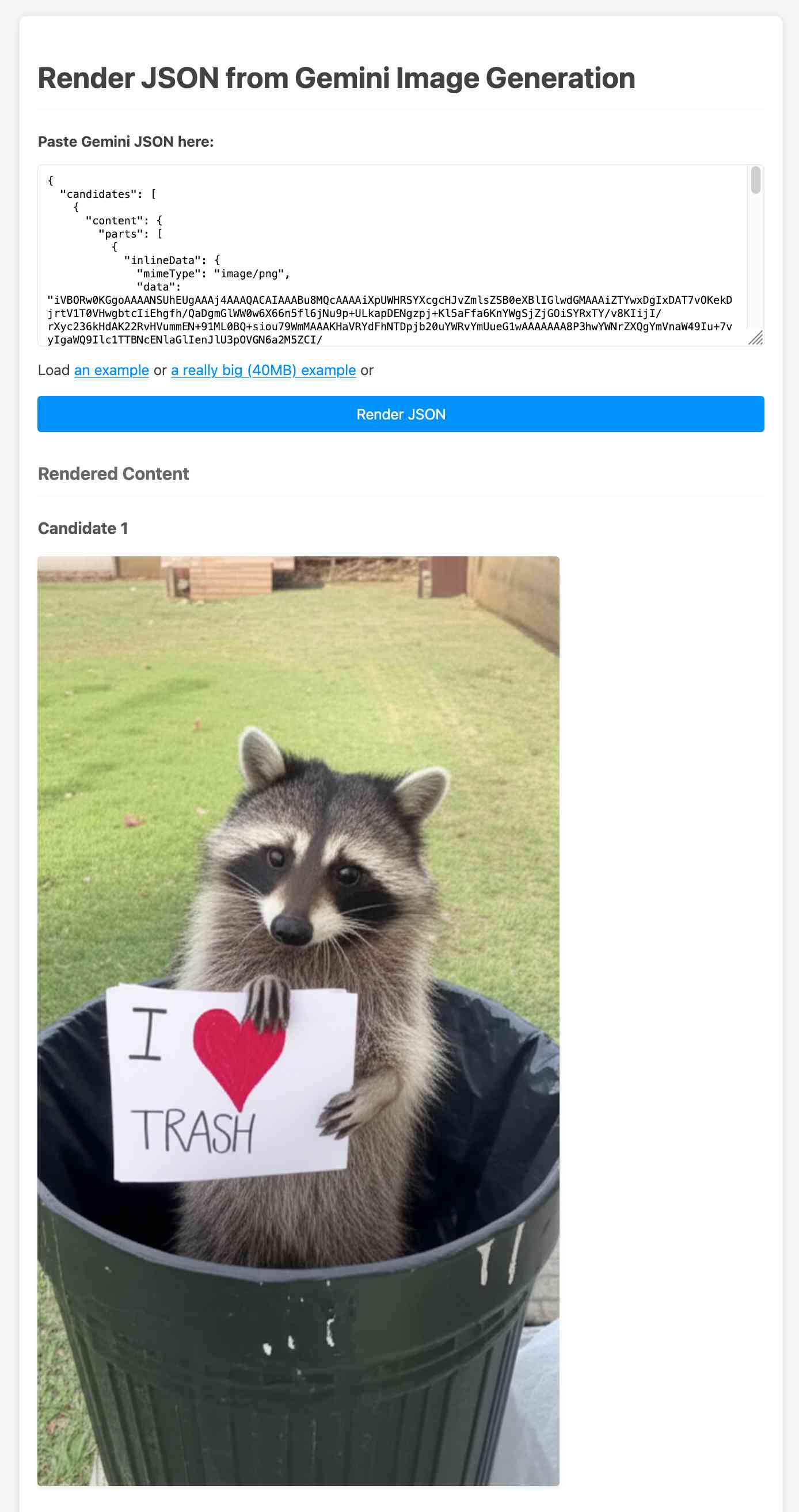

    curl -s -X POST \
      "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key=$(llm keys get gemini)" \
      -H "Content-Type: application/json" \
      -d '{
        "contents": [{
          "parts": [
            {"text": "Photo of a raccoon in a trash can with a paw-written sign that says I love trash"}
          ]
        }],
        "generationConfig":{"responseModalities":["TEXT","IMAGE"]}
      }' > /tmp/raccoon.json
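The command above only saves the raw JSON. As a rough sketch of pulling the picture out of that file, the following assumes `jq` is installed and that image parts in the response carry their base64 payload under `inlineData.data`. That shape is an assumption worth checking against the saved file, and `extract_first_image` is a hypothetical helper name, not part of any tool mentioned here.

```shell
# Hypothetical helper: write the first inline image found in a saved
# generateContent response to a file.
# Assumptions: jq is installed, and image parts look like
#   {"inlineData": {"mimeType": "...", "data": "<base64>"}}
extract_first_image() {
  response_json="$1"
  output_file="$2"
  jq -r '
    [.candidates[0].content.parts[]? | select(.inlineData != null) | .inlineData.data]
    | first // empty
  ' "$response_json" | base64 --decode > "$output_file"
}

# Example usage (paths are illustrative):
# extract_first_image /tmp/raccoon.json /tmp/raccoon.png
```

Text parts have no `inlineData` key, so the `select` skips them. If the response contains no image at all, the output file ends up empty.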

Here's the response. I got Gemini 2.5 Pro to vibe-code me a new debug tool for visualizing that JSON. If you visit that tool and click the "Load an example" link you'll see the result of the raccoon image visualized:

The other prompt I tried was this one:

Provide a vegetarian recipe for butter chicken but with chickpeas not chicken and include many inline illustrations along the way

The result of that one was a 41MB JSON file(!) containing 28 images - which presumably cost over a dollar since images are 3.9 cents each.
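The "over a dollar" estimate is simple arithmetic on the two figures quoted above. A minimal sketch, with `image_cost_dollars` as a hypothetical helper name, and assuming the per-image price is the only charge that matters here:

```shell
# Estimate the cost of a response from its image count.
# Both inputs come from the post: 28 images at 3.9 cents per image.
image_cost_dollars() {
  awk -v n="$1" -v cents="$2" 'BEGIN { printf "%.2f\n", n * cents / 100 }'
}

image_cost_dollars 28 3.9   # prints 1.09
```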

Some of the illustrations it chose for that one were somewhat unexpected:

If you want to see that one you can click the "Load a really big example" link in the debug tool, then wait for your browser to fetch and render the full 41MB JSON file.

The most interesting feature of Gemini (as with GPT-4o images) is the ability to accept images as inputs. I tried that out with this pelican photo:

    cat > /tmp/request.json << EOF
    {
      "contents": [{
        "parts":[
          {"text": "Modify this photo to add an inappropriate hat"},
          {
            "inline_data": {
              "mime_type":"image/jpeg",
              "data": "$(base64 -i pelican.jpg)"
            }
          }
        ]
      }],
      "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]}
    }
    EOF

    # Execute the curl command with the JSON file
    curl -X POST \
      'https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key='$(llm keys get gemini) \
      -H 'Content-Type: application/json' \
      -d @/tmp/request.json \
      > /tmp/out.json
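One portability note on the `$(base64 -i pelican.jpg)` substitution: macOS `base64` emits a single line, but GNU `base64` on Linux wraps its output at 76 columns by default, and raw newlines inside a JSON string make the request body invalid. A small sketch of a wrapper that should behave the same on both (`base64_one_line` is a hypothetical name):

```shell
# Hypothetical helper: base64-encode a file as one unbroken line, so the
# result can be dropped into a JSON string. Reads the file on stdin and
# strips any line wrapping the local base64 implementation adds.
base64_one_line() {
  base64 < "$1" | tr -d '\n'
}

# Example usage inside the heredoc (illustrative):
#   "data": "$(base64_one_line pelican.jpg)"
```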

And now the pelican is wearing a hat:
