19th November 2025 - Link Blog

Building more with GPT-5.1-Codex-Max (via) Hot on the heels of yesterday's Gemini 3 Pro release comes a new model from OpenAI called GPT-5.1-Codex-Max.

(Remember when GPT-5 was meant to bring in a new era of less confusing model names? That didn't last!)

It's currently only available through their Codex CLI coding agent, where it's the new default model:

Starting today, GPT‑5.1-Codex-Max will replace GPT‑5.1-Codex as the default model in Codex surfaces. Unlike GPT‑5.1, which is a general-purpose model, we recommend using GPT‑5.1-Codex-Max and the Codex family of models only for agentic coding tasks in Codex or Codex-like environments.

It's not available via the API yet but should be shortly.

The timing of this release is interesting given that Gemini 3 Pro appears to have aced almost all of the benchmarks just yesterday. It's reminiscent of the period in 2024 when OpenAI consistently made big announcements that happened to coincide with Gemini releases.

OpenAI's self-reported SWE-Bench Verified score is particularly notable: 76.5% for thinking level "high" and 77.9% for the new "xhigh". That was the one benchmark where Gemini 3 Pro was out-performed by Claude Sonnet 4.5 - Gemini 3 Pro got 76.2% and Sonnet 4.5 got 77.2%. OpenAI now have the highest scoring model there by a full .7 of a percentage point!

They also report a score of 58.1% on Terminal Bench 2.0, beating Gemini 3 Pro's 54.2% (and Sonnet 4.5's 42.8%.)

The most intriguing part of this announcement concerns the model's approach to long context problems:

GPT‑5.1-Codex-Max is built for long-running, detailed work. It’s our first model natively trained to operate across multiple context windows through a process called compaction, coherently working over millions of tokens in a single task. [...]

Compaction enables GPT‑5.1-Codex-Max to complete tasks that would have previously failed due to context-window limits, such as complex refactors and long-running agent loops by pruning its history while preserving the most important context over long horizons. In Codex applications, GPT‑5.1-Codex-Max automatically compacts its session when it approaches its context window limit, giving it a fresh context window. It repeats this process until the task is completed.

There's a lot of confusion on Hacker News about what this actually means. Claude Code already does a version of compaction, automatically summarizing previous turns when the context runs out. Does this just mean that Codex-Max is better at that process?

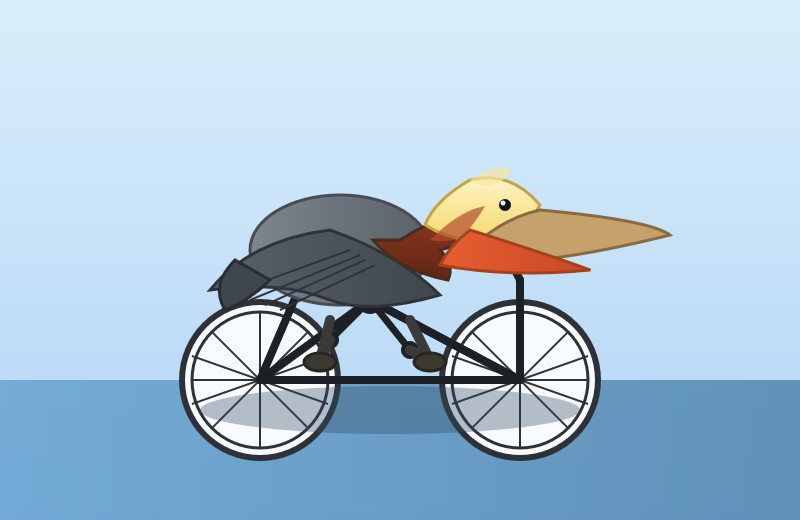

I had it draw me a couple of pelicans by typing "Generate an SVG of a pelican riding a bicycle" directly into the Codex CLI tool. Here's thinking level medium:

And here's thinking level "xhigh":

I also tried xhigh on the my longer pelican test prompt, which came out like this:

Also today: GPT-5.1 Pro is rolling out today to all Pro users. According to the ChatGPT release notes:

GPT-5.1 Pro is rolling out today for all ChatGPT Pro users and is available in the model picker. GPT-5 Pro will remain available as a legacy model for 90 days before being retired.

That's a pretty fast deprecation cycle for the GPT-5 Pro model that was released just three months ago.

Recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026