The evolution of OpenAI’s mission statement

13th February 2026

As a US 501(c)(3), the OpenAI non-profit has to file a tax return each year with the IRS. One of the required fields on that tax return is to “Briefly describe the organization’s mission or most significant activities”. This has actual legal weight, as the IRS can use it to evaluate whether the organization is sticking to its mission and deserves to maintain its non-profit tax-exempt status.

You can browse OpenAI’s tax filings by year on ProPublica’s excellent Nonprofit Explorer.

I went through and extracted that mission statement for 2016 through 2024, then had Claude Code help me fake the commit dates to turn it into a git repository and share that as a Gist. That means the Gist’s revisions page shows every edit they’ve made since they started filing their taxes!
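For anyone curious how the backdating works: git takes the author and committer timestamps from the `GIT_AUTHOR_DATE` and `GIT_COMMITTER_DATE` environment variables when they are set. Here is a minimal sketch of the idea, not the actual script used for the Gist. The file name `mission.md`, the 31 December commit dates, the placeholder identity and the helper names `commit_env` and `build_history` are all assumptions for illustration.

```python
import os
import subprocess
from pathlib import Path


def commit_env(year: int) -> dict:
    """Return an environment that makes git date a commit to 31 Dec of `year`.

    The choice of 31 December is an assumption; any date git can parse works.
    """
    stamp = f"{year}-12-31T12:00:00+00:00"
    env = dict(os.environ)
    env["GIT_AUTHOR_DATE"] = stamp
    env["GIT_COMMITTER_DATE"] = stamp
    return env


def build_history(repo: Path, statements: dict[int, str]) -> None:
    """Create a git repo with one backdated commit per year of mission text."""
    repo.mkdir(parents=True, exist_ok=True)
    subprocess.run(["git", "init", "--quiet"], cwd=repo, check=True)
    for year in sorted(statements):
        # Overwrite the same file each time so the history shows the edits.
        (repo / "mission.md").write_text(statements[year] + "\n", encoding="utf-8")
        subprocess.run(["git", "add", "mission.md"], cwd=repo, check=True)
        subprocess.run(
            [
                "git",
                "-c", "user.name=Example",  # placeholder identity (assumption)
                "-c", "user.email=example@example.com",
                "commit", "--quiet",
                "--allow-empty",  # years with no change still get a commit
                "-m", f"{year} mission statement",
            ],
            cwd=repo,
            env=commit_env(year),
            check=True,
        )
```

Calling `build_history` with a dictionary mapping each filing year to its mission text would produce one commit per year, and `git log` on the result would then show the backdated timestamps.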

It’s really interesting seeing what they’ve changed over time.

The original 2016 mission reads as follows (and yes, the apostrophe in “OpenAIs” is missing in the original):

OpenAIs goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. We think that artificial intelligence technology will help shape the 21st century, and we want to help the world build safe AI technology and ensure that AI’s benefits are as widely and evenly distributed as possible. Were trying to build AI as part of a larger community, and we want to openly share our plans and capabilities along the way.

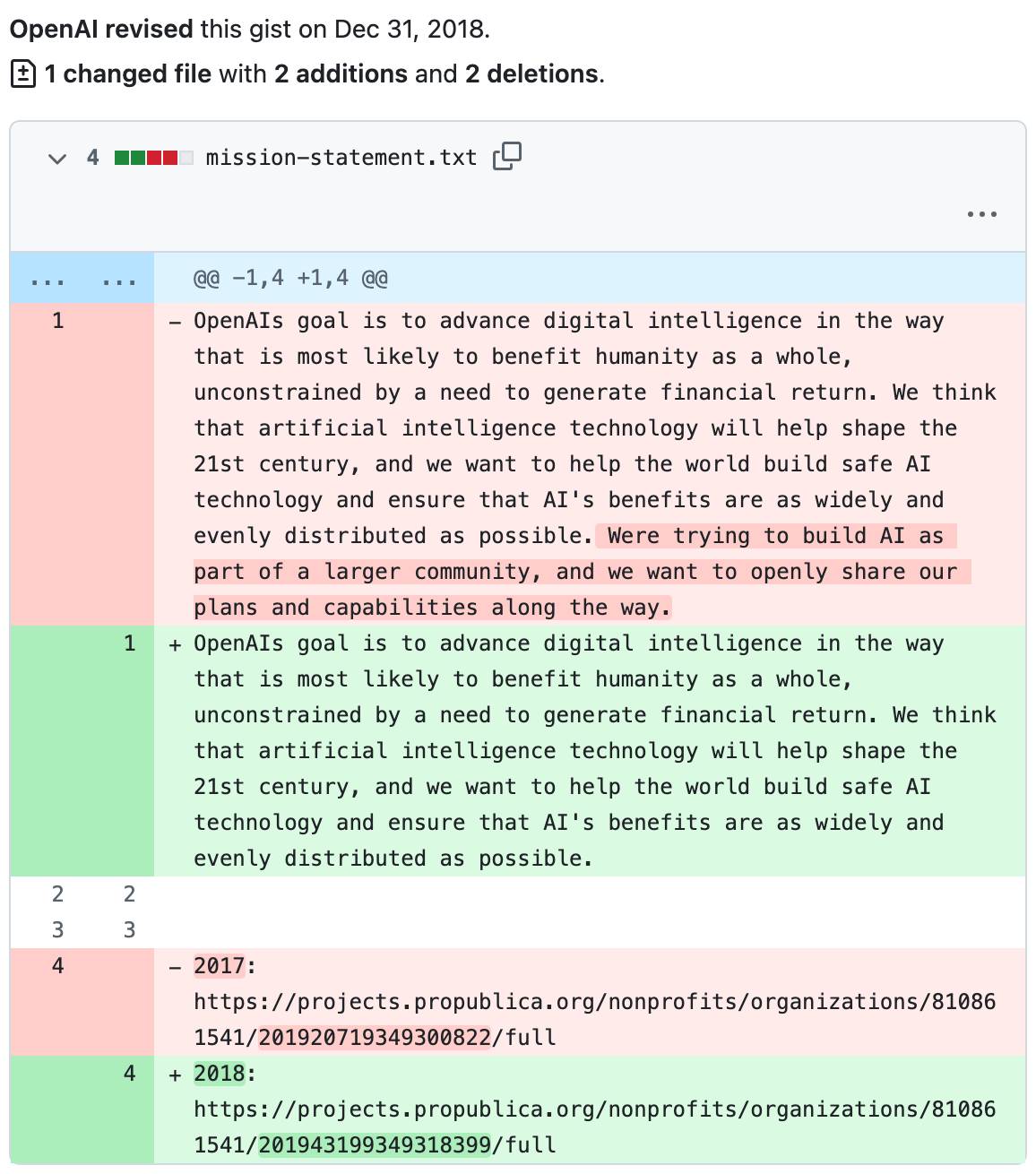

In 2018 they dropped the part about “trying to build AI as part of a larger community, and we want to openly share our plans and capabilities along the way.”

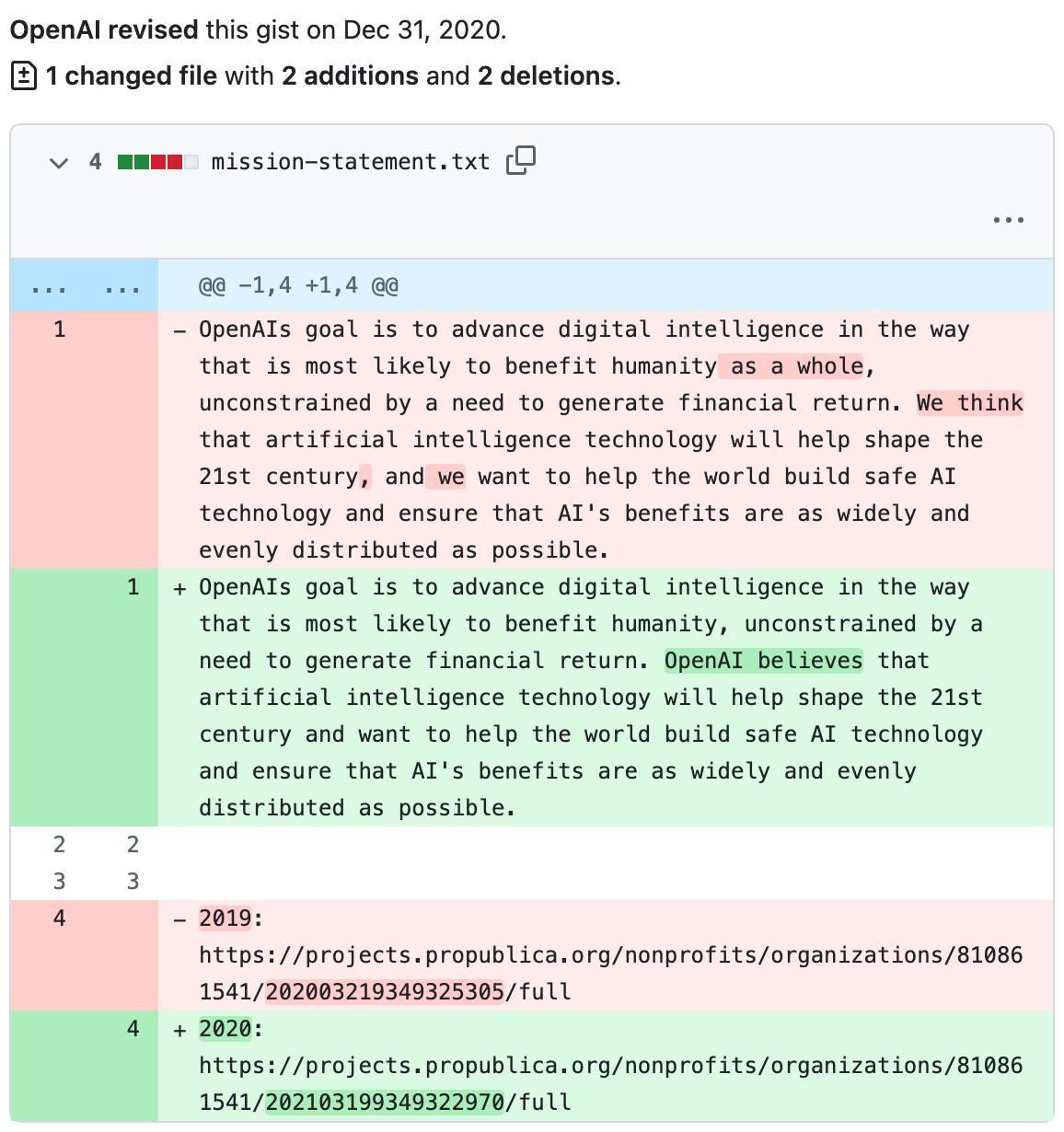

In 2020 they dropped the words “as a whole” from “benefit humanity as a whole”. They’re still “unconstrained by a need to generate financial return” though.

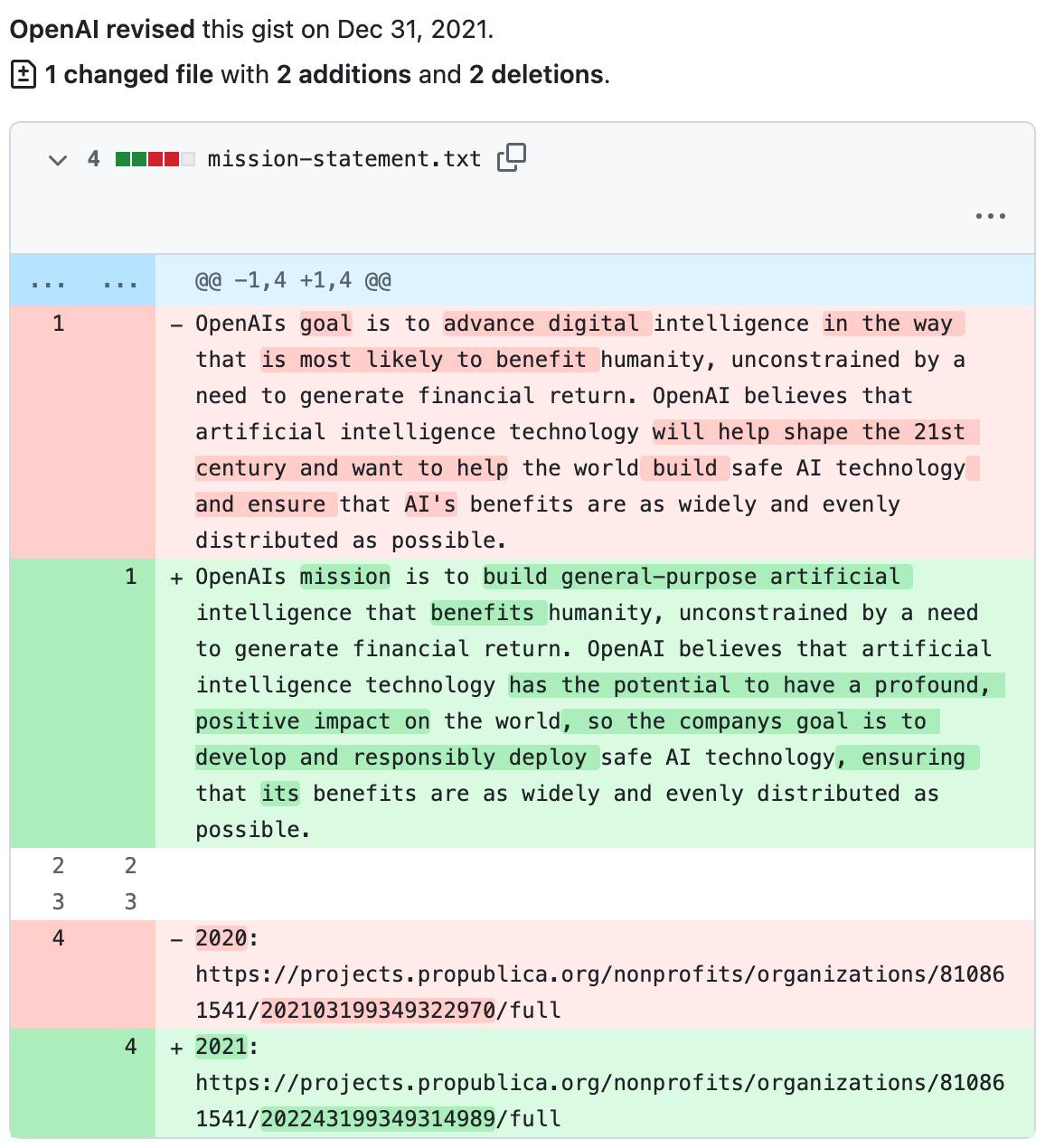

Some interesting changes in 2021. They’re still unconstrained by a need to generate financial return, but here we have the first reference to “general-purpose artificial intelligence” (replacing “digital intelligence”). They’re more confident too: it’s not “most likely to benefit humanity”, it’s just “benefits humanity”.

They previously wanted to “help the world build safe AI technology”, but now they’re going to do that themselves: “the companys goal is to develop and responsibly deploy safe AI technology”.

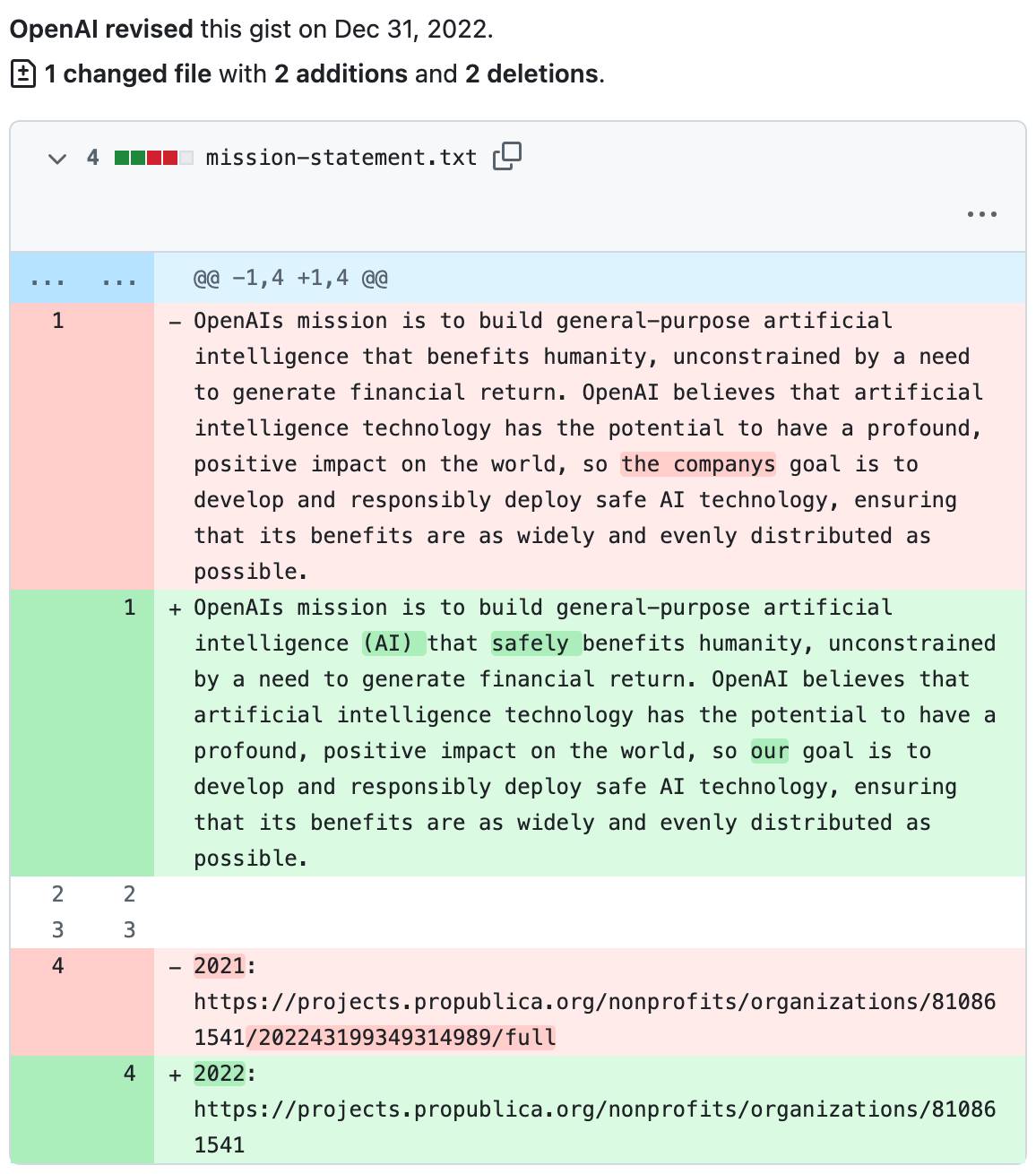

2022 only changed one significant word: they added “safely” to “build ... (AI) that safely benefits humanity”. They’re still unconstrained by those financial returns!

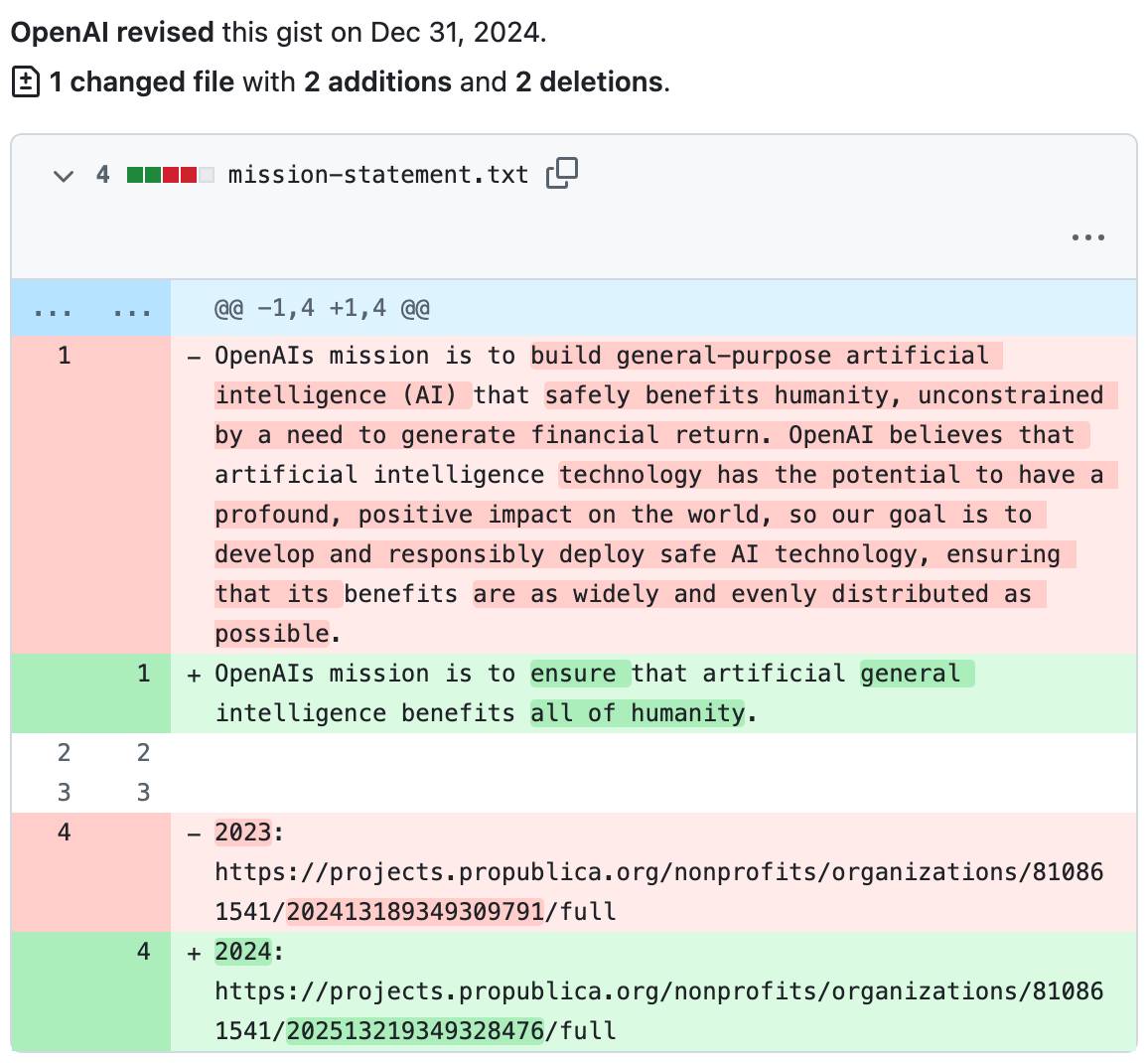

No changes in 2023... but then in 2024 they deleted almost the entire thing, reducing it to simply:

OpenAIs mission is to ensure that artificial general intelligence benefits all of humanity.

They’ve expanded “humanity” to “all of humanity”, but there’s no mention of safety any more and I guess they can finally start focusing on that need to generate financial returns!

Update: I found loosely equivalent but much less interesting documents from Anthropic.
