302 posts tagged “sqlite”

SQLite is the world's most widely deployed database engine.

2020

Weeknotes: Datasette 0.41, photos breakthroughs

Shorter weeknotes this week, because my main project for the week warrants a detailed write-up on its own (coming soon... update 21st May here it is).

[... 867 words]Weeknotes: Datasette 0.39 and many other projects

This week’s theme: Well, I’m not going anywhere. So a ton of progress to report on various projects.

[... 806 words]hacker-news-to-sqlite (via) The latest in my Dogsheep series of tools: hacker-news-to-sqlite uses the Hacker News API to fetch your comments and submissions from Hacker News and save them to a SQLite database.

Weeknotes: Datasette Writes

As discussed previously, the biggest hole in Datasette’s feature set at the moment involves writing to the database.

[... 604 words]Things I learned about shapefiles building shapefile-to-sqlite

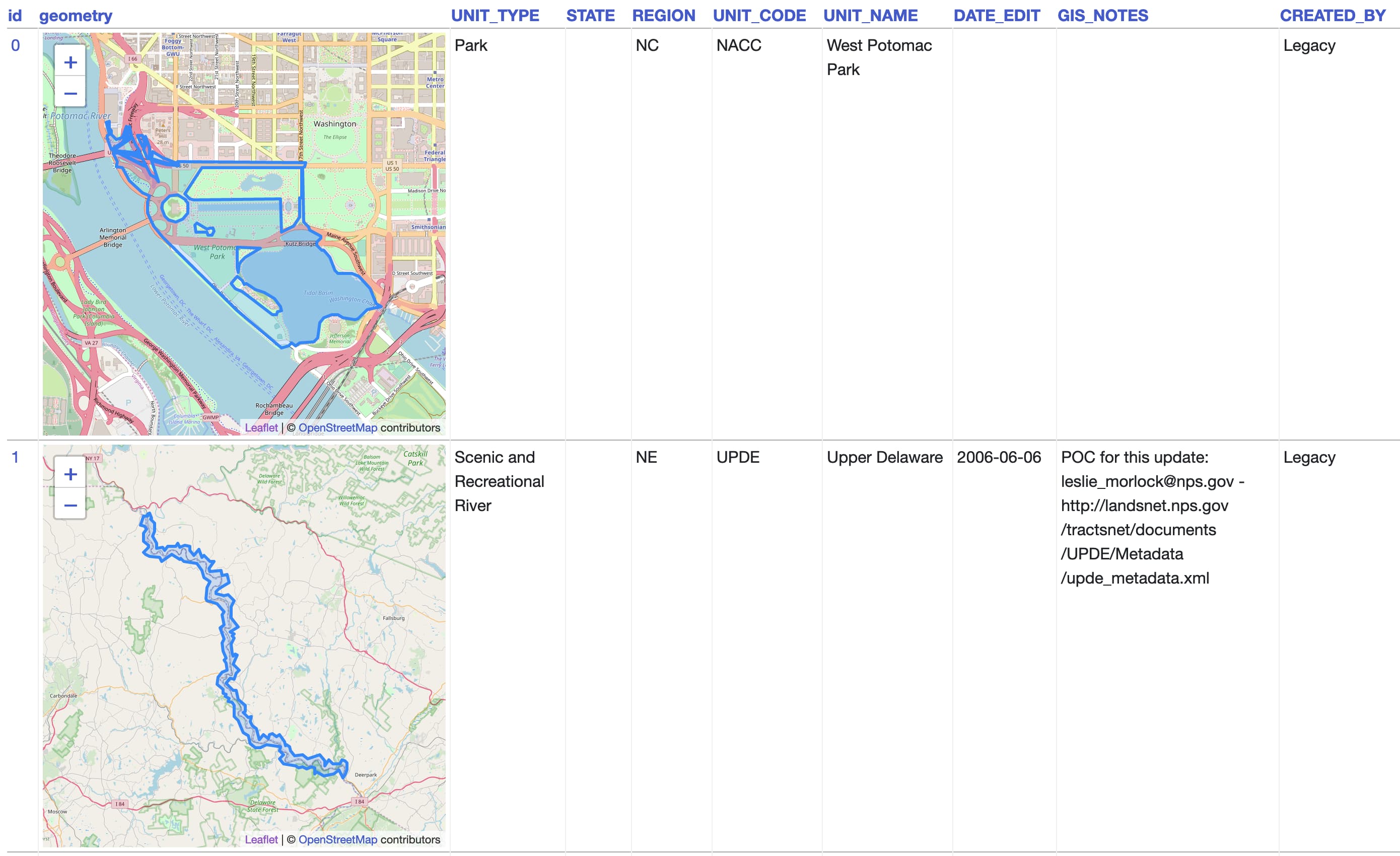

The latest in my series of x-to-sqlite tools is shapefile-to-sqlite. I learned a whole bunch of things about the ESRI shapefile format while building it.

[... 1,073 words]geojson-to-sqlite (via) I just put out the first release of geojson-to-sqlite—a CLI tool that can convert GeoJSON files (consisting of a Feature or a set of features in a FeatureCollection) into a table in a SQLite database. If you use the --spatialite option it will initalize the table with SpatiaLite and store the geometries in a spacially indexed geometry field—without that option it stores them as GeoJSON.

Generated Columns in SQLite (via) SQLite 3.31.0 released today, and generated columns are the single most notable new feature. PostgreSQL 12 added these in October 2019, and MySQL has had them since 5.7 in October 2015. MySQL and SQLite both offer either “stored” or “virtual” generated columns, with virtual columns being calculated at runtime. PostgreSQL currently only supports stored columns.

Serving 100µs reads with 100% availability (via) Fascinating use-case for SQLite from Segment: they needed a massively replicated configuration database across all of their instances that process streaming data. They chose to make the configuration available as a ~50GB SQLite database file mirrored to every instance, meaning lookups against that data could complete in microseconds. Changes to the central MySQL configuration store are pulled every 2-3 seconds, resulting in a trade-off of consistency for availability which fits their use-case just fine.

2019

sqlite-utils 2.0: real upserts

I just released version 2.0 of my sqlite-utils library/CLI tool to PyPI.

[... 1,140 words]athena-sqlite (via) Amazon Athena is the AWS tool for querying data stored in S3—as CSV, JSON or Apache Parquet files—using SQL. It’s an interesting way of buliding a very cheap data warehouse on top of S3 without having to run any additional services. Athena recently added a query federation SDK which lets you define additional custom data sources using Lambda functions. Damon Cortesi used this to write a custom connector for SQLite, which lets you run queries against data stored in SQLite files that you have uploaded to S3. You can then run joins between that data and other Athena sources.

Logging to SQLite using ASGI middleware

I had some fun playing around with ASGI middleware and logging during our flight back to England for the holidays.

[... 2,535 words]sqlite-transform. I released a new CLI tool today: sqlite-transform, which lets you run “transformations” against a SQLite database. I built it out of frustration of constantly running into CSV files that use horrible American date formatting—the “sqlite-transform parsedatetime my.db mytable col1” command runs dateutil’s parser against those columns and replaces them with a nice, sortable ISO formatted timestamp. I’ve also added a “sqlite-transform lambda” command that lets you specify Python code directly on the command-line that should be used to transform every value in a specified column.

goodreads-to-sqlite (via) This is so cool! Tobias Kunze built a Python CLI tool to import your Goodreads data into a SQLite database, inspired by github-to-sqlite and my various other Dogsheep tools. It’s the first Dogsheep style tool I’ve seen that wasn’t built by me—and Tobias’ write-up includes some neat examples of queries you can run against your Goodreads data. I’ve now started using Goodreads and I’m importing my books into my own private Dogsheep Datasette instance.

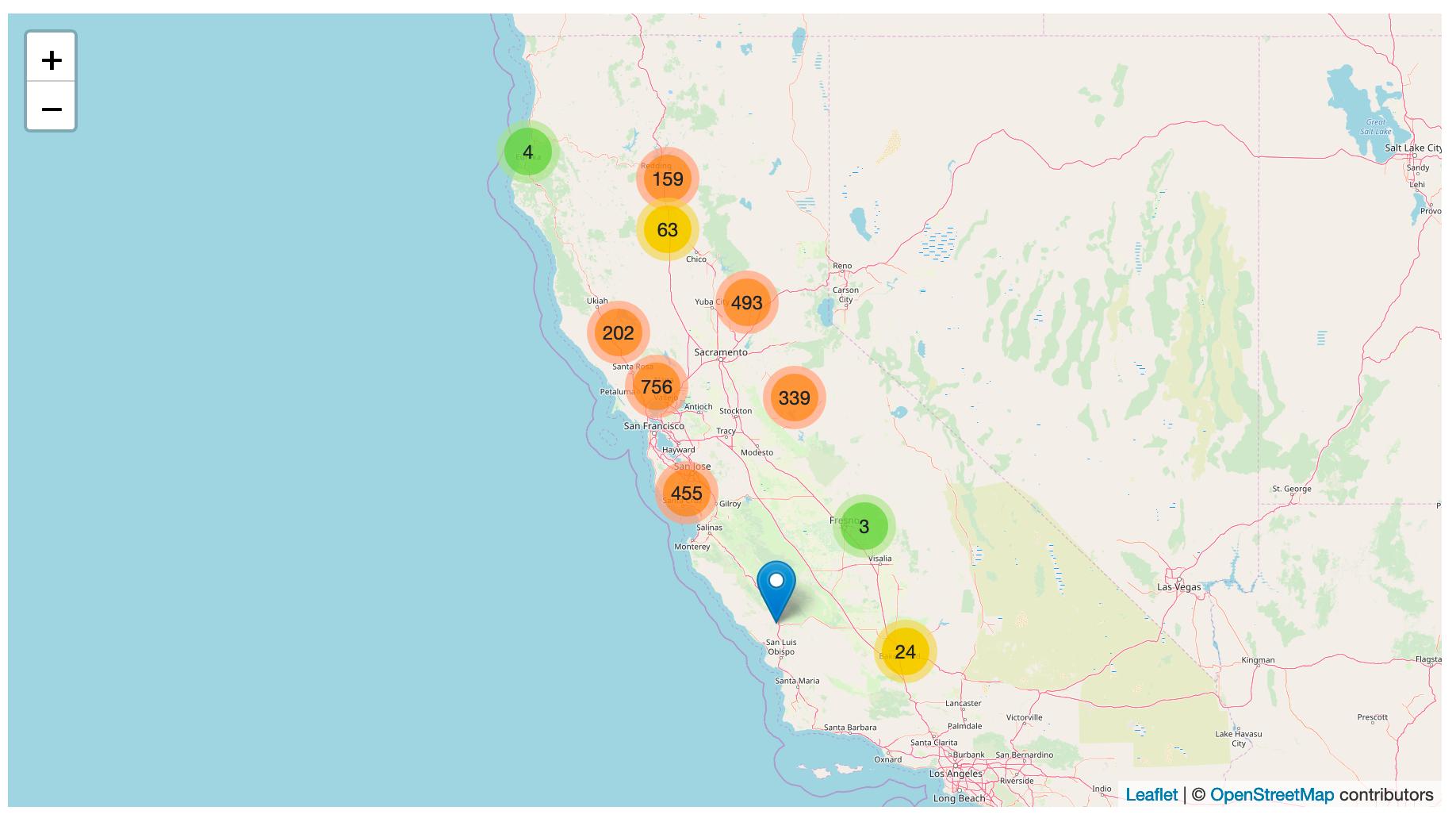

Tracking PG&E outages by scraping to a git repo

PG&E have cut off power to several million people in northern California, supposedly as a precaution against wildfires.

[... 868 words]SQL Murder Mystery in Datasette (via) “A crime has taken place and the detective needs your help. The detective gave you the crime scene report, but you somehow lost it. You vaguely remember that the crime was a murder that occurred sometime on Jan.15, 2018 and that it took place in SQL City. Start by retrieving the corresponding crime scene report from the police department’s database.”—Really fun game to help exercise your skills with SQL by the NU Knight Lab. I loaded their SQLite database into Datasette so you can play in your browser.

Weeknotes: Dogsheep

Having figured out my Stanford schedule, this week I started getting back into the habit of writing some code.

[... 1,367 words]Weeknotes: Design thinking for journalists, genome-to-sqlite, datasette-atom

I haven’t had much time for code this week: we’ve had a full five day workshop at JSK with Tran Ha (a JSK alumni) learning how to apply Design Thinking to our fellowship projects and generally to challenges facing journalism.

[... 870 words]genome-to-sqlite. I just found out 23andMe let you export your genome as a zipped TSV file, so I wrote a little Python command-line tool to import it into a SQLite database.

Weeknotes: ONA19, twitter-to-sqlite, datasette-rure

I’ve decided to start writing weeknotes for the duration of my JSK fellowship. Here goes!

[... 919 words]sqlite-utils 1.11. Amjith Ramanujam contributed an excellent new feature to sqlite-utils, which I’ve now released as part of version 1.11. Previously you could enable SQLite full-text-search on a table using the .enable_fts() method (or the “sqlite-utils enable-fts” CLI command) but it wouldn’t reflect future changes to the table—you had to use populate_fts() any time you inserted new records. Thanks to Amjith you can now pass create_triggers=True (or --create-triggers) to cause sqlite-utils to automatically add triggers that keeps the FTS index up-to-date any time a row is inserted, updated or deleted from the table.

Working with many-to-many relationships in sqlite-utils (via) I just released sqlite-utils 1.9 with syntactic sugar support for creating many-to-many relationships for records stored in SQLite databases.

healthkit-to-sqlite. Ever since I got an Apple Watch I’ve been itching to get my hands on the step tracking and health data that it’s been collecting for me. I know it’s there in a SQLite database on my wrist, but I couldn’t figure out how to get it! A few days ago I stumbled across the “Export Health Data” button in the iOS Health app, and it turns out it creates a zip file containing XML with a full dump of the data collected by Apple Health. healthkit-to-sqlite is the tool I’ve built that can read that export and use it to create a SQLite database ready to be queried and explored with Datasette. It’s a pretty basic implementation but it’s already giving me access to over 3 million rows of data. Lots of potential here for interesting work with personal analytics.

db-to-sqlite 1.0 release. I’ve released version 1.0 of my db-to-sqlite tool, which lets you create a SQLite database copy of any database supported by SQLAlchemy (I’ve tested it against MySQL and PostgreSQL). The tool has a bunch of new features: you can use --redact to redact specific columns, specify --table multiple times to copy a subset of tables, and the --all option now efficiently adds all foreign keys at the end of the import. The project now has unit tests which run against MySQL and PostgreSQL in Travis CI. Also included in the README: a shell one-liner for creating a local SQLite copy of a remote Heroku Postgres database based on extracting the connection string from a Heroku config environment variable.

Convert Locations.kml (pulled from an iPhone backup) to SQLite. I’ve been playing around with data from my iPhone using the iPhone Backup Extractor app and one of the things it exports for you is a Locations.kml file full of location history data. I wrote a tiny script using Python’s ElementTree XMLPullParser to efficiently iterate through the Placemarks and yield them as dictionaries, which I then batch-inserted into sqlite-utils to create a SQLite database.

sqlite-utils 1.0. I just released sqlite-utils 1.0, with a couple of handy new features over 0.14: it can now automatically add columns to a database table if you attempt to insert data which doesn’t quite fit (using alter=True in the Python API or the --alter option to the “sqlite-utils insert” command). It also has the ability to output nested JSON column values on the command-line using the new --json-cols option. This is the first project I’ve marked as a 1.0 release in a very long time—I’ll be sticking to semver for this project from now on, bumping the major version only in the case of a backwards incompatible change.

VisiData

(via)

Intriguing tool by Saul Pwanson: VisiData is a command-line "textpunk utility" for browsing and manipulating tabular data. pip3 install visidata and then vd myfile.csv (or .json or .xls or SQLite or others) and get an interactive terminal UI for quickly searching through the data, conducting frequency analysis of columns, manipulating it and much more besides. Two tips for if you start playing with it: hit gq to exit, and hit Ctrl+H to view the help screen.

huey. Charles Leifer’s “little task queue for Python”. Similar to Celery, but it’s designed to work with Redis, SQLite or in the parent process using background greenlets. Worth checking out for the really neat design. The project is new to me, but it’s been under active development since 2011 and has a very healthy looking rate of releases.

sqlite-utils: a Python library and CLI tool for building SQLite databases

sqlite-utils is a combination Python library and command-line tool I’ve been building over the past six months which aims to make creating new SQLite databases as quick and easy as possible.

[... 1,237 words]socrata2sql (via) Phenomenal new open source tool released by Andrew Chavez at the Dallas Morning News. Socrata is the open data portal software used by huge numbers of local governments worldwide. socrata2sql is a tool that interacts with the standard Socrata API and can use it to suck down a dataset and save it as a SQLite, PostgreSQL, MySQL or other SQLAlchemy-supported database. I just tried this and it took a single command to create a SQLite database of every police arrest in Dallas in the past five years.

db-to-sqlite (via) I just released version 0.2 of a tiny CLI utility I’ve been working on. It builds on top of SQLAlchemy and lets you connect to any SQLAlchemy-supported database and convert the data from it to a local SQLite database file. The new --all option will mirror all available tables (including foreign key relationships), or you can use --sql to save the results of custom SQL queries.