9 posts tagged “val-town”

Val Town provides a web interface for building, running and deploying server-side JavaScript apps.

2025

Tom MacWright: Observable Notebooks 2.0. Observable announced Observable Notebooks 2.0 last week - the latest take on their JavaScript notebook technology, this time with an open file format and a brand new macOS desktop app.

Tom MacWright worked at Observable during their first iteration and here provides thoughtful commentary from an insider-to-outsider perspective on how their platform has evolved over time.

I particularly appreciated this aside on the downsides of evolving your own not-quite-standard language syntax:

Notebook Kit and Desktop support vanilla JavaScript, which is excellent and cool. The Observable changes to JavaScript were always tricky and meant that we struggled to use off-the-shelf parsers, and users couldn't use standard JavaScript tooling like eslint. This is stuff like the viewof operator which meant that Observable was not JavaScript. [...] Sidenote: I now work on Val Town, which is also a platform based on writing JavaScript, and when I joined it also had a tweaked version of JavaScript. We used the @ character to let you 'mention' other vals and implicitly import them. This was, like it was in Observable, not worth it and we switched to standard syntax: don't mess with language standards folks!

Stevens: a hackable AI assistant using a single SQLite table and a handful of cron jobs. Geoffrey Litt reports on Stevens, a shared digital assistant he put together for his family using SQLite and scheduled tasks running on Val Town.

The design is refreshingly simple considering how much it can do. Everything works around a single memories table. A memory has text, tags, creation metadata and an optional date for things like calendar entries and weather reports.
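To make that shape concrete, here is a minimal in-memory sketch of what a single memories table could look like. This is not Geoffrey's actual schema: the field names (`text`, `tags`, `createdAt`, `date`) and the rule that undated memories always apply are my own assumptions for illustration, and a plain array stands in for the SQLite table.

```javascript
// Hypothetical in-memory stand-in for a single "memories" table.
// Field names and the selection rule below are assumptions, not the real schema.
const memories = [];

function addMemory({ text, tags = [], date = null, createdAt = new Date().toISOString() }) {
  const memory = { id: memories.length + 1, text, tags, createdAt, date };
  memories.push(memory);
  return memory;
}

// Memories relevant to a given day: undated ones always apply,
// dated ones (calendar entries, weather reports) only on their own date.
function memoriesForDay(day) {
  return memories.filter((m) => m.date === null || m.date === day);
}

addMemory({ text: "Family prefers briefings before 8am", tags: ["preference"] });
addMemory({ text: "Dentist appointment at 3pm", tags: ["calendar"], date: "2025-04-14" });
addMemory({ text: "Rain expected in the afternoon", tags: ["weather"], date: "2025-04-15" });

// Text that a daily briefing prompt for 2025-04-14 could be built from.
const briefing = memoriesForDay("2025-04-14").map((m) => m.text);
```

The appeal of this design is that every new data source only has to know how to append a row; nothing downstream needs to change.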

Everything else is handled by scheduled jobs that populate weather information and events from Google Calendar, a Telegram integration offering a chat UI, and a neat system where USPS postal email delivery notifications are run through Val's own email handling mechanism to trigger a Claude prompt that adds those as memories too.

Here's the full code on Val Town, including the daily briefing prompt that incorporates most of the personality of the bot.

What we learned copying all the best code assistants (via) Steve Krouse describes Val Town's experience so far building features that use LLMs, starting with completions (powered by Codeium and Val Town's own codemirror-codeium extension) and then rolling through several versions of their Townie code assistant, initially powered by GPT 3.5 but later upgraded to Claude 3.5 Sonnet.

This is a really interesting space to explore right now because there is so much activity in it from larger players. Steve classifies Val Town's approach as "fast following" - trying to spot the patterns that are proven to work and bring them into their own product.

It's challenging from a strategic point of view because Val Town's core differentiator isn't meant to be AI coding assistance: they're trying to build the best possible ecosystem for hosting and iterating lightweight server-side JavaScript applications. Isn't this stuff all a distraction from that larger goal?

Steve concludes:

However, it still feels like there’s a lot to be gained with a fully-integrated web AI code editor experience in Val Town – even if we can only get 80% of the features that the big dogs have, and a couple months later. It doesn’t take that much work to copy the best features we see in other tools. The benefits to a fully integrated experience seems well worth that cost. In short, we’ve had a lot of success fast-following so far, and think it’s worth continuing to do so.

It continues to be wild to me how features like this are easy enough to build now that they can be part-time side features at a small startup, and not the entire project.

2024

Cerebras Coder (via) Val Town founder Steve Krouse has been building demos on top of the Cerebras API that runs Llama3.1-70b at 2,000 tokens/second.

Having a capable LLM with that kind of performance turns out to be really interesting. Cerebras Coder is a demo that implements Claude Artifact-style on-demand JavaScript apps, and having it run at that speed means changes you request are visible in less than a second.

Steve's implementation (created with the help of Townie, the Val Town code assistant) demonstrates the simplest possible version of an iframe sandbox:

    <iframe
      srcDoc={code}
      sandbox="allow-scripts allow-modals allow-forms allow-popups allow-same-origin allow-top-navigation allow-downloads allow-presentation allow-pointer-lock"
    />

Where code is populated by a setCode(...) call inside a React component.

The most interesting applications of LLMs continue to be where they operate in a tight loop with a human - this can make those review loops potentially much faster and more productive.

System prompt for val.town/townie (via) Val Town (previously) provides hosting and a web-based coding environment for Vals - snippets of JavaScript/TypeScript that can run server-side as scripts, on a schedule or hosting a web service.

Townie is Val's new AI bot, providing a conversational chat interface for creating fullstack web apps (with blob or SQLite persistence) as Vals.

In the most recent release of Townie, Val added the ability to inspect and edit its system prompt!

I've archived a copy in this Gist, as a snapshot of how Townie works today. It's surprisingly short, relying heavily on the model's existing knowledge of Deno and TypeScript.

I enjoyed the use of "tastefully" in this bit:

Tastefully add a view source link back to the user's val if there's a natural spot for it and it fits in the context of what they're building. You can generate the val source url via import.meta.url.replace("esm.town", "val.town").

The prompt includes a few code samples, like this one demonstrating how to use Val's SQLite package:

    import { sqlite } from "https://esm.town/v/stevekrouse/sqlite";
    let KEY = new URL(import.meta.url).pathname.split("/").at(-1);
    (await sqlite.execute(`select * from ${KEY}_users where id = ?`, [1])).rows[0].id

It also reveals the existence of Val's very own delightfully simple image generation endpoint Val, currently powered by Stable Diffusion XL Lightning on fal.ai.

If you want an AI generated image, use https://maxm-imggenurl.web.val.run/the-description-of-your-image to dynamically generate one.

Here's a fun colorful raccoon with a wildly inappropriate hat.

Val are also running their own gpt-4o-mini proxy, free to users of their platform:

    import { OpenAI } from "https://esm.town/v/std/openai";
    const openai = new OpenAI();
    const completion = await openai.chat.completions.create({
      messages: [
        { role: "user", content: "Say hello in a creative way" },
      ],
      model: "gpt-4o-mini",
      max_tokens: 30,
    });

Val developer JP Posma wrote a lot more about Townie in How we built Townie – an app that generates fullstack apps, describing their prototyping process and revealing that the current model it's using is Claude 3.5 Sonnet.

Their current system prompt was refined over many different versions - initially they were including 50 example Vals at quite a high token cost, but they were able to reduce that down to the linked system prompt which includes condensed documentation and just one templated example.

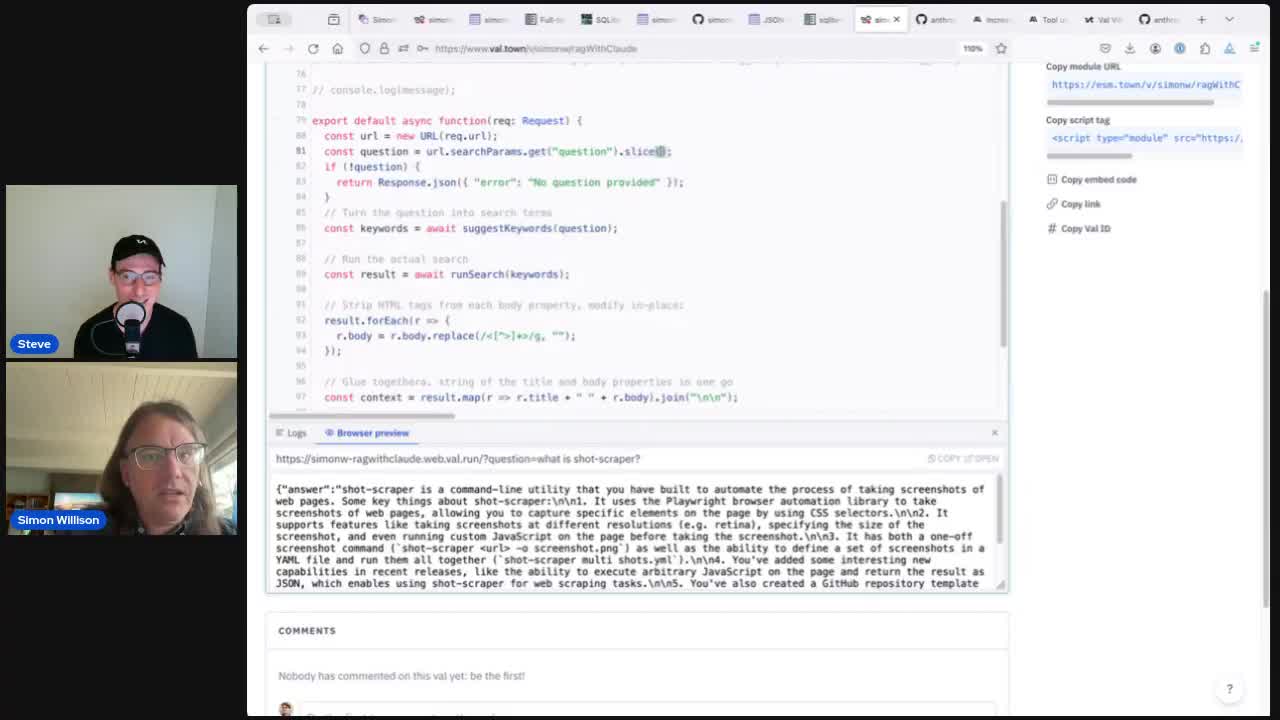

Building search-based RAG using Claude, Datasette and Val Town

Retrieval Augmented Generation (RAG) is a technique for adding extra “knowledge” to systems built on LLMs, allowing them to answer questions against custom information not included in their training data. A common way to implement this is to take a question from a user, translate that into a set of search queries, run those against a search engine and then feed the results back into the LLM to generate an answer.
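The pipeline described above can be sketched in a few lines. This is a rough outline rather than the code from the post: `extractQueries`, `search` and `complete` are hypothetical stand-ins for an LLM call, a search engine and a second LLM call, and the stubs at the bottom exist only so the sketch runs on its own.

```javascript
// Search-based RAG sketch. The three injected functions are hypothetical
// placeholders for: an LLM call, a search engine, and a second LLM call.
async function answerWithRag(question, { extractQueries, search, complete }) {
  // 1. Translate the user's question into a set of search queries.
  const queries = await extractQueries(question);

  // 2. Run each query, de-duplicating documents by id.
  const seen = new Map();
  for (const query of queries) {
    for (const doc of await search(query)) {
      seen.set(doc.id, doc);
    }
  }

  // 3. Feed the results back to the model as context for the answer.
  const context = [...seen.values()].map((d) => `${d.title}\n${d.body}`).join("\n\n");
  return complete(`Context:\n${context}\n\nQuestion: ${question}`);
}

// Toy stubs so the sketch is runnable without any external service.
const docs = [
  { id: 1, title: "Val Town runtimes", body: "Vals run in Deno sub-processes." },
  { id: 2, title: "Townie", body: "Townie is a code assistant." },
];
const stubs = {
  // Crude keyword extraction standing in for an LLM-generated query list.
  extractQueries: async (q) => q.toLowerCase().match(/[a-z]+/g).filter((w) => w.length > 4),
  // Substring match standing in for a real full-text search engine.
  search: async (query) => docs.filter((d) => `${d.title} ${d.body}`.toLowerCase().includes(query)),
  // Echo the prompt back so the assembled context is visible.
  complete: async (prompt) => prompt,
};

const demo = answerWithRag("Which runtimes does Val Town use?", stubs);
```

Passing the three steps in as functions keeps the control flow visible and makes it easy to swap a stub for a real model or search backend.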

[... 3,372 words]

Val Vibes: Semantic search in Val Town. A neat case-study by JP Posma on how Val Town's developers can use Val Town Vals to build prototypes of new features that later make it into Val Town core.

This one explores building out semantic search against Vals using OpenAI embeddings and the PostgreSQL pgvector extension.
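The core idea behind embedding-based search is ranking items by how close their vectors are to the query's vector. Here's a minimal sketch of that ranking step using cosine similarity. The three-dimensional vectors and Val names are made up for illustration; a real setup would get much longer vectors from an embeddings API and let pgvector do the comparison inside PostgreSQL.

```javascript
// Cosine similarity: 1 means the vectors point in the same direction,
// values near 0 mean they are unrelated.
function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every item against the query vector and sort best-first.
function rank(queryVector, items) {
  return items
    .map((item) => ({ name: item.name, score: cosineSimilarity(queryVector, item.vector) }))
    .sort((x, y) => y.score - x.score);
}

// Hand-made toy embeddings; the names and numbers are hypothetical.
const vals = [
  { name: "weatherBot", vector: [0.9, 0.1, 0.0] },
  { name: "rssReader", vector: [0.1, 0.8, 0.3] },
  { name: "forecastEmail", vector: [0.7, 0.2, 0.4] },
];

const results = rank([1, 0, 0], vals);
```

With these toy vectors, `results` orders the items as weatherBot, forecastEmail, rssReader.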

Val Town Newsletter 15 (via) I really like how Val Town founder Steve Krouse now accompanies their “what’s new” newsletter with a video tour of the new features. I’m seriously considering imitating this for my own projects.

The first four Val Town runtimes (via) Val Town solves one of my favourite technical problems: how to run untrusted code in a safe sandbox. They're on their fourth iteration of this now, currently using a Node.js application that launches Deno sub-processes using the node-deno-vm npm package and runs code in those, taking advantage of the Deno sandboxing mechanism and terminating processes that take too long in order to protect against while(true) style attacks.