33 posts tagged “video”

2025

Video models are zero-shot learners and reasoners. Fascinating new paper from Google DeepMind which makes a very convincing case that their Veo 3 model - and generative video models in general - serve a similar role in the machine learning visual ecosystem as LLMs do for text.

LLMs took the ability to predict the next token and turned it into general purpose foundation models for all manner of tasks that used to be handled by dedicated models - summarization, translation, parts of speech tagging etc can now all be handled by single huge models, which are getting both more powerful and cheaper as time progresses.

Generative video models like Veo 3 may well serve the same role for vision and image reasoning tasks.

From the paper:

We believe that video models will become unifying, general-purpose foundation models for machine vision just like large language models (LLMs) have become foundation models for natural language processing (NLP). [...]

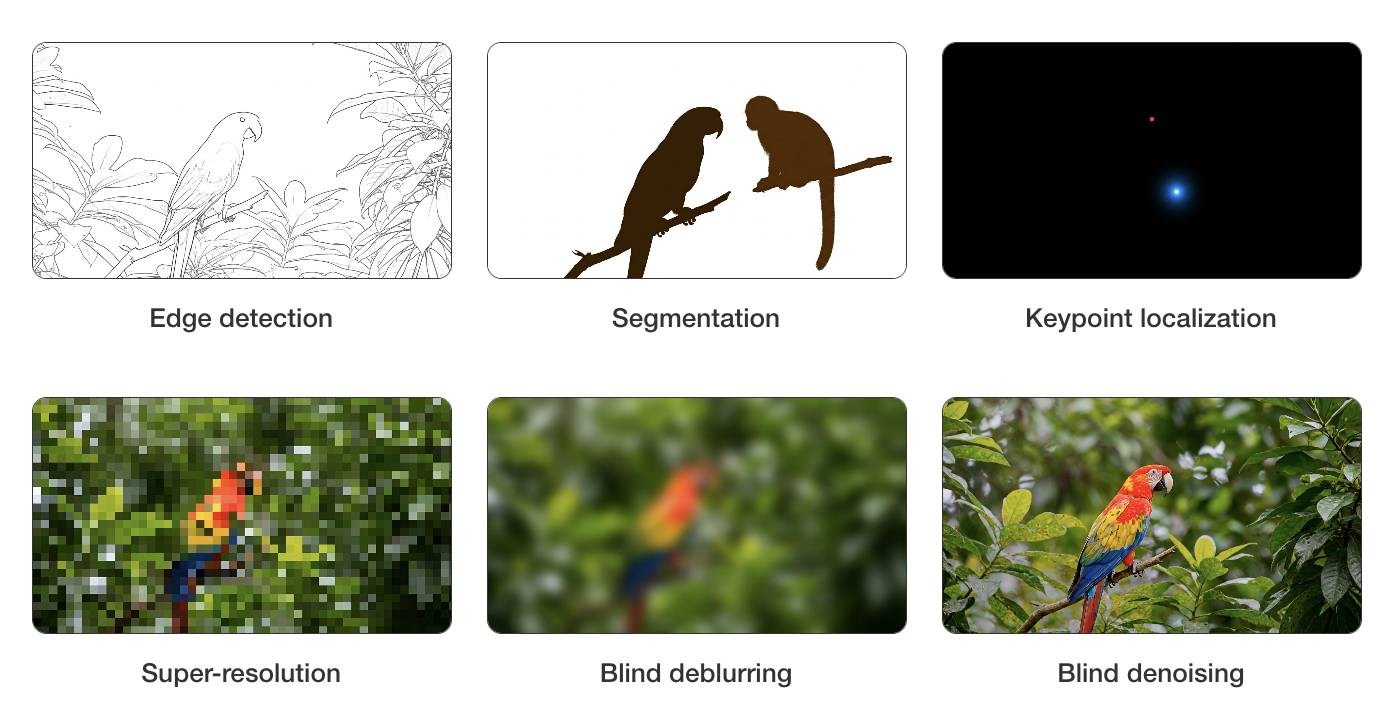

Machine vision today in many ways resembles the state of NLP a few years ago: There are excellent task-specific models like “Segment Anything” for segmentation or YOLO variants for object detection. While attempts to unify some vision tasks exist, no existing model can solve any problem just by prompting. However, the exact same primitives that enabled zero-shot learning in NLP also apply to today’s generative video models—large-scale training with a generative objective (text/video continuation) on web-scale data. [...]

- Analyzing 18,384 generated videos across 62 qualitative and 7 quantitative tasks, we report that Veo 3 can solve a wide range of tasks that it was neither trained nor adapted for.

- Based on its ability to perceive, model, and manipulate the visual world, Veo 3 shows early forms of “chain-of-frames (CoF)” visual reasoning like maze and symmetry solving.

- While task-specific bespoke models still outperform a zero-shot video model, we observe a substantial and consistent performance improvement from Veo 2 to Veo 3, indicating a rapid advancement in the capabilities of video models.

I particularly enjoyed the way they coined the new term chain-of-frames to reflect chain-of-thought in LLMs. A chain-of-frames is how a video generation model can "reason" about the visual world:

Perception, modeling, and manipulation all integrate to tackle visual reasoning. While language models manipulate human-invented symbols, video models can apply changes across the dimensions of the real world: time and space. Since these changes are applied frame-by-frame in a generated video, this parallels chain-of-thought in LLMs and could therefore be called chain-of-frames, or CoF for short. In the language domain, chain-of-thought enabled models to tackle reasoning problems. Similarly, chain-of-frames (a.k.a. video generation) might enable video models to solve challenging visual problems that require step-by-step reasoning across time and space.

They note that, while video models remain expensive to run today, it's likely they will follow a similar pricing trajectory as LLMs. I've been tracking this for a few years now and it really is a huge difference - a 1,200x drop in price between GPT-3 in 2022 ($60/million tokens) and GPT-5-Nano today ($0.05/million tokens).

The PDF is 45 pages long but the main paper is just the first 9.5 pages - the rest is mostly appendices. Reading those first 10 pages will give you the full details of their argument.

The accompanying website has dozens of video demos which are worth spending some time with to get a feel for the different applications of the Veo 3 model.

It's worth skimming through the appendixes in the paper as well to see examples of some of the prompts they used. They compare some of the exercises against equivalent attempts using Google's Nano Banana image generation model.

For edge detection, for example:

Veo: All edges in this image become more salient by transforming into black outlines. Then, all objects fade away, with just the edges remaining on a white background. Static camera perspective, no zoom or pan.

Nano Banana: Outline all edges in the image in black, make everything else white.

Here's a tip that works on YouTube and almost any other web page that shows you a video. You can increase the playback rate beyond the usually-exposed 2x by running this in your browser DevTools console:

document.querySelector('video').playbackRate = 2.5

I find this is the fastest I can reasonably watch most videos at, with subtitles on to help my comprehension - it turns a 40 minute video into just 16 minutes, short enough that I don't feel too guilty taking time off whatever else I'm doing to watch it!

2024

Sora (via) OpenAI's released their long-threatened Sora text-to-video model this morning, available in most non-European countries to subscribers to ChatGPT Plus ($20/month) or Pro ($200/month).

Here's what I got for the very first test prompt I ran through it:

A pelican riding a bicycle along a coastal path overlooking a harbor

The Pelican inexplicably morphs to cycle in the opposite direction half way through, but I don't see that as a particularly significant issue: Sora is built entirely around the idea of directly manipulating and editing and remixing the clips it generates, so the goal isn't to have it produce usable videos from a single prompt.

How developers are using Gemini 1.5 Pro’s 1 million token context window. I got to be a talking head for a few seconds in an intro video for today's Google I/O keynote, talking about how I used Gemini Pro 1.5 to index my bookshelf (and with a cameo from my squirrel nutcracker). I'm at 1m25s.

(Or at 10m6s in the full video of the keynote)

When I first published the micrograd repo, it got some traction on GitHub but then somewhat stagnated and it didn't seem that people cared much. [...] When I made the video that built it and walked through it, it suddenly almost 100X'd the overall interest and engagement with that exact same piece of code.

[...] you might be leaving somewhere 10-100X of the potential of that exact same piece of work on the table just because you haven't made it sufficiently accessible.

Val Town Newsletter 15 (via) I really like how Val Town founder Steve Krouse now accompanies their “what’s new” newsletter with a video tour of the new features. I’m seriously considering imitating this for my own projects.

Google Research: Lumiere. The latest in text-to-video from Google Research, described as “a text-to-video diffusion model designed for synthesizing videos that portray realistic, diverse and coherent motion”.

Most existing text-to-video models generate keyframes and then use other models to fill in the gaps, which frequently leads to a lack of coherency. Lumiere “generates the full temporal duration of the video at once”, which avoids this problem.

Disappointingly but unsurprisingly the paper doesn’t go into much detail on the training data, beyond stating “We train our T2V model on a dataset containing 30M videos along with their text caption. The videos are 80 frames long at 16 fps (5 seconds)”.

The examples of “stylized generation” which combine a text prompt with a single reference image for style are particularly impressive.

2017

Evolution of <img>: Gif without the GIF

(via)

Safari Technology Preview lets you use <img src="movie.mp4">, for high quality animated gifs in 1/14th of the file size.

2010

Blowing up HTML5 video and mapping it into 3D space. The canvas drawImage() method can take an HTML video element as its source, making all kinds of interesting effects possible. The author notes that performance was dramatically improved by copying the video frame in to a separate canvas element and then copying regions out of that element rather than grabbing regions from the video directly.

Video on the Web—Dive Into HTML5. Everything a web developer needs to know about video containers, video codecs, adio containers, audio codecs, h.264, theora, vorbis, licensing, encoding, batch encoding and the html5 video element.

HTML5 video markup, compatibility and playback. Everything you need to know about embedding HTML5 video on a page, complete with multiple codecs to cover the various supporting browsers and a fallback to Flash.

SublimeVideo—HTML5 Video Player. Still a fair way to go (no Firefox support yet, and they plan to add a Flash fallback for IE) but in Safari this is pretty extraordinary. Smooth video, beautiful UI, full window mode and full screen mode in the latest WebKit nightlies. I’d go as far as saying that this is the nicest online video implementation I’ve seen (at least on the Mac).

2009

Armadillo Cam—Armadillo Running and Sniffing Small Shrub. From the awesome Museum of Animal Perspectives.

Unlike progressive downloads, HTTP Live Streaming actually does stream content in real time, although there can be a latency of as much as 30 seconds. [...] the content to be broadcast is encoded into an MPEG transport stream and chopped into segments that are around ten seconds long. Rather than getting a continuous stream of new data over RTSP, the new protocol simply asks for the first couple clips, then asks for additional clips as needed. This works great through firewalls, and doesn't require any special servers because any standard web server can deliver the chopped up video segments.

Video for Everybody! Reminiscent of the early days of Web Standards, Kroc Camen has created a fiendishly clever chunk of HTML which can play a video on any browser, starting with HTML5 video then falling back on Flash and eventually just an HTML message telling the user where they can download the file. No JavaScript to be seen, but conditional comments abound. Requires you to encode as both Ogg and H.264, but Kroc includes details instructions for doing that using Handbrake.

Codecs for <audio> and <video>. HTML 5 will not be requiring support for specific audio and video codecs—Ian Hickson explains why, in great detail. Short version: Apple won’t implement Theora due to lack of hardware support and an “uncertain patent landscape”, while open source browsers (Chromium and Mozilla) can’t support H.264 due to the cost of the licenses.

Firefox 3.5 for developers. It’s out today, and the feature list is huge. Highlights include HTML 5 drag ’n’ drop, audio and video elements, offline resources, downloadable fonts, text-shadow, CSS transforms with -moz-transform, localStorage, geolocation, web workers, trackpad swipe events, native JSON, cross-site HTTP requests, text API for canvas, defer attribute for the script element and TraceMonkey for better JS performance!

Google container data center tour (on YouTube). 45,000 servers in 45 shipping containers, along with some serious looking plumbing.

2008

Zeppelin 101 in 5 mins (via) Ribot videoed my five minute lightning talk on Zeppelins at last night’s Skillswap Brighton.

Videos from FOWA 2008. The Carsonified team have a scarily fast turnaround on the videos from this year’s Future of Web Apps. Most of yesterday’s talks are already available to watch online, as a full talk or the edited highlight reel.

OSCON in 37 minutes. 45 OSCON talks summarised by their presenters in just 37 minutes, compiled by Gregg Pollack. I get to rant about OpenID for a minute at 27:22.

Video speech matching on TheyWorkForYou.com. Launched this morning at BarCamp London by Matthew Somerville—TheyWorkForYou now has video from BBC Parliament but they need your help matching it exactly to their transcripts from Hansard. Neat example of a game that helps process large amounts of data.

Video on Flickr! There’s a 90 second length limit, because “... Flickr is all about sharing photos that you yourself have taken. Video will be no different and so what quickly bubbled up was the idea of long photos, of capturing slices of life to share.”

The Dark Side Of The Moon (via) Robert O’Callahan believes that Moonlight is a strategic mistake, because it gives credibility to Microsoft’s entry to a new market which they will use to “keep the competition on a treadmill”; Moonlight can also never be entirely free due to the need for a proprietary codec (VC-1) available only as a binary blob.

2007

HTML5 Media Support in WebKit. WebKit continues to lead the pack when it comes to trying out new HTML5 proposals. The new audio and video elements make embedding media easy, and provide a neat listener API for hooking in to “playback ended” events.

Hello Revver.com 2.0. Revver, one of the more established video startups, have launched their new version which is powered by Django.

Videos tagged ’hd’ on Vimeo. Vimeo are now hosting HD videos. Worth playing full screen—I had no idea Flash video was capable of that kind of quality. The speed of loading is pretty astonishing; I get no delay at all, making this essentially TV quality video on demand.

H.264 support coming to the Flash player. It looks like this is a response to the higher video quality offered by Silverlight. I wonder if YouTube knew about this when they started transcoding their videos to H.264 for the Apple TV and iPhone.

Binary marble adding machine. Watch the video.

So that was Oxford Geek Night 2. Nat’s writeup, including video of the local news coverage (in which I look like a total dork).