Saturday, 29th April 2023

MLC LLM (via) From MLC, the team that gave us Web LLM and Web Stable Diffusion. “MLC LLM is a universal solution that allows any language model to be deployed natively on a diverse set of hardware backends and native applications”. I installed their iPhone demo from TestFlight this morning and it does indeed provide an offline LLM that runs on my phone. It’s reasonably capable—the underlying model for the app is vicuna-v1-7b, a LLaMA derivative.

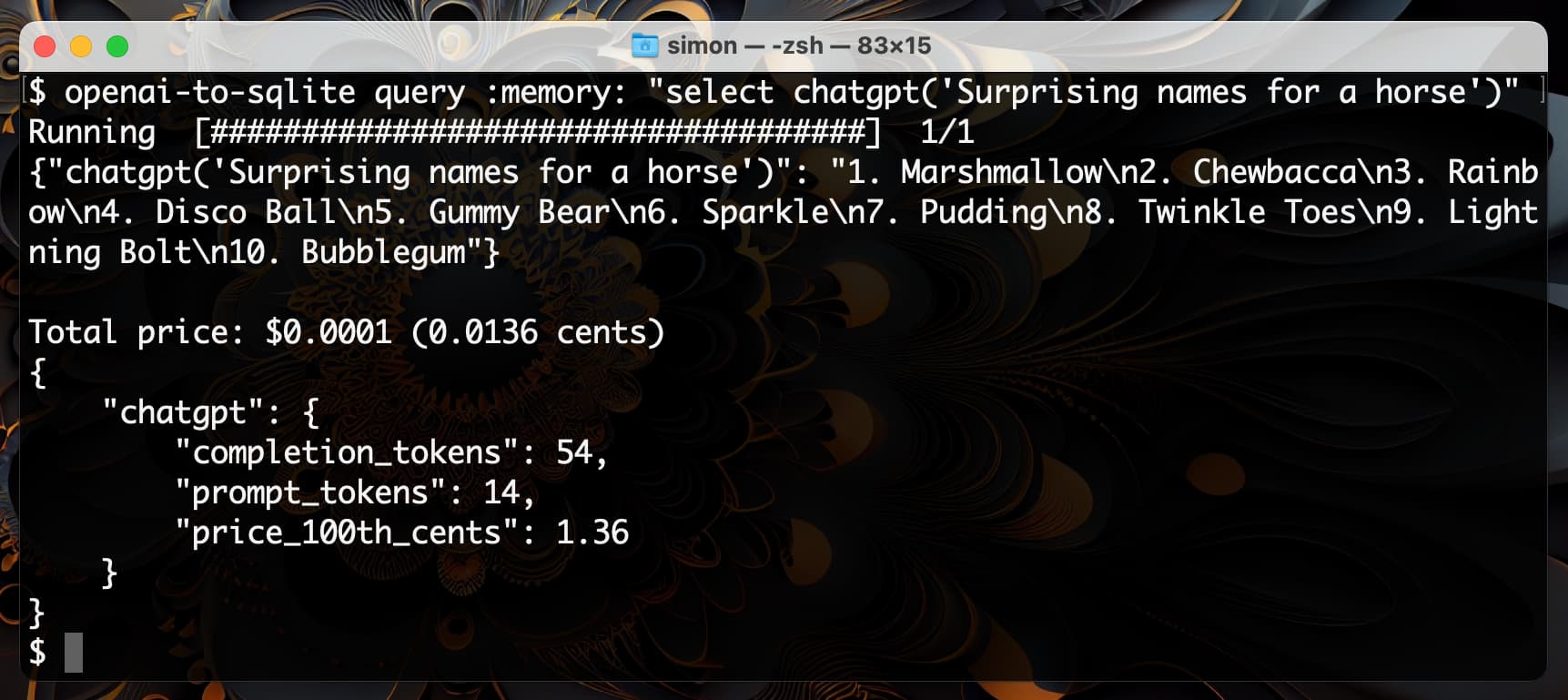

Enriching data with GPT3.5 and SQLite SQL functions

I shipped openai-to-sqlite 0.3 yesterday with a fun new feature: you can now use the command-line tool to enrich data in a SQLite database by running values through an OpenAI model and saving the results, all in a single SQL query.

[... 1,219 words]MRSK. A new open source web application deployment tool from 37signals, developed to help migrate their Hey webmail app out of the cloud and onto their own managed hardware. The key feature is one that I care about deeply: it enables zero-downtime deploys by running all traffic through a Traefik reverse proxy in a way that allows requests to be paused while a new deployment is going out—so end users get a few seconds delay on their HTTP requests before being served by the replaced application.