December 2023

69 posts: 7 entries, 26 links, 12 quotes, 24 beats

Dec. 1, 2023

So something everybody I think pretty much agrees on, including Sam Altman, including Yann LeCun, is LLMs aren't going to make it. The current LLMs are not a path to ASI. They're getting more and more expensive, they're getting more and more slow, and the more we use them, the more we realize their limitations.

We're also getting better at taking advantage of them, and they're super cool and helpful, but they appear to be behaving as extremely flexible, fuzzy, compressed search engines, which when you have enough data that's kind of compressed into the weights, turns out to be an amazingly powerful operation to have at your disposal.

[...] And the thing you can really see missing here is this planning piece, right? So if you try to get an LLM to solve fairly simple graph coloring problems or fairly simple stacking problems, things that require backtracking and trying things and stuff, unless it's something pretty similar in its training, they just fail terribly.

[...] So that's the theory about what something like Q* might be, or just in general, how do we get past this current constraint that we have?

Seamless Communication (via) A new “family of AI research models” from Meta AI for speech and text translation. The live demo is particularly worth trying—you can record a short webcam video of yourself speaking and get back the same video with your speech translated into another language.

The key to it is the new SeamlessM4T v2 model, which supports 101 languages for speech input, 96 languages for text input/output and 35 languages for speech output. SeamlessM4T-Large v2 is a 9GB file, available on Hugging Face.

Also in this release: SeamlessExpressive, which “captures certain underexplored aspects of prosody such as speech rate and pauses”—effectively maintaining things like expressed enthusiasm across languages.

Plus SeamlessStreaming, “a model that can deliver speech and text translations with around two seconds of latency”.

Write shaders for the Vegas sphere (via) Alexandre Devaux built this phenomenal three.js / WebGL demo, which displays a rotating flyover of the Vegas Sphere and lets you directly edit shader code to render your own animations on it and see what they would look like. The Hacker News thread includes dozens of examples of scripts you can paste in.

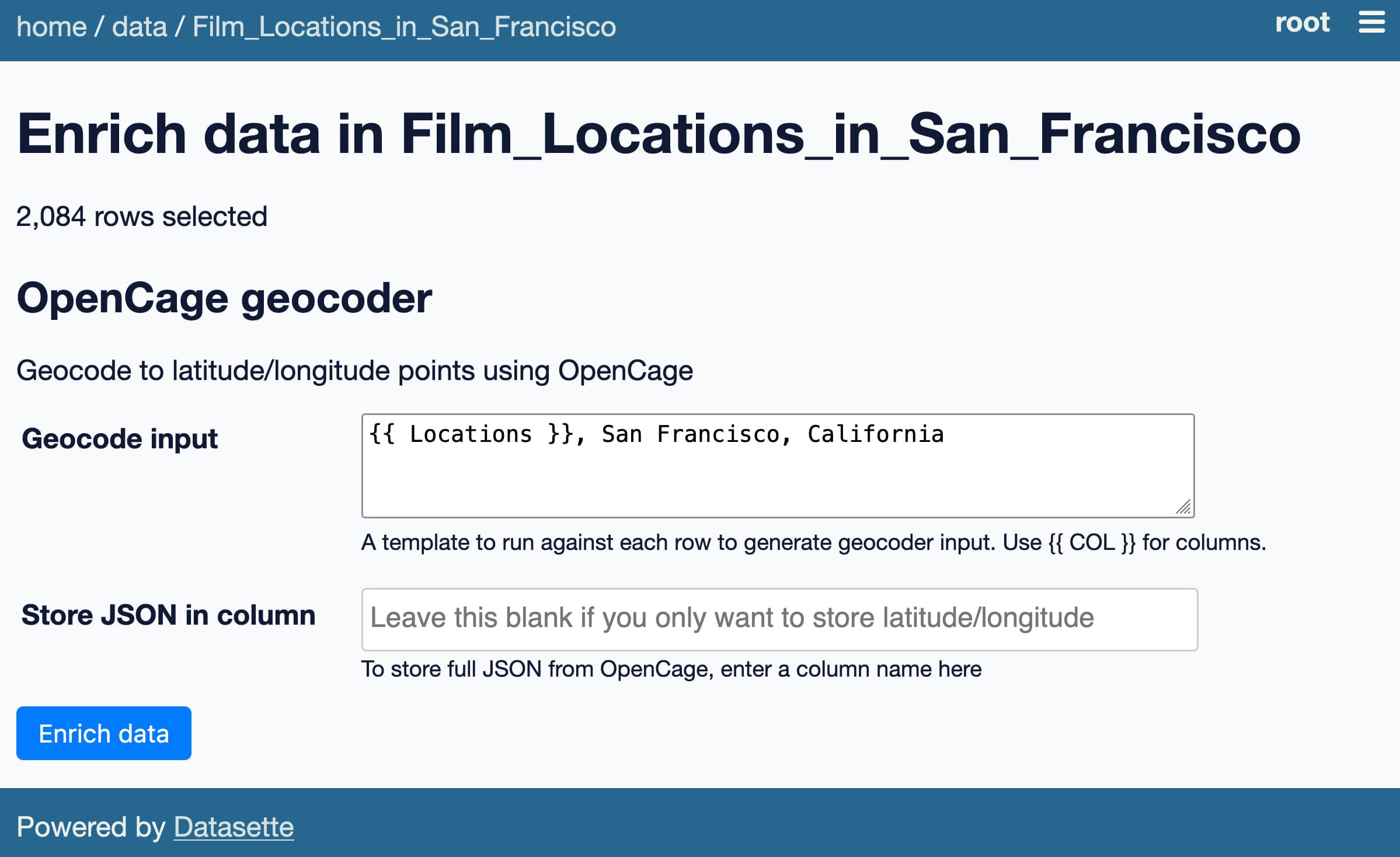

Datasette Enrichments: a new plugin framework for augmenting your data

Today I’m releasing datasette-enrichments, a new feature for Datasette which provides a framework for applying “enrichments” that can augment your data.

[... 1,202 words]

Dec. 4, 2023

LLM Visualization. Brendan Bycroft’s beautifully crafted interactive explanation of the transformers architecture—that universal but confusing model diagram, only here you can step through and see a representation of the flurry of matrix algebra that occurs every time you get a Large Language Model to generate the next token.

Dec. 5, 2023

Spider-Man: Across the Spider-Verse screenplay (PDF) (via) Phil Lord shared this on Twitter yesterday—the final screenplay for Spider-Man: Across the Spider-Verse. It’s a really fun read.

A calculator has a well-defined, well-scoped set of use cases, a well-defined, well-scoped user interface, and a set of well-understood and expected behaviors that occur in response to manipulations of that interface.

Large language models, when used to drive chatbots or similar interactive text-generation systems, have none of those qualities. They have an open-ended set of unspecified use cases.

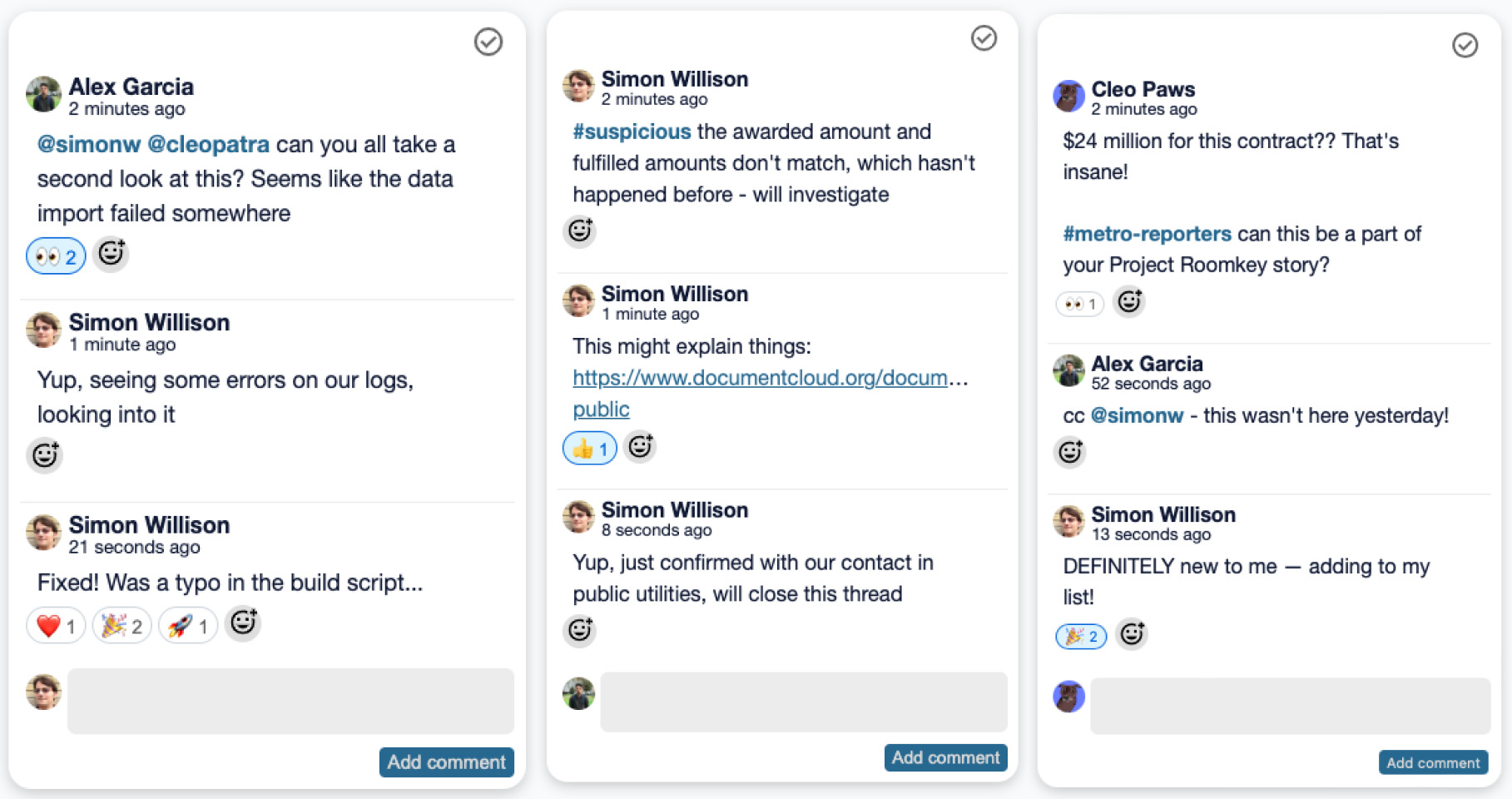

Simon Willison (Part Two): How Datasette Helps With Investigative Reporting. The second part of my Newsroom Robots podcast conversation with Nikita Roy. This episode includes my best audio answer yet to the “what is Datasette?” question, plus notes on how to use LLMs in journalism despite their propensity to make things up.

GPT and other large language models are aesthetic instruments rather than epistemological ones. Imagine a weird, unholy synthesizer whose buttons sample textual information, style, and semantics. Such a thing is compelling not because it offers answers in the form of text, but because it makes it possible to play text—all the text, almost—like an instrument.

AI and Trust. Barnstormer of an essay by Bruce Schneier about AI and trust. It’s worth spending some time with this—it’s hard to extract the highlights since there are so many of them.

A key idea is that we are predisposed to trust AI chat interfaces because they imitate humans, which means we are highly susceptible to profit-seeking biases baked into them.

Bruce suggests that what’s needed is public models, backed by government funds: “A public model is a model built by the public for the public. It requires political accountability, not just market accountability.”

Dec. 6, 2023

Ice Cubes GPT-4 prompts. The Ice Cubes open source Mastodon app recently grew a very good "describe this image" feature to help people add alt text to their images. I had a dig around in their repo and it turns out they're using GPT-4 Vision for this (and regular GPT-4 for other features), passing the image with this prompt:

What’s in this image? Be brief, it's for image alt description on a social network. Don't write in the first person.
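
For illustration, here is a rough Python sketch of how a prompt like this might be packaged alongside an image for a vision-capable chat model. This is not the Ice Cubes code: the `build_alt_text_request` helper is hypothetical, and the model name, token cap and payload shape are assumptions based on OpenAI's Chat Completions message format.

```python
import base64

# The prompt quoted above, verbatim.
ALT_TEXT_PROMPT = (
    "What’s in this image? Be brief, it's for image alt description "
    "on a social network. Don't write in the first person."
)


def build_alt_text_request(image_bytes: bytes, mime_type: str = "image/jpeg") -> dict:
    """Build a request body pairing the prompt with a base64-encoded image.

    Hypothetical helper: it only assembles a dictionary and makes no network call.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "gpt-4-vision-preview",  # assumed model name
        "max_tokens": 200,  # assumed cap, chosen to keep alt text short
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": ALT_TEXT_PROMPT},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }
```

The resulting dictionary could then be serialized as JSON and sent to whichever endpoint hosts the model.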

Long context prompting for Claude 2.1. Claude 2.1 has a 200,000 token context, enough for around 500 pages of text. Convincing it to answer a question based on a single sentence buried deep within that content can be difficult, but Anthropic found that adding “Assistant: Here is the most relevant sentence in the context:” to the end of the prompt was enough to raise Claude 2.1’s score from 27% to 98% on their evaluation.
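
A minimal sketch of the trick in Python, assuming the plain-text Human/Assistant prompt format. The `build_long_context_prompt` helper and the `<document>` wrapper are hypothetical choices for illustration; only the prefilled sentence comes from the description above.

```python
# The sentence placed at the start of the Assistant turn.
PREFILL = "Here is the most relevant sentence in the context:"


def build_long_context_prompt(document: str, question: str) -> str:
    """Assemble a completion prompt whose Assistant turn is already started.

    Because the prompt ends mid-answer, the model continues by quoting a
    sentence from the document before going on to answer the question.
    """
    return (
        f"\n\nHuman: <document>\n{document}\n</document>\n\n"
        f"{question}"
        f"\n\nAssistant: {PREFILL}"
    )
```

The string returned here would be sent as the prompt to a text-completion endpoint, with the model picking up right after the colon.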

Dec. 7, 2023

SVG Tutorial: Learn SVG through 25 examples (via) Hunor Márton Borbély published this fantastic advent calendar of tutorials for learning SVG, from the basics up to advanced concepts like animation and interactivity.

Dec. 8, 2023

We like to assume that automation technology will maintain or increase wage levels for a few skilled supervisors. But in the long-term skilled automation supervisors also tend to earn less.

Here's an example: In 1801 the Jacquard loom was invented, which automated silkweaving with punchcards. Around 1800, a manual weaver could earn 30 shillings/week. By the 1830s the same weaver would only earn around 5s/week. A Jacquard operator earned 15s/week, but he was also 12x more productive.

The Jacquard operator upskilled and became an automation supervisor, but their wage still dropped. For manual weavers the wages dropped even more. If we believe assistive AI will deliver unseen productivity gains, we can assume that wage erosion will also be unprecedented.
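
The arithmetic in that example can be checked with a few lines of Python. The figures are the ones quoted above; reading "12x more productive" as one operator producing twelve manual weavers' worth of output is an assumption made for illustration, as are the variable names.

```python
manual_wage_1800 = 30.0     # shillings/week, manual weaver around 1800
manual_wage_1830s = 5.0     # shillings/week, same weaver by the 1830s
operator_wage = 15.0        # shillings/week, Jacquard operator
productivity_multiple = 12  # assumed: operator output relative to one manual weaver


def percent_drop(before: float, after: float) -> float:
    """Percentage decrease from `before` to `after`."""
    return (before - after) / before * 100


manual_drop = percent_drop(manual_wage_1800, manual_wage_1830s)
operator_drop = percent_drop(manual_wage_1800, operator_wage)
# Pay per manual-weaver-equivalent of output under the assumption above.
wage_per_unit = operator_wage / productivity_multiple

print(f"Manual weaver wage drop: {manual_drop:.0f}%")
print(f"Operator wage relative to the 1800 manual wage: {operator_drop:.0f}% lower")
print(f"Operator pay per unit of output: {wage_per_unit:.2f} shillings")
```

Under that reading, the operator earns half the old weekly wage while the pay attached to each unit of output falls from 30 shillings to 1.25.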

Standard Webhooks 1.0.0 (via) A loose specification for implementing webhooks, put together by a technical steering committee that includes representatives from Zapier, Twilio and more.

These recommendations look great to me. Even if you don’t follow them precisely, this document is still worth reviewing any time you consider implementing webhooks. It covers a bunch of non-obvious challenges, such as responsible retry scheduling, thin-vs-thick hook payloads, authentication, custom HTTP headers and protecting against server-side request forgery (SSRF) attacks.
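
To make "responsible retry scheduling" a little more concrete, here is a small Python sketch of exponential backoff with full jitter. The `retry_delays` helper, base delay, cap and attempt count are illustrative assumptions, not values taken from the Standard Webhooks document.

```python
import random


def retry_delays(attempts=6, base=5.0, cap=3600.0, rng=None):
    """Return one delay in seconds per retry attempt.

    Each delay is drawn uniformly from [0, min(cap, base * 2**n)], so the
    ceiling doubles on every attempt up to the cap, and the random spread
    stops many failed deliveries from retrying at the same moment.
    """
    if rng is None:
        rng = random.Random()
    delays = []
    for n in range(attempts):
        ceiling = min(cap, base * (2 ** n))
        delays.append(rng.uniform(0, ceiling))
    return delays
```

A sender would sleep for each delay in turn before re-attempting delivery, and give up (or flag the endpoint as failing) once the list is exhausted.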

Weeknotes: datasette-enrichments, datasette-comments, sqlite-chronicle

I’ve mainly been working on Datasette Enrichments and continuing to explore the possibilities enabled by sqlite-chronicle.

[... 1,123 words]

Announcing Purple Llama: Towards open trust and safety in the new world of generative AI (via) New from Meta AI, Purple Llama is “an umbrella project featuring open trust and safety tools and evaluations meant to level the playing field for developers to responsibly deploy generative AI models and experiences”.

There are three components: a 27 page “Responsible Use Guide”, a new open model called Llama Guard and CyberSec Eval, “a set of cybersecurity safety evaluations benchmarks for LLMs”.

Disappointingly, despite this being an initiative around trustworthy LLM development, prompt injection is mentioned exactly once, in the Responsible Use Guide, and even there it is incorrectly described as involving “attempts to circumvent content restrictions”!

The Llama Guard model is interesting: it’s a fine-tune of Llama 2 7B designed to help spot “toxic” content in input or output from a model, effectively an openly released alternative to OpenAI’s moderation API endpoint.

The CyberSec Eval benchmarks focus on two concepts: generation of insecure code, and preventing models from assisting attackers in generating new attacks. I don’t think either of those is anywhere near as important as prompt injection mitigation.

My hunch is that the reason prompt injection didn’t get much coverage in this is that, like the rest of us, Meta’s AI research teams have no idea how to fix it yet!

Create a culture that favors begging forgiveness (and reversing decisions quickly) rather than asking permission. Invest in infrastructure such as progressive / cancellable rollouts. Use asynchronous written docs to get people aligned (“comment in this doc by Friday if you disagree with the plan”) rather than meetings (“we’ll get approval at the next weekly review meeting”).

Dec. 9, 2023

3D Gaussian Splatting—Why Graphics Will Never Be The Same (via) Gaussian splatting is an intriguing new approach to 3D computer graphics that’s getting a lot of buzz at the moment. This 2m11s YouTube video is the best condensed explanation I’ve seen of the key idea.