Wednesday, 5th February 2025

AI-generated slop is already in your public library. US libraries that use the Hoopla system to offer ebooks to their patrons sign agreements where they pay a license fee for anything selected by one of their members that's in the Hoopla catalog.

The Hoopla catalog is increasingly filling up with junk AI slop ebooks like "Fatty Liver Diet Cookbook: 2000 Days of Simple and Flavorful Recipes for a Revitalized Liver", which then cost libraries money if someone checks them out.

Apparently librarians already have a term for this kind of low-quality, low-effort content that predates it being written by LLMs: vendor slurry.

Libraries stand against censorship, which makes this a difficult issue to address by removing those listings.

Sarah Lamdan, deputy director of the American Library Association, says:

If library visitors choose to read AI eBooks, they should do so with the knowledge that the books are AI-generated.

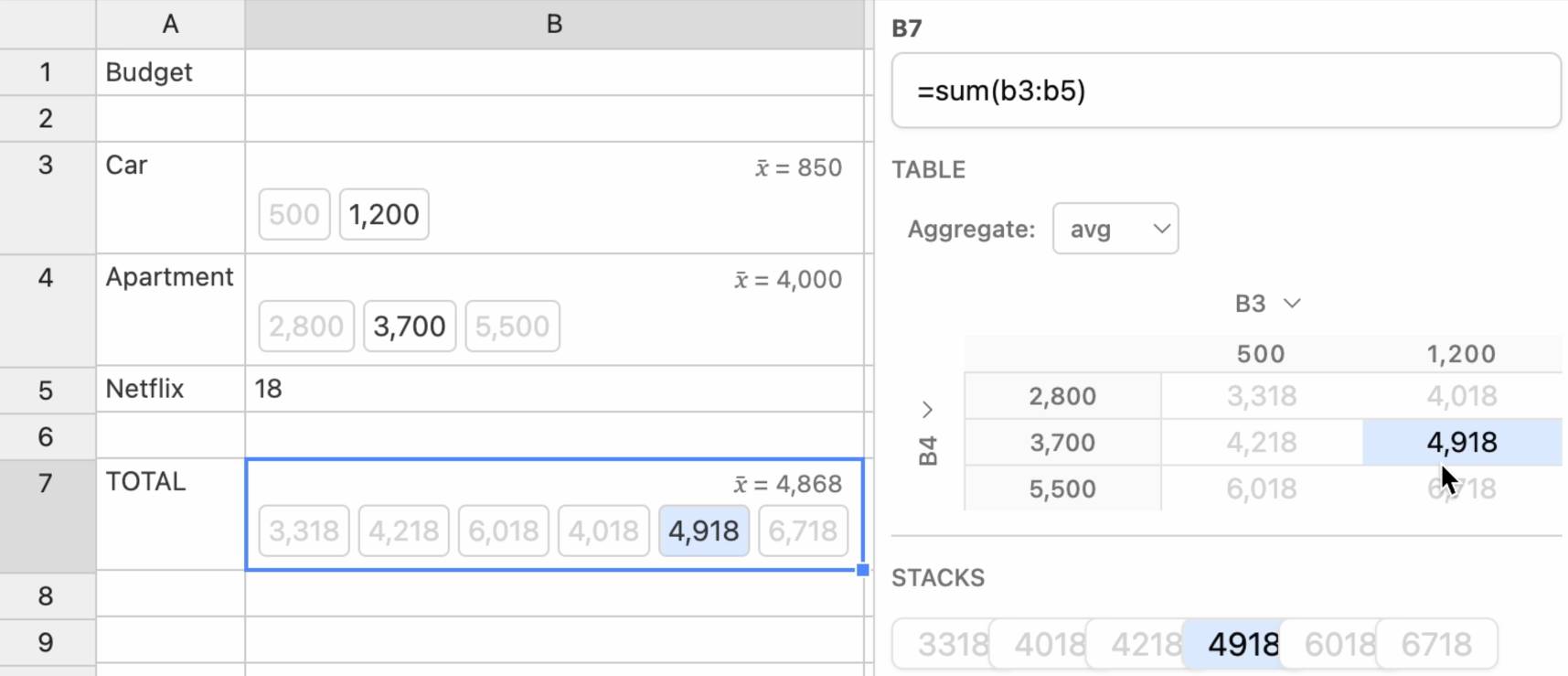

Ambsheets: Spreadsheets for exploring scenarios. Delightful UI experiment by Alex Warth and Geoffrey Litt at Ink & Switch, exploring the idea of a spreadsheet with cells that can handle multiple values at once, which they call "amb" (for "ambiguous") values. A single sheet can then be used to model multiple scenarios.

Here the cell for "Car" contains {500, 1200} and the cell for "Apartment" contains {2800, 3700, 5500}, resulting in a "Total" cell with six different values. Hovering over a calculated value highlights its source values and a side panel shows a table of calculated results against those different combinations.
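To make the "six different values" concrete, here is a minimal Python sketch that treats each amb value as a plain list and enumerates every combination. This is only an illustration of the arithmetic, not how Ambsheets is implemented; the variable names are made up for the example.

```python
from itertools import product

# Amb values from the example, modelled as plain lists (an assumption for illustration)
car = [500, 1200]
apartment = [2800, 3700, 5500]

# Every pairing of source values is one scenario for the "Total" cell
scenarios = [
    {"car": c, "apartment": a, "total": c + a}
    for c, a in product(car, apartment)
]

for row in scenarios:
    print(row)
```

Two car values times three apartment values gives the six totals, and each row keeps track of which source values produced it, which is roughly what the side panel table displays.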

Always interesting to see neat ideas like this presented on top of UIs that haven't had a significant upgrade in a very long time.

o3-mini is really good at writing internal documentation. I wanted to refresh my knowledge of how the Datasette permissions system works today. I already have extensive hand-written documentation for that, but I thought it would be interesting to see if I could derive any insights from running an LLM against the codebase.

o3-mini has an input limit of 200,000 tokens. I used LLM and my files-to-prompt tool to generate the documentation like this:

cd /tmp
git clone https://github.com/simonw/datasette
cd datasette
files-to-prompt datasette -e py -c | \
  llm -m o3-mini -s \
  'write extensive documentation for how the permissions system works, as markdown'

The files-to-prompt command is fed the datasette subdirectory, which contains just the source code for the application - omitting tests (in tests/) and documentation (in docs/).

The -e py option causes it to only include files with a .py extension - skipping all of the HTML and JavaScript files in that hierarchy.

The -c option causes it to output Claude's XML-ish format - a format that works great with other LLMs too.

You can see the output of that command in this Gist.
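For readers who want a feel for what the -e py and -c options do conceptually, here is a rough Python sketch. It is not the files-to-prompt implementation: the function name is hypothetical, and the tag names (documents, document, source, document_content) are an assumption about what the XML-ish layout looks like.

```python
from pathlib import Path


def files_to_xml_prompt(root, extension=".py"):
    """Concatenate matching files under root into one XML-ish prompt string.

    Hypothetical helper: the tag layout is an assumption, not the tool's
    guaranteed output format.
    """
    parts = ["<documents>"]
    # Only keep files with the requested extension, in a stable order
    paths = sorted(p for p in Path(root).rglob(f"*{extension}") if p.is_file())
    for index, path in enumerate(paths, start=1):
        parts.append(f'<document index="{index}">')
        parts.append(f"<source>{path}</source>")
        parts.append("<document_content>")
        parts.append(path.read_text(encoding="utf-8", errors="replace"))
        parts.append("</document_content>")
        parts.append("</document>")
    parts.append("</documents>")
    return "\n".join(parts)
```

Calling files_to_xml_prompt("datasette") from the repository root would return a single string that could be piped to a model. Labelling each file with its path is what lets the model refer back to specific modules in its answer.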

Then I pipe that result into LLM, requesting the o3-mini OpenAI model and passing the following system prompt:

write extensive documentation for how the permissions system works, as markdown

Specifically requesting Markdown is important.

The prompt used 99,348 input tokens and produced 3,118 output tokens (320 of those were invisible reasoning tokens). That's a cost of 12.3 cents.
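That figure can be reproduced with a few lines of Python. The per-token prices below are assumptions (they are not stated above): $1.10 per million input tokens and $4.40 per million output tokens, with reasoning tokens billed as part of the output count.

```python
# Assumed o3-mini prices in US dollars per million tokens (not from the text above)
INPUT_PRICE_PER_MILLION = 1.10
OUTPUT_PRICE_PER_MILLION = 4.40


def prompt_cost_cents(input_tokens, output_tokens):
    """Return the cost of one prompt in US cents under the assumed prices."""
    dollars = (
        input_tokens * INPUT_PRICE_PER_MILLION
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    ) / 1_000_000
    return dollars * 100


# The 3,118 output tokens already include the 320 reasoning tokens
cost = prompt_cost_cents(99_348, 3_118)
print(f"{cost:.1f} cents")
```

Under those assumed prices the input side accounts for roughly 10.9 cents and the output for about 1.4 cents, so almost all of the cost is the codebase itself.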

Honestly, the results are fantastic. I had to double-check that I hadn't accidentally fed in the documentation by mistake.

(It's possible that the model is picking up additional information about Datasette from its training set, but I've seen similarly high-quality results from other, newer libraries so I don't think that's a significant factor.)

In this case I already had extensive written documentation of my own, but this was still a useful refresher to help confirm that the code matched my mental model of how everything works.

Documentation of project internals as a category is notorious for going out of date. Having tricks like this to derive usable how-it-works documentation from existing codebases in just a few seconds and at a cost of a few cents is wildly valuable.

Gemini 2.0 is now available to everyone. Big new Gemini 2.0 releases today:

- Gemini 2.0 Pro (Experimental) is Google's "best model yet for coding performance and complex prompts" - currently available as a free preview.

- Gemini 2.0 Flash is now generally available.

- Gemini 2.0 Flash-Lite looks particularly interesting:

We’ve gotten a lot of positive feedback on the price and speed of 1.5 Flash. We wanted to keep improving quality, while still maintaining cost and speed. So today, we’re introducing 2.0 Flash-Lite, a new model that has better quality than 1.5 Flash, at the same speed and cost. It outperforms 1.5 Flash on the majority of benchmarks.

That means Gemini 2.0 Flash-Lite is priced at 7.5c/million input tokens and 30c/million output tokens - half the price of OpenAI's GPT-4o mini (15c/60c).

Gemini 2.0 Flash isn't much more expensive: 10c/million for text/image input, 70c/million for audio input, 40c/million for output. Again, cheaper than GPT-4o mini.
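As a quick sanity check on those comparisons, this small Python sketch computes each Gemini price as a fraction of the GPT-4o mini price, using only the text-input and output numbers quoted above. The dictionary keys are labels for this example, not API model IDs.

```python
# Prices in US cents per million tokens: (text input, output), as quoted above
PRICES = {
    "gemini-2.0-flash-lite": (7.5, 30),
    "gemini-2.0-flash": (10, 40),
    "gpt-4o-mini": (15, 60),
}


def relative_to(baseline, model):
    """Return (input ratio, output ratio) of model prices against baseline prices."""
    base_in, base_out = PRICES[baseline]
    model_in, model_out = PRICES[model]
    return model_in / base_in, model_out / base_out


for name in ("gemini-2.0-flash-lite", "gemini-2.0-flash"):
    input_ratio, output_ratio = relative_to("gpt-4o-mini", name)
    print(f"{name}: {input_ratio:.0%} of input price, {output_ratio:.0%} of output price")
```

Flash-Lite comes out at exactly half on both sides, and Flash at about two thirds.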

I pushed a new LLM plugin release, llm-gemini 0.10, adding support for the three new models:

llm install -U llm-gemini
llm keys set gemini
# paste API key here
llm -m gemini-2.0-flash "impress me"
llm -m gemini-2.0-flash-lite-preview-02-05 "impress me"
llm -m gemini-2.0-pro-exp-02-05 "impress me"

Here's the output for those three prompts.

I ran "Generate an SVG of a pelican riding a bicycle" through the three new models. Here are the results, cheapest to most expensive:

gemini-2.0-flash-lite-preview-02-05

gemini-2.0-flash

gemini-2.0-pro-exp-02-05

I also ran the same prompt I tried with o3-mini the other day:

cd /tmp
git clone https://github.com/simonw/datasette
cd datasette
files-to-prompt datasette -e py -c | \
  llm -m gemini-2.0-pro-exp-02-05 \
  -s 'write extensive documentation for how the permissions system works, as markdown' \
  -o max_output_tokens 10000

Here's the result from that - you can compare that to o3-mini's result here.

S1: The $6 R1 Competitor? Tim Kellogg shares his notes on a new paper, s1: Simple test-time scaling, which describes an inference-scaling model fine-tuned on top of Qwen2.5-32B-Instruct for just $6 - the cost for 26 minutes on 16 NVIDIA H100 GPUs.

Tim highlights the most exciting result:

After sifting their dataset of 56K examples down to just the best 1K, they found that the core 1K is all that's needed to achieve o1-preview performance on a 32B model.

The paper describes a technique called "Budget forcing":

To enforce a minimum, we suppress the generation of the end-of-thinking token delimiter and optionally append the string “Wait” to the model’s current reasoning trace to encourage the model to reflect on its current generation

That's the same trick Theia Vogel described a few weeks ago.
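The minimum-budget half of that idea can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the paper's code: the "</think>" delimiter, the next_token callback and the toy_model function are all hypothetical stand-ins for a real model's sampler, and the budget is counted in list items rather than real tokens.

```python
END_OF_THINKING = "</think>"  # hypothetical end-of-thinking delimiter


def budget_forced_thinking(next_token, min_tokens, max_tokens=1000):
    """Collect a reasoning trace, refusing to stop before min_tokens items.

    next_token(trace) is a hypothetical callback that returns the next token
    given the trace so far.
    """
    trace = []
    while len(trace) < max_tokens:
        token = next_token(trace)
        if token == END_OF_THINKING:
            if len(trace) >= min_tokens:
                break  # minimum budget met, allow thinking to end
            # Suppress the delimiter and append "Wait" to encourage more reasoning
            trace.append("Wait")
            continue
        trace.append(token)
    return trace


def toy_model(trace):
    """Toy stand-in for a model: tries to stop after every third position."""
    return END_OF_THINKING if len(trace) % 3 == 2 else "step"


result = budget_forced_thinking(toy_model, min_tokens=5)
print(result)
```

Here the toy model tries to stop after two steps, gets a "Wait" instead, and is only allowed to finish once the trace has reached five items.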

Here's the s1-32B model on Hugging Face. I found a GGUF version of it at brittlewis12/s1-32B-GGUF, which I ran using Ollama like so:

ollama run hf.co/brittlewis12/s1-32B-GGUF:Q4_0

I also found those 1,000 samples on Hugging Face in the simplescaling/s1K data repository there.

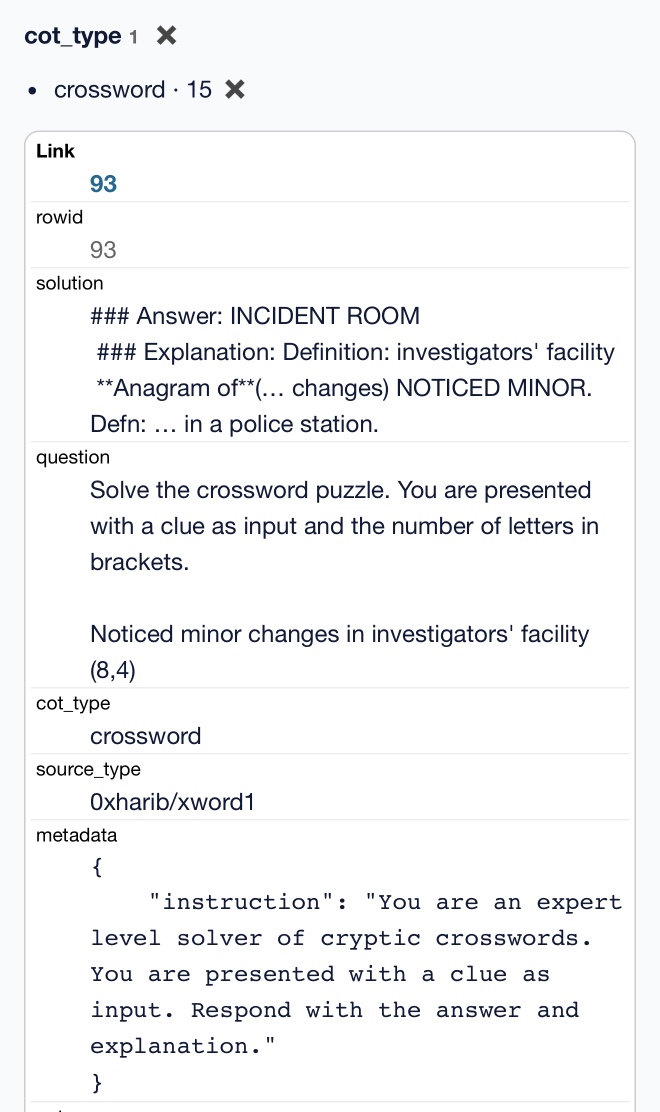

I used DuckDB to convert the parquet file to CSV (and turn one VARCHAR[] column into JSON):

COPY (
  SELECT
    solution,
    question,
    cot_type,
    source_type,
    metadata,
    cot,
    json_array(thinking_trajectories) as thinking_trajectories,
    attempt
  FROM 's1k-00001.parquet'
) TO 'output.csv' (HEADER, DELIMITER ',');

Then I loaded that CSV into sqlite-utils so I could use the convert command to turn a Python data structure into JSON using json.dumps() and eval():

# Load into SQLite
sqlite-utils insert s1k.db s1k output.csv --csv
# Fix that column
sqlite-utils convert s1k.db s1k metadata 'json.dumps(eval(value))' --import json
# Dump that back out to CSV
sqlite-utils rows s1k.db s1k --csv > s1k.csv
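The convert step applies a Python expression to every value in the column. Here is a standalone sketch of that transformation on a single value. It swaps eval() for ast.literal_eval(), which only accepts Python literals and is a safer choice when the data is not fully trusted; the sample string is made up for illustration and is not taken from the s1K dataset.

```python
import ast
import json


def python_repr_to_json(value):
    """Convert a string holding a Python literal (dict, list, ...) into JSON text."""
    return json.dumps(ast.literal_eval(value))


# Hypothetical metadata value: single quotes and True are valid Python but not valid JSON
sample = "{'source': 'example', 'tags': ['a', 'b'], 'verified': True}"
converted = python_repr_to_json(sample)
print(converted)
```

The output uses double quotes and a lowercase true, so it can be parsed by SQLite's JSON functions or any other JSON parser.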

Here's that CSV in a Gist, which means I can load it into Datasette Lite.

It really is a tiny amount of training data. It's mostly math and science, but there are also 15 cryptic crossword examples.