Friday, 31st January 2025

The surprising way to save memory with BytesIO


Itamar Turner-Trauring explains that if you have a BytesIO object in Python, calling .read() on it will create a full copy of its contents, doubling the amount of memory used - but calling .getvalue() returns a bytes object that uses no additional memory, instead using copy-on-write.

.getbuffer() is another memory-efficient option, but it returns a memoryview, which has fewer methods than the bytes you get back from .getvalue() - it doesn't have .find(), for example.
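Here's a minimal sketch of the API difference between the two memory-efficient options, assuming CPython and a small made-up payload. It only illustrates the return types and available methods; it doesn't attempt to measure memory usage.

```python
import io

# Hypothetical payload, written into a fresh BytesIO
buf = io.BytesIO()
buf.write(b"hello world")

# .getvalue() returns a regular bytes object with the full bytes API
value = buf.getvalue()
position = value.find(b"world")

# .getbuffer() returns a memoryview over the internal buffer
view = buf.getbuffer()
has_find = hasattr(view, "find")  # memoryview has no .find()

# Release the view when done: BytesIO can't be resized while it is exported
view.release()
```

If you need bytes methods on data from a memoryview you can call bytes(view), but that makes a copy, which defeats the purpose.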

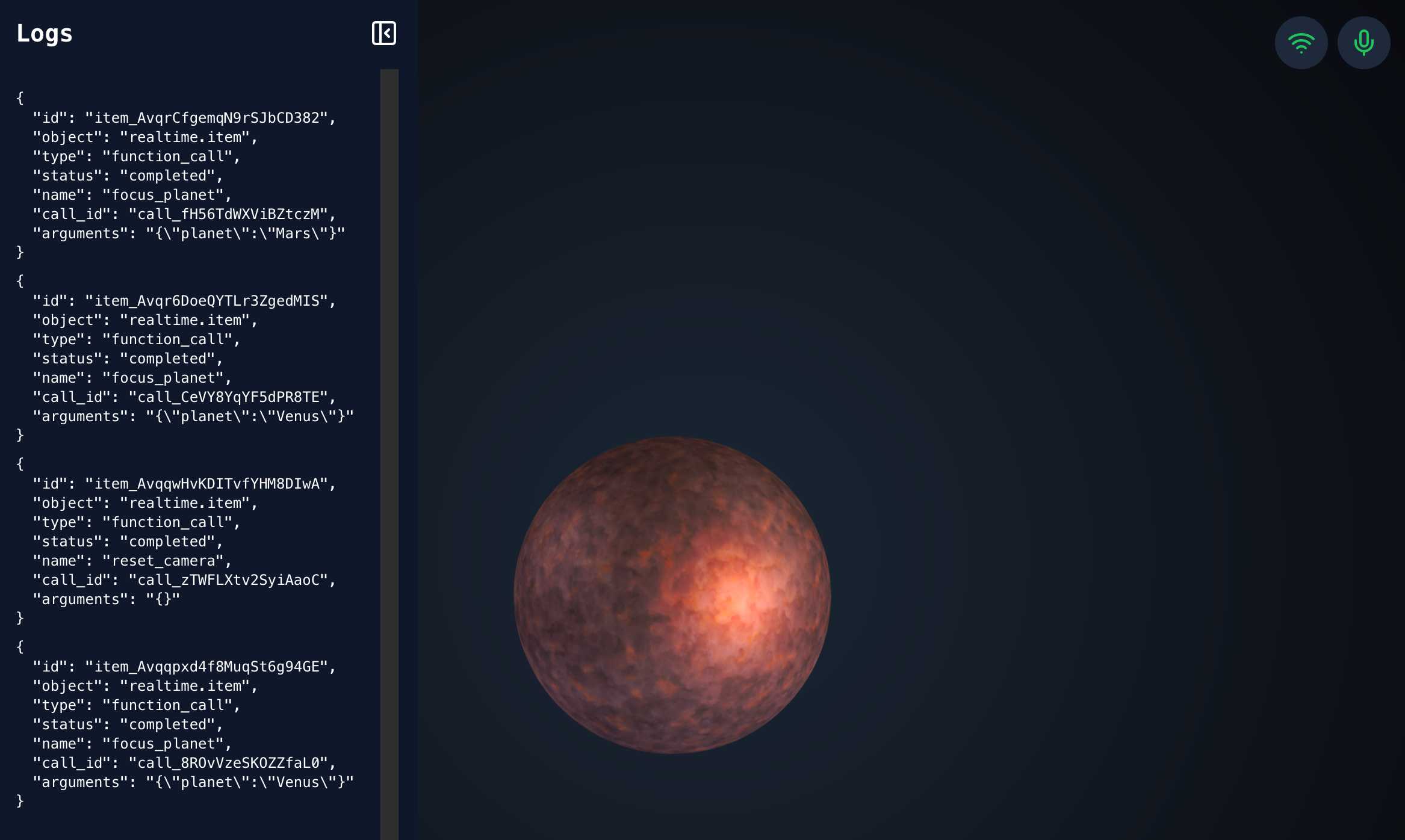

openai-realtime-solar-system. This was my favourite demo from OpenAI DevDay back in October - a voice-driven exploration of the solar system, developed by Katia Gil Guzman, where you could say things out loud like "show me Mars" and it would zoom around showing you different planetary bodies.

OpenAI finally released the code for it, now upgraded to use the new, easier-to-use WebRTC API they released in December.

I ran it like this, loading my OpenAI API key using llm keys get:

    cd /tmp
    git clone https://github.com/openai/openai-realtime-solar-system
    cd openai-realtime-solar-system
    npm install
    OPENAI_API_KEY="$(llm keys get openai)" npm run dev

You need to click on both the Wifi icon and the microphone icon before you can instruct it with your voice. Try "Show me Mars".

Latest black (25.1.0) adds a newline after docstring and before pass in an exception class.

I filed a bug report against Black when the latest release - 25.1.0 - reformatted the following code to add an ugly (to me) newline between the docstring and the pass:

    class ModelError(Exception):
        "Models can raise this error, which will be displayed to the user"
        pass

Black maintainer Jelle Zijlstra confirmed that this is intended behavior with respect to Black's 2025 stable style, but also helped me understand that the pass there is actually unnecessary so I can fix the aesthetics by removing that entirely.
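The reason the pass is unnecessary is that a docstring is itself a statement, so it's enough to make the class body valid. A quick sketch of the class with the pass removed:

```python
class ModelError(Exception):
    "Models can raise this error, which will be displayed to the user"


# The class still works as a normal exception and keeps its docstring
try:
    raise ModelError("something went wrong")
except ModelError as ex:
    message = str(ex)
```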

I'm linking to this issue because it's a neat example of how I like to include steps-to-reproduce using uvx to create one-liners you can paste into a terminal to see the bug that I'm reporting. In this case I shared the following:

Here's a way to see that happen using uvx. With the previous Black version:

    echo 'class ModelError(Exception):
        "Models can raise this error, which will be displayed to the user"
        pass' | uvx --with 'black==24.10.0' black -

This outputs:

    class ModelError(Exception):
        "Models can raise this error, which will be displayed to the user"
        pass
    All done! ✨ 🍰 ✨
    1 file left unchanged.

But if you bump to 25.1.0 this happens:

    echo 'class ModelError(Exception):
        "Models can raise this error, which will be displayed to the user"
        pass' | uvx --with 'black==25.1.0' black -

Output:

    class ModelError(Exception):
        "Models can raise this error, which will be displayed to the user"

        pass
    reformatted -
    All done! ✨ 🍰 ✨
    1 file reformatted.

Via David Szotten I learned that you can use uvx black@25.1.0 here instead.

OpenAI o3-mini, now available in LLM

OpenAI’s o3-mini is out today. As with other o-series models it’s a slightly difficult one to evaluate—we now need to decide if a prompt is best run using GPT-4o, o1, o3-mini or (if we have access) o1 Pro.

[... 748 words]