11th July 2025

moonshotai/Kimi-K2-Instruct (via) Colossal new open weights model release today from Moonshot AI, a two year old Chinese AI lab with a name inspired by Pink Floyd’s album The Dark Side of the Moon.

My HuggingFace storage calculator says the repository is 958.52 GB. It's a mixture-of-experts model with "32 billion activated parameters and 1 trillion total parameters", trained using the Muon optimizer as described in Moonshot's joint paper with UCLA Muon is Scalable for LLM Training.

I think this may be the largest ever open weights model? DeepSeek v3 is 671B.

I created an API key for Moonshot, added some dollars and ran a prompt against it using my LLM tool. First I added this to the extra-openai-models.yaml file:

- model_id: kimi-k2

model_name: kimi-k2-0711-preview

api_base: https://api.moonshot.ai/v1

api_key_name: moonshot

Then I set the API key:

llm keys set moonshot

# Paste key here

And ran a prompt:

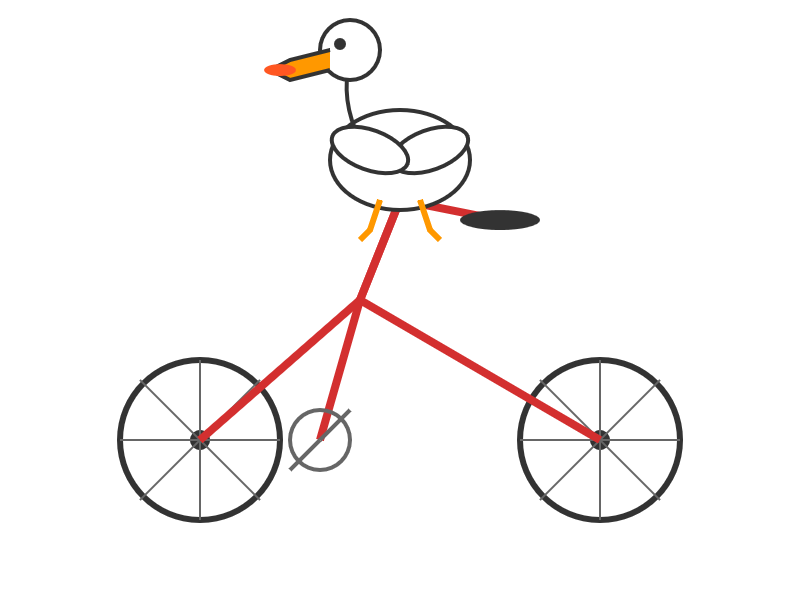

llm -m kimi-k2 "Generate an SVG of a pelican riding a bicycle" \

-o max_tokens 2000

(The default max tokens setting was too short.)

This is pretty good! The spokes are a nice touch. Full transcript here.

This one is open weights but not open source: they're using a modified MIT license with this non-OSI-compliant section tagged on at the end:

Our only modification part is that, if the Software (or any derivative works thereof) is used for any of your commercial products or services that have more than 100 million monthly active users, or more than 20 million US dollars (or equivalent in other currencies) in monthly revenue, you shall prominently display "Kimi K2" on the user interface of such product or service.

Update: MLX developer Awni Hannun reports:

The new Kimi K2 1T model (4-bit quant) runs on 2 512GB M3 Ultras with mlx-lm and mx.distributed.

1 trillion params, at a speed that's actually quite usable

Recent articles

- Two new Showboat tools: Chartroom and datasette-showboat - 17th February 2026

- Deep Blue - 15th February 2026

- The evolution of OpenAI's mission statement - 13th February 2026