Wednesday, 7th May 2025

astral-sh/ty (via) Astral have been working on this "extremely fast Python type checker and language server, written in Rust" quietly but in-the-open for a while now. Here's the first alpha public release - albeit not yet announced - as ty on PyPI (nice donated two-letter name!)

You can try it out via uvx like this - run the command in a folder full of Python code and see what comes back:

uvx ty check

I got zero errors for my recent, simple condense-json library and a ton of errors for my more mature sqlite-utils library - output here.

It really is fast:

cd /tmp

git clone https://github.com/simonw/sqlite-utils

cd sqlite-utils

time uvx ty check

Reports it running in around a tenth of a second (0.109 total wall time) using multiple CPU cores:

uvx ty check 0.18s user 0.07s system 228% cpu 0.109 total

Running time uvx mypy . in the same folder (both after first ensuring the underlying tools had been cached) took around 7x longer:

uvx mypy . 0.46s user 0.09s system 74% cpu 0.740 total

This isn't a fair comparison yet as ty still isn't feature complete in comparison to mypy.

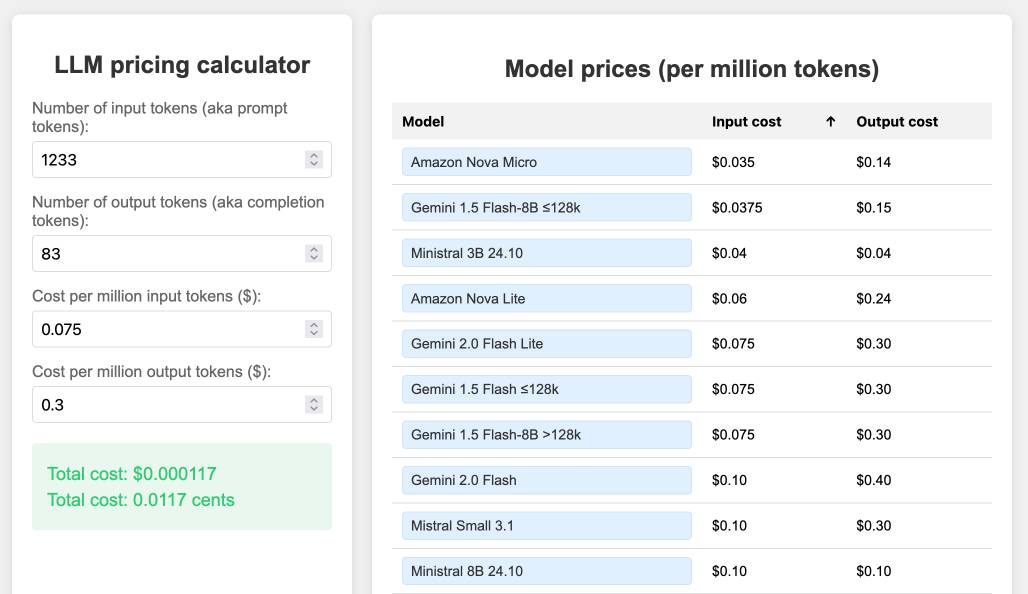

llm-prices.com.

I've been maintaining a simple LLM pricing calculator since October last year. I finally decided to split it out to its own domain name (previously it was hosted at tools.simonwillison.net/llm-prices), running on Cloudflare Pages.

The site runs out of my simonw/llm-prices GitHub repository. I ported the history of the old llm-prices.html file using a vibe-coded bash script that I forgot to save anywhere.

I rarely use AI-generated imagery in my own projects, but for this one I found an excellent reason to use GPT-4o image outputs... to generate the favicon! I dropped a screenshot of the site into ChatGPT (o4-mini-high in this case) and asked for the following:

design a bunch of options for favicons for this site in a single image, white background

![]()

I liked the top right one, so I cropped it into Pixelmator and made a 32x32 version. Here's what it looks like in my browser:

![]()

I added a new feature just now: the state of the calculator is now reflected in the #fragment-hash URL of the page, which means you can link to your previous calculations.

I implemented that feature using the new gemini-2.5-pro-preview-05-06, since that model boasts improved front-end coding abilities. It did a pretty great job - here's how I prompted it:

llm -m gemini-2.5-pro-preview-05-06 -f https://www.llm-prices.com/ -s 'modify this code so that the state of the page is reflected in the fragmenth hash URL - I want to capture the values filling out the form fields and also the current sort order of the table. These should be respected when the page first loads too. Update them using replaceHistory, no need to enable the back button.'

Here's the transcript and the commit updating the tool, plus an example link showing the new feature in action (and calculating the cost for that Gemini 2.5 Pro prompt at 16.8224 cents, after fixing the calculation.)

Medium is the new large. New model release from Mistral - this time closed source/proprietary. Mistral Medium claims strong benchmark scores similar to GPT-4o and Claude 3.7 Sonnet, but is priced at $0.40/million input and $2/million output - about the same price as GPT 4.1 Mini. For comparison, GPT-4o is $2.50/$10 and Claude 3.7 Sonnet is $3/$15.

The model is a vision LLM, accepting both images and text.

More interesting than the price is the deployment model. Mistral Medium may not be open weights but it is very much available for self-hosting:

Mistral Medium 3 can also be deployed on any cloud, including self-hosted environments of four GPUs and above.

Mistral's other announcement today is Le Chat Enterprise. This is a suite of tools that can integrate with your company's internal data and provide "agents" (these look similar to Claude Projects or OpenAI GPTs), again with the option to self-host.

Is there a new open weights model coming soon? This note tucked away at the bottom of the Mistral Medium 3 announcement seems to hint at that:

With the launches of Mistral Small in March and Mistral Medium today, it's no secret that we're working on something 'large' over the next few weeks. With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we're excited to 'open' up what's to come :)

I released llm-mistral 0.12 adding support for the new model.

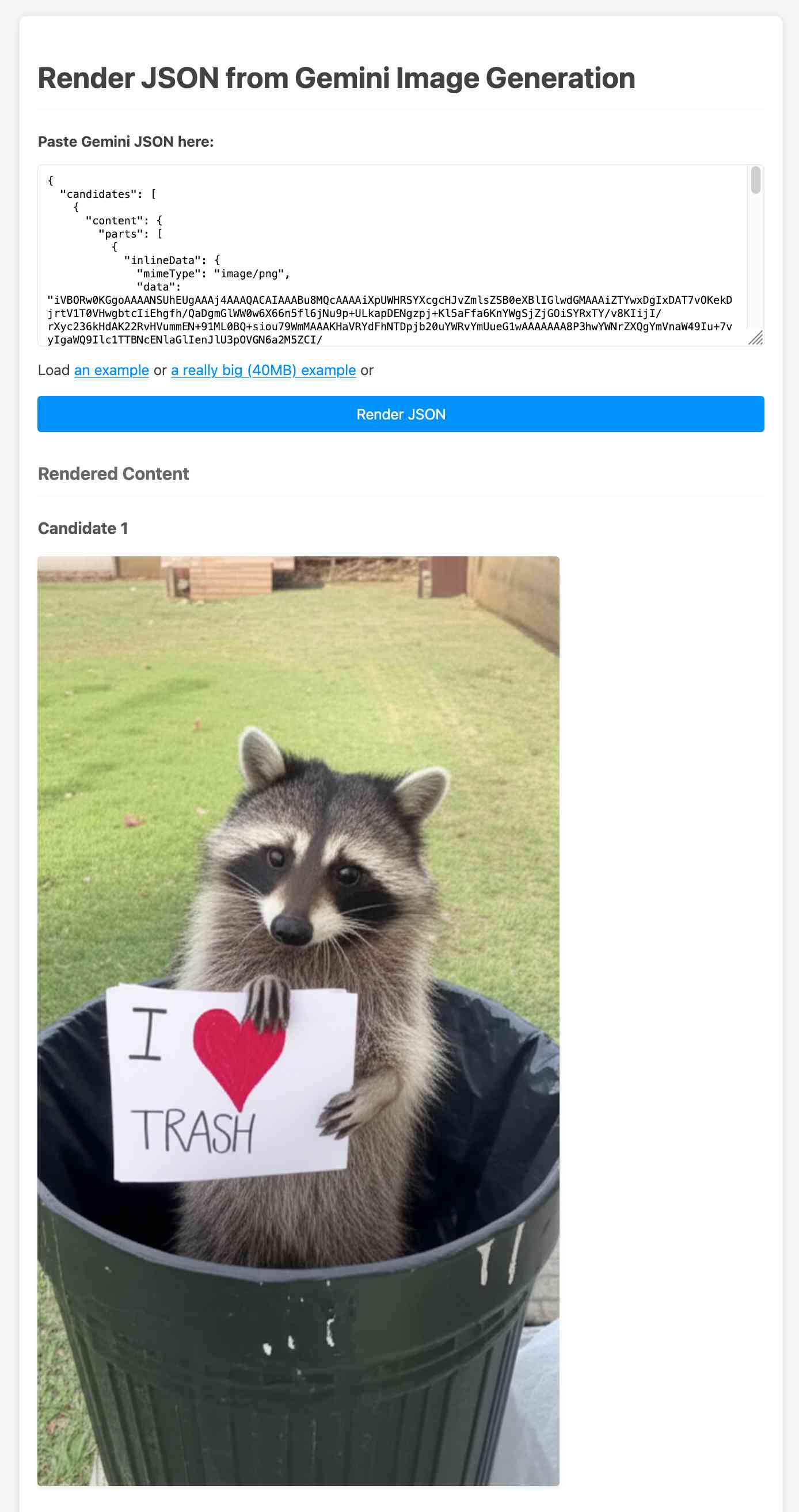

Create and edit images with Gemini 2.0 in preview (via) Gemini 2.0 Flash has had image generation capabilities for a while now, and they're now available via the paid Gemini API - at 3.9 cents per generated image.

According to the API documentation you need to use the new gemini-2.0-flash-preview-image-generation model ID and specify {"responseModalities":["TEXT","IMAGE"]} as part of your request.

Here's an example that calls the API using curl (and fetches a Gemini key from the llm keys get store):

curl -s -X POST \ "https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key=$(llm keys get gemini)" \ -H "Content-Type: application/json" \ -d '{ "contents": [{ "parts": [ {"text": "Photo of a raccoon in a trash can with a paw-written sign that says I love trash"} ] }], "generationConfig":{"responseModalities":["TEXT","IMAGE"]} }' > /tmp/raccoon.json

Here's the response. I got Gemini 2.5 Pro to vibe-code me a new debug tool for visualizing that JSON. If you visit that tool and click the "Load an example" link you'll see the result of the raccoon image visualized:

The other prompt I tried was this one:

Provide a vegetarian recipe for butter chicken but with chickpeas not chicken and include many inline illustrations along the way

The result of that one was a 41MB JSON file(!) containing 28 images - which presumably cost over a dollar since images are 3.9 cents each.

Some of the illustrations it chose for that one were somewhat unexpected:

If you want to see that one you can click the "Load a really big example" link in the debug tool, then wait for your browser to fetch and render the full 41MB JSON file.

The most interesting feature of Gemini (as with GPT-4o images) is the ability to accept images as inputs. I tried that out with this pelican photo like this:

cat > /tmp/request.json << EOF { "contents": [{ "parts":[ {"text": "Modify this photo to add an inappropriate hat"}, { "inline_data": { "mime_type":"image/jpeg", "data": "$(base64 -i pelican.jpg)" } } ] }], "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]} } EOF # Execute the curl command with the JSON file curl -X POST \ 'https://generativelanguage.googleapis.com/v1beta/models/gemini-2.0-flash-preview-image-generation:generateContent?key='$(llm keys get gemini) \ -H 'Content-Type: application/json' \ -d @/tmp/request.json \ > /tmp/out.json

And now the pelican is wearing a hat:

Introducing web search on the Anthropic API

(via)

Anthropic's web search (presumably still powered by Brave) is now also available through their API, in the shape of a new web search tool called web_search_20250305.

You can specify a maximum number of uses per prompt and you can also pass a list of disallowed or allowed domains, plus hints as to the user's current location.

Search results are returned in a format that looks similar to the Anthropic Citations API.

It's charged at $10 per 1,000 searches, which is a little more expensive than what the Brave Search API charges ($3 or $5 or $9 per thousand depending on how you're using them).

I couldn't find any details of additional rules surrounding storage or display of search results, which surprised me because both Google Gemini and OpenAI have these for their own API search results.