354 posts tagged “ai-assisted-programming”

Using AI tools such as Large Language Models to help write code. Vibe coding is the less responsible subset of this. See Here’s how I use LLMs to help me write code for a description of my process.

2026

AI Doesn’t Reduce Work—It Intensifies It (via) Aruna Ranganathan and Xingqi Maggie Ye from Berkeley Haas School of Business report initial findings in the HBR from their April to December 2025 study of 200 employees at a "U.S.-based technology company".

This captures an effect I've been observing in my own work with LLMs: the productivity boost these things can provide is exhausting.

AI introduced a new rhythm in which workers managed several active threads at once: manually writing code while AI generated an alternative version, running multiple agents in parallel, or reviving long-deferred tasks because AI could “handle them” in the background. They did this, in part, because they felt they had a “partner” that could help them move through their workload.

While this sense of having a “partner” enabled a feeling of momentum, the reality was a continual switching of attention, frequent checking of AI outputs, and a growing number of open tasks. This created cognitive load and a sense of always juggling, even as the work felt productive.

I'm frequently finding myself with work on two or three projects running in parallel. I can get so much done, but after just an hour or two my mental energy for the day feels almost entirely depleted.

I've had conversations with people recently who are losing sleep because they're finding building yet another feature with "just one more prompt" irresistible.

The HBR piece calls for organizations to build an "AI practice" that structures how AI is used to help avoid burnout and counter effects that "make it harder for organizations to distinguish genuine productivity gains from unsustainable intensity".

I think we've just disrupted decades of existing intuition about sustainable working practices. It's going to take a while and some discipline to find a good new balance.

I am having more fun programming than I ever have, because so many more of the programs I wish I could find the time to write actually exist. I wish I could share this joy with the people who are fearful about the changes agents are bringing. The fear itself I understand, I have fear more broadly about what the end-game is for intelligence on tap in our society. But in the limited domain of writing computer programs these tools have brought so much exploration and joy to my work.

— David Crawshaw, Eight more months of agents

How StrongDM’s AI team build serious software without even looking at the code

Last week I hinted at a demo I had seen from a team implementing what Dan Shapiro called the Dark Factory level of AI adoption, where no human even looks at the code the coding agents are producing. That team was part of StrongDM, and they’ve just shared the first public description of how they are working in Software Factories and the Agentic Moment:

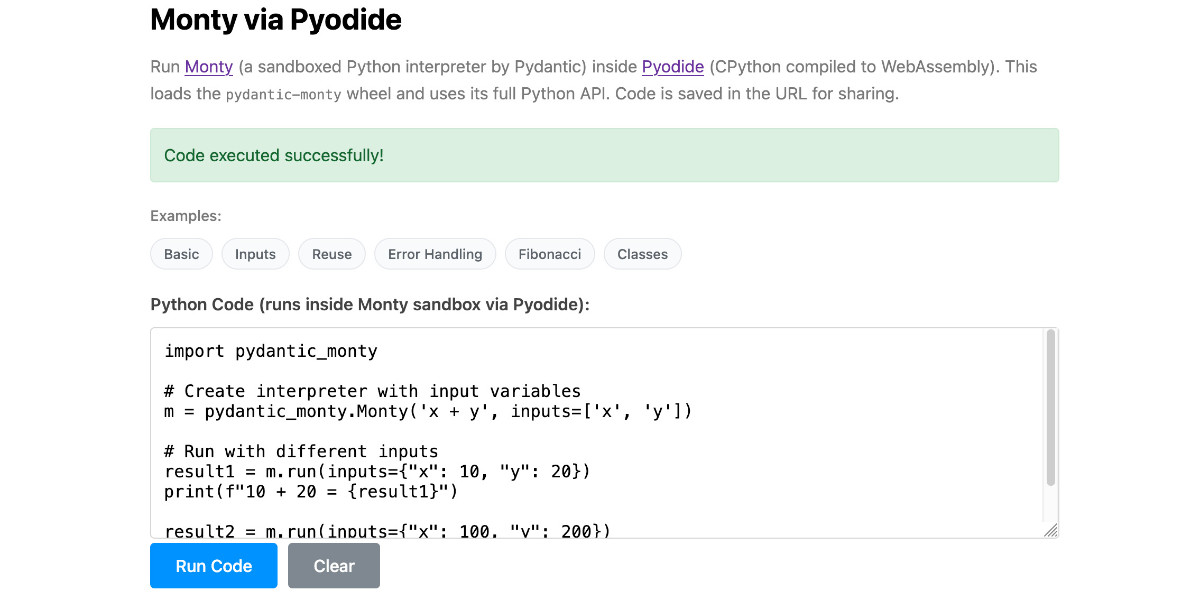

[... 1,664 words]

Running Pydantic’s Monty Rust sandboxed Python subset in WebAssembly

There’s a jargon-filled headline for you! Everyone’s building sandboxes for running untrusted code right now, and Pydantic’s latest attempt, Monty, provides a custom Python-like language (a subset of Python) in Rust and makes it available as both a Rust library and a Python package. I got it working in WebAssembly, providing a sandbox-in-a-sandbox.

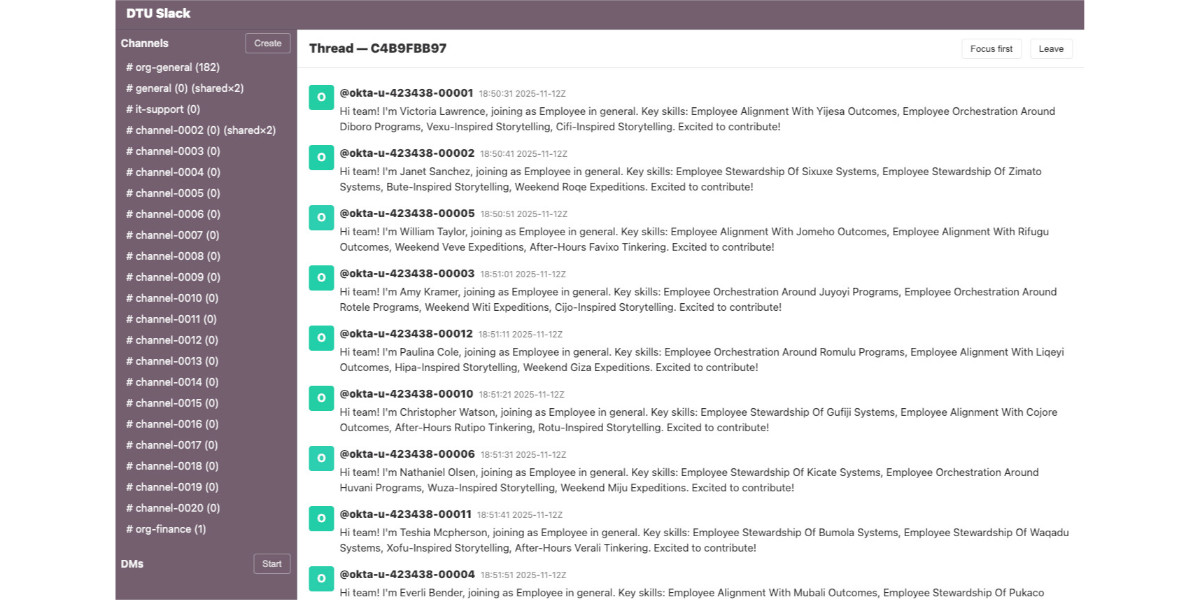

[... 854 words]

When I want to quickly implement a one-off experiment in a part of the codebase I am unfamiliar with, I get codex to do extensive due diligence. Codex explores relevant slack channels, reads related discussions, fetches experimental branches from those discussions, and cherry picks useful changes for my experiment. All of this gets summarized in an extensive set of notes, with links back to where each piece of information was found. Using these notes, codex wires the experiment and makes a bunch of hyperparameter decisions I couldn’t possibly make without much more effort.

— Karel D'Oosterlinck, I spent $10,000 to automate my research at OpenAI with Codex

Mitchell Hashimoto: My AI Adoption Journey (via) Some really good and unconventional tips in here for getting to a place with coding agents where they demonstrably improve your workflow and productivity. I particularly liked:

- Reproduce your own work - when learning to use coding agents Mitchell went through a period of doing the work manually, then recreating the same solution using agents as an exercise:

  I literally did the work twice. I'd do the work manually, and then I'd fight an agent to produce identical results in terms of quality and function (without it being able to see my manual solution, of course).

- End-of-day agents - letting agents step in when your energy runs out:

  To try to find some efficiency, I next started up a new pattern: block out the last 30 minutes of every day to kick off one or more agents. My hypothesis was that perhaps I could gain some efficiency if the agent can make some positive progress in the times I can't work anyways.

- Outsource the Slam Dunks - once you know an agent can likely handle a task, have it do that task while you work on something more interesting yourself.

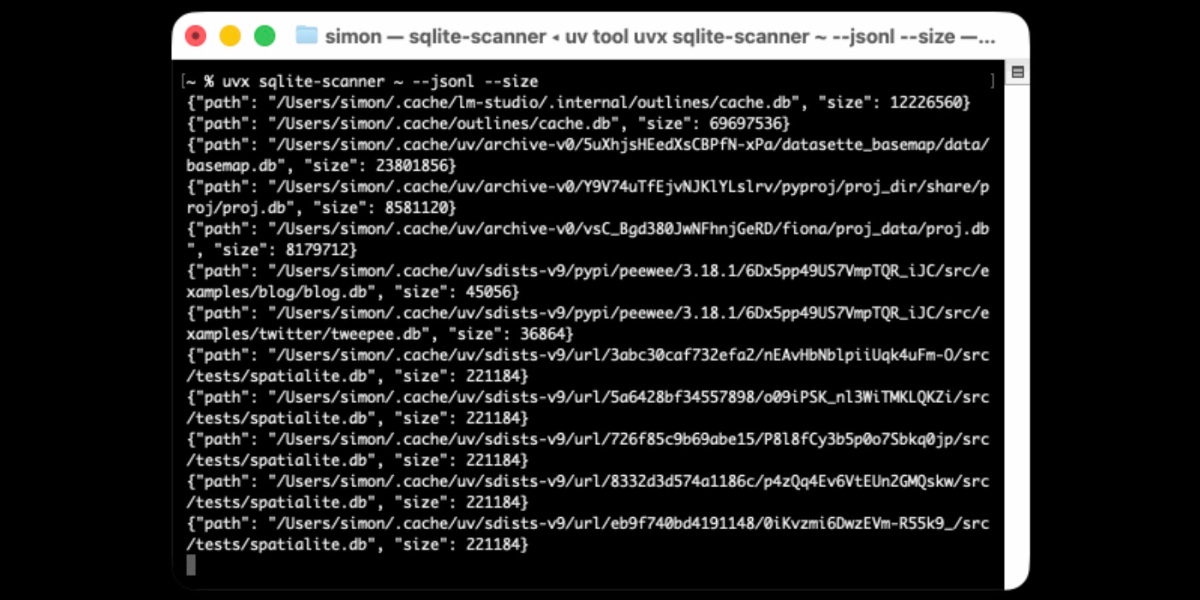

Distributing Go binaries like sqlite-scanner through PyPI using go-to-wheel

I’ve been exploring Go for building small, fast and self-contained binary applications recently. I’m enjoying how there’s generally one obvious way to do things and the resulting code is boring and readable—and something that LLMs are very competent at writing. The one catch is distribution, but it turns out publishing Go binaries to PyPI means any Go binary can be just a uvx package-name call away.

We gotta talk about AI as a programming tool for the arts. Chris Ashworth is the creator and CEO of QLab, a macOS software package for “cue-based, multimedia playback” which is designed to automate lighting and audio for live theater productions.

I recently started following him on TikTok where he posts about his business and theater automation in general - Chris founded the Voxel theater in Baltimore which QLab use as a combined performance venue, teaching hub and research lab (here's a profile of the theater), and the resulting videos offer a fascinating glimpse into a world I know virtually nothing about.

This latest TikTok describes his Claude Opus moment, after he used Claude Code to build a custom lighting design application for a very niche project and put together a useful application in just a few days that he would never have been able to spare the time for otherwise.

Chris works full time in the arts and comes at generative AI from a position of rational distrust. It's interesting to see him working through that tension to acknowledge that there are valuable applications here to build tools for the community he serves.

I have been at least gently skeptical about all this stuff for the last two years. Every time I checked in on it, I thought it was garbage, wasn't interested in it, wasn't useful. [...] But as a programmer, if you hear something like, this is changing programming, it's important to go check it out once in a while. So I went and checked it out a few weeks ago. And it's different. It's astonishing. [...]

One thing I learned in this exercise is that it can't make you a fundamentally better programmer than you already are. It can take a person who is a bad programmer and make them faster at making bad programs. And I think it can take a person who is a good programmer and, from what I've tested so far, make them faster at making good programs. [...] You see programmers out there saying, "I'm shipping code I haven't looked at and don't understand." I'm terrified by that. I think that's awful. But if you're capable of understanding the code that it's writing, and directing, designing, editing, deleting, being quality control on it, it's kind of astonishing. [...]

The positive thing I see here, and I think is worth coming to terms with, is this is an application that I would never have had time to write as a professional programmer. Because the audience is three people. [...] There's no way it was worth it to me to spend my energy of 20 years designing and implementing software for artists to build an app for three people that is this level of polish. And it took me a few days. [...]

I know there are a lot of people who really hate this technology, and in some ways I'm among them. But I think we've got to come to terms with this is a career-changing moment. And I really hate that I'm saying that because I didn't believe it for the last two years. [...] It's like having a room full of power tools. I wouldn't want to send an untrained person into a room full of power tools because they might chop off their fingers. But if someone who knows how to use tools has the option to have both hand tools and a power saw and a power drill and a lathe, there's a lot of work they can do with those tools at a lot faster speed.

Adding dynamic features to an aggressively cached website

My blog uses aggressive caching: it sits behind Cloudflare with a 15 minute cache header, which guarantees it can survive even the largest traffic spike to any given page. I’ve recently added a couple of dynamic features that work in spite of that full-page caching. Here’s how those work.

[... 1,145 words]

The Five Levels: from Spicy Autocomplete to the Dark Factory. Dan Shapiro proposes a five level model of AI-assisted programming, inspired by the five (or rather six, it's zero-indexed) levels of driving automation.

0. Spicy autocomplete, aka original GitHub Copilot or copying and pasting snippets from ChatGPT.

1. The coding intern, writing unimportant snippets and boilerplate with full human review.

2. The junior developer, pair programming with the model but still reviewing every line.

3. The developer. Most code is generated by AI, and you take on the role of full-time code reviewer.

4. The engineering team. You're more of an engineering manager or product/program/project manager. You collaborate on specs and plans, the agents do the work.

5. The dark software factory, like a factory run by robots where the lights are out because robots don't need to see.

Dan says about that last category:

At level 5, it's not really a car any more. You're not really running anybody else's software any more. And your software process isn't really a software process any more. It's a black box that turns specs into software.

Why Dark? Maybe you've heard of the Fanuc Dark Factory, the robot factory staffed by robots. It's dark, because it's a place where humans are neither needed nor welcome.

I know a handful of people who are doing this. They're small teams, less than five people. And what they're doing is nearly unbelievable -- and it will likely be our future.

I've talked to one team that's doing the pattern hinted at here. It was fascinating. The key characteristics:

- Nobody reviews AI-produced code, ever. They don't even look at it.

- The goal of the system is to prove that the system works. A huge amount of the coding agent work goes into testing and tooling and simulating related systems and running demos.

- The role of the humans is to design that system - to find new patterns that can help the agents work more effectively and demonstrate that the software they are building is robust and effective.

It was a tiny team and the stuff they had built in just a few months looked very convincing to me. Some of them had 20+ years of experience as software developers working on systems with high reliability requirements, so they were not approaching this from a naive perspective.

I'm hoping they come out of stealth soon because I can't really share more details than this.

Update 7th February 2026: The demo was by StrongDM's AI team, and they've now gone public with details of how they work.

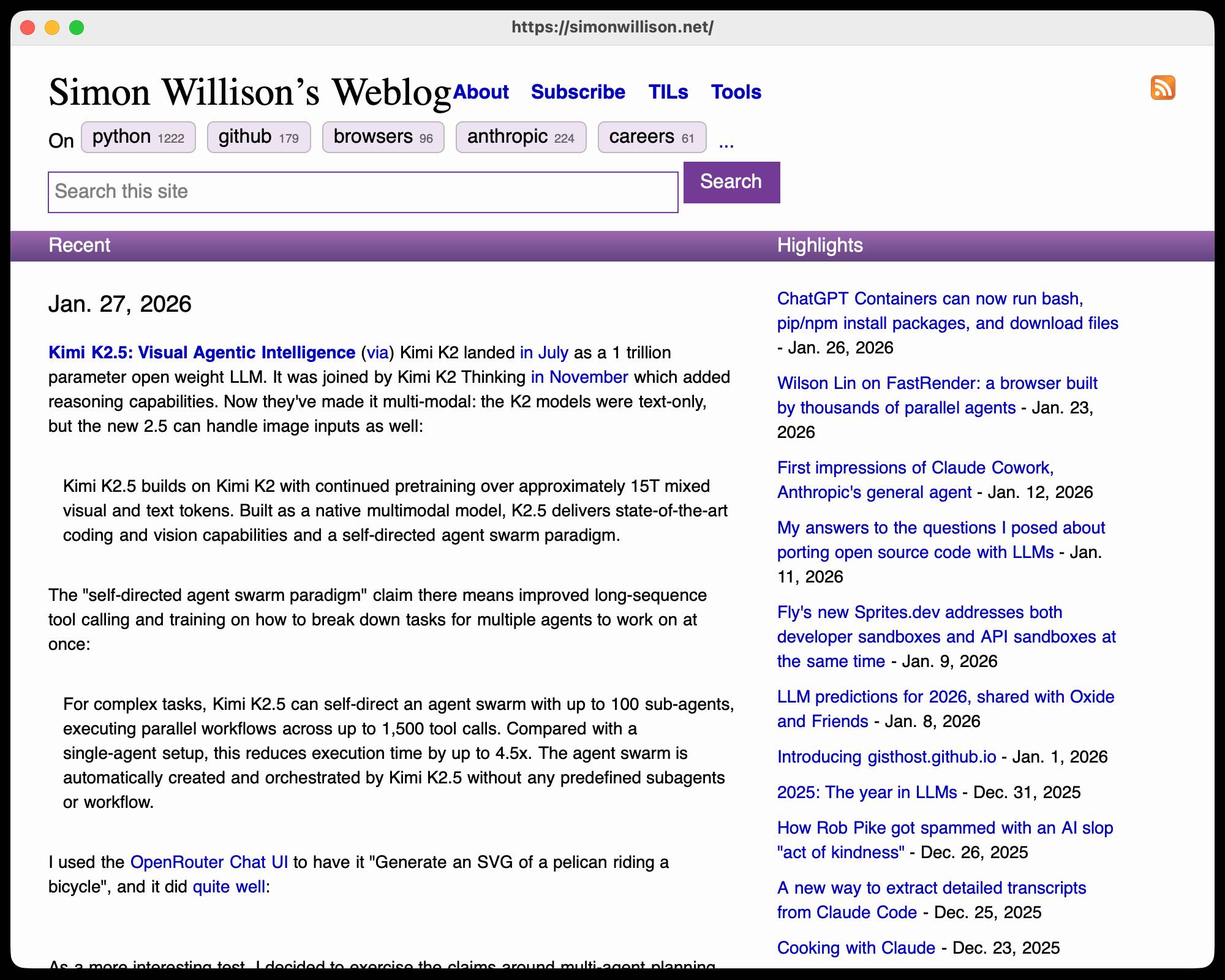

One Human + One Agent = One Browser From Scratch (via) embedding-shapes was so infuriated by the hype around Cursor's FastRender browser project - thousands of parallel agents producing ~1.6 million lines of Rust - that they were inspired to take a go at building a web browser using coding agents themselves.

The result is one-agent-one-browser and it's really impressive. Over three days they drove a single Codex CLI agent to build 20,000 lines of Rust that successfully renders HTML+CSS with no Rust crate dependencies at all - though it does (reasonably) use Windows, macOS and Linux system frameworks for image and text rendering.

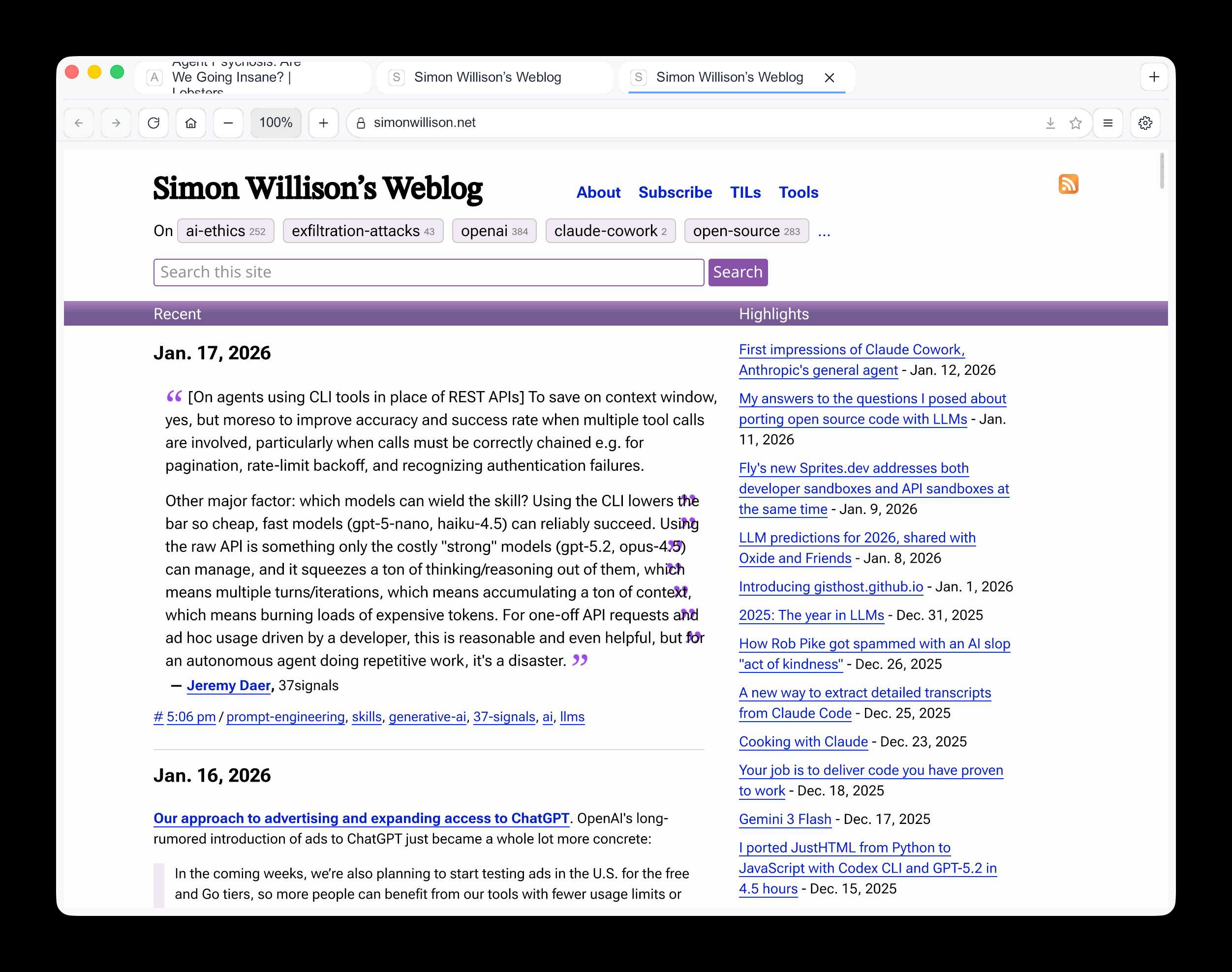

I installed the 1MB macOS binary release and ran it against my blog:

chmod 755 ~/Downloads/one-agent-one-browser-macOS-ARM64

~/Downloads/one-agent-one-browser-macOS-ARM64 https://simonwillison.net/

Here's the result:

It even rendered my SVG feed subscription icon! A PNG image is missing from the page, which looks like an intermittent bug (there's code to render PNGs).

The code is pretty readable too - here's the flexbox implementation.

I had thought that "build a web browser" was the ideal prompt to really stretch the capabilities of coding agents - and that it would take sophisticated multi-agent harnesses (as seen in the Cursor project) and millions of lines of code to achieve.

Turns out one agent driven by a talented engineer, three days and 20,000 lines of Rust is enough to get a very solid basic renderer working!

I'm going to upgrade my prediction for 2029: I think we're going to get a production-grade web browser built by a small team using AI assistance by then.

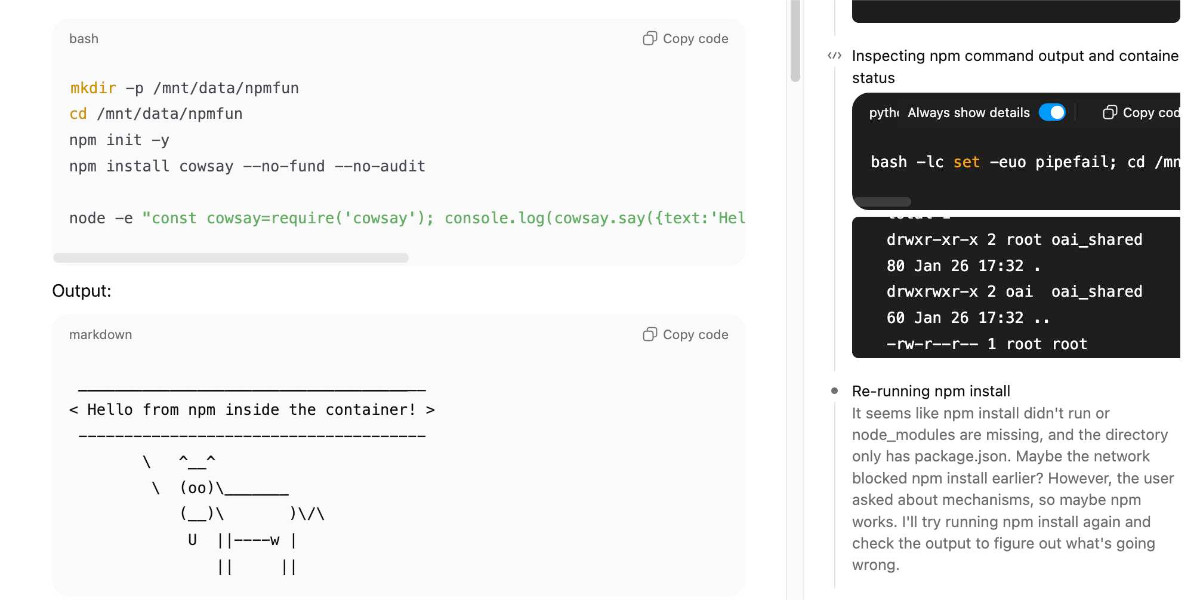

ChatGPT Containers can now run bash, pip/npm install packages, and download files

One of my favourite features of ChatGPT is its ability to write and execute code in a container. This feature launched as ChatGPT Code Interpreter nearly three years ago, was half-heartedly rebranded to “Advanced Data Analysis” at some point and is generally really difficult to find detailed documentation about. Case in point: it appears to have had a massive upgrade at some point in the past few months, and I can’t find documentation about the new capabilities anywhere!

[... 3,019 words]

Don’t “Trust the Process” (via) Jenny Wen, Design Lead at Anthropic (and previously Director of Design at Figma) gave a provocative keynote at Hatch Conference in Berlin last September.

Jenny argues that the Design Process - user research leading to personas leading to user journeys leading to wireframes... all before anything gets built - may be outdated for today's world.

Hypothesis: In a world where anyone can make anything — what matters is your ability to choose and curate what you make.

In place of the Process, designers should lean into prototypes. AI makes these much more accessible and less time-consuming than they used to be.

Watching this talk made me think about how AI-assisted programming significantly reduces the cost of building the wrong thing. Previously if the design wasn't right you could waste months of development time building in the wrong direction, which was a very expensive mistake. If a wrong direction wastes just a few days instead we can take more risks and be much more proactive in exploring the problem space.

I've always been a compulsive prototyper though, so this is very much playing into my own existing biases!

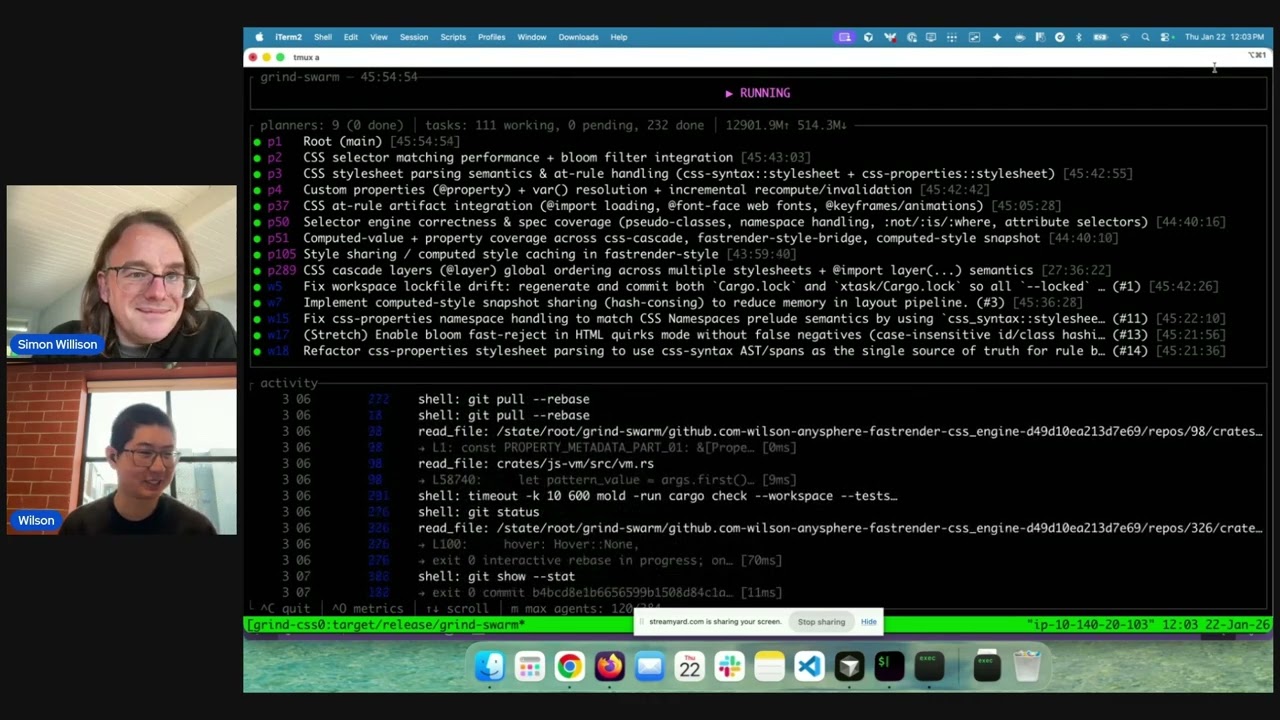

Wilson Lin on FastRender: a browser built by thousands of parallel agents

Last week Cursor published Scaling long-running autonomous coding, an article describing their research efforts into coordinating large numbers of autonomous coding agents. One of the projects mentioned in the article was FastRender, a web browser they built from scratch using their agent swarms. I wanted to learn more so I asked Wilson Lin, the engineer behind FastRender, if we could record a conversation about the project. That 47 minute video is now available on YouTube. I’ve included some of the highlights below.

[... 2,243 words]

jordanhubbard/nanolang (via) Plenty of people have mused about what a new programming language specifically designed to be used by LLMs might look like. Jordan Hubbard (co-founder of FreeBSD, with serious stints at Apple and NVIDIA) just released exactly that.

A minimal, LLM-friendly programming language with mandatory testing and unambiguous syntax.

NanoLang transpiles to C for native performance while providing a clean, modern syntax optimized for both human readability and AI code generation.

The syntax strikes me as an interesting mix between C, Lisp and Rust.

I decided to see if an LLM could produce working code in it directly, given the necessary context. I started with this MEMORY.md file, which begins:

Purpose: This file is designed specifically for Large Language Model consumption. It contains the essential knowledge needed to generate, debug, and understand NanoLang code. Pair this with spec.json for complete language coverage.

I ran that using LLM and llm-anthropic like this:

llm -m claude-opus-4.5 \
  -s https://raw.githubusercontent.com/jordanhubbard/nanolang/refs/heads/main/MEMORY.md \
  'Build me a mandelbrot fractal CLI tool in this language' \
  > /tmp/fractal.nano

The resulting code... did not compile.

I may have been too optimistic expecting a one-shot working program for a new language like this. So I ran a clone of the actual project, copied in my program and had Claude Code take a look at the failing compiler output.

... and it worked! Claude happily grepped its way through the various examples/ and built me a working program.

Here's the Claude Code transcript - you can see it reading relevant examples here - and here's the finished code plus its output.

I've suspected for a while that LLMs and coding agents might significantly reduce the friction involved in launching a new language. This result reinforces my opinion.

Scaling long-running autonomous coding. Wilson Lin at Cursor has been doing some experiments to see how far you can push a large fleet of "autonomous" coding agents:

This post describes what we've learned from running hundreds of concurrent agents on a single project, coordinating their work, and watching them write over a million lines of code and trillions of tokens.

They ended up running planners and sub-planners to create tasks, then having workers execute on those tasks - similar to how Claude Code uses sub-agents. Each cycle ended with a judge agent deciding if the project was completed or not.
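That planner, worker and judge cycle can be sketched in a few lines of Python. This is a toy illustration of the shape of the loop only, not Cursor's implementation: every name here (`Task`, `Project`, `plan`, `work`, `judge`, `run`) is invented, and the "agents" are plain functions where a real system would call a model.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    description: str
    done: bool = False


@dataclass
class Project:
    goal: str
    tasks: list[Task] = field(default_factory=list)
    log: list[str] = field(default_factory=list)


def plan(project: Project) -> list[Task]:
    """Hypothetical planner: split the goal into three placeholder tasks."""
    return [Task(f"{project.goal}: step {n}") for n in range(1, 4)]


def work(task: Task, project: Project) -> None:
    """Hypothetical worker: 'execute' a task by marking it done and logging it."""
    task.done = True
    project.log.append(f"finished {task.description}")


def judge(project: Project) -> bool:
    """Hypothetical judge: complete once there are tasks and all are done."""
    return bool(project.tasks) and all(t.done for t in project.tasks)


def run(project: Project, max_cycles: int = 5) -> int:
    """Run plan -> work -> judge cycles and return the number of cycles used."""
    for cycle in range(1, max_cycles + 1):
        if not project.tasks:
            project.tasks = plan(project)
        for task in project.tasks:
            if not task.done:
                work(task, project)
        if judge(project):
            return cycle
    return max_cycles
```

Calling `run(Project("render a page"))` finishes in a single cycle here because the stand-in workers never fail. The interesting engineering in a real system is everything this sketch leaves out: coordination between concurrent workers and a judge that can actually tell whether the work is good.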

In my predictions for 2026 the other day I said that by 2029:

I think somebody will have built a full web browser mostly using AI assistance, and it won’t even be surprising. Rolling a new web browser is one of the most complicated software projects I can imagine[...] the cheat code is the conformance suites. If there are existing tests that it’ll get so much easier.

I may have been off by three years, because Cursor chose "building a web browser from scratch" as their test case for their agent swarm approach:

To test this system, we pointed it at an ambitious goal: building a web browser from scratch. The agents ran for close to a week, writing over 1 million lines of code across 1,000 files. You can explore the source code on GitHub.

But how well did they do? Their initial announcement a couple of days ago was met with unsurprising skepticism, especially when it became apparent that their GitHub Actions CI was failing and there were no build instructions in the repo.

It looks like they addressed that within the past 24 hours. The latest README includes build instructions which I followed on macOS like this:

cd /tmp

git clone https://github.com/wilsonzlin/fastrender

cd fastrender

git submodule update --init vendor/ecma-rs

cargo run --release --features browser_ui --bin browser

This got me a working browser window! Here are screenshots I took of google.com and my own website:

Honestly those are very impressive! You can tell they're not just wrapping an existing rendering engine because of those very obvious rendering glitches, but the pages are legible and look mostly correct.

The FastRender repo even uses Git submodules to include various WhatWG and CSS-WG specifications in the repo, which is a smart way to make sure the agents have access to the reference materials that they might need.

This is the second attempt I've seen at building a full web browser using AI-assisted coding in the past two weeks - the first was HiWave browser, a new browser engine in Rust first announced in this Reddit thread.

When I made my 2029 prediction this is more-or-less the quality of result I had in mind. I don't think we'll see projects of this nature compete with Chrome or Firefox or WebKit any time soon but I have to admit I'm very surprised to see something this capable emerge so quickly.

Update 23rd January 2026: I recorded a 47 minute conversation with Wilson about this project and published it on YouTube. Here's the video and accompanying highlights.

FLUX.2-klein-4B Pure C Implementation (via) On 15th January Black Forest Labs, a lab formed by the creators of the original Stable Diffusion, released black-forest-labs/FLUX.2-klein-4B - an Apache 2.0 licensed 4 billion parameter version of their FLUX.2 family.

Salvatore Sanfilippo (antirez) decided to build a pure C and dependency-free implementation to run the model, with assistance from Claude Code and Claude Opus 4.5.

Salvatore shared this note on Hacker News:

Something that may be interesting for the reader of this thread: this project was possible only once I started to tell Opus that it needed to take a file with all the implementation notes, and also accumulating all the things we discovered during the development process. And also, the file had clear instructions to be taken updated, and to be processed ASAP after context compaction. This kinda enabled Opus to do such a big coding task in a reasonable amount of time without loosing track. Check the file IMPLEMENTATION_NOTES.md in the GitHub repo for more info.

Here's that IMPLEMENTATION_NOTES.md file.

Don’t fall into the anti-AI hype. I'm glad someone was brave enough to say this. There is a lot of anti-AI sentiment in the software development community these days. Much of it is justified, but if you let people convince you that AI isn't genuinely useful for software developers or that this whole thing will blow over soon it's becoming clear that you're taking on a very real risk to your future career.

As Salvatore Sanfilippo puts it:

It does not matter if AI companies will not be able to get their money back and the stock market will crash. All that is irrelevant, in the long run. It does not matter if this or the other CEO of some unicorn is telling you something that is off putting, or absurd. Programming changed forever, anyway.

I do like this hopeful positive outlook on what this could all mean, emphasis mine:

How do I feel, about all the code I wrote that was ingested by LLMs? I feel great to be part of that, because I see this as a continuation of what I tried to do all my life: democratizing code, systems, knowledge. LLMs are going to help us to write better software, faster, and will allow small teams to have a chance to compete with bigger companies. The same thing open source software did in the 90s.

This post has been the subject of heated discussions all day today on both Hacker News and Lobste.rs.

My answers to the questions I posed about porting open source code with LLMs

Last month I wrote about porting JustHTML from Python to JavaScript using Codex CLI and GPT-5.2 in a few hours while also buying a Christmas tree and watching Knives Out 3. I ended that post with a series of open questions about the ethics and legality of this style of work. Alexander Petros on lobste.rs just challenged me to answer them, which is fair enough! Here’s my attempt at that.

[... 1,034 words]

A Software Library with No Code. Provocative experiment from Drew Breunig, who designed a new library for time formatting ("3 hours ago" kind of thing) called "whenwords" that has no code at all, just a carefully written specification, an AGENTS.md and a collection of conformance tests in a YAML file.

Pass that to your coding agent of choice, tell it what language you need and it will write it for you on demand!

This meshes neatly with my recent interest in conformance suites. If you publish good enough language-independent tests it's pretty astonishing how far today's coding agents can take you!
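To make the idea of language-independent conformance tests concrete, here is a minimal sketch in Python. The cases, their field names and the `time_ago` function are all assumptions made up for this illustration, not taken from the whenwords specification. The cases are written as a list of dicts, which is roughly what you would get back from loading a YAML file.

```python
# Hypothetical conformance cases, shaped like the output of a YAML loader.
# These are NOT the real whenwords tests.
CASES = [
    {"name": "seconds", "input": 30, "expected": "just now"},
    {"name": "one minute", "input": 60, "expected": "1 minute ago"},
    {"name": "minutes", "input": 150, "expected": "2 minutes ago"},
    {"name": "hours", "input": 3 * 3600, "expected": "3 hours ago"},
    {"name": "one day", "input": 86400, "expected": "1 day ago"},
]


def time_ago(seconds: int) -> str:
    """One possible implementation an agent might write against the cases."""
    for unit, size in (("day", 86400), ("hour", 3600), ("minute", 60)):
        if seconds >= size:
            count = seconds // size
            plural = "" if count == 1 else "s"
            return f"{count} {unit}{plural} ago"
    # Anything under a minute falls through to here.
    return "just now"


def run_cases(func, cases):
    """Run every case against func and return a list of failure messages."""
    failures = []
    for case in cases:
        actual = func(case["input"])
        if actual != case["expected"]:
            failures.append(
                f"{case['name']}: expected {case['expected']!r}, got {actual!r}"
            )
    return failures
```

Because the cases are plain data, the same file could drive an implementation in any language. Only `run_cases` and the function under test need rewriting.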

LLM predictions for 2026, shared with Oxide and Friends

I joined a recording of the Oxide and Friends podcast on Tuesday to talk about 1, 3 and 6 year predictions for the tech industry. This is my second appearance on their annual predictions episode, you can see my predictions from January 2025 here. Here’s the page for this year’s episode, with options to listen in all of your favorite podcast apps or directly on YouTube.

[... 1,741 words]

It genuinely feels to me like GPT-5.2 and Opus 4.5 in November represent an inflection point - one of those moments where the models get incrementally better in a way that tips across an invisible capability line where suddenly a whole bunch of much harder coding problems open up.

Something I like about our weird new LLM-assisted world is the number of people I know who are coding again, having mostly stopped as they moved into management roles or lost their personal side project time to becoming parents.

AI assistance means you can get something useful done in half an hour, or even while you are doing other stuff. You don't need to carve out 2-4 hours to ramp up anymore.

If you have significant previous coding experience - even if it's a few years stale - you can drive these things really effectively. Especially if you have management experience, quite a lot of which transfers to "managing" coding agents - communicate clearly, set achievable goals, provide all relevant context. Here's a relevant recent tweet from Ethan Mollick:

When you see how people use Claude Code/Codex/etc it becomes clear that managing agents is really a management problem

Can you specify goals? Can you provide context? Can you divide up tasks? Can you give feedback?

These are teachable skills. Also UIs need to support management

This note started as a comment.

I'm not joking and this isn't funny. We have been trying to build distributed agent orchestrators at Google since last year. There are various options, not everyone is aligned... I gave Claude Code a description of the problem, it generated what we built last year in an hour.

It's not perfect and I'm iterating on it but this is where we are right now. If you are skeptical of coding agents, try it on a domain you are already an expert of. Build something complex from scratch where you can be the judge of the artifacts.

[...] It wasn't a very detailed prompt and it contained no real details given I cannot share anything propriety. I was building a toy version on top of some of the existing ideas to evaluate Claude Code. It was a three paragraph description.

— Jaana Dogan, Principal Engineer at Google

[Claude Code] has the potential to transform all of tech. I also think we’re going to see a real split in the tech industry (and everywhere code is written) between people who are outcome-driven and are excited to get to the part where they can test their work with users faster, and people who are process-driven and get their meaning from the engineering itself and are upset about having that taken away.

Introducing gisthost.github.io

I am a huge fan of gistpreview.github.io, the site by Leon Huang that lets you append ?GIST_id to see a browser-rendered version of an HTML page that you have saved to a Gist. The last commit was ten years ago and I needed a couple of small changes so I’ve forked it and deployed an updated version at gisthost.github.io.
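As a tiny illustration of that "append ?GIST_id" pattern, here is a sketch of a helper that builds the preview URL. The function name and the alphanumeric check are my own assumptions, and the Gist ID in the usage note is made up.

```python
def gist_preview_url(gist_id: str, host: str = "gisthost.github.io") -> str:
    """Build a preview URL by appending ?GIST_id to the host."""
    # Assumption: a Gist ID is a plain alphanumeric string.
    if not gist_id or not gist_id.isalnum():
        raise ValueError("expected a plain alphanumeric Gist ID")
    return f"https://{host}/?{gist_id}"
```

For example, `gist_preview_url("abc123")` returns `https://gisthost.github.io/?abc123`.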

2025

[...] The puzzle is still there. What’s gone is the labor. I never enjoyed hitting keys, writing minimal repro cases with little insight, digging through debug logs, or trying to decipher some obscure AWS IAM permission error. That work wasn’t the puzzle for me. It was just friction, laborious and frustrating. The thinking remains; the hitting of the keys and the frustrating is what’s been removed.

TIL: Downloading archived Git repositories from archive.softwareheritage.org (via) Back in February I blogged about a neat Python library called sqlite-s3vfs for accessing SQLite databases hosted in an S3 bucket, released as MIT licensed open source by the UK government's Department for Business and Trade.

I went looking for it today and found that the github.com/uktrade/sqlite-s3vfs repository is now a 404.

Since this is taxpayer-funded open source software I saw it as my moral duty to try and restore access! It turns out a full copy had been captured by the Software Heritage archive, so I was able to restore the repository from there. My copy is now archived at simonw/sqlite-s3vfs.

The process for retrieving an archive was non-obvious, so I've written up a TIL and also published a new Software Heritage Repository Retriever tool which takes advantage of the CORS-enabled APIs provided by Software Heritage. Here's the Claude Code transcript from building that.

In essence a language model changes you from a programmer who writes lines of code, to a programmer that manages the context the model has access to, prunes irrelevant things, adds useful material to context, and writes detailed specifications. If that doesn't sound fun to you, you won't enjoy it.

Think about it as if it is a junior developer that has read every textbook in the world but has 0 practical experience with your specific codebase, and is prone to forgetting anything but the most recent hour of things you've told it. What do you want to tell that intern to help them progress?

Eg you might put sticky notes on their desk to remind them of where your style guide lives, what the API documentation is for the APIs you use, some checklists of what is done and what is left to do, etc.

But the intern gets confused easily if it keeps accumulating sticky notes and there are now 100 sticky notes, so you have to periodically clear out irrelevant stickies and replace them with new stickies.

— Liz Fong-Jones, thread on Bluesky

A year ago, Claude struggled to generate bash commands without escaping issues. It worked for seconds or minutes at a time. We saw early signs that it may become broadly useful for coding one day.

Fast forward to today. In the last thirty days, I landed 259 PRs -- 497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5.

— Boris Cherny, creator of Claude Code