73 posts tagged “aws”

2025

s3-credentials 0.17. New release of my s3-credentials CLI tool for managing credentials needed to access just one S3 bucket. Here are the release notes in full:

That s3-credentials localserver command (documented here) is a little obscure, but I found myself wanting something like that to help me test out a new feature I'm building to help create temporary Litestream credentials using Amazon STS.

Most of that new feature was built by Claude Code from the following starting prompt:

Add a feature s3-credentials localserver which starts a localhost weberver running (using the Python standard library stuff) on port 8094 by default but -p/--port can set a different port and otherwise takes an option that names a bucket and then takes the same options for read--write/read-only etc as other commands. It also takes a required --refresh-interval option which can be set as 5m or 10h or 30s. All this thing does is reply on / to a GET request with the IAM expiring credentials that allow access to that bucket with that policy for that specified amount of time. It caches internally the credentials it generates and will return the exact same data up until they expire (it also tracks expected expiry time) after which it will generate new credentials (avoiding dog pile effects if multiple requests ask at the same time) and return and cache those instead.
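The caching behaviour that prompt describes can be sketched in a few lines. This is a minimal illustration, not the code that shipped in s3-credentials: `parse_interval`, `CredentialCache` and the `generate` callable are hypothetical names, and a single lock stands in for the "avoid dog pile effects" requirement.

```python
import re
import threading
import time

_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}


def parse_interval(text):
    """Turn a value like '30s', '5m' or '10h' into a number of seconds."""
    match = re.fullmatch(r"(\d+)([smh])", text.strip())
    if not match:
        raise ValueError(f"Invalid interval: {text!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]


class CredentialCache:
    """Return the same credentials until they expire, then regenerate once."""

    def __init__(self, generate, duration_seconds, clock=time.monotonic):
        self._generate = generate  # hypothetical callable: seconds -> dict
        self._duration = duration_seconds
        self._clock = clock
        self._lock = threading.Lock()
        self._value = None
        self._expires_at = 0.0

    def get(self):
        # The lock is held while regenerating, so concurrent callers wait
        # for one refresh instead of each requesting new credentials.
        with self._lock:
            now = self._clock()
            if self._value is None or now >= self._expires_at:
                self._value = self._generate(self._duration)
                self._expires_at = now + self._duration
            return self._value
```

A request handler for GET / would then only need to call `cache.get()` and serialize the result as JSON.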

For resiliency, the DNS Enactor operates redundantly and fully independently in three different Availability Zones (AZs). [...] When the second Enactor (applying the newest plan) completed its endpoint updates, it then invoked the plan clean-up process, which identifies plans that are significantly older than the one it just applied and deletes them. At the same time that this clean-up process was invoked, the first Enactor (which had been unusually delayed) applied its much older plan to the regional DDB endpoint, overwriting the newer plan. [...] The second Enactor's clean-up process then deleted this older plan because it was many generations older than the plan it had just applied. As this plan was deleted, all IP addresses for the regional endpoint were immediately removed.

— AWS, Amazon DynamoDB Service Disruption in Northern Virginia (US-EAST-1) Region (14.5 hours long!)

An MVCC-like columnar table on S3 with constant-time deletes (via) S3's support for conditional writes (previously) makes it an interesting, scalable and often inexpensive platform for all kinds of database patterns.

Shayon Mukherjee presents an ingenious design for a Parquet-backed database in S3 which accepts concurrent writes, presents a single atomic view for readers and even supports reliable row deletion despite Parquet requiring a complete file rewrite in order to remove data.

The key to the design is a _latest_manifest JSON file at the top of the bucket, containing an integer version number. Clients use compare-and-swap to increment that version - only one client can succeed at this, so the incremented version they get back is guaranteed unique to them.

Having reserved a version number the client can write a unique manifest file for that version - manifest/v00000123.json - with a more complex data structure referencing the current versions of every persisted file, including the one they just uploaded.

Deleted rows are written to tombstone files as either a list of primary keys or a list of ranges. Clients consult these when executing reads, filtering out deleted rows as part of resolving a query.
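To make the read-side filtering concrete, here is a small sketch. The tombstone layout (a dict with optional "keys" and "ranges" entries, ranges treated as inclusive) is an assumption for illustration and is not taken from Shayon's design.

```python
def is_deleted(key, tombstones):
    """Return True if any tombstone covers this primary key."""
    for tombstone in tombstones:
        if key in tombstone.get("keys", ()):
            return True
        for low, high in tombstone.get("ranges", ()):
            if low <= key <= high:  # assumed: ranges are inclusive
                return True
    return False


def visible_rows(rows, tombstones, key_field="id"):
    """Filter out rows that a tombstone marks as deleted."""
    return [row for row in rows if not is_deleted(row[key_field], tombstones)]
```

The Parquet files themselves are never rewritten here: a delete only adds a tombstone, and the cost moves to readers, who apply the filter while resolving a query.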

The pricing estimates are especially noteworthy:

For a workload ingesting 6 TB/day with 2 TB of deletes and 50K queries/day:

- PUT requests: ~380K/day (≈4 req/s) = $1.88/day

- GET requests: highly variable, depends on partitioning effectiveness

  - Best case (good time-based partitioning): ~100K-200K/day = $0.04-$0.08/day

  - Worst case (poor partitioning, scanning many files): ~2M/day = $0.80/day

~$3/day for ingesting 6TB of data is pretty fantastic!

Watch out for storage costs though - each new TB of data at $0.023/GB/month adds $23.55 to the ongoing monthly bill.
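Those numbers are easy to sanity-check. The rates below are assumed S3 Standard list prices for us-east-1 ($0.005 per 1,000 PUTs, $0.0004 per 1,000 GETs, $0.023 per GB-month); check the current pricing page before relying on them.

```python
# Assumed S3 Standard list prices (us-east-1), in dollars.
PUT_PER_1000 = 0.005
GET_PER_1000 = 0.0004
STORAGE_PER_GB_MONTH = 0.023


def request_cost(requests, price_per_1000):
    """Daily cost in dollars for a given number of requests."""
    return requests / 1000 * price_per_1000


put_cost = request_cost(380_000, PUT_PER_1000)
get_best_low = request_cost(100_000, GET_PER_1000)
get_best_high = request_cost(200_000, GET_PER_1000)
get_worst = request_cost(2_000_000, GET_PER_1000)
storage_per_tb_month = 1024 * STORAGE_PER_GB_MONTH

print(f"PUTs: ${put_cost:.2f}/day")
print(f"GETs: ${get_best_low:.2f}-${get_best_high:.2f}/day best case, ${get_worst:.2f}/day worst case")
print(f"Storage: ${storage_per_tb_month:.2f}/month per TB")
```

The "~380K" PUT figure is rounded, which is why this lands a couple of cents away from the quoted $1.88/day.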

Having worked inside AWS I can tell you one big reason [that they don't describe their internals] is the attitude/fear that anything we put in our public docs may end up getting relied on by customers. If customers rely on the implementation to work in a specific way, then changing that detail requires a LOT more work to prevent breaking customer's workloads. If it is even possible at that point.

— TheSoftwareGuy, comment on Hacker News

I was at a leadership group and people were telling me "We think that with AI we can replace all of our junior people in our company." I was like, "That's the dumbest thing I've ever heard. They're probably the least expensive employees you have, they're the most leaned into your AI tools, and how's that going to work when you go 10 years in the future and you have no one that has built up or learned anything?"

— Matt Garman, CEO, Amazon Web Services

AWS in 2025: The Stuff You Think You Know That’s Now Wrong (via) Absurdly useful roundup from Corey Quinn of AWS changes you may have missed that can materially affect your architectural decisions about how you use their services.

A few that stood out to me:

- EC2 instances can now live-migrate between physical hosts, and can have their security groups, IAM roles and EBS volumes modified without a restart. They now charge by the second; they used to round up to the hour.

- S3 Glacier restores are now fast and predictably priced.

- AWS Lambdas can now run containers, execute for up to 15 minutes, use up to 10GB of RAM and request 10GB of /tmp storage.

Also this note on AWS's previously legendary resistance to shutting things down:

While deprecations remain rare, they’re definitely on the rise; if an AWS service sounds relatively niche or goofy, consider your exodus plan before building atop it.

Using S3 triggers to maintain a list of files in DynamoDB. I built an experimental prototype this morning of a system for efficiently tracking files that have been added to a large S3 bucket by maintaining a parallel DynamoDB table using S3 triggers and AWS Lambda.

I got 80% of the way there with this single prompt (complete with typos) to my custom Claude Project:

Python CLI app using boto3 with commands for creating a new S3 bucket which it also configures to have S3 lambada event triggers which moantian a dynamodb table containing metadata about all of the files in that bucket. Include these commands

create_bucket - create a bucket and sets up the associated triggers and dynamo tables

list_files - shows me a list of files based purely on querying dynamo

ChatGPT then took me to the 95% point. The code Claude produced included an obvious bug, so I pasted the code into o3-mini-high on the basis that "reasoning" is often a great way to fix those kinds of errors:

Identify, explain and then fix any bugs in this code:

code from Claude pasted here

... and aside from adding a couple of time.sleep() calls to work around timing errors with IAM policy distribution, everything worked!

Getting from a rough idea to a working proof of concept of something like this with less than 15 minutes of prompting is extraordinarily valuable.

This is exactly the kind of project I've avoided in the past because of my almost irrational intolerance of the frustration involved in figuring out the individual details of each call to S3, IAM, AWS Lambda and DynamoDB.

(Update: I just found out about the new S3 Metadata system which launched a few weeks ago and might solve this exact problem!)

2024

Building Python tools with a one-shot prompt using uv run and Claude Projects

I’ve written a lot about how I’ve been using Claude to build one-shot HTML+JavaScript applications via Claude Artifacts. I recently started using a similar pattern to create one-shot Python utilities, using a custom Claude Project combined with the dependency management capabilities of uv.

[... 899 words]

DSQL Vignette: Reads and Compute. Marc Brooker is one of the engineers behind AWS's new Aurora DSQL horizontally scalable database. Here he shares all sorts of interesting details about how it works under the hood.

The system is built around the principle of separating storage from compute: storage uses S3, while compute runs in Firecracker:

Each transaction inside DSQL runs in a customized Postgres engine inside a Firecracker MicroVM, dedicated to your database. When you connect to DSQL, we make sure there are enough of these MicroVMs to serve your load, and scale up dynamically if needed. We add MicroVMs in the AZs and regions your connections are coming from, keeping your SQL query processor engine as close to your client as possible to optimize for latency.

We opted to use PostgreSQL here because of its pedigree, modularity, extensibility, and performance. We’re not using any of the storage or transaction processing parts of PostgreSQL, but are using the SQL engine, an adapted version of the planner and optimizer, and the client protocol implementation.

The system then provides strong repeatable-read transaction isolation using MVCC and EC2's high precision clocks, enabling reads "as of time X" including against nearby read replicas.

The storage layer supports index scans, which means the compute layer can push down some operations allowing it to load a subset of the rows it needs, reducing round-trips that are affected by speed-of-light latency.

The overall approach here is disaggregation: we’ve taken each of the critical components of an OLTP database and made it a dedicated service. Each of those services is independently horizontally scalable, most of them are shared-nothing, and each can make the design choices that is most optimal in its domain.

Amazon Bedrock doesn't store or log your prompts and completions. Amazon Bedrock doesn't use your prompts and completions to train any AWS models and doesn't distribute them to third parties.

Claude 3.5 Haiku price drops by 20%. Buried in this otherwise quite dry post about Anthropic's ongoing partnership with AWS:

To make this model even more accessible for a wide range of use cases, we’re lowering the price of Claude 3.5 Haiku to $0.80 per million input tokens and $4 per million output tokens across all platforms.

The previous price was $1/$5. I've updated my LLM pricing calculator and modified yesterday's piece comparing prices with Amazon Nova as well.

Confusing matters somewhat, the article also announces a new way to access Claude 3.5 Haiku at the old price but with "up to 60% faster inference speed":

This faster version of Claude 3.5 Haiku, powered by Trainium2, is available in the US East (Ohio) Region via cross-region inference and is offered at $1 per million input tokens and $5 per million output tokens.

Using "cross-region inference" involves sending something called an "inference profile" to the Bedrock API. I have an open issue to figure out what that means for my llm-bedrock plugin.

Also from this post: AWS now offer a Bedrock model distillation preview which includes the ability to "teach" Claude 3 Haiku using Claude 3.5 Sonnet. It sounds similar to OpenAI's model distillation feature announced at their DevDay event back in October.

Introducing Amazon Aurora DSQL (via) New, weird-shaped database from AWS. It's (loosely) PostgreSQL compatible, claims "virtually unlimited scale" and can be set up as a single-region cluster or as a multi-region setup that somehow supports concurrent reads and writes across all regions. I'm hoping they publish technical details on how that works at some point in the future (update: they did), right now they just say this:

When you create a multi-Region cluster, Aurora DSQL creates another cluster in a different Region and links them together. Adding linked Regions makes sure that all changes from committed transactions are replicated to the other linked Regions. Each linked cluster has a Regional endpoint, and Aurora DSQL synchronously replicates writes across Regions, enabling strongly consistent reads and writes from any linked cluster.

Here's the list of unsupported PostgreSQL features - most notably views, triggers, sequences, foreign keys and extensions. A single transaction can also modify only up to 10,000 rows.

No pricing information yet (it's in a free preview) but it looks like this one may be true scale-to-zero, unlike some of their other recent "serverless" products - Amazon Aurora Serverless v2 has a baseline charge no matter how heavily you are using it. (Update: apparently that changed on 20th November 2024 when they introduced an option to automatically pause a v2 serverless instance, which then "takes less than 15 seconds to resume".)

Amazon S3 adds new functionality for conditional writes (via)

Amazon S3 can now perform conditional writes that evaluate if an object is unmodified before updating it. This helps you coordinate simultaneous writes to the same object and prevents multiple concurrent writers from unintentionally overwriting the object without knowing the state of its content. You can use this capability by providing the ETag of an object [...]

This new conditional header can help improve the efficiency of your large-scale analytics, distributed machine learning, and other highly parallelized workloads by reliably offloading compare and swap operations to S3.

(Both Azure Blob Storage and Google Cloud have this feature already.)

Back in August, when AWS added conditional writes that only checked whether an object with that key already existed, I wrote about Gunnar Morling's trick for Leader Election With S3 Conditional Writes. This new capability opens up a whole set of new patterns for implementing distributed locking systems along those lines.

Here's a useful illustrative example by lxgr on Hacker News:

As a (horribly inefficient, in case of non-trivial write contention) toy example, you could use S3 as a lock-free concurrent SQLite storage backend: Reads work as expected by fetching the entire database and satisfying the operation locally; writes work like this:

1. Download the current database copy

2. Perform your write locally

3. Upload it back using "Put-If-Match" and the pre-edit copy as the matched object.

4. If you get success, consider the transaction successful.

5. If you get failure, go back to step 1 and try again.
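The retry loop in those steps can be sketched against an in-memory stand-in. `VersionedObject` is a hypothetical class that mimics a single S3 object whose writes are checked against an ETag. A real client would send the If-Match header on PutObject instead.

```python
class PreconditionFailed(Exception):
    """Raised when the supplied ETag no longer matches the stored object."""


class VersionedObject:
    """Hypothetical in-memory stand-in for one S3 object with ETag checks."""

    def __init__(self, body=b""):
        self._body = body
        self._version = 0

    def get(self):
        return self._body, str(self._version)

    def put_if_match(self, body, etag):
        if etag != str(self._version):
            raise PreconditionFailed()
        self._body = body
        self._version += 1


def update(obj, modify, max_attempts=10):
    """Apply modify(body) with compare-and-swap, retrying on conflict."""
    for _ in range(max_attempts):
        body, etag = obj.get()               # download the current copy
        new_body = modify(body)              # perform the write locally
        try:
            obj.put_if_match(new_body, etag)  # upload only if unchanged
            return new_body                  # success: transaction done
        except PreconditionFailed:
            continue                         # someone else won; start over
    raise RuntimeError("Gave up after too much write contention")
```

As the quote warns, every conflicting writer repeats the full download and upload, so this only makes sense when contention is low.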

AWS also just added the ability to enforce conditional writes in bucket policies:

To enforce conditional write operations, you can now use s3:if-none-match or s3:if-match condition keys to write a bucket policy that mandates the use of HTTP if-none-match or HTTP if-match conditional headers in S3 PutObject and CompleteMultipartUpload API requests. With this bucket policy in place, any attempt to write an object to your bucket without the required conditional header will be rejected.

Amazon S3 Express One Zone now supports the ability to append data to an object. This is a first for Amazon S3: it is now possible to append data to an existing object in a bucket, where previously the only supported operation was to atomically replace the object with an updated version.

This is only available for S3 Express One Zone, a bucket class introduced a year ago which provides storage in just a single availability zone, providing significantly lower latency at the cost of reduced redundancy and a much higher price (16c/GB/month compared to 2.3c for S3 standard tier).

The fact that appends have never been supported for multi-availability zone S3 provides an interesting clue as to the underlying architecture. Guaranteeing that every copy of an object has received and applied an append is significantly harder than doing a distributed atomic swap to a new version.

More details from the documentation:

There is no minimum size requirement for the data you can append to an object. However, the maximum size of the data that you can append to an object in a single request is 5GB. This is the same limit as the largest request size when uploading data using any Amazon S3 API.

With each successful append operation, you create a part of the object and each object can have up to 10,000 parts. This means you can append data to an object up to 10,000 times. If an object is created using S3 multipart upload, each uploaded part is counted towards the total maximum of 10,000 parts. For example, you can append up to 9,000 times to an object created by multipart upload comprising of 1,000 parts.

That 10,000 limit means this won't quite work for constantly appending to a log file in a bucket.

Presumably it will be possible to "tail" an object that is receiving appended updates using the HTTP Range header.

Leader Election With S3 Conditional Writes (via) Amazon S3 added support for conditional writes last week, so you can now write a key to S3 with a reliable failure if someone else has already created it.

This is a big deal. It reminds me of the time in 2020 when S3 added read-after-write consistency, an astonishing piece of distributed systems engineering.

Gunnar Morling demonstrates how this can be used to implement a distributed leader election system. The core flow looks like this:

- Scan an S3 bucket for files matching lock_* - like lock_0000000001.json. If the highest number contains {"expired": false} then that is the leader

- If the highest lock has expired, attempt to become the leader yourself: increment that lock ID and then attempt to create lock_0000000002.json with a PUT request that includes the new If-None-Match: * header - set the file content to {"expired": false}

- If that succeeds, you are the leader! If not then someone else beat you to it.

- To resign from leadership, update the file with {"expired": true}

There's a bit more to it than that - Gunnar also describes how to implement lock validity timeouts such that a crashed leader doesn't leave the system leaderless.
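Here is a rough sketch of that core flow, leaving out the validity timeouts. `InMemoryBucket` is a hypothetical stand-in whose `put(..., if_none_match=True)` mimics a PUT with the If-None-Match: * header. None of this is Gunnar's actual code.

```python
import json


class InMemoryBucket:
    """Hypothetical stand-in for an S3 bucket with create-if-absent writes."""

    def __init__(self):
        self.objects = {}

    def list_keys(self, prefix):
        return sorted(key for key in self.objects if key.startswith(prefix))

    def get(self, key):
        return self.objects[key]

    def put(self, key, body, if_none_match=False):
        # Mimics If-None-Match: * by refusing to overwrite an existing key.
        if if_none_match and key in self.objects:
            return False
        self.objects[key] = body
        return True


def current_leader(bucket):
    """Return the key of the live lock file, or None if there is no leader."""
    keys = bucket.list_keys("lock_")
    if keys and not json.loads(bucket.get(keys[-1]))["expired"]:
        return keys[-1]
    return None


def try_become_leader(bucket):
    """Attempt to create the next lock file; return its key on success."""
    if current_leader(bucket) is not None:
        return None
    keys = bucket.list_keys("lock_")
    last_id = int(keys[-1][len("lock_"):-len(".json")]) if keys else 0
    key = f"lock_{last_id + 1:010d}.json"
    created = bucket.put(key, json.dumps({"expired": False}), if_none_match=True)
    return key if created else None


def resign(bucket, key):
    """Mark our own lock file as expired."""
    bucket.put(key, json.dumps({"expired": True}))
```

The zero-padded lock IDs matter: they make a plain lexicographic sort of the keys match numeric order, so the last key is always the highest lock.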

Elasticsearch is open source, again (via) Three and a half years ago, Elastic relicensed their core products from Apache 2.0 to dual-license under the Server Side Public License (SSPL) and the new Elastic License, neither of which were OSI-compliant open source licenses. They explained this change as a reaction to AWS, who were offering a paid hosted search product that directly competed with Elastic's commercial offering.

AWS were also sponsoring an "open distribution" alternative packaging of Elasticsearch, created in 2019 in response to Elastic releasing components of their package as the "x-pack" under alternative licenses. Stephen O'Grady wrote about that at the time.

AWS subsequently forked Elasticsearch entirely, creating the OpenSearch project in April 2021.

Now Elastic have made another change: they're triple-licensing their core products, adding the OSI-compliant AGPL as the third option.

This announcement of the change from Elastic creator Shay Banon directly addresses the most obvious conclusion we can make from this:

“Changing the license was a mistake, and Elastic now backtracks from it”. We removed a lot of market confusion when we changed our license 3 years ago. And because of our actions, a lot has changed. It’s an entirely different landscape now. We aren’t living in the past. We want to build a better future for our users. It’s because we took action then, that we are in a position to take action now.

By "market confusion" I think he means the trademark disagreement (later resolved) with AWS, who no longer sell their own Elasticsearch but sell OpenSearch instead.

I'm not entirely convinced by this explanation, but if it kicks off a trend of other no-longer-open-source companies returning to the fold I'm all for it!

For the past 10 years or so, AWS has been rolling out these peripheral services at an astonishing rate, dozens every year. A few get traction, most don’t—but they all stick around, undead zombies behind impressive-looking marketing pages, because historically AWS just doesn’t make many breaking changes. [...]

AWS made this mess for themselves by rushing all sorts of half-baked services to market. The mess had to be cleaned up at some point, and they’re doing that. But now they’ve explicitly revealed something to customers: The new stuff we release isn’t guaranteed to stick around.

After giving it a lot of thought, we made the decision to discontinue new access to a small number of services, including AWS CodeCommit.

While we are no longer onboarding new customers to these services, there are no plans to change the features or experience you get today, including keeping them secure and reliable. [...]

The services I'm referring to are: S3 Select, CloudSearch, Cloud9, SimpleDB, Forecast, Data Pipeline, and CodeCommit.

AWS CodeCommit quietly deprecated (via) CodeCommit is AWS's Git hosting service. In a reply from an AWS employee to this forum thread:

Beginning on 06 June 2024, AWS CodeCommit ceased onboarding new customers. Going forward, only customers who have an existing repository in AWS CodeCommit will be able to create additional repositories.

[...] If you would like to use AWS CodeCommit in a new AWS account that is part of your AWS Organization, please let us know so that we can evaluate the request for allowlisting the new account. If you would like to use an alternative to AWS CodeCommit given this news, we recommend using GitLab, GitHub, or another third party source provider of your choice.

What's weird about this is that, as far as I can tell, this is the first official public acknowledgement from AWS that CodeCommit is no longer accepting customers. The CodeCommit landing page continues to promote the product, though it does link to the How to migrate your AWS CodeCommit repository to another Git provider blog post from July 25th, which gives no direct indication that CodeCommit is being quietly sunset.

I wonder how long they'll continue to support their existing customers?

Amazon QLDB too

It looks like AWS may be having a bit of a clear-out. Amazon QLDB - Quantum Ledger Database (a blockchain-adjacent immutable ledger, launched in 2019) - quietly put out a deprecation announcement in their release history on July 18th (again, no official announcement elsewhere):

End of support notice: Existing customers will be able to use Amazon QLDB until end of support on 07/31/2025. For more details, see Migrate an Amazon QLDB Ledger to Amazon Aurora PostgreSQL.

This one is more surprising, because migrating to a different Git host is massively less work than entirely re-writing a system to use a fundamentally different database.

It turns out there's an infrequently updated community GitHub repo called SummitRoute/aws_breaking_changes which tracks these kinds of changes. Other services listed there include CodeStar, Cloud9, CloudSearch, OpsWorks, WorkDocs and Snowmobile, and they cleverly (ab)use the GitHub releases mechanism to provide an Atom feed.

How an empty S3 bucket can make your AWS bill explode (via) Maciej Pocwierz accidentally created an S3 bucket with a name that was already used as a placeholder value in a widely used piece of software. They saw 100 million PUT requests to their new bucket in a single day, racking up a big bill since AWS charges $5/million PUTs.

It turns out AWS charge that same amount for PUTs that result in a 403 authentication error, a policy that extends even to "requester pays" buckets!

So, if you know someone's S3 bucket name you can DDoS their AWS bill just by flooding them with meaningless unauthenticated PUT requests.

AWS support refunded Maciej's bill as an exception here, but I'd like to see them reconsider this broken policy entirely.

Update from Jeff Barr:

We agree that customers should not have to pay for unauthorized requests that they did not initiate. We’ll have more to share on exactly how we’ll help prevent these charges shortly.

s3-credentials 0.16.

I spent entirely too long this evening trying to figure out why files in my new supposedly public S3 bucket were unavailable to view. It turns out these days you need to set a PublicAccessBlockConfiguration of {"BlockPublicAcls": false, "IgnorePublicAcls": false, "BlockPublicPolicy": false, "RestrictPublicBuckets": false}.

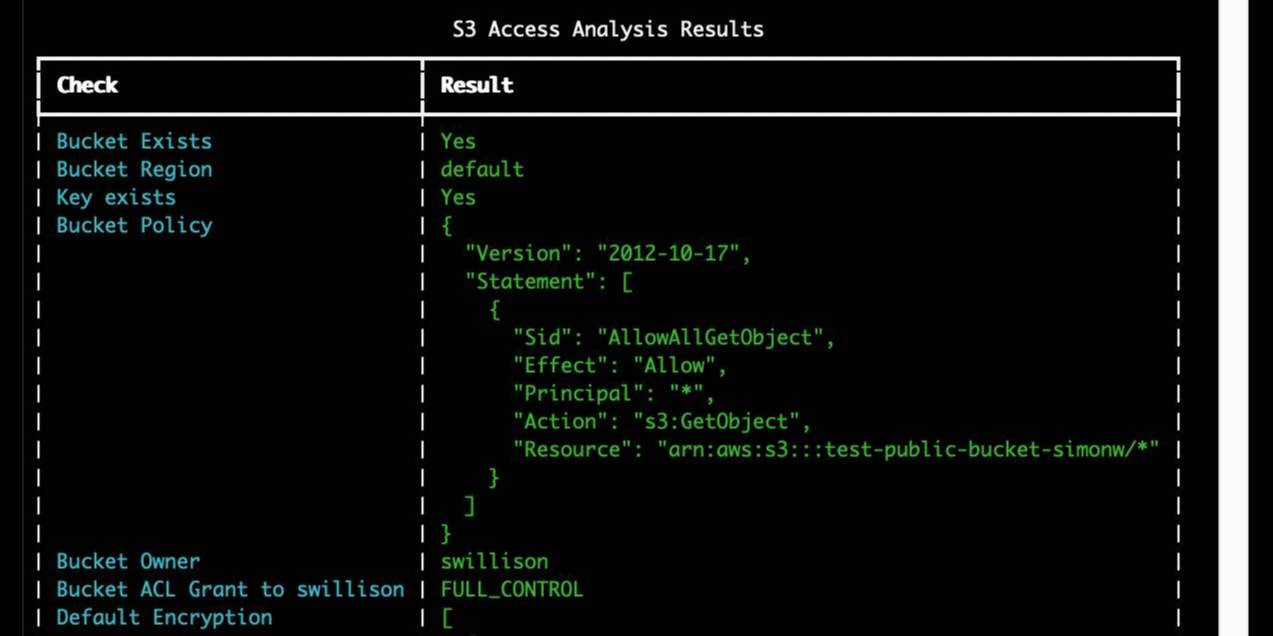

The s3-credentials --create-bucket --public option now does that for you. I also added a s3-credentials debug-bucket name-of-bucket command to help figure out why a bucket isn't working as expected.
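For reference, applying that configuration with boto3 looks roughly like this. It is a sketch rather than the s3-credentials implementation: `allow_public_access` is a hypothetical helper, and it takes the client as an argument so it can be exercised without AWS credentials.

```python
# The configuration from the post: all four public access blocks disabled.
PUBLIC_ACCESS_BLOCK_OFF = {
    "BlockPublicAcls": False,
    "IgnorePublicAcls": False,
    "BlockPublicPolicy": False,
    "RestrictPublicBuckets": False,
}


def allow_public_access(s3_client, bucket):
    """Disable the public access blocks on one bucket (hypothetical helper)."""
    s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK_OFF,
    )
```

Usage would be something like `allow_public_access(boto3.client("s3"), "name-of-bucket")`.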

textract-cli. This is my other OCR project from yesterday: I built the thinnest possible CLI wrapper around Amazon Textract, out of frustration at how hard that tool is to use on an ad-hoc basis.

It only works with JPEGs and PNGs (not PDFs) up to 5MB in size, reflecting limitations in Textract’s synchronous API: it can handle PDFs amazingly well but you have to upload them to an S3 bucket first and I decided to keep the scope tight for the first version of this tool.

Assuming you’ve configured AWS credentials already, this is all you need to know:

pipx install textract-cli

textract-cli image.jpeg > output.txt

S3 is files, but not a filesystem (via) Cal Paterson helps some concepts click into place for me: S3 imitates a file system but has a number of critical missing features, the most important of which is the lack of partial updates. Any time you want to modify even a few bytes in a file you have to upload and overwrite the entire thing. Almost every database system is dependent on partial updates to function, which is why there are so few databases that can use S3 directly as a backend storage mechanism.

The power of two random choices, visualized. Grant Slatton shares a visualization illustrating “a favorite load balancing technique at AWS”: pick two nodes at random and then send the task to whichever of those two has the lowest current load score.

Why just two nodes? “The function grows logarithmically, so it’s a big jump from 1 to 2 and then tapers off *real* quick.”
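A quick simulation makes the effect visible. This is a toy model, not Grant's visualization: the "load score" is assumed to be a simple count of assigned tasks, and tasks never finish.

```python
import random


def max_load(num_nodes, num_tasks, choices, seed=0):
    """Assign tasks to the least loaded of `choices` random nodes.

    Returns the load on the busiest node once every task is placed.
    """
    rng = random.Random(seed)
    loads = [0] * num_nodes
    for _ in range(num_tasks):
        candidates = [rng.randrange(num_nodes) for _ in range(choices)]
        target = min(candidates, key=lambda node: loads[node])
        loads[target] += 1
    return max(loads)


one = max_load(10_000, 10_000, choices=1)  # purely random placement
two = max_load(10_000, 10_000, choices=2)  # the "two random choices" trick
print(f"busiest node with 1 choice: {one}, with 2 choices: {two}")
```

Passing `choices=3` or more is a simple way to see the tapering-off the quote describes.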

AWS Fixes Data Exfiltration Attack Angle in Amazon Q for Business. An indirect prompt injection (where the AWS Q bot consumes malicious instructions) could result in Q outputting a markdown link to a malicious site that exfiltrated the previous chat history in a query string.

Amazon fixed it by preventing links from being output at all—apparently Microsoft 365 Chat uses the same mitigation.

Slashing Data Transfer Costs in AWS by 99% (via) Brilliant trick by Daniel Kleinstein. If you have data in two availability zones in the same AWS region, transferring a TB will cost you $10 in ingress and $10 in egress at the inter-zone rates charged by AWS.

But... transferring data to an S3 bucket in that same region is free (aside from S3 storage costs). And buckets are available with free transfer to all availability zones in their region, which means that TB of data can be transferred between availability zones for mere cents of S3 storage costs provided you delete the data as soon as it’s transferred.

2023

How ima.ge.cx works (via) ima.ge.cx is Aidan Steele’s web tool for browsing the contents of Docker images hosted on Docker Hub. The architecture is really interesting: it’s a set of AWS Lambda functions, written in Go, that fetch metadata about the images using Step Functions and then cache it in DynamoDB and S3. It uses S3 Select to serve directory listings from newline-delimited JSON in S3 without retrieving the whole file.

2022

You should have lots of AWS accounts (via) Richard Crowley makes the case for maintaining multiple AWS accounts within a single company, because “AWS accounts are the most complete form of isolation on offer”.

Figure out how to serve an AWS Lambda function with a Function URL from a custom subdomain (via) This took me five hours and 77 issue comments to figure out, but I finally managed to serve an AWS Lambda function running Datasette on a custom subdomain with an HTTPS certificate. I was going to write this up as a TIL but I’m exhausted so I decided to share my private notes thread instead.

Deploying Python web apps as AWS Lambda functions. After literally years of failed half-hearted attempts, I finally managed to deploy an ASGI Python web application (Datasette) to an AWS Lambda function! Here are my extensive notes.