59 posts tagged “docker”

2026

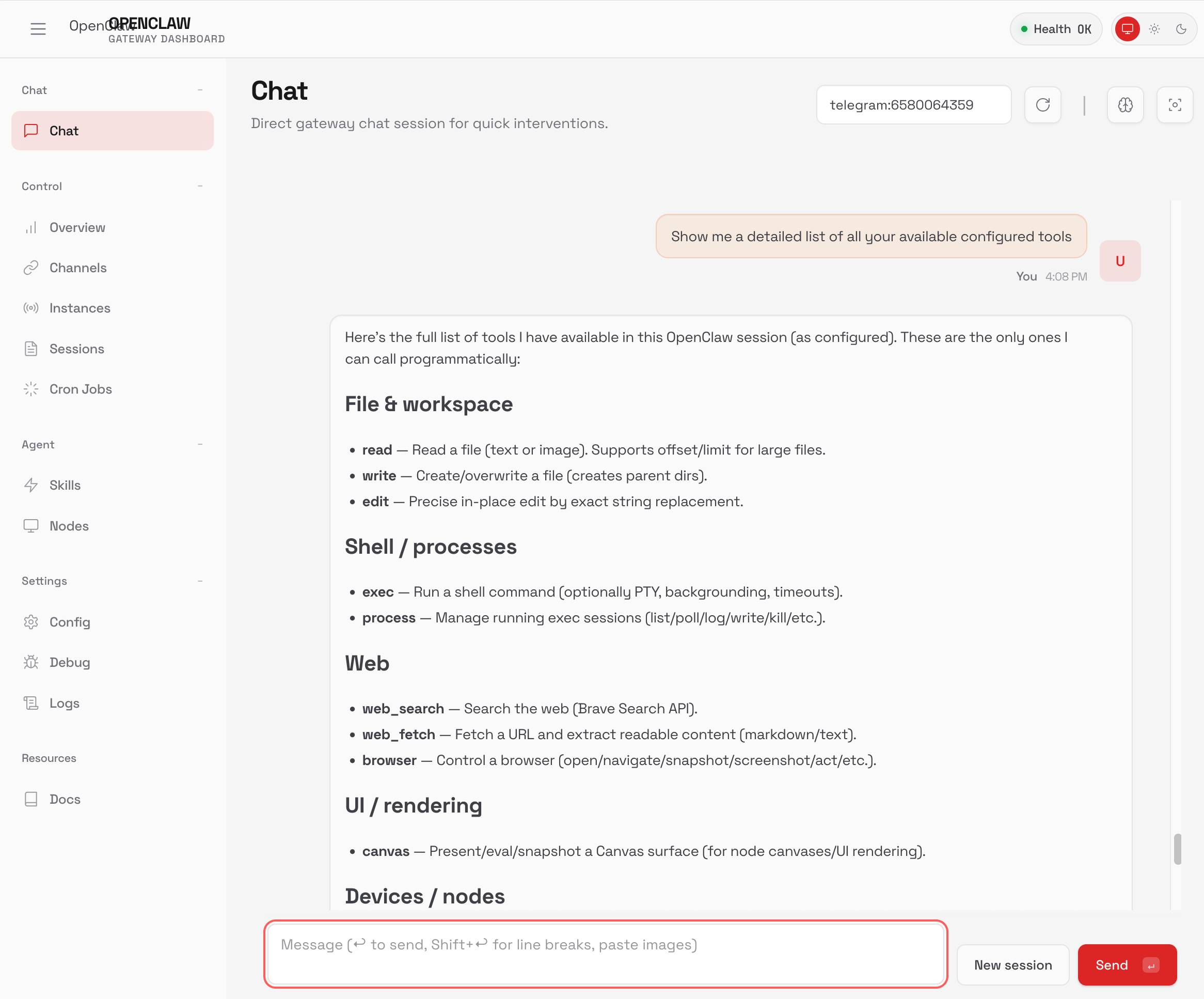

TIL: Running OpenClaw in Docker. I've been running OpenClaw using Docker on my Mac. Here is the first of my ongoing notes on how I set that up and the commands I'm using to administer it.

- Use their Docker Compose configuration

- Answering all of those questions

- Running administrative commands

- Setting up a Telegram bot

- Accessing the web UI

- Running commands as root

Here's a screenshot of the web UI that this serves on localhost:

2025

Fun-reliable side-channels for cross-container communication (via) Here's a very clever hack for communicating between different processes running in different containers on the same machine. It's based on clever abuse of POSIX advisory locks which allow a process to create and detect locks across byte offset ranges:

These properties combined are enough to provide a basic cross-container side-channel primitive, because a process in one container can set a read-lock at some interval on /proc/self/ns/time, and a process in another container can observe the presence of that lock by querying for a hypothetically intersecting write-lock.
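Here's a minimal Python sketch of that primitive, under some loudly stated assumptions: it uses a temporary file instead of /proc/self/ns/time, a forked child process instead of a second container, and it detects the lock by briefly attempting a non-blocking write lock rather than issuing the query the C proof-of-concept uses. The function names are made up for illustration.

```python
import fcntl
import os
import tempfile


def set_read_lock(fd: int, offset: int) -> None:
    """Sender: place a shared (read) lock on the single byte at offset."""
    fcntl.lockf(fd, fcntl.LOCK_SH | fcntl.LOCK_NB, 1, offset)


def lock_present(fd: int, offset: int) -> bool:
    """Receiver: report whether another process holds a lock on that byte."""
    try:
        fcntl.lockf(fd, fcntl.LOCK_EX | fcntl.LOCK_NB, 1, offset)
    except OSError:
        return True  # our write lock conflicted with someone else's read lock
    fcntl.lockf(fd, fcntl.LOCK_UN, 1, offset)  # we got it, so give it back
    return False


def demo(message=(True, False, True, True)) -> list:
    """Send the message bits from a forked child to the parent via locks."""
    with tempfile.NamedTemporaryFile() as shared:
        ready_r, ready_w = os.pipe()
        done_r, done_w = os.pipe()
        pid = os.fork()
        if pid == 0:
            # Child (sender): one locked byte per True bit in the message.
            try:
                os.close(ready_r)
                os.close(done_w)
                fd = os.open(shared.name, os.O_RDWR)
                for offset, bit in enumerate(message):
                    if bit:
                        set_read_lock(fd, offset)
                os.write(ready_w, b"1")  # tell the parent the locks are set
                os.read(done_r, 1)       # hold the locks until the parent is done
            finally:
                os._exit(0)
        # Parent (receiver): probe each byte offset for a conflicting lock.
        os.close(ready_w)
        os.close(done_r)
        os.read(ready_r, 1)
        fd = os.open(shared.name, os.O_RDWR)
        received = [lock_present(fd, offset) for offset in range(len(message))]
        os.close(fd)
        os.write(done_w, b"1")
        os.waitpid(pid, 0)
        os.close(ready_r)
        os.close(done_w)
        return received
```

Calling `demo()` should hand back the same bit pattern the child encoded, even though the two processes never exchange the message itself through the pipes (those are only used to coordinate timing).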

I dumped the C proof-of-concept into GPT-5 for a code-level explanation, then had it help me figure out how to run it in Docker. Here's the recipe that worked for me:

cd /tmp
wget https://raw.githubusercontent.com/crashappsec/h4x0rchat/9b9d0bd5b2287501335acca35d070985e4f51079/h4x0rchat.c
docker run --rm -it -v "$PWD:/src" \
  -w /src gcc:13 bash -lc 'gcc -Wall -O2 \
  -o h4x0rchat h4x0rchat.c && ./h4x0rchat'

Run that docker run line in two separate terminal windows and you can chat between the two of them like this:

Getting DeepSeek-OCR working on an NVIDIA Spark via brute force using Claude Code

DeepSeek released a new model yesterday: DeepSeek-OCR, a 6.6GB model fine-tuned specifically for OCR. They released it as model weights that run using PyTorch and CUDA. I got it running on the NVIDIA Spark by having Claude Code effectively brute force the challenge of getting it working on that particular hardware.

[... 1,971 words]

NVIDIA DGX Spark: great hardware, early days for the ecosystem

NVIDIA sent me a preview unit of their new DGX Spark desktop “AI supercomputer”. I’ve never had hardware to review before! You can consider this my first ever sponsored post if you like, but they did not pay me any cash and aside from an embargo date they did not request (nor would I grant) any editorial input into what I write about the device.

[... 1,846 words]

Static Sites with Python, uv, Caddy, and Docker (via) Nik Kantar documents his Docker-based setup for building and deploying mostly static web sites in line-by-line detail.

I found this really useful. The Dockerfile itself without comments is just 8 lines long:

FROM ghcr.io/astral-sh/uv:debian AS build
WORKDIR /src
COPY . .
RUN uv python install 3.13
RUN uv run --no-dev sus

FROM caddy:alpine
COPY Caddyfile /etc/caddy/Caddyfile
COPY --from=build /src/output /srv/

He also includes a Caddyfile that shows how to proxy a subset of requests to the Plausible analytics service.

The static site is built using his sus package for creating static URL redirecting sites, but would work equally well for another static site generator you can install and run with uv run.

Nik deploys his sites using Coolify, a new-to-me take on the self-hosting alternative to Heroku/Vercel pattern which helps run multiple sites on a collection of hosts using Docker containers.

A bunch of the Hacker News comments dismissed this as over-engineering. I don't think that criticism is justified - given Nik's existing deployment environment I think this is a lightweight way to deploy static sites in a way that's consistent with how everything else he runs works already.

More importantly, the world needs more articles like this that break down configuration files and explain what every single line of them does.

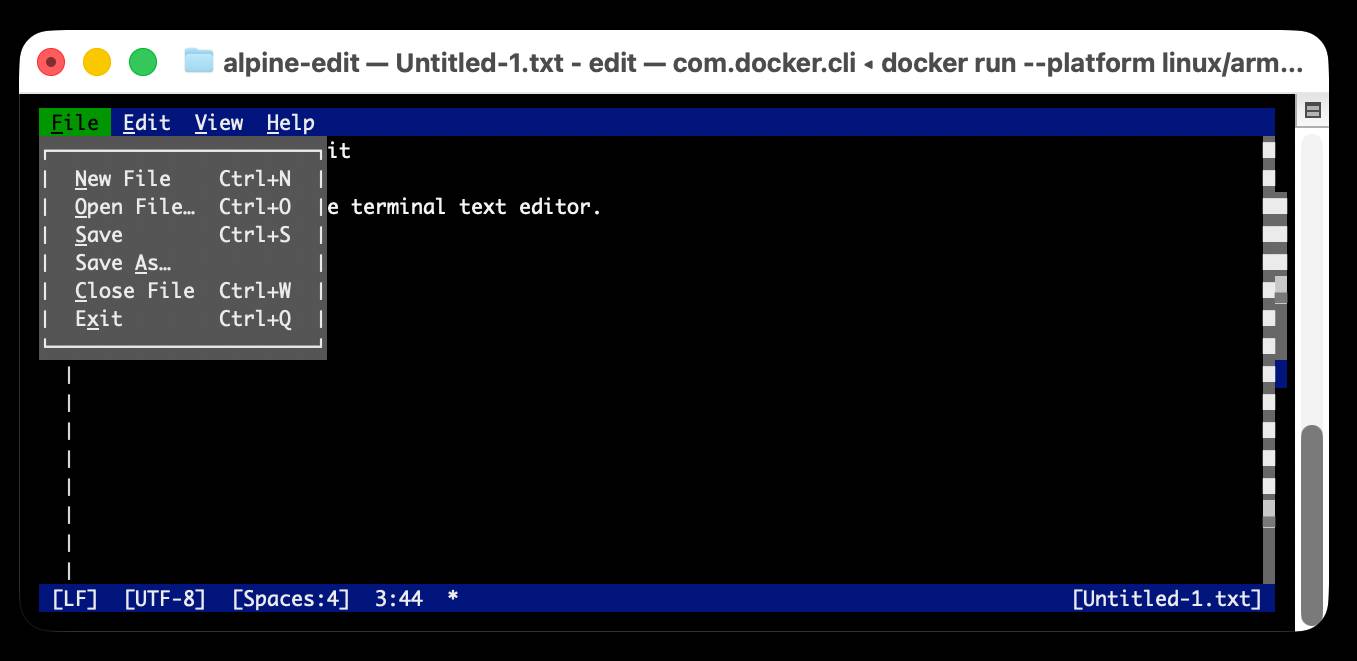

Edit is now open source (via) Microsoft released a new text editor! Edit is a terminal editor - similar to Vim or nano - that's designed to ship with Windows 11 but is open source, written in Rust and supported across other platforms as well.

Edit is a small, lightweight text editor. It is less than 250kB, which allows it to keep a small footprint in the Windows 11 image.

The microsoft/edit GitHub releases page currently has pre-compiled binaries for Windows and Linux, but they didn't have one for macOS.

(They do have build instructions using Cargo if you want to compile from source.)

I decided to try and get their released binary working on my Mac using Docker. One thing led to another, and I've now built and shipped a container to the GitHub Container Registry that anyone with Docker on Apple silicon can try out like this:

docker run --platform linux/arm64 \
  -it --rm \
  -v "$(pwd):/workspace" \
  ghcr.io/simonw/alpine-edit

Running that command will download a 9.59MB container image and start Edit running against the files in your current directory. Hit Ctrl+Q or use File -> Exit (the mouse works too) to quit the editor and terminate the container.

Claude 4 has a training cut-off date of March 2025, so it was able to guide me through almost everything even down to which page I should go to in GitHub to create an access token with permission to publish to the registry!

I wrote up a new TIL on Publishing a Docker container for Microsoft Edit to the GitHub Container Registry with a revised and condensed version of everything I learned today.

2024

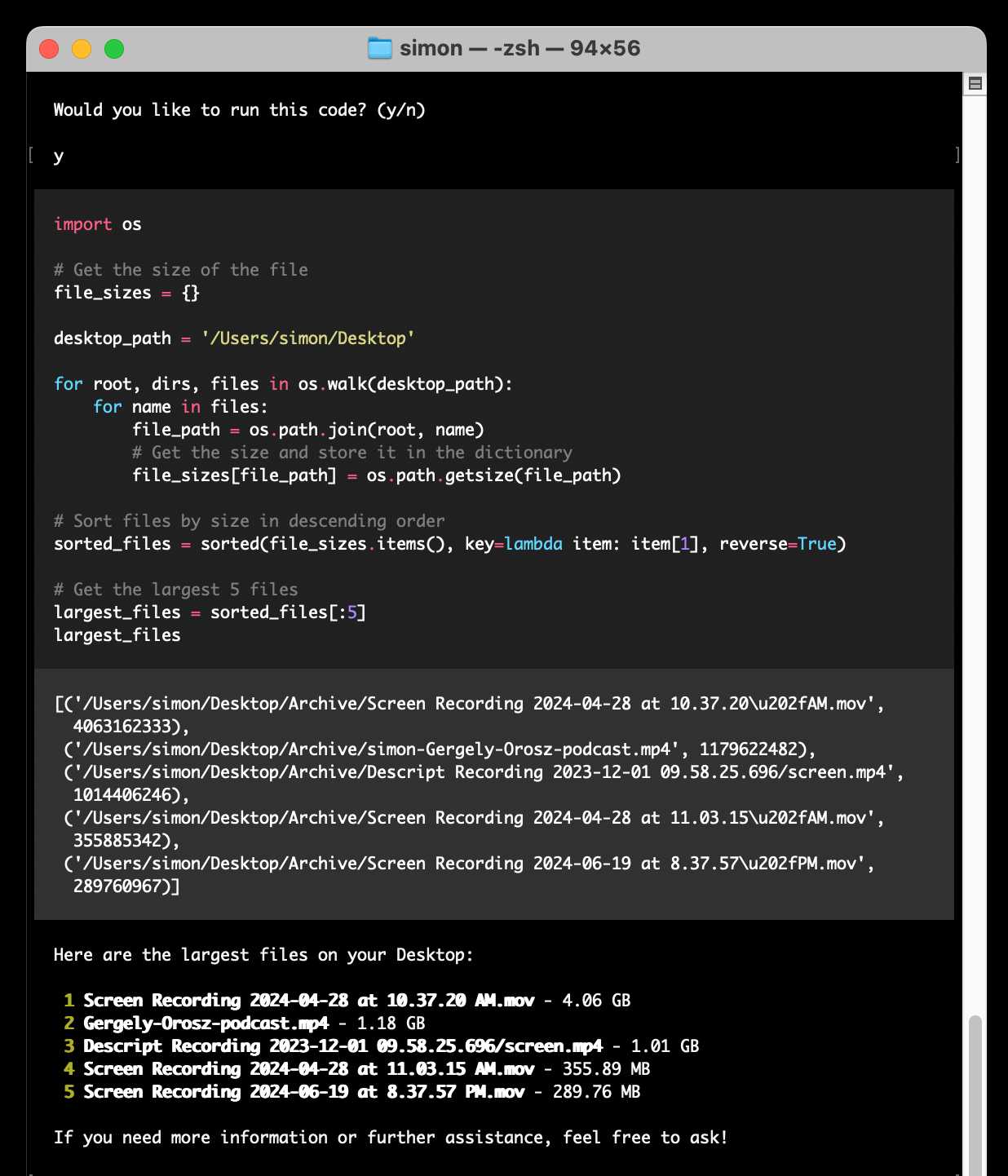

open-interpreter (via) This "natural language interface for computers" open source ChatGPT Code Interpreter alternative has been around for a while, but today I finally got around to trying it out.

Here's how I ran it (without first installing anything) using uv:

uvx --from open-interpreter interpreter

The default mode asks you for an OpenAI API key so it can use gpt-4o - there are a multitude of other options, including the ability to use local models with interpreter --local.

It runs in your terminal and works by generating Python code to help answer your questions, asking your permission to run it and then executing it directly on your computer.

I pasted in an API key and then prompted it with this:

find largest files on my desktop

Here's the full transcript.

Since code is run directly on your machine there are all sorts of ways things could go wrong if you don't carefully review the generated code before hitting "y". The team have an experimental safe mode in development which works by scanning generated code with semgrep. I'm not convinced by that approach: I think executing code in a sandbox would be a much more robust solution here - but sandboxing Python is still a very difficult problem.

They do at least have an experimental Docker integration.

Initial explorations of Anthropic’s new Computer Use capability

Two big announcements from Anthropic today: a new Claude 3.5 Sonnet model and a new API mode that they are calling computer use.

[... 1,569 words]

jefftriplett/django-startproject (via)

Django's django-admin startproject and startapp commands include a --template option which can be used to specify an alternative template for generating the initial code.

Jeff Triplett actively maintains his own template for new projects, which includes the pattern that I personally prefer of keeping settings and URLs in a config/ folder. It also configures the development environment to run using Docker Compose.

The latest update adds support for Python 3.13, Django 5.1 and uv. It's neat how you can get started without even installing Django using uv run like this:

uv run --with=django django-admin startproject \
  --extension=ini,py,toml,yaml,yml \
  --template=https://github.com/jefftriplett/django-startproject/archive/main.zip \
  example_project

What’s New in Ruby on Rails 8 (via)

Rails 8 takes SQLite from a lightweight development tool to a reliable choice for production use, thanks to extensive work on the SQLite adapter and Ruby driver.

With the introduction of the solid adapters discussed above, SQLite now has the capability to power Action Cable, Rails.cache, and Active Job effectively, expanding its role beyond just prototyping or testing environments. [...]

- Transactions default to IMMEDIATE mode to improve concurrency.
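To make the IMMEDIATE behaviour concrete, here's a small sketch using Python's sqlite3 module rather than the Rails adapter. It only shows the underlying SQLite mechanism: BEGIN IMMEDIATE takes the write lock at the start of the transaction, so a second writer finds out straight away that it has to wait. The table name, the file name and the zero timeout are assumptions chosen for the demo.

```python
import os
import sqlite3
import tempfile


def immediate_lock_demo() -> tuple:
    """Return (second_writer_blocked, rows_seen_after_commit)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo.db")
        # isolation_level=None: we issue BEGIN/COMMIT ourselves.
        # timeout=0: fail at once instead of waiting for the lock.
        first = sqlite3.connect(path, isolation_level=None, timeout=0)
        second = sqlite3.connect(path, isolation_level=None, timeout=0)
        try:
            first.execute("CREATE TABLE jobs (id INTEGER PRIMARY KEY, name TEXT)")
            # The first writer claims the write lock up front.
            first.execute("BEGIN IMMEDIATE")
            first.execute("INSERT INTO jobs (name) VALUES ('send-email')")
            try:
                second.execute("BEGIN IMMEDIATE")
                second.execute("ROLLBACK")
                blocked = False
            except sqlite3.OperationalError:
                # "database is locked": the lock is already held.
                blocked = True
            first.execute("COMMIT")
            rows = second.execute("SELECT COUNT(*) FROM jobs").fetchone()[0]
            return blocked, rows
        finally:
            first.close()
            second.close()
```

In a real application you would set a non-zero timeout so the second writer waits its turn instead of failing, which is the situation where starting with the write lock already in hand pays off.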

Also included in Rails 8: Kamal, a new automated deployment system by 37signals for self-hosting web applications on hardware or virtual servers:

Kamal basically is Capistrano for Containers, without the need to carefully prepare servers in advance. No need to ensure that the servers have just the right version of Ruby or other dependencies you need. That all lives in the Docker image now. You can boot a brand new Ubuntu (or whatever) server, add it to the list of servers in Kamal, and it’ll be auto-provisioned with Docker, and run right away.

More from the official blog post about the release:

At 37signals, we're building a growing suite of apps that use SQLite in production with ONCE. There are now thousands of installations of both Campfire and Writebook running in the wild that all run SQLite. This has meant a lot of real-world pressure on ensuring that Rails (and Ruby) is working that wonderful file-based database as well as it can be. Through proper defaults like WAL and IMMEDIATE mode. Special thanks to Stephen Margheim for a slew of such improvements and Mike Dalessio for solving a last-minute SQLite file corruption issue in the Ruby driver.

Docker images using uv’s python (via) Michael Kennedy interviewed uv/Ruff lead Charlie Marsh on his Talk Python podcast, and was inspired to try uv with Talk Python's own infrastructure, a single 8 CPU server running 17 Docker containers (status page here).

The key line they're now using is this:

RUN uv venv --python 3.12.5 /venv

This downloads the standalone Python 3.12.5 build that uv selects and creates a virtual environment for it at /venv, all in one go.

Why I Still Use Python Virtual Environments in Docker (via) Hynek Schlawack argues for using virtual environments even when running Python applications in a Docker container. This argument was most convincing to me:

I'm responsible for dozens of services, so I appreciate the consistency of knowing that everything I'm deploying is in /app, and if it's a Python application, I know it's a virtual environment, and if I run /app/bin/python, I get the virtual environment's Python with my application ready to be imported and run.

Also:

It’s good to use the same tools and primitives in development and in production.

Also worth a look: Hynek's guide to Production-ready Docker Containers with uv, an actively maintained guide that aims to reflect ongoing changes made to uv itself.
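The "/app/bin/python is the virtual environment's Python" convention is easy to check for yourself. This is a rough sketch using the standard library venv module, not Hynek's or uv's tooling, and it assumes a POSIX layout where the interpreter lives under bin/. The `venv_prefix` helper is a made-up name for illustration.

```python
import subprocess
import tempfile
import venv
from pathlib import Path


def venv_prefix(app_dir: Path) -> str:
    """Create a virtual environment at app_dir and ask its Python for sys.prefix."""
    # symlinks=True mirrors what `python -m venv` does by default on POSIX.
    venv.create(app_dir, with_pip=False, symlinks=True)
    python = app_dir / "bin" / "python"  # POSIX layout assumed
    result = subprocess.run(
        [str(python), "-c", "import sys; print(sys.prefix)"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


def demo() -> bool:
    """Return True if the environment's Python reports the environment as its prefix."""
    with tempfile.TemporaryDirectory() as tmp:
        app_dir = Path(tmp) / "app"
        return Path(venv_prefix(app_dir)).resolve() == app_dir.resolve()
```

Running the interpreter by its path is enough: nothing needs to be "activated" first, which is why the pattern works well as a container entry point.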

Testcontainers (via) Not sure how I missed this: Testcontainers is a family of testing libraries (for Python, Go, JavaScript, Ruby, Rust and a bunch more) that make it trivial to spin up a service such as PostgreSQL or Redis in a container for the duration of your tests and then spin it back down again.

The Python example code is delightful:

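from testcontainers.core.container import DockerContainer
from testcontainers.core.waiting_utils import wait_for_logs

redis = DockerContainer("redis:5.0.3-alpine").with_exposed_ports(6379)
redis.start()
wait_for_logs(redis, "Ready to accept connections")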

I much prefer integration-style tests over unit tests, and I like to make sure any of my projects that depend on PostgreSQL or similar can run their tests against a real running instance. I've invested heavily in spinning up Varnish or Elasticsearch ephemeral instances in the past - Testcontainers look like they could save me a lot of time.

The open source project started in 2015, spun off a company called AtomicJar in 2021 and was acquired by Docker in December 2023.

container2wasm (via) “Converts a container to WASM with emulation by Bochs (for x86_64 containers) and TinyEMU (for riscv64 containers)”—effectively letting you take a Docker container and turn it into a WebAssembly blob that can then run in any WebAssembly host environment, including the browser.

Run “c2w ubuntu:22.04 out.wasm” to output a WASM binary for the Ubuntu 22.04 container from Docker Hub, then “wasmtime out.wasm uname -a” to run a command.

Even better, check out the live browser demos linked from the README, which let you do things like run a Python interpreter in a Docker container directly in your browser.

2023

How ima.ge.cx works (via) ima.ge.cx is Aidan Steele’s web tool for browsing the contents of Docker images hosted on Docker Hub. The architecture is really interesting: it’s a set of AWS Lambda functions, written in Go, that fetch metadata about the images using Step Functions and then cache it in DynamoDB and S3. It uses S3 Select to serve directory listings from newline-delimited JSON in S3 without retrieving the whole file.
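The newline-delimited JSON trick is worth a quick illustration. This sketch is a local stand-in, not Aidan's code and not the S3 Select API: it filters an index one line at a time, which is roughly the job the server-side query does so that the whole file never has to be downloaded. The `parent`, `name` and `size` fields are hypothetical.

```python
import json
from typing import Iterable, Iterator

# Hypothetical index: one JSON object per line, one line per file in the image.
INDEX = "\n".join(
    json.dumps(entry)
    for entry in [
        {"parent": "/", "name": "etc", "size": 0},
        {"parent": "/etc", "name": "hosts", "size": 174},
        {"parent": "/etc", "name": "passwd", "size": 922},
        {"parent": "/usr/bin", "name": "env", "size": 43352},
    ]
)


def list_directory(lines: Iterable[str], parent: str) -> Iterator[dict]:
    """Yield entries whose parent directory matches, parsing one line at a time."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        entry = json.loads(line)
        if entry["parent"] == parent:
            yield entry


def names_in(parent: str) -> list:
    """Convenience wrapper returning just the file names in a directory."""
    return [entry["name"] for entry in list_directory(INDEX.splitlines(), parent)]
```

Because every line is an independent JSON document, the filter never needs to hold more than one entry in memory, which is what makes the format a good fit for this kind of query.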

Docker can copy in files directly from another image. I did not know you could do this in a Dockerfile:

COPY --from=lubien/tired-proxy:2 /tired-proxy /tired-proxy

2022

Testing Datasette parallel SQL queries in the nogil/python fork. As part of my ongoing research into whether Datasette can be sped up by running SQL queries in parallel I’ve been growing increasingly suspicious that the GIL is holding me back. I know the sqlite3 module releases the GIL and was hoping that would give me parallel queries, but it looks like there’s still a ton of work going on in Python GIL land creating Python objects representing the results of the query.

Sam Gross has been working on a nogil fork of Python and I decided to give it a go. It’s published as a Docker image and it turns out trying it out really did just take a few commands... and it produced the desired results, my parallel code started beating my serial code where previously the two had produced effectively the same performance numbers.

I’m pretty stunned by this. I had no idea how far along the nogil fork was. It’s amazing to see it in action.
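For context, this is roughly the shape of the serial versus parallel comparison, sketched with a thread pool and one SQLite connection per query. It is not the Datasette benchmark itself: the table, the queries and the helper names are invented for illustration, and it makes no attempt to measure timings.

```python
import os
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor

# Hypothetical queries standing in for the ones a page might need.
QUERIES = [
    "SELECT COUNT(*) FROM numbers WHERE n % 2 = 0",
    "SELECT SUM(n) FROM numbers",
    "SELECT MAX(n) FROM numbers",
]


def build_database(path: str, rows: int = 1000) -> None:
    """Create a small table holding the integers 0..rows-1."""
    conn = sqlite3.connect(path)
    with conn:
        conn.execute("CREATE TABLE numbers (n INTEGER)")
        conn.executemany(
            "INSERT INTO numbers VALUES (?)", ((i,) for i in range(rows))
        )
    conn.close()


def run_query(path: str, sql: str) -> int:
    """Run one query on its own connection so nothing is shared across threads."""
    conn = sqlite3.connect(path)
    try:
        return conn.execute(sql).fetchone()[0]
    finally:
        conn.close()


def run_serial(path: str) -> list:
    return [run_query(path, sql) for sql in QUERIES]


def run_parallel(path: str) -> list:
    with ThreadPoolExecutor(max_workers=len(QUERIES)) as pool:
        return list(pool.map(lambda sql: run_query(path, sql), QUERIES))


def demo() -> tuple:
    """Build a scratch database and return (serial_results, parallel_results)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "numbers.db")
        build_database(path)
        return run_serial(path), run_parallel(path)
```

Both functions return the same results. Whether the parallel version is actually faster depends on how much of the work happens while the GIL is held, which is the question the nogil experiment was probing.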

How to push tagged Docker releases to Google Artifact Registry with a GitHub Action. Ben Welsh’s writeup includes detailed step-by-step instructions for getting the mysterious “Workload Identity Federation” mechanism to work with GitHub Actions and Google Cloud. I’ve been dragging my heels on figuring this out for quite a while, so it’s great to see the steps described at this level of detail.

2021

Introduction to heredocs in Dockerfiles

(via)

This is a fantastic upgrade to Dockerfile syntax, enabled by BuildKit and a new frontend for executing the Dockerfile that can be specified with a #syntax= directive. I often like to create a standalone Dockerfile that works without needing other files from a directory, so being able to use <<EOF syntax to populate configuration files from inline blocks of code is really handy.

Weeknotes: Apache proxies in Docker containers, refactoring Datasette

Updates to six major projects this week, plus finally some concrete progress towards Datasette 1.0.

[... 1,630 words]

aws-lambda-adapter. AWS Lambda added support for Docker containers last year, but with a very weird shape: you can run anything on Lambda that fits in a Docker container, but unlike Google Cloud Run your application doesn’t get to speak HTTP: it needs to run code that listens for proprietary AWS Lambda events instead. The obvious way to fix this is to run some kind of custom proxy inside the container which turns AWS runtime events into HTTP calls to a regular web application. Serverlessish and re:Web are two open source projects that implemented this, and now AWS have their own implementation of that pattern, written in Rust.
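The proxy pattern itself is simple enough to sketch in a few lines of Python. To be clear about the assumptions: this is not how aws-lambda-adapter is implemented, the event is a simplified dictionary with `httpMethod`, `path` and `body` keys, and the tiny `App` server is a stand-in for the regular web application running inside the container.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class App(BaseHTTPRequestHandler):
    """Stand-in for a regular web application listening on localhost."""

    def do_GET(self):
        body = f"hello from {self.path}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def forward_event(event: dict, base_url: str) -> dict:
    """Turn a (simplified, hypothetical) event into an HTTP call and back."""
    payload = event.get("body")
    request = urllib.request.Request(
        base_url + event["path"],
        method=event["httpMethod"],
        data=payload.encode() if payload else None,
    )
    # Skip any proxy settings from the environment: the app is on localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request) as response:
        return {"statusCode": response.status, "body": response.read().decode()}


def demo() -> dict:
    """Start the stand-in app on a free port and push one event through it."""
    server = HTTPServer(("127.0.0.1", 0), App)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        port = server.server_address[1]
        event = {"httpMethod": "GET", "path": "/status", "body": None}
        return forward_event(event, f"http://127.0.0.1:{port}")
    finally:
        server.shutdown()
        server.server_close()
```

The web application never needs to know it is running on Lambda: it just sees an ordinary HTTP request arriving on localhost.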

Weeknotes: Learning Kubernetes, learning Web Components

I’ve been mainly climbing the learning curve for Kubernetes and Web Components this week. I also released Datasette 0.59.1 with Python 3.10 compatibility and an updated Docker image.

[... 1,101 words]

We never shipped a great commercial product. The reason for that is we didn’t focus. We tried to do a little bit of everything. It’s hard enough to maintain the growth of your developer community and build one great commercial product, let alone three or four, and it is impossible to do both, but that’s what we tried to do and we spent an enormous amount of money doing it.

GitHub’s Engineering Team has moved to Codespaces. My absolute dream development environment is one where I can spin up a new, working development environment in seconds - to try something new on a branch, or because I broke something and don’t want to spend time figuring out how to fix it. This article from GitHub explains how they got there: from a half-day setup to a 45-minute bootstrap in a codespace, then to five minutes through shallow cloning and a nightly pre-built Docker image and finally to 10 seconds by setting up “pools of codespaces, fully cloned and bootstrapped, waiting to be connected with a developer who wants to get to work”.

Best Practices Around Production Ready Web Apps with Docker Compose (via) I asked on Twitter for some tips on Docker Compose and was pointed to this article by Nick Janetakis, which has a whole host of useful tips and patterns I hadn’t encountered before.

Weeknotes: Docker architectures, sqlite-utils 3.7, nearly there with Datasette 0.57

This week I learned a whole bunch about using Docker to emulate different architectures, released sqlite-utils 3.7 and made a ton of progress towards the almost-ready-to-ship Datasette 0.57.

[... 1,081 words]

logpaste (via) Useful example of how to use the Litestream SQLite replication tool in a Dockerized application: S3 credentials are passed to the container on startup, it then attempts to restore the SQLite database from S3 and starts a Litestream process in the same container to periodically synchronize changes back up to the S3 bucket.

2020

New for AWS Lambda – Container Image Support. “You can now package and deploy Lambda functions as container images of up to 10 GB in size”—can’t wait to try this out with Datasette.

Sandboxing and Workload Isolation (via) Fly.io run other people’s code in containers, so workload isolation is a Big Deal for them. This blog post goes deep into the history of isolation and the various different approaches you can take, and fills me with confidence that the team at Fly.io know their stuff. I got to the bottom and found it had been written by Thomas Ptacek, which didn’t surprise me in the slightest.

GitHub Actions: Manual triggers with workflow_dispatch (via) New GitHub Actions feature which fills a big gap in the offering: you can now create “workflow dispatch” events which provide a button for manually triggering an action—and you can specify extra UI form fields that can customize how that action runs. This turns Actions into an interactive automation engine for any code that can be wrapped in a Docker container.