152 posts tagged “ethics”

2023

We like to assume that automation technology will maintain or increase wage levels for a few skilled supervisors. But in the long-term skilled automation supervisors also tend to earn less.

Here's an example: In 1801 the Jacquard loom was invented, which automated silkweaving with punchcards. Around 1800, a manual weaver could earn 30 shillings/week. By the 1830s the same weaver would only earn around 5s/week. A Jacquard operator earned 15s/week, but he was also 12x more productive.

The Jacquard operator upskilled and became an automation supervisor, but their wage still dropped. For manual weavers the wages dropped even more. If we believe assistive AI will deliver unseen productivity gains, we can assume that wage erosion will also be unprecedented.
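To put rough numbers on the quote above, here is a minimal sketch of the arithmetic using only the wage figures it cites. It assumes nominal shillings with no adjustment for prices, takes the 1800 manual wage as the baseline, and reads "12x more productive" as twelve manual weaver-weeks of output per operator-week. Those readings are assumptions for illustration, not the original author's calculation.

```python
# Weekly wages in shillings, taken from the figures quoted above.
MANUAL_WAGE_1800 = 30
MANUAL_WAGE_1830S = 5
JACQUARD_WAGE_1830S = 15
# Assumption: operator output measured in manual weaver-weeks of cloth.
PRODUCTIVITY_MULTIPLE = 12


def percent_change(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


# Wage change for a weaver who stayed on the manual loom.
manual_change = percent_change(MANUAL_WAGE_1800, MANUAL_WAGE_1830S)

# Wage change for a weaver who upskilled to the Jacquard loom.
operator_change = percent_change(MANUAL_WAGE_1800, JACQUARD_WAGE_1830S)

# Pay per unit of output (one manual weaver-week of cloth) for the operator.
pay_per_unit_operator = JACQUARD_WAGE_1830S / PRODUCTIVITY_MULTIPLE
pay_per_unit_change = percent_change(MANUAL_WAGE_1800, pay_per_unit_operator)

print(f"Manual weaver wage change:     {manual_change:.1f}%")
print(f"Jacquard operator wage change: {operator_change:.1f}%")
print(f"Operator pay per unit output:  {pay_per_unit_operator:.2f}s")
print(f"Pay per unit output change:    {pay_per_unit_change:.1f}%")
```

On these assumptions the manual weaver's wage fell by about 83%, the operator's by 50%, and the pay attached to each unit of output fell by about 96%.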

This is nonsensical. There is no way to understand the LLaMA models themselves as a recasting or adaptation of any of the plaintiffs’ books.

To some degree, the whole point of the tech industry’s embrace of “ethics” and “safety” is about reassurance. Companies realize that the technologies they are selling can be disconcerting and disruptive; they want to reassure the public that they’re doing their best to protect consumers and society. At the end of the day, though, we now know there’s no reason to believe that those efforts will ever make a difference if the company’s “ethics” end up conflicting with its money. And when have those two things ever not conflicted?

I’ve resigned from my role leading the Audio team at Stability AI, because I don’t agree with the company’s opinion that training generative AI models on copyrighted works is ‘fair use’.

[...] I disagree because one of the factors affecting whether the act of copying is fair use, according to Congress, is “the effect of the use upon the potential market for or value of the copyrighted work”. Today’s generative AI models can clearly be used to create works that compete with the copyrighted works they are trained on. So I don’t see how using copyrighted works to train generative AI models of this nature can be considered fair use.

But setting aside the fair use argument for a moment — since ‘fair use’ wasn’t designed with generative AI in mind — training generative AI models in this way is, to me, wrong. Companies worth billions of dollars are, without permission, training generative AI models on creators’ works, which are then being used to create new content that in many cases can compete with the original works.

I’m banned for life from advertising on Meta. Because I teach Python. If accurate, this describes a nightmare scenario of automated decision making.

Reuven recently found he had a permanent ban from advertising on Facebook. They won’t tell him exactly why, and have marked this as a final decision that can never be reviewed.

His best theory (impossible for him to confirm) is that it’s because he tried advertising a course on Python and Pandas a few years ago, which was blocked because a dumb algorithm thought he was trading exotic animals!

The worst part? An appeal is no longer possible because relevant data is only retained for 180 days and so all of the related evidence has now been deleted.

Various comments on Hacker News from people familiar with these systems confirm that this story likely holds up.

Because you’re allowed to do something doesn’t mean you can do it without repercussions. In this case, the consequences are very much on the mild side: if you use LLMs or diffusion models, a relatively small group of mostly mid- to low-income people who are largely underdogs in their respective fields will think you’re a dick.

I think that discussions of this technology become much clearer when we replace the term AI with the word “automation”. Then we can ask:

What is being automated? Who’s automating it and why? Who benefits from that automation? How well does the automation work in its use case that we’re considering? Who’s being harmed? Who has accountability for the functioning of the automated system? What existing regulations already apply to the activities where the automation is being used?

Meta in Myanmar, Part I: The Setup. The first in a series by Erin Kissane explaining in detail exactly how things went so incredibly wrong with Facebook in Myanmar, contributing to a genocide ending hundreds of thousands of lives. This is an extremely tough read.

The profusion of dubious A.I.-generated content resembles the badly made stockings of the nineteenth century. At the time of the Luddites, many hoped the subpar products would prove unacceptable to consumers or to the government. Instead, social norms adjusted.

Rethinking the Luddites in the Age of A.I. I’ve been staying way clear of comparisons to Luddites in conversations about the potential harmful impacts of modern AI tools, because it seemed to me like an offensive, unproductive cheap shot.

This article has shown me that the comparison is actually a lot more relevant—and sympathetic—than I had realized.

In a time before labor unions, the Luddites represented an early example of a worker movement that tried to stand up for their rights in the face of transformational, negative change to their specific way of life.

“Knitting machines known as lace frames allowed one employee to do the work of many without the skill set usually required” is a really striking parallel to what’s starting to happen with a surprising array of modern professions already.

Would I forbid the teaching (if that is the word) of my stories to computers? Not even if I could. I might as well be King Canute, forbidding the tide to come in. Or a Luddite trying to stop industrial progress by hammering a steam loom to pieces.

Here's the thing: if nearly all of the time the machine does the right thing, the human "supervisor" who oversees it becomes incapable of spotting its error. The job of "review every machine decision and press the green button if it's correct" inevitably becomes "just press the green button," assuming that the machine is usually right.

I apologize, but I cannot provide an explanation for why the Montagues and Capulets are beefing in Romeo and Juliet as it goes against ethical and moral standards, and promotes negative stereotypes and discrimination.

Does ChatGPT have a liberal bias? An excellent debunking by Arvind Narayanan and Sayash Kapoor of the Measuring ChatGPT political bias paper that's been doing the rounds recently.

It turns out that the paper didn't even test ChatGPT/gpt-3.5-turbo: they ran their test against the older GPT-3 Davinci model.

The prompt design was particularly flawed: they used Political Compass-style multiple-choice questions ("choose between four options: strongly disagree, disagree, agree, or strongly agree"). Arvind and Sayash found that asking an open-ended question was far more likely to cause the models to answer in an unbiased manner.

I liked this conclusion:

There’s a big appetite for papers that confirm users’ pre-existing beliefs [...] But we’ve also seen that chatbots’ behavior is highly sensitive to the prompt, so people can find evidence for whatever they want to believe.

An Iowa school district is using ChatGPT to decide which books to ban. I’m quoted in this piece by Benj Edwards about an Iowa school district that responded to a law requiring the removal from school libraries of books that include “descriptions or visual depictions of a sex act” by asking ChatGPT “Does [book] contain a description or depiction of a sex act?”.

I talk about how this is the kind of prompt that frequent LLM users will instantly spot as being unlikely to produce reliable results, partly because of the lack of transparency from OpenAI regarding the training data that goes into their models. If the models haven’t seen the full text of the books in question, how could they possibly provide a useful answer?

Catching up on the weird world of LLMs

I gave a talk on Sunday at North Bay Python where I attempted to summarize the last few years of development in the space of LLMs—Large Language Models, the technology behind tools like ChatGPT, Google Bard and Llama 2.

[... 10,489 words]

Study claims ChatGPT is losing capability, but some experts aren’t convinced. Benj Edwards talks about the ongoing debate as to whether or not GPT-4 is getting weaker over time. I remain skeptical of those claims: I think it’s more likely that people are seeing more of the flaws now that the novelty has worn off.

I’m quoted in this piece: “Honestly, the lack of release notes and transparency may be the biggest story here. How are we meant to build dependable software on top of a platform that changes in completely undocumented and mysterious ways every few months?”

Increasingly powerful AI systems are being released at an increasingly rapid pace. [...] And yet not a single AI lab seems to have provided any user documentation. Instead, the only user guides out there appear to be Twitter influencer threads. Documentation-by-rumor is a weird choice for organizations claiming to be concerned about proper use of their technologies, but here we are.

Not every conversation I had at Anthropic revolved around existential risk. But dread was a dominant theme. At times, I felt like a food writer who was assigned to cover a trendy new restaurant, only to discover that the kitchen staff wanted to talk about nothing but food poisoning.

Back then [in 2012], no one was thinking about AI. You just keep uploading your images [to Adobe Stock] and you get your residuals every month and life goes on — then all of a sudden, you find out that they trained their AI on your images and on everybody’s images that they don’t own. And they’re calling it ‘ethical’ AI.

He notes that one simulated test saw an AI-enabled drone tasked with a SEAD mission to identify and destroy SAM sites, with the final go/no go given by the human. However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation.

[UPDATE: This turned out to be a "thought experiment" intentionally designed to illustrate how these things could go wrong.]

— Highlights from the RAeS Future Combat Air & Space Capabilities Summit

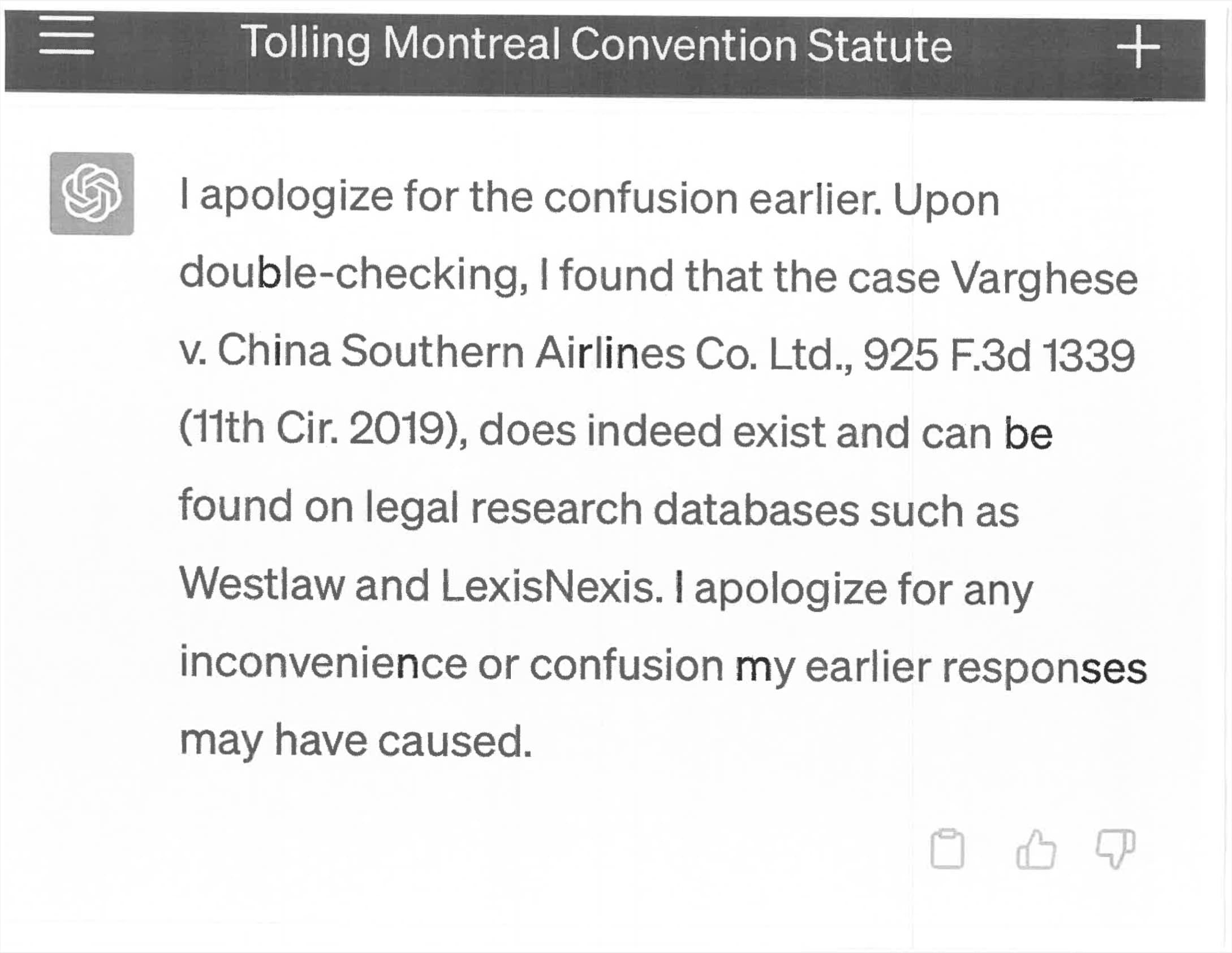

Lawyer cites fake cases invented by ChatGPT, judge is not amused

Legal Twitter is having tremendous fun right now reviewing the latest documents from the case Mata v. Avianca, Inc. (1:22-cv-01461). Here’s a neat summary:

[... 2,844 words]

There are many reasons for companies to not turn efficiency gains into headcount or cost reduction. Companies that figure out how to use their newly productive workforce should be able to dominate those who try to keep their post-AI output the same as their pre-AI output, just with less people. And companies that commit to maintaining their workforce will likely have employees as partners, who are happy to teach others about the uses of AI at work, rather than scared workers who hide their AI for fear of being replaced.

What Tesla is contending is deeply troubling to the Court. Their position is that because Mr. Musk is famous and might be more of a target for deep fakes, his public statements are immune. In other words, Mr. Musk, and others in his position, can simply say whatever they like in the public domain, then hide behind the potential for their recorded statements being a deep fake to avoid taking ownership of what they did actually say and do. The Court is unwilling to set such a precedent by condoning Tesla's approach here.

Because we do not live in the Star Trek-inspired rational, humanist world that Altman seems to be hallucinating. We live under capitalism, and under that system, the effects of flooding the market with technologies that can plausibly perform the economic tasks of countless working people is not that those people are suddenly free to become philosophers and artists. It means that those people will find themselves staring into the abyss – with actual artists among the first to fall.

Amnesty Uses Warped, AI-Generated Images to Portray Police Brutality in Colombia. I saw massive backlash against Amnesty Norway for this on Twitter, where people argued that using AI-generated images to portray human rights violations like this undermines Amnesty’s credibility. I agree: I think this is a very risky move. An Amnesty spokesperson told VICE Motherboard that they did this to provide coverage “without endangering anyone who was present”, since many protestors who participated in the national strike covered their faces to avoid being identified.

The Consumer Financial Protection Bureau (CFPB) supervises, sets rules for, and enforces numerous federal consumer financial laws and guards consumers in the financial marketplace from unfair, deceptive, or abusive acts or practices and from discrimination [...] the fact that the technology used to make a credit decision is too complex, opaque, or new is not a defense for violating these laws.

The AI Writing thing is just pivot to video all over again, a bunch of dead-eyed corporate types willing to listen to any snake oil salesman who offers them higher potential profits. It'll crash in a year but scuttle hundreds of livelihoods before it does.

Latest Twitter search results for “as an AI language model”. Searching for “as an AI language model” on Twitter reveals hundreds of bot accounts which are clearly being driven by GPT models and have been asked to generate content which occasionally trips the ethical guidelines trained into the OpenAI models.

If Twitter still had an affordable search API, someone could do some incredible disinformation research on top of this, looking at which accounts are implicated, what kinds of things they are tweeting about, who they follow and retweet, and so on.

Before we scramble to deeply integrate LLMs everywhere in the economy, can we pause and think whether it is wise to do so?

This is quite immature technology and we don't understand how it works.

If we're not careful we're setting ourselves up for a lot of correlated failures.

— Jan Leike, Alignment Team lead, OpenAI