51 posts tagged “git”

2025

TIL: Downloading archived Git repositories from archive.softwareheritage.org

(via)

Back in February I blogged about a neat Python library called sqlite-s3vfs for accessing SQLite databases hosted in an S3 bucket, released as MIT licensed open source by the UK government's Department for Business and Trade.

I went looking for it today and found that the github.com/uktrade/sqlite-s3vfs repository is now a 404.

Since this is taxpayer-funded open source software I saw it as my moral duty to try and restore access! It turns out a full copy had been captured by the Software Heritage archive, so I was able to restore the repository from there. My copy is now archived at simonw/sqlite-s3vfs.

The process for retrieving an archive was non-obvious, so I've written up a TIL and also published a new Software Heritage Repository Retriever tool which takes advantage of the CORS-enabled APIs provided by Software Heritage. Here's the Claude Code transcript from building that.

simonw/git-scraper-template. I built this new GitHub template repository in preparation for a workshop I'm giving at NICAR (the data journalism conference) next week on Cutting-edge web scraping techniques.

One of the topics I'll be covering is Git scraping - creating a GitHub repository that uses scheduled GitHub Actions workflows to grab copies of websites and data feeds and store their changes over time using Git.

This template repository is designed to be the fastest possible way to get started with a new Git scraper: simple create a new repository from the template and paste the URL you want to scrape into the description field and the repository will be initialized with a custom script that scrapes and stores that URL.

It's modeled after my earlier shot-scraper-template tool which I described in detail in Instantly create a GitHub repository to take screenshots of a web page.

The new git-scraper-template repo took some help from Claude to figure out. It uses a custom script to download the provided URL and derive a filename to use based on the URL and the content type, detected using file --mime-type -b "$file_path" against the downloaded file.

It also detects if the downloaded content is JSON and, if it is, pretty-prints it using jq - I find this is a quick way to generate much more useful diffs when the content changes.

2024

otterwiki (via) It's been a while since I've seen a new-ish Wiki implementation, and this one by Ralph Thesen is really nice. It's written in Python (Flask + SQLAlchemy + mistune for Markdown + GitPython) and keeps all of the actual wiki content as Markdown files in a local Git repository.

The installation instructions are a little in-depth as they assume a production installation with Docker or systemd - I figured out this recipe for trying it locally using uv:

git clone https://github.com/redimp/otterwiki.git

cd otterwiki

mkdir -p app-data/repository

git init app-data/repository

echo "REPOSITORY='${PWD}/app-data/repository'" >> settings.cfg

echo "SQLALCHEMY_DATABASE_URI='sqlite:///${PWD}/app-data/db.sqlite'" >> settings.cfg

echo "SECRET_KEY='$(echo $RANDOM | md5sum | head -c 16)'" >> settings.cfg

export OTTERWIKI_SETTINGS=$PWD/settings.cfg

uv run --with gunicorn gunicorn --bind 127.0.0.1:8080 otterwiki.server:app

Why GitHub Actually Won (via) GitHub co-founder Scott Chacon shares some thoughts on how GitHub won the open source code hosting market. Shortened to two words: timing, and taste.

There are some interesting numbers in here. I hadn't realized that when GitHub launched in 2008 the term "open source" had only been coined ten years earlier, in 1998. This paper by Dirk Riehle estimates there were 18,000 open source projects in 2008 - Scott points out that today there are over 280 million public repositories on GitHub alone.

Scott's conclusion:

We were there when a new paradigm was being born and we approached the problem of helping people embrace that new paradigm with a developer experience centric approach that nobody else had the capacity for or interest in.

AI-powered Git Commit Function

(via)

Andrej Karpathy built a shell alias, gcm, which passes your staged Git changes to an LLM via my LLM tool, generates a short commit message and then asks you if you want to "(a)ccept, (e)dit, (r)egenerate, or (c)ancel?".

Here's the incantation he's using to generate that commit message:

git diff --cached | llm "

Below is a diff of all staged changes, coming from the command:

\`\`\`

git diff --cached

\`\`\`

Please generate a concise, one-line commit message for these changes."This pipes the data into LLM (using the default model, currently gpt-4o-mini unless you set it to something else) and then appends the prompt telling it what to do with that input.

EpicEnv

(via)

Dan Goodman's tool for managing shared secrets via a Git repository. This uses a really neat trick: you can run epicenv invite githubuser and the tool will retrieve that user's public key from github.com/{username}.keys (here's mine) and use that to encrypt the secrets such that the user can decrypt them with their private key.

1991-WWW-NeXT-Implementation on GitHub. I fell down a bit of a rabbit hole today trying to answer that question about when World Wide Web Day was first celebrated. I found my way to www.w3.org/History/1991-WWW-NeXT/Implementation/ - an Apache directory listing of the source code for Tim Berners-Lee's original WorldWideWeb application for NeXT!

The code wasn't particularly easy to browse: clicking a .m file would trigger a download rather than showing the code in the browser, and there were no niceties like syntax highlighting.

So I decided to mirror that code to a new repository on GitHub. I grabbed the code using wget -r and was delighted to find that the last modified dates (from the early 1990s) were preserved ... which made me want to preserve them in the GitHub repo too.

I used Claude to write a Python script to back-date those commits, and wrote up what I learned in this new TIL: Back-dating Git commits based on file modification dates.

End result: I now have a repo with Tim's original code, plus commit dates that reflect when that code was last modified.

AWS CodeCommit quietly deprecated (via) CodeCommit is AWS's Git hosting service. In a reply from an AWS employee to this forum thread:

Beginning on 06 June 2024, AWS CodeCommit ceased onboarding new customers. Going forward, only customers who have an existing repository in AWS CodeCommit will be able to create additional repositories.

[...] If you would like to use AWS CodeCommit in a new AWS account that is part of your AWS Organization, please let us know so that we can evaluate the request for allowlisting the new account. If you would like to use an alternative to AWS CodeCommit given this news, we recommend using GitLab, GitHub, or another third party source provider of your choice.

What's weird about this is that, as far as I can tell, this is the first official public acknowledgement from AWS that CodeCommit is no longer accepting customers. The CodeCommit landing page continues to promote the product, though it does link to the How to migrate your AWS CodeCommit repository to another Git provider blog post from July 25th, which gives no direct indication that CodeCommit is being quietly sunset.

I wonder how long they'll continue to support their existing customers?

Amazon QLDB too

It looks like AWS may be having a bit of a clear-out. Amazon QLDB - Quantum Ledger Database (a blockchain-adjacent immutable ledger, launched in 2019) - quietly put out a deprecation announcement in their release history on July 18th (again, no official announcement elsewhere):

End of support notice: Existing customers will be able to use Amazon QLDB until end of support on 07/31/2025. For more details, see Migrate an Amazon QLDB Ledger to Amazon Aurora PostgreSQL.

This one is more surprising, because migrating to a different Git host is massively less work than entirely re-writing a system to use a fundamentally different database.

It turns out there's an infrequently updated community GitHub repo called SummitRoute/aws_breaking_changes which tracks these kinds of changes. Other services listed there include CodeStar, Cloud9, CloudSearch, OpsWorks, Workdocs and Snowmobile, and they cleverly (ab)use the GitHub releases mechanism to provide an Atom feed.

Add tests in a commit before the fix. They should pass, showing the behavior before your change. Then, the commit with your change will update the tests. The diff between these commits represents the change in behavior. This helps the author test their tests (I've written tests thinking they covered the relevant case but didn't), the reviewer to more precisely see the change in behavior and comment on it, and the wider community to understand what the PR description is about.

— Ed Page

uv pip install --exclude-newer example

(via)

A neat new feature of the uv pip install command is the --exclude-newer option, which can be used to avoid installing any package versions released after the specified date.

Here's a clever example of that in use from the typing_extensions packages CI tests that run against some downstream packages:

uv pip install --system -r test-requirements.txt --exclude-newer $(git show -s --date=format:'%Y-%m-%dT%H:%M:%SZ' --format=%cd HEAD)

They use git show to get the date of the most recent commit (%cd means commit date) formatted as an ISO timestamp, then pass that to --exclude-newer.

Use an llm to automagically generate meaningful git commit messages. Neat, thoroughly documented recipe by Harper Reed using my LLM CLI tool as part of a scheme for if you’re feeling too lazy to write a commit message—it uses a prepare-commit-msg Git hook which runs any time you commit without a message and pipes your changes to a model along with a custom system prompt.

How I use git worktrees (via) TIL about worktrees, a Git feature that lets you have multiple repository branches checked out to separate directories at the same time.

The default UI for them is a little unergonomic (classic Git) but Bill Mill here shares a neat utility script for managing them in a more convenient way.

One particularly neat trick: Bill’s “worktree” Bash script checks for a node_modules folder and, if one exists, duplicates it to the new directory using copy-on-write, saving you from having to run yet another lengthy “npm install”.

Figure out who’s leaving the company: dump, diff, repeat (via) Rachel Kroll describes a neat hack for companies with an internal LDAP server or similar machine-readable employee directory: run a cron somewhere internal that grabs the latest version and diffs it against the previous to figure out who has joined or left the company.

I suggest using Git for this - a form of Git scraping - as then you get a detailed commit log of changes over time effectively for free.

I really enjoyed Rachel's closing thought:

Incidentally, if someone gets mad about you running this sort of thing, you probably don't want to work there anyway. On the other hand, if you're able to build such tools without IT or similar getting "threatened" by it, then you might be somewhere that actually enjoys creating interesting and useful stuff. Treasure such places. They don't tend to last.

Inside .git. This single diagram filled in all sorts of gaps in my mental model of how git actually works under the hood.

2023

See the History of a Method with git log -L (via) Neat Git trick from Caleb Hearth that I hadn’t seen before, and it works for Python out of the box:

git log -L :path_with_format:__init__.py

That command displays a log (with diffs) of just the portion of commits that changed the path_with_format function in the __init__.py file.

Tracking SQLite Database Changes in Git (via) A neat trick from Garrit Franke that I hadn’t seen before: you can teach “git diff” how to display human readable versions of the differences between binary files with a specific extension using the following:

git config diff.sqlite3.binary true

git config diff.sqlite3.textconv “echo .dump | sqlite3”

That way you can store binary files in your repo but still get back SQL diffs to compare them.

I still worry about the efficiency of storing binary files in Git, since I expect multiple versions of a text text file to compress together better.

2022

The Perfect Commit

For the last few years I’ve been trying to center my work around creating what I consider to be the Perfect Commit. This is a single commit that contains all of the following:

[... 2,061 words]sqlite-comprehend: run AWS entity extraction against content in a SQLite database

I built a new tool this week: sqlite-comprehend, which passes text from a SQLite database through the AWS Comprehend entity extraction service and stores the returned entities.

[... 1,146 words]A tiny CI system (via) Christian Ştefănescu shares a recipe for building a tiny self-hosted CI system using Git and Redis. A post-receive hook runs when a commit is pushed to the repo and uses redis-cli to push jobs to a list. Then a separate bash script runs a loop with a blocking “redis-cli blpop jobs” operation which waits for new jobs and then executes the CI job as a shell script.

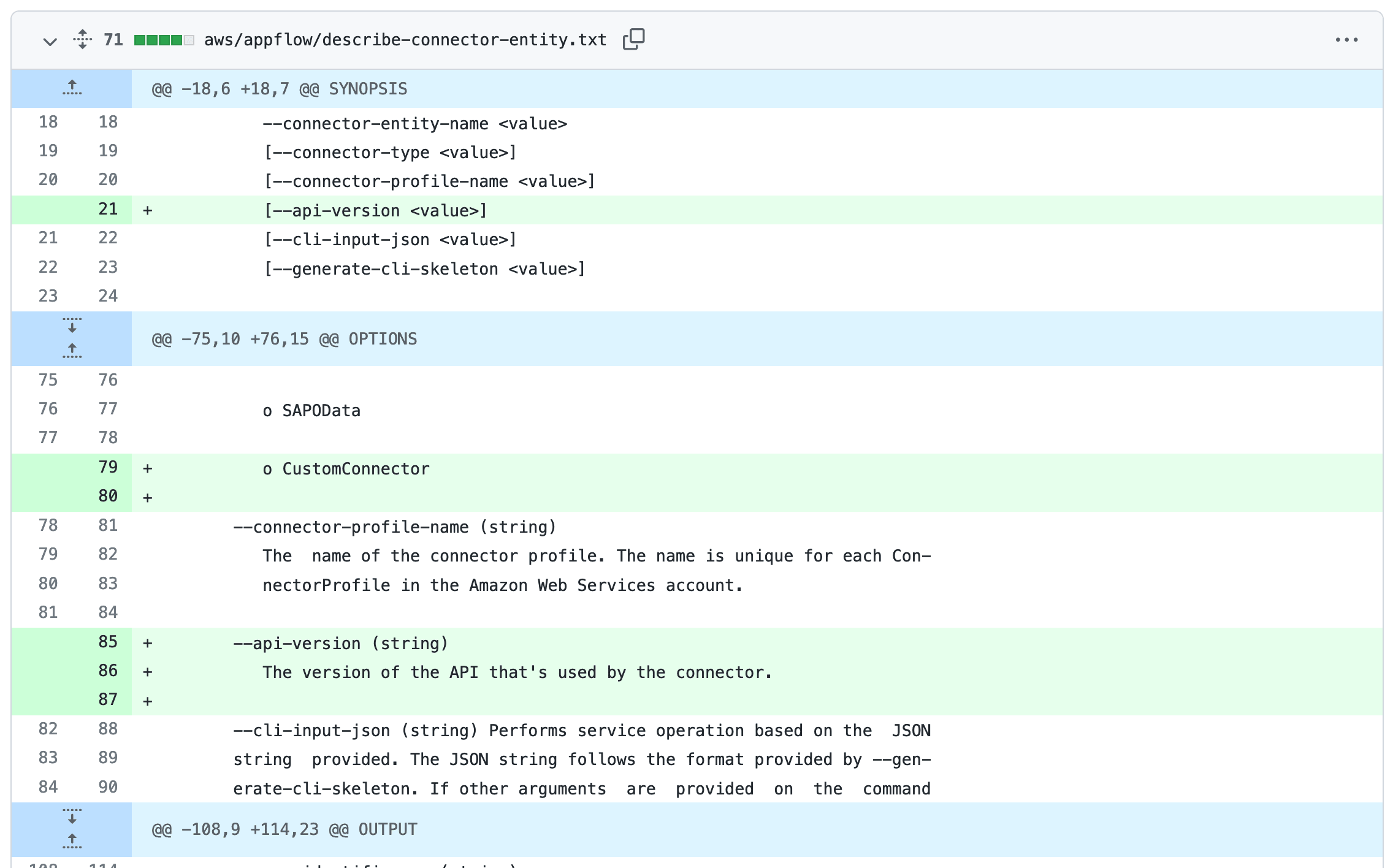

Help scraping: track changes to CLI tools by recording their --help using Git

I’ve been experimenting with a new variant of Git scraping this week which I’m calling Help scraping. The key idea is to track changes made to CLI tools over time by recording the output of their --help commands in a Git repository.

How I build a feature

I’m maintaining a lot of different projects at the moment. I thought it would be useful to describe the process I use for adding a new feature to one of them, using the new sqlite-utils create-database command as an example.

[... 2,850 words]2021

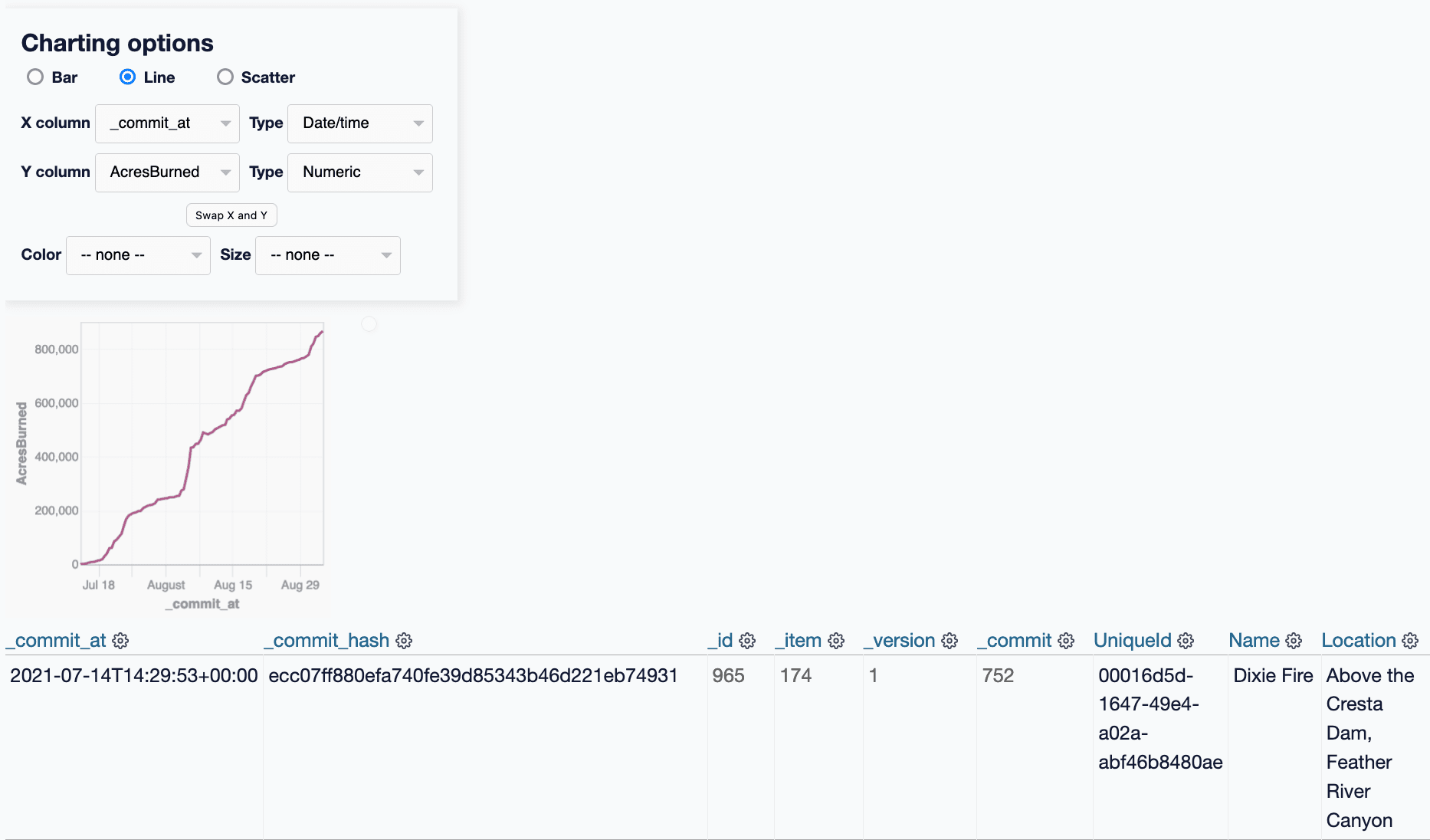

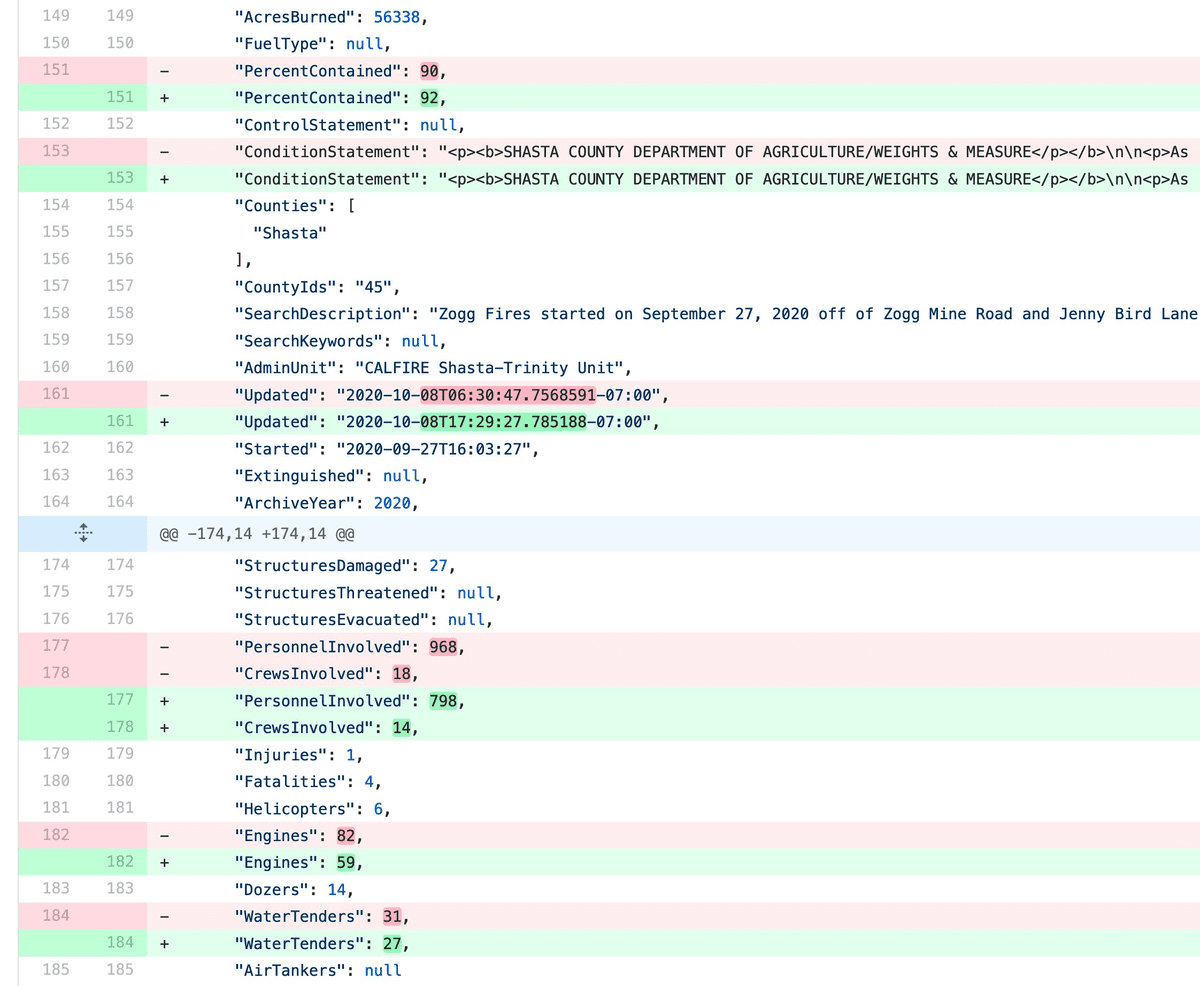

git-history: a tool for analyzing scraped data collected using Git and SQLite

I described Git scraping last year: a technique for writing scrapers where you periodically snapshot a source of data to a Git repository in order to record changes to that source over time.

[... 2,002 words]2020

Commits are snapshots, not diffs (via) Useful, clearly explained revision of some Git fundamentals.

nyt-2020-election-scraper. Brilliant application of git scraping by Alex Gaynor and a growing team of contributors. Takes a JSON snapshot of the NYT’s latest election poll figures every five minutes, then runs a Python script to iterate through the history and build an HTML page showing the trends, including what percentage of the remaining votes each candidate needs to win each state. This is the perfect case study in why it can be useful to take a “snapshot if the world right now” data source and turn it into a git revision history over time.

Git scraping: track changes over time by scraping to a Git repository

Git scraping is the name I’ve given a scraping technique that I’ve been experimenting with for a few years now. It’s really effective, and more people should use it.

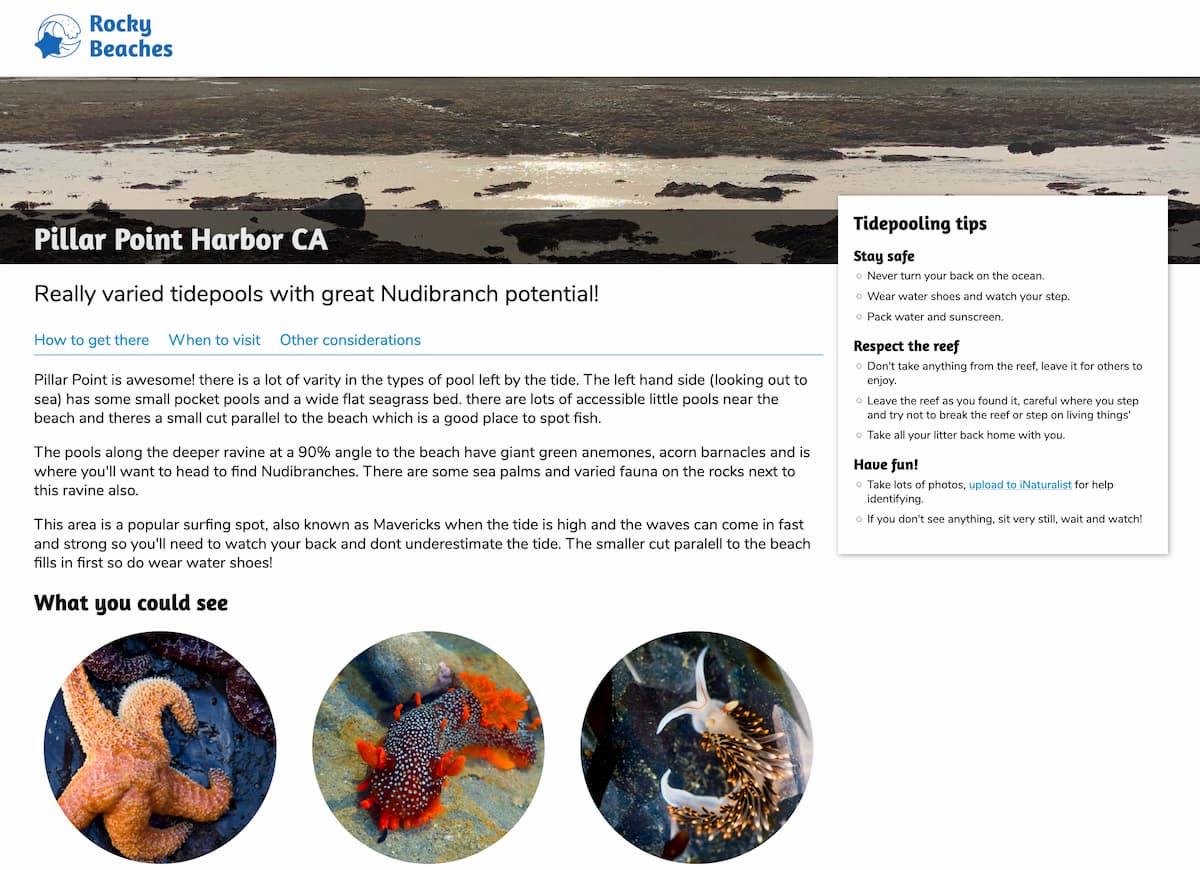

[... 963 words]Weeknotes: Rocky Beaches, Datasette 0.48, a commit history of my database

This week I helped Natalie launch Rocky Beaches, shipped Datasette 0.48 and several releases of datasette-graphql, upgraded the CSRF protection for datasette-upload-csvs and figured out how to get a commit log of changes to my blog by backing up its database to a GitHub repository.

Weeknotes: cookiecutter templates, better plugin documentation, sqlite-generate

I spent this week spreading myself between a bunch of smaller projects, and finally getting familiar with cookiecutter. I wrote about my datasette-plugin cookiecutter template earlier in the week; here’s what else I’ve been working on.

[... 703 words]Web apps are typically continuously delivered, not rolled back, and you don't have to support multiple versions of the software running in the wild.

This is not the class of software that I had in mind when I wrote the blog post 10 years ago. If your team is doing continuous delivery of software, I would suggest to adopt a much simpler workflow (like GitHub flow) instead of trying to shoehorn git-flow into your team.

Weeknotes: Archiving coronavirus.data.gov.uk, custom pages and directory configuration in Datasette, photos-to-sqlite

I mainly made progress on three projects this week: Datasette, photos-to-sqlite and a cleaner way of archiving data to a git repository.

[... 1,132 words]Goodbye Zeit Now v1, hello datasette-publish-now—and talking to myself in GitHub issues

This week I’ve been mostly dealing with the finally announced shutdown of Zeit Now v1. And having long-winded conversations with myself in GitHub issues.

[... 2,050 words]