26 posts tagged “prototyping”

2026

Don’t “Trust the Process” (via) Jenny Wen, Design Lead at Anthropic (and previously Director of Design at Figma) gave a provocative keynote at Hatch Conference in Berlin last September.

Jenny argues that the Design Process - user research leading to personas leading to user journeys leading to wireframes... all before anything gets built - may be outdated for today's world.

Hypothesis: In a world where anyone can make anything — what matters is your ability to choose and curate what you make.

In place of the Process, designers should lean into prototypes. AI makes these much more accessible and less time-consuming than they used to be.

Watching this talk made me think about how AI-assisted programming significantly reduces the cost of building the wrong thing. Previously if the design wasn't right you could waste months of development time building in the wrong direction, which was a very expensive mistake. If a wrong direction wastes just a few days instead, we can take more risks and be much more proactive in exploring the problem space.

I've always been a compulsive prototyper though, so this is very much playing into my own existing biases!

2025

The trick with Claude Code is to give it large, but not too large, extremely well defined problems.

(If the problems are too large then you are now vibe coding… which (a) frequently goes wrong, and (b) is a one-way street: once vibes enter your app, you end up with tangled, write-only code which functions perfectly but can no longer be edited by humans. Great for prototyping, bad for foundations.)

— Matt Webb, What I think about when I think about Claude Code

Build and share AI-powered apps with Claude. Anthropic have added one of the most important missing features to Claude Artifacts: apps built as artifacts now have the ability to run their own prompts against Claude via a new API.

Claude Artifacts are web apps that run in a strictly controlled browser sandbox: their access to features like localStorage or the ability to access external APIs via fetch() calls is restricted by CSP headers and the <iframe sandbox="..."> mechanism.

The new window.claude.complete() method opens a hole that allows prompts composed by the JavaScript artifact application to be run against Claude.

As before, you can publish apps built using artifacts such that anyone can see them. The moment your app tries to execute a prompt the current user will be required to sign into their own Anthropic account so that the prompt can be billed against them, and not against you.

I'm amused that Anthropic turned "we added a window.claude.complete() function to Artifacts" into what looks like a major new product launch, but I can't say it's bad marketing for them to do that!

As always, the crucial details about how this all works are tucked away in tool descriptions in the system prompt. Thankfully this one was easy to leak. Here's the full set of instructions, which start like this:

When using artifacts and the analysis tool, you have access to window.claude.complete. This lets you send completion requests to a Claude API. This is a powerful capability that lets you orchestrate Claude completion requests via code. You can use this capability to do sub-Claude orchestration via the analysis tool, and to build Claude-powered applications via artifacts.

This capability may be referred to by the user as "Claude in Claude" or "Claudeception".

[...]

The API accepts a single parameter -- the prompt you would like to complete. You can call it like so:

const response = await window.claude.complete('prompt you would like to complete')

I haven't seen "Claudeception" in any of their official documentation yet!

That window.claude.complete(prompt) method is also available to the Claude analysis tool. It takes a string and returns a string.

The new function only handles strings. The tool instructions provide tips to Claude about prompt engineering a JSON response that will look frustratingly familiar:

- Use strict language: Emphasize that the response must be in JSON format only. For example: “Your entire response must be a single, valid JSON object. Do not include any text outside of the JSON structure, including backticks ```.”

- Be emphatic about the importance of having only JSON. If you really want Claude to care, you can put things in all caps – e.g., saying “DO NOT OUTPUT ANYTHING OTHER THAN VALID JSON. DON’T INCLUDE LEADING BACKTICKS LIKE ```json.”.

Talk about Claudeception... now even Claude itself knows that you have to YELL AT CLAUDE to get it to output JSON sometimes.
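Those tips could be wrapped in a small helper. This is only a sketch: `parseJsonReply` and `completeJson` are hypothetical names, and the `complete` argument stands in for `window.claude.complete` so the code can also be tried outside an artifact. It assumes the model may still wrap its reply in backtick fences despite being told not to.

```javascript
// Three backticks, built indirectly so this example contains no literal fence.
const FENCE = "`".repeat(3);

// Remove an optional leading fence (with or without "json") and an optional
// trailing fence, then parse what is left. Throws SyntaxError on invalid JSON.
function parseJsonReply(reply) {
  let text = reply.trim();
  if (text.startsWith(FENCE)) {
    text = text.slice(FENCE.length).replace(/^json\b/i, "");
  }
  if (text.endsWith(FENCE)) {
    text = text.slice(0, -FENCE.length);
  }
  return JSON.parse(text.trim());
}

// `complete` is any async function that takes a string and returns a string.
// Inside an artifact you would pass (p) => window.claude.complete(p).
async function completeJson(complete, task) {
  const prompt =
    task +
    "\n\nYour entire response must be a single, valid JSON object. " +
    "DO NOT OUTPUT ANYTHING OTHER THAN VALID JSON.";
  return parseJsonReply(await complete(prompt));
}
```

Passing the completion function in as an argument is a design choice for testability, not something the tool instructions ask for.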

The API doesn't provide a mechanism for handling previous conversations, but Anthropic works round that by telling the artifact builder how to represent a prior conversation as a JSON encoded array:

Structure your prompt like this:

    const conversationHistory = [
      { role: "user", content: "Hello, Claude!" },
      { role: "assistant", content: "Hello! How can I assist you today?" },
      { role: "user", content: "I'd like to know about AI." },
      { role: "assistant", content: "Certainly! AI, or Artificial Intelligence, refers to..." },
      // ... ALL previous messages should be included here
    ];

    const prompt = `
    The following is the COMPLETE conversation history. You MUST consider ALL of these messages when formulating your response:
    ${JSON.stringify(conversationHistory)}

    IMPORTANT: Your response should take into account the ENTIRE conversation history provided above, not just the last message.

    Respond with a JSON object in this format:
    {
      "response": "Your response, considering the full conversation history",
      "sentiment": "brief description of the conversation's current sentiment"
    }

    Your entire response MUST be a single, valid JSON object.
    `;

    const response = await window.claude.complete(prompt);

There's another example in there showing how the state of play for a role playing game should be serialized as JSON and sent with every prompt as well.
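I haven't reproduced that example here, but the general pattern might look something like the following sketch. The state shape, the function names and the response format are all invented for illustration, and `complete` again stands in for `window.claude.complete`.

```javascript
// Hypothetical game state; the field names are made up for this sketch.
const initialState = {
  location: "tavern",
  inventory: ["torch"],
  hitPoints: 10,
};

// Build a prompt that carries the full state, because each completion
// request starts with no memory of earlier turns.
function buildTurnPrompt(state, playerAction) {
  return [
    "You are running a text adventure. The current game state is:",
    JSON.stringify(state),
    `The player's action: ${playerAction}`,
    'Respond with a JSON object in this format: {"narration": "...", "state": {...updated state...}}',
    "Your entire response MUST be a single, valid JSON object.",
  ].join("\n\n");
}

// One turn: send the state, then adopt whatever state the model returns.
async function playTurn(complete, state, playerAction) {
  const reply = JSON.parse(await complete(buildTurnPrompt(state, playerAction)));
  return { narration: reply.narration, state: reply.state };
}
```

The caller keeps the returned `state` and passes it into the next `playTurn` call, so the application rather than the model is the source of truth between turns.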

The tool instructions acknowledge another limitation of the current Claude Artifacts environment: code that executes there is effectively invisible to the main LLM - error messages are not automatically round-tripped to the model. As a result it makes the following recommendation:

Using window.claude.complete may involve complex orchestration across many different completion requests. Once you create an Artifact, you are not able to see whether or not your completion requests are orchestrated correctly. Therefore, you SHOULD ALWAYS test your completion requests first in the analysis tool before building an artifact.

I've already seen it do this in my own experiments: it will fire up the "analysis" tool (which allows it to run JavaScript directly and see the results) to perform a quick prototype before it builds the full artifact.

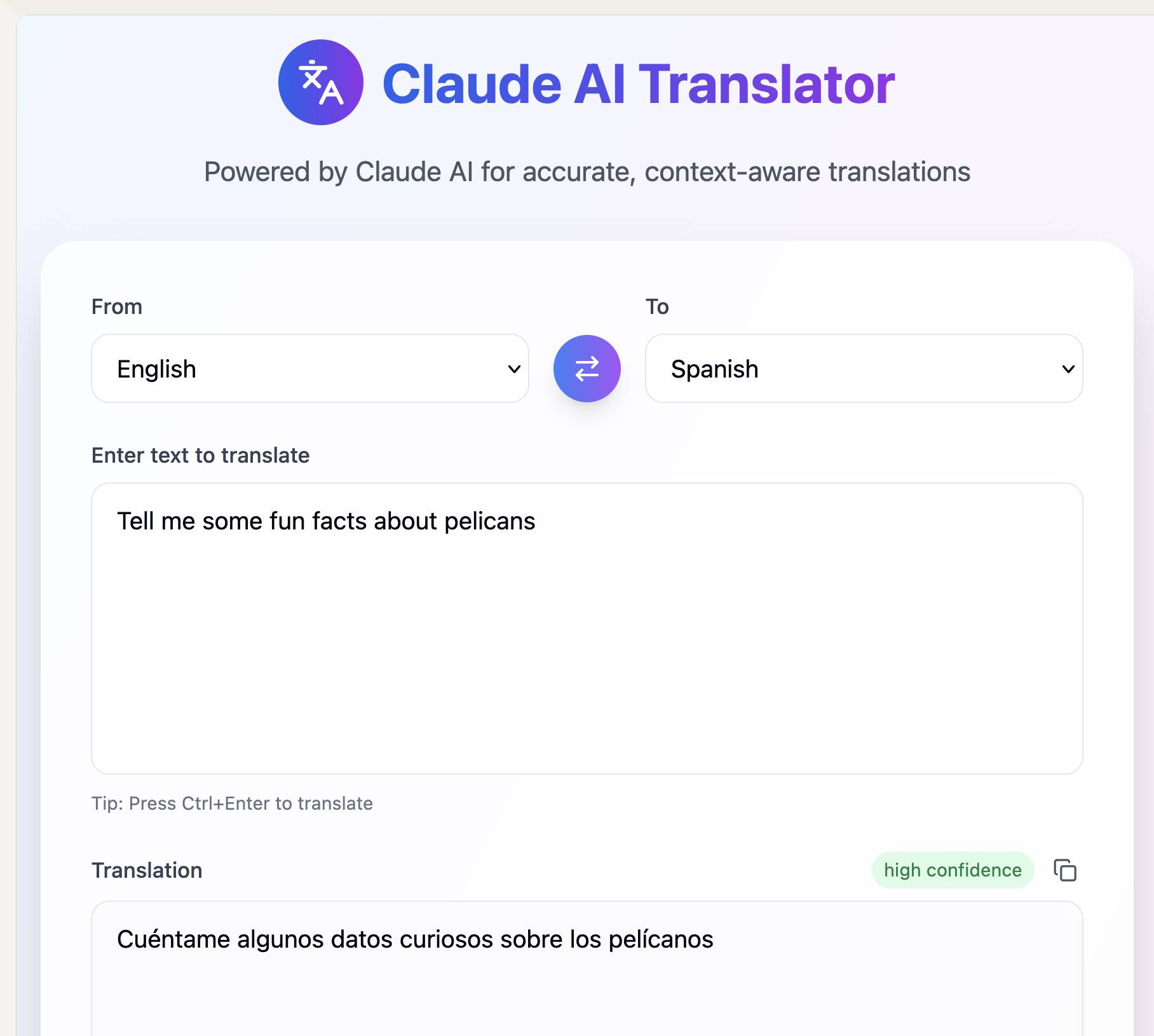

Here's my first attempt at an AI-enabled artifact: a translation app. I built it using the following single prompt:

Let’s build an AI app that uses Claude to translate from one language to another

Here's the transcript. You can try out the resulting app here - the app it built me looks like this:

If you want to use this feature yourself you'll need to turn on "Create AI-powered artifacts" in the "Feature preview" section at the bottom of your "Settings -> Profile" section. I had to do that in the Claude web app as I couldn't find the feature toggle in the Claude iOS application. This claude.ai/settings/profile page should have it for your account.

Update 31st July 2025: Anthropic changed how this works. Here are details of the updated mechanism.

At Amazon, Some Coders Say Their Jobs Have Begun to Resemble Warehouse Work. I got a couple of quotes in this NYTimes story about internal resistance to Amazon's policy to encourage employees to make use of more generative AI:

“It’s more fun to write code than to read code,” said Simon Willison, an A.I. fan who is a longtime programmer and blogger, channeling the objections of other programmers. “If you’re told you have to do a code review, it’s never a fun part of the job. When you’re working with these tools, it’s most of the job.” [...]

It took me about 15 years of my career before I got over my dislike of reading code written by other people. It's a difficult skill to develop! I'm not surprised that a lot of people dislike the AI-assisted programming paradigm when the end result is less time writing, more time reading!

“If you’re a prototyper, this is a gift from heaven,” Mr. Willison said. “You can knock something out that illustrates the idea.”

Rapid prototyping has been a key skill of mine for a long time. I love being able to bring half-baked illustrative prototypes of ideas to a meeting - my experience is that the quality of conversation goes up by an order of magnitude as a result of having something concrete for people to talk about.

These days I can vibe code a prototype in single digit minutes.

Using S3 triggers to maintain a list of files in DynamoDB. I built an experimental prototype this morning of a system for efficiently tracking files that have been added to a large S3 bucket by maintaining a parallel DynamoDB table using S3 triggers and AWS Lambda.

I got 80% of the way there with this single prompt (complete with typos) to my custom Claude Project:

Python CLI app using boto3 with commands for creating a new S3 bucket which it also configures to have S3 lambada event triggers which moantian a dynamodb table containing metadata about all of the files in that bucket. Include these commands

- create_bucket - create a bucket and sets up the associated triggers and dynamo tables
- list_files - shows me a list of files based purely on querying dynamo

ChatGPT then took me to the 95% point. The code Claude produced included an obvious bug, so I pasted the code into o3-mini-high on the basis that "reasoning" is often a great way to fix those kinds of errors:

Identify, explain and then fix any bugs in this code:

code from Claude pasted here

... and aside from adding a couple of time.sleep() calls to work around timing errors with IAM policy distribution, everything worked!

Getting from a rough idea to a working proof of concept of something like this with less than 15 minutes of prompting is extraordinarily valuable.

This is exactly the kind of project I've avoided in the past because of my almost irrational intolerance of the frustration involved in figuring out the individual details of each call to S3, IAM, AWS Lambda and DynamoDB.
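The prototype itself was Python with boto3, and its code isn't reproduced here. As a rough, language-neutral illustration of the core idea, here is a JavaScript sketch of how a trigger function might map an S3 event notification onto table operations. It assumes the trigger is subscribed only to object-created and object-removed events; the function name and the item attribute names (`bucket`, `key`, `size`, `etag`, `last_modified`) are hypothetical, and the actual DynamoDB calls are left out.

```javascript
// Turn one S3 event notification into a list of table operations.
// Assumes only ObjectCreated:* and ObjectRemoved:* events are delivered.
function operationsForEvent(event) {
  return event.Records.map((record) => {
    const bucket = record.s3.bucket.name;
    // Object keys arrive URL-encoded, with spaces sent as "+".
    const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));

    if (record.eventName.startsWith("ObjectRemoved:")) {
      return { action: "delete", key: { bucket, key } };
    }
    return {
      action: "put",
      item: {
        bucket,
        key,
        size: record.s3.object.size,
        etag: record.s3.object.eTag,
        last_modified: record.eventTime,
      },
    };
  });
}
```

Keeping this mapping as a pure function means it can be checked against sample events without touching AWS at all.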

(Update: I just found out about the new S3 Metadata system which launched a few weeks ago and might solve this exact problem!)

2024

Preferring throwaway code over design docs (via) Doug Turnbull advocates for a software development process far more realistic than attempting to create a design document up front and then implement accordingly.

As Doug observes, "No plan survives contact with the enemy". His process is to build a prototype in a draft pull request on GitHub, making detailed notes along the way and with the full intention of discarding it before building the final feature.

Important in this methodology is a great deal of maturity. Can you throw away your idea you’ve coded or will you be invested in your first solution? A major signal for seniority is whether you feel comfortable coding something 2-3 different ways. That your value delivery isn’t about lines of code shipped to prod, but organizational knowledge gained.

I've been running a similar process for several years using issues rather than PRs. I wrote about that in How I build a feature back in 2022.

The thing I love about issue comments (or PR comments) for recording ongoing design decisions is that because they incorporate a timestamp there's no implicit expectation to keep them up to date as the software changes. Doug sees the same benefit:

Another important point is on using PRs for documentation. They are one of the best forms of documentation for devs. They’re discoverable - one of the first places you look when trying to understand why code is implemented a certain way. PRs don’t profess to reflect the current state of the world, but a state at a point in time.

In the past, these decisions were so consequential, they were basically one-way doors, in Amazon language. That’s why we call them ‘architectural decisions!’ You basically have to live with your choice of database, authentication, JavaScript UI framework, almost forever.

But that’s changing with LLMs, because you can explore, investigate, and even prototype each one so quickly. Even technology migrations are becoming so much easier/cheaper/faster.

These are all examples of increasing optionality.

— Steve Yegge, via Gene Kim

For most software engineers, being well rounded is more important than pure technical mastery. This was already true, of course — see @patio11's famous advice "Don't call yourself a programmer" — but even more so due to foundation models. In most situations, skills like being able to use AI to rapidly prototype in order to communicate with clients to iterate on specifications create far more business value than technical wizardry alone.

You can use text-wrap: balance; on icons. Neat CSS experiment from Terence Eden: the new text-wrap: balance CSS property is intended to help make text like headlines display without ugly wrapped single orphan words, but Terence points out it can be used for icons too:

This inspired me to investigate if the same technique could work for text based navigation elements. I used Claude to build this interactive prototype of a navigation bar that uses text-wrap: balance against a list of display: inline menu list items. It seems to work well!

My first attempt used display: inline-block which worked in Safari but failed in Firefox.

Notable limitation from that MDN article:

Because counting characters and balancing them across multiple lines is computationally expensive, this value is only supported for blocks of text spanning a limited number of lines (six or less for Chromium and ten or less for Firefox)

So it's fine for these navigation concepts but isn't something you can use for body text.

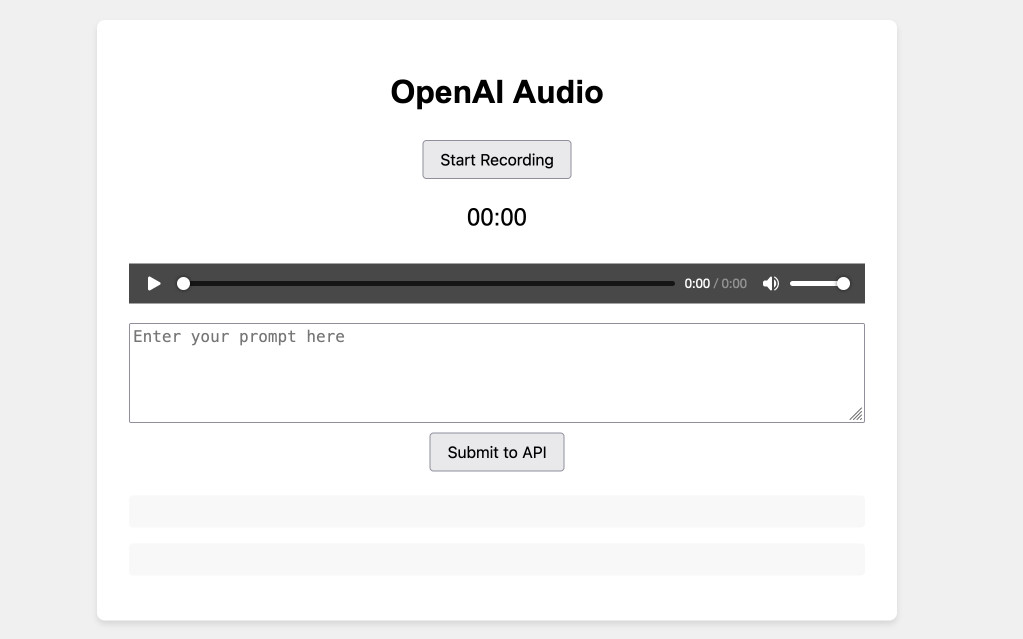

Experimenting with audio input and output for the OpenAI Chat Completion API

OpenAI promised this at DevDay a few weeks ago and now it’s here: their Chat Completion API can now accept audio as input and return it as output. OpenAI still recommend their WebSocket-based Realtime API for audio tasks, but the Chat Completion API is a whole lot easier to write code against.

[... 1,555 words]

RISD BFA Industrial Design: AI Software Design Studio. Fascinating syllabus for a course on digital product design taught at the Rhode Island School of Design by Kelin Carolyn Zhang.

Designers must adapt and shape the frontier of AI-driven computing — while navigating the opportunities, risks, and ethical responsibilities of working with this new technology.

In this new world, creation is cheap, craft is automatable, and everyone is a beginner. The ultimate differentiator will be the creator’s perspective, taste, and judgment. The software design education for our current moment must prioritize this above all else.

By course's end, students will have hands-on experience with an end-to-end digital product design process, culminating in a physical or digital product that takes advantage of the unique properties of generative AI models. Prior coding experience is not required, but students will learn using AI coding assistants like ChatGPT and Claude.

From Kelin's Twitter thread about the course so far:

these are juniors in industrial design. about half of them don't have past experience even designing software or using figma [...]

to me, they're doing great because they're moving super quickly

what my 4th yr interaction design students in 2019 could make in half a semester, these 3rd year industrial design students are doing in a few days with no past experience [...]

they very quickly realized the limits of LLM code in week 1, especially in styling & creating unconventional behavior

AI can help them make a functional prototype with js in minutes, but it doesn't look good

datasette-checkbox. I built this fun little Datasette plugin today, inspired by a conversation I had in Datasette Office Hours.

If a user has the update-row permission and the table they are viewing has any integer columns with names that start with is_ or should_ or has_, the plugin adds interactive checkboxes to that table which can be toggled to update the underlying rows.

This makes it easy to quickly spin up an interface that allows users to review and update boolean flags in a table.
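The column-matching rule is simple enough to sketch in a few lines. This is an illustration of the rule as described above, not the plugin's actual code: the function name and the `{ name, type }` column shape are assumptions.

```javascript
const FLAG_PREFIXES = ["is_", "should_", "has_"];

// Return the names of columns that should get checkboxes.
// `columns` is a hypothetical array of { name, type } objects.
function checkboxColumns(columns, canUpdateRow) {
  // No update-row permission means no interactive checkboxes at all.
  if (!canUpdateRow) return [];
  return columns
    .filter((column) => column.type.toLowerCase() === "integer")
    .filter((column) => FLAG_PREFIXES.some((prefix) => column.name.startsWith(prefix)))
    .map((column) => column.name);
}
```

For a table with columns `is_done` (integer), `has_note` (text) and `count` (integer), only `is_done` would qualify.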

I have ambitions for a much more advanced version of this, where users can do things like add or remove tags from rows directly in that table interface - but for the moment this is a neat starting point, and it only took an hour to build (thanks to help from Claude to build an initial prototype, chat transcript here).

The challenge [with RAG] is that most corner-cutting solutions look like they’re working on small datasets while letting you pretend that things like search relevance don’t matter, while in reality relevance significantly impacts quality of responses when you move beyond prototyping (whether they’re literally search relevance or are better tuned SQL queries to retrieve more appropriate rows). This creates a false expectation of how the prototype will translate into a production capability, with all the predictable consequences: underestimating timelines, poor production behavior/performance, etc.

2023

tldraw/draw-a-ui (via) Absolutely spectacular GPT-4 Vision API demo. Sketch out a rough UI prototype using the open source tldraw drawing app, then select a set of components and click "Make Real" (after giving it an OpenAI API key). It generates a PNG snapshot of your selection and sends that to GPT-4 with instructions to turn it into a Tailwind HTML+JavaScript prototype, then adds the result as an iframe next to your mockup.

You can then make changes to your mockup, select it and the previous mockup and click "Make Real" again to ask for an updated version that takes your new changes into account.

This is such a great example of innovation at the UI layer, and everything is open source. Check app/lib/getHtmlFromOpenAI.ts for the system prompt that makes it work.
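To give a feel for the shape of that request, here is a sketch of a Chat Completions payload that pairs a text instruction with a PNG passed as a base64 data URL. This is not the tldraw code: the function name and the instruction text are placeholders, and the system prompt and model name are left as parameters because the real values live in the repository.

```javascript
// Build a request body that sends a PNG snapshot alongside a text instruction.
// `systemPrompt` and `model` are supplied by the caller; nothing here is
// copied from the draw-a-ui repository.
function buildMakeRealRequest(pngBase64, systemPrompt, model) {
  return {
    model,
    max_tokens: 4096,
    messages: [
      { role: "system", content: systemPrompt },
      {
        role: "user",
        content: [
          // Placeholder instruction text, invented for this sketch.
          { type: "text", text: "Turn this mockup into a single HTML file using Tailwind." },
          {
            type: "image_url",
            image_url: { url: `data:image/png;base64,${pngBase64}` },
          },
        ],
      },
    ],
  };
}
```

The resulting object would be sent as the JSON body of a POST to the Chat Completions endpoint, authenticated with the user's API key.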

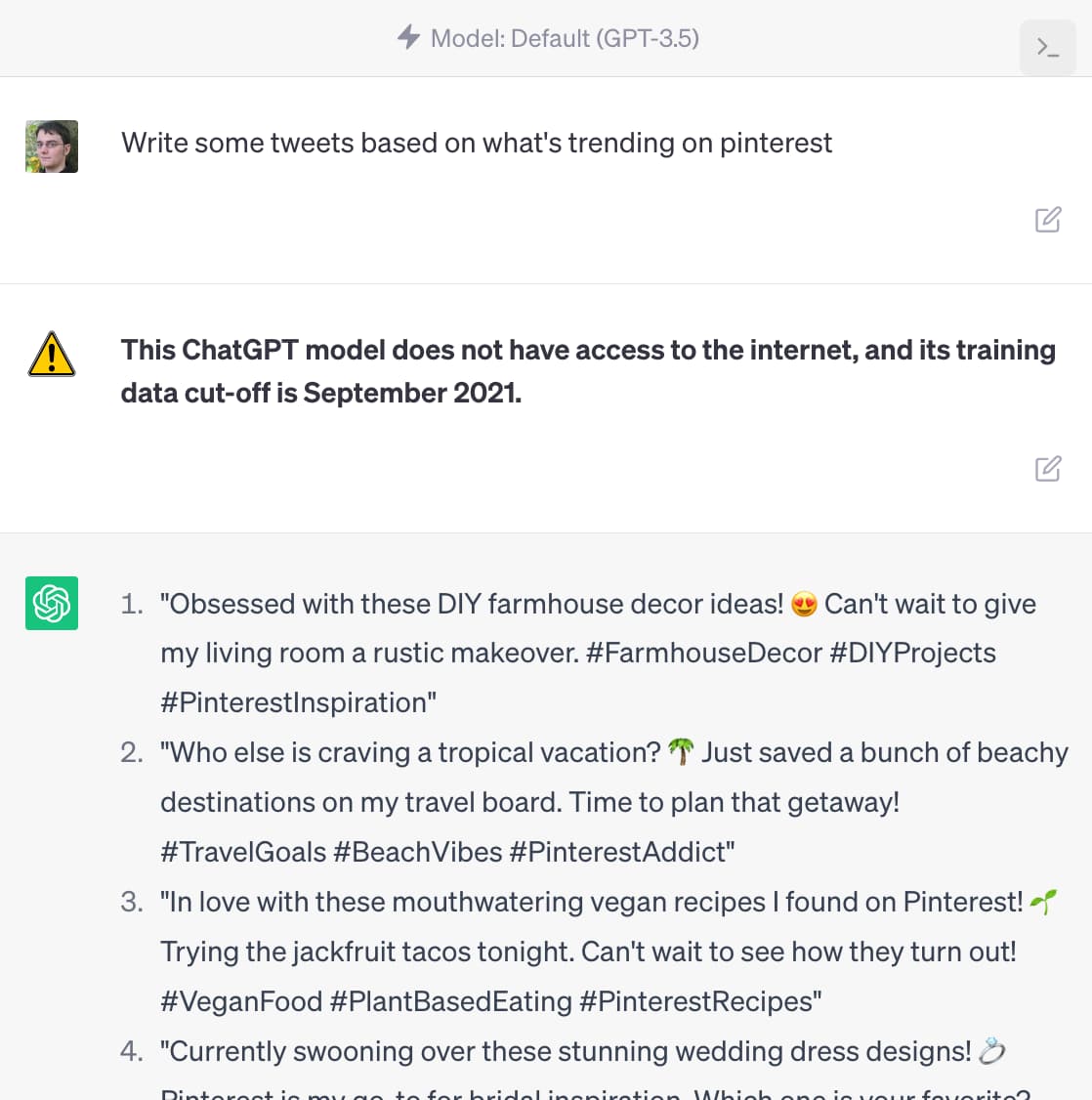

ChatGPT should include inline tips

In OpenAI isn’t doing enough to make ChatGPT’s limitations clear James Vincent argues that OpenAI’s existing warnings about ChatGPT’s confounding ability to convincingly make stuff up are not effective.

[... 1,488 words]

2022

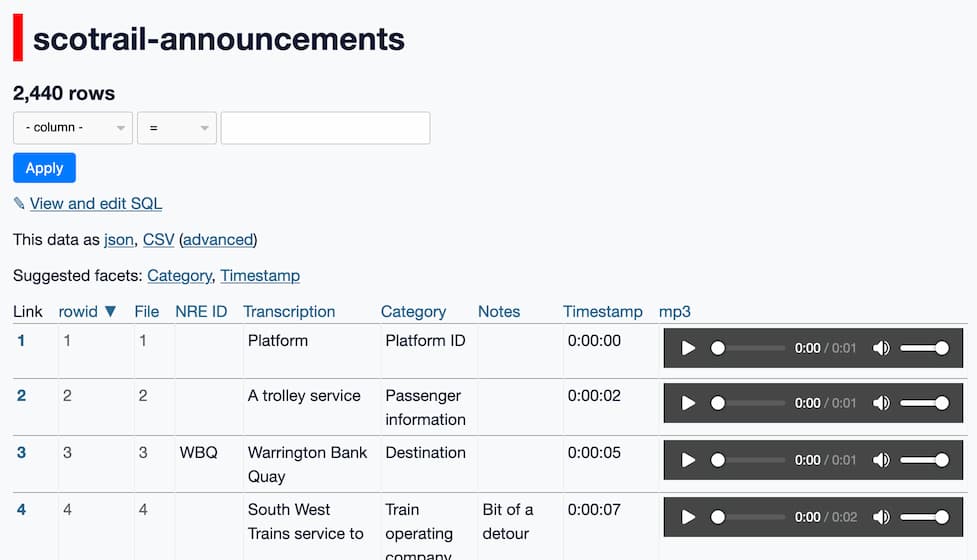

Analyzing ScotRail audio announcements with Datasette—from prototype to production

Scottish train operator ScotRail released a two-hour long MP3 file containing all of the components of its automated station announcements. Messing around with them is proving to be a huge amount of fun.

[... 4,428 words]

2020

Demos, Prototypes, and MVPs (via) I really like how Jacob describes the difference between a demo and a prototype: a demo is externally facing and helps explain a concept to a customer; a prototype is internally facing and helps prove that something can be built.

2019

Logging to SQLite using ASGI middleware

I had some fun playing around with ASGI middleware and logging during our flight back to England for the holidays.

[... 2,535 words]

2013

As a non-technical single founder for a web startup, is it better to hire a design firm to build the prototype, or find a technical co-founder?

Find a co-founder. The problem with using an outside agency to build your initial prototype is that you won’t really start learning about your product until after you have launched it. You need to have the talent available in-house to then make changes and improvements based on the feedback you get from real users.

[... 123 words]

How can I produce an animated prototype out of designs for an iOS app?

Keynote is a surprisingly good tool for this kind of thing, especially since they added path based animations to it a few years ago.

[... 55 words]

2012

What are good ways to design a web application? Do you, for example, begin with a wire-frame of the front-end and work your way back to the database schema? The reverse? Figure out both ends and work towards the center?

I start with a working prototype, which I find I can often knock together in a couple of hours using Django. Having a functional (albeit buggy, ugly and insecure) prototype makes it much easier for me to start to reason about the larger application. There’s not much point in coming up with a comprehensive architecture plan only to find out you’re building the wrong thing!

[... 111 words]

2010

How long would it take an average programmer to do a Ruby on Rails Reddit clone prototype?

Just FYI, Reddit is an open source Python project: http://code.reddit.com/

[... 34 words]

2008

The overdue Places post II—Prototyping Iconicness. How Flickr Places works.

2007

DiSo: Distributed Social Networking applications (via) New project to prototype a decentralised social network on top of WordPress, using OpenID, microformats and social whitelisting.

Protoscript (via) JavaScript tool designed for easy prototyping of JS interactions; powered by YUI and jQuery.

2003

Comment Authentication Prototype

I’ve built a prototype of the comment signature system discussed earlier. The prototype consists of an authentication server which anyone can register with and support on this blog for verifying signatures. So far it seems to work.

[... 434 words]