April 2024

90 posts: 5 entries, 59 links, 26 quotes

April 22, 2024

No one buys books (via) Fascinating insights into the book publishing industry gathered by Elle Griffin from details that came out during the Penguin vs. DOJ antitrust lawsuit.

Publishing turns out to be similar to VC investing: a tiny percentage of books are hits that cover the costs for the vast majority that didn't sell well. The DOJ found that, of 58,000 books published in a year, "90 percent of them sold fewer than 2,000 copies and 50 percent sold less than a dozen copies."

UPDATE: This story is inaccurate: those statistics were grossly misinterpreted during the trial. See this post for updated information.

Here's an even better debunking: Yes, People Do Buy Books (subtitle: "Despite viral claims, Americans buy over a billion books a year").

timpaul/form-extractor-prototype (via) Tim Paul, Head of Interaction Design at the UK's Government Digital Service, published this brilliant prototype built on top of Claude 3 Opus.

The video shows what it can do. Give it an image of a form and it will extract the form fields and use them to create a GDS-style multi-page interactive form, using their GOV.UK design system and govuk-frontend npm package.

It works for both hand-drawn napkin illustrations and images of existing paper forms.

The bulk of the prompting logic is the schema definition in data/extract-form-questions.json.

I'm always excited to see applications built on LLMs that go beyond the chatbot UI. This is a great example of exactly that.

April 23, 2024

We introduce phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens, whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5 (e.g., phi-3-mini achieves 69% on MMLU and 8.38 on MT-bench), despite being small enough to be deployed on a phone.

The Instruction Hierarchy: Training LLMs to Prioritize Privileged Instructions (via) By far the most detailed paper on prompt injection I’ve seen yet from OpenAI, published a few days ago and with six credited authors: Eric Wallace, Kai Xiao, Reimar Leike, Lilian Weng, Johannes Heidecke and Alex Beutel.

The paper notes that prompt injection mitigations which completely refuse any form of instruction in an untrusted prompt may not actually be ideal: some forms of instruction are harmless, and refusing them may provide a worse experience.

Instead, it proposes a hierarchy, where models are trained to consider whether instructions from different levels conflict with or support the goals of the higher-level instructions: whether they are aligned or misaligned with them.

The authors tested this idea by fine-tuning a model on top of GPT 3.5, and claim that it shows greatly improved performance against numerous prompt injection benchmarks.

As always with prompt injection, my key concern is that I don’t think “improved” is good enough here. If you are facing an adversarial attacker, reducing the chance that they might find an exploit just means they’ll try harder until they find an attack that works.

The paper concludes with this note: “Finally, our current models are likely still vulnerable to powerful adversarial attacks. In the future, we will conduct more explicit adversarial training, and study more generally whether LLMs can be made sufficiently robust to enable high-stakes agentic applications.”

Weeknotes: Llama 3, AI for Data Journalism, llm-evals and datasette-secrets

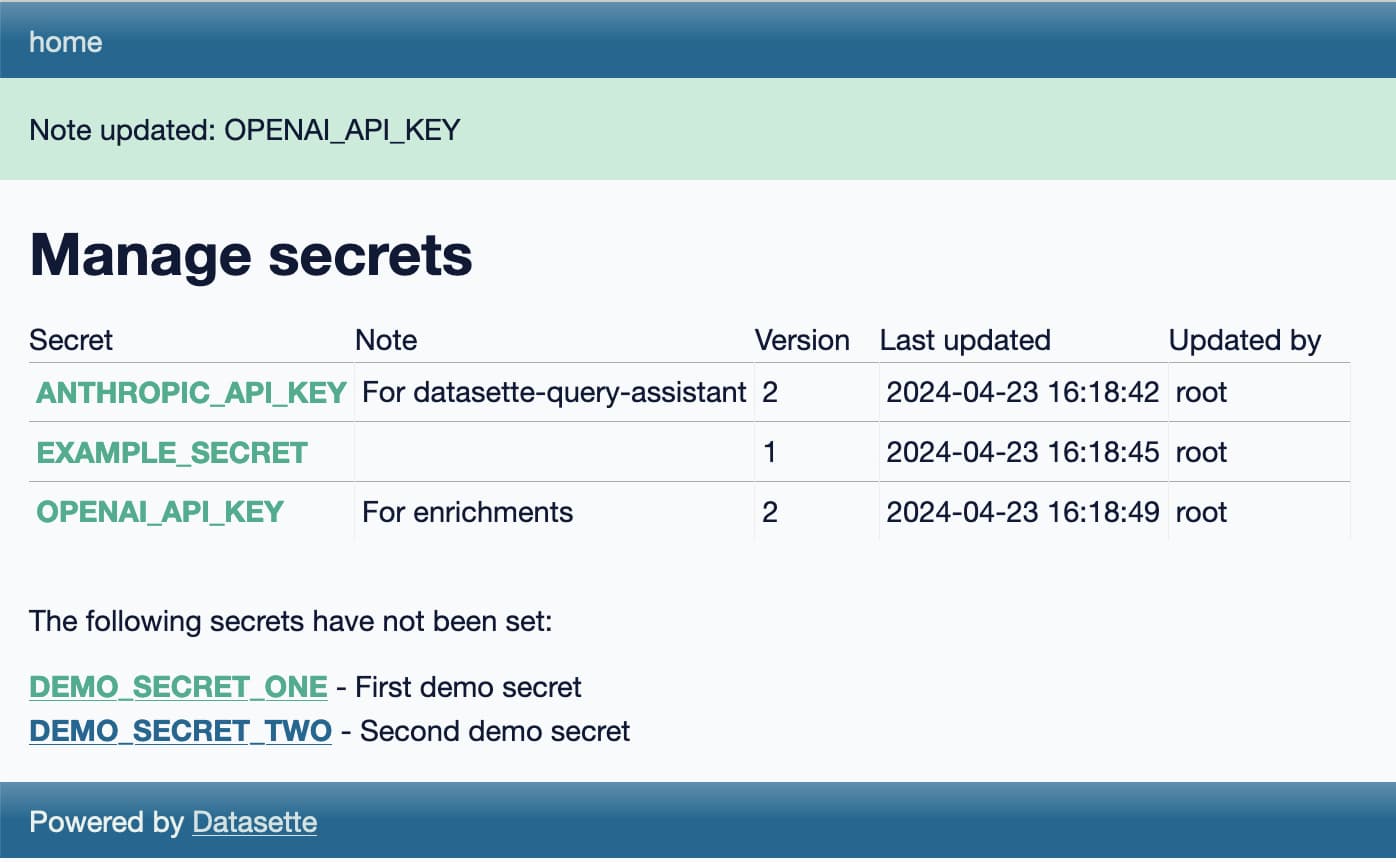

Llama 3 landed on Thursday. I ended up updating a whole bunch of different plugins to work with it, described in Options for accessing Llama 3 from the terminal using LLM.

[... 1,030 words]

microsoft/Phi-3-mini-4k-instruct-gguf (via) Microsoft’s Phi-3 LLM is out and it’s really impressive. This 4,000 token context GGUF model is just 2.2GB (for the Q4 version) and ran on my Mac using the llamafile option described in the README. I could then run prompts through it using the llm-llamafile plugin.

The vibes are good! Initial test prompts I’ve tried feel similar to much larger 7B models, despite using just a few GBs of RAM. Tokens are returned fast too—it feels like the fastest model I’ve tried yet.

And it’s MIT licensed.

We [Bluesky] took a somewhat novel approach of giving every user their own SQLite database. By removing the Postgres dependency, we made it possible to run a ‘PDS in a box’ [Personal Data Server] without having to worry about managing a database. We didn’t have to worry about things like replicas or failover. For those thinking this is irresponsible: don’t worry, we are backing up all the data on our PDSs!

SQLite worked really well because the PDS – in its ideal form – is a single-tenant system. We owned up to that by having these single tenant SQLite databases.
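A minimal sketch of the one-database-per-user idea, using Python's standard library sqlite3 module. This is not Bluesky's code: the file layout, the `records` table and the helper names (`open_user_db`, `add_record`, `count_records`) are all hypothetical, chosen only to illustrate the pattern.

```python
import sqlite3
import tempfile
from pathlib import Path


def open_user_db(root: Path, user_id: str) -> sqlite3.Connection:
    """Open (creating if needed) the SQLite file belonging to a single user.

    Hypothetical layout: one file per user, named <user_id>.sqlite.
    """
    # Keep only safe filename characters so an ID cannot escape the root directory
    safe_id = "".join(c for c in user_id if c.isalnum() or c in "-_")
    if not safe_id:
        raise ValueError("user_id has no usable characters")
    conn = sqlite3.connect(root / f"{safe_id}.sqlite")
    conn.execute(
        "CREATE TABLE IF NOT EXISTS records "
        "(id INTEGER PRIMARY KEY, body TEXT NOT NULL)"
    )
    return conn


def add_record(conn: sqlite3.Connection, body: str) -> None:
    # The connection context manager commits on success, rolls back on error
    with conn:
        conn.execute("INSERT INTO records (body) VALUES (?)", (body,))


def count_records(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM records").fetchone()[0]
```

Each user's data lives in its own file, so backing up or moving one user means copying one file, and no query can accidentally read another tenant's rows.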

April 24, 2024

A bad survey won’t tell you it’s bad. It’s actually really hard to find out that a bad survey is bad — or to tell whether you have written a good or bad set of questions. Bad code will have bugs. A bad interface design will fail a usability test. It’s possible to tell whether you are having a bad user interview right away. Feedback from a bad survey can only come in the form of a second source of information contradicting your analysis of the survey results.

Most seductively, surveys yield responses that are easy to count and counting things feels so certain and objective and truthful.

Even if you are counting lies.

openelm/README-pretraining.md. Apple released something big three hours ago, and I’m still trying to get my head around exactly what it is.

The parent project is called CoreNet, described as “A library for training deep neural networks”. Part of the release is a new LLM called OpenELM, which includes completely open source training code and a large number of published training checkpoints.

I’m linking here to the best documentation I’ve found of that training data: it looks like the bulk of it comes from RefinedWeb, RedPajama, The Pile and Dolma.

When I said “Send a text message to Julian Chokkattu,” who’s a friend and fellow AI Pin reviewer over at Wired, I thought I’d be asked what I wanted to tell him. Instead, the device simply said OK and told me it sent the words “Hey Julian, just checking in. How's your day going?” to Chokkattu. I've never said anything like that to him in our years of friendship, but I guess technically the AI Pin did do what I asked.

April 25, 2024

Snowflake Arctic Cookbook. Today's big model release was Snowflake Arctic, an enormous 480B model with a 128×3.66B MoE (Mixture of Experts) architecture. It's Apache 2 licensed and Snowflake state that "in addition, we are also open sourcing all of our data recipes and research insights."

The research insights will be shared on this Arctic Cookbook blog - which currently has two articles covering their MoE architecture and describing how they optimized their training run in great detail.

They also list dozens of "coming soon" posts, which should be pretty interesting given how much depth they've provided in their writing so far.

No, Most Books Don’t Sell Only a Dozen Copies. I linked to a story the other day about book sales claiming "90 percent of them sold fewer than 2,000 copies and 50 percent sold less than a dozen copies", based on numbers released in the Penguin antitrust lawsuit. It turns out those numbers were interpreted incorrectly.

In this piece from September 2022 Lincoln Michel addresses this and other common misconceptions about book statistics.

Understanding these numbers requires understanding a whole lot of intricacies about how publishing actually works. Here's one illustrative snippet:

"Take the statistic that most published books only sell 99 copies. This seems shocking on its face. But if you dig into it, you’ll notice it was counting one year’s sales of all books that were in BookScan’s system. That’s quite different statistic than saying most books don’t sell 100 copies in total! A book could easily be a bestseller in, say, 1960 and sell only a trickle of copies today."

The top comment on the post comes from Kristen McLean of NPD BookScan, the organization whose numbers were misrepresented in the trial. She wasn't certain how the numbers had been sliced to get that 90% result, but in her own analysis of "frontlist sales for the top 10 publishers by unit volume in the U.S. Trade market" she found that 14.7% sold less than 12 copies and the 51.4% spot was for books selling less than a thousand.

Blogmarks that use markdown. I needed to attach a correction to an older blogmark (my 20-year old name for short-form links with commentary on my blog) today - but the commentary field has always been text, not HTML, so I didn't have a way to add the necessary link.

This motivated me to finally add optional Markdown support for blogmarks to my blog's custom Django CMS. I then went through and added inline code markup to a bunch of different older posts, and built this Django SQL Dashboard to keep track of which posts I had updated.

I’ve been at OpenAI for almost a year now. In that time, I’ve trained a lot of generative models. [...] It’s becoming awfully clear to me that these models are truly approximating their datasets to an incredible degree. [...] What this manifests as is – trained on the same dataset for long enough, pretty much every model with enough weights and training time converges to the same point. [...] This is a surprising observation! It implies that model behavior is not determined by architecture, hyperparameters, or optimizer choices. It’s determined by your dataset, nothing else. Everything else is a means to an end in efficiently delivery compute to approximating that dataset.

The only difference between screwing around and science is writing it down

— Alex Jason, via Adam Savage

April 26, 2024

Food Delivery Leak Unmasks Russian Security Agents. This story is from April 2022 but I realize now I never linked to it.

Yandex Food, a popular food delivery service in Russia, suffered a major data leak.

The data included an order history with names, addresses and phone numbers of people who had placed food orders through that service.

Bellingcat were able to cross-reference this leak with addresses of Russian security service buildings, including those linked to the GRU and FSB. This allowed them to identify the names and phone numbers of people working for those organizations, and then combine that information with further leaked data as part of their other investigations.

If you look closely at the screenshots in this story they may look familiar: Bellingcat were using Datasette internally as a tool for exploring this data!

If you’re auditioning for your job every day, and you’re auditioning against every other brilliant employee there, and you know that at the end of the year, 6% of you are going to get cut no matter what, and at the same time, you have access to unrivaled data on partners, sellers, and competitors, you might be tempted to look at that data to get an edge and keep your job and get to your restricted stock units.

It's very fast to build something that's 90% of a solution. The problem is that the last 10% of building something is usually the hard part which really matters, and with a black box at the center of the product, it feels much more difficult to me to nail that remaining 10%. With vibecheck, most of the time the results to my queries are great; some percentage of the time they aren't. Closing that gap with gen AI feels much more fickle to me than a normal engineering problem. It could be that I'm unfamiliar with it, but I also wonder if some classes of generative AI based products are just doomed to mediocrity as a result.

April 27, 2024

Everything Google’s Python team were responsible for. In a questionable strategic move, Google laid off the majority of their internal Python team a few days ago. Someone on Hacker News asked what the team had been responsible for, and team member zem replied with this fascinating comment providing detailed insight into how the team worked and indirectly how Python is used within Google.

I've worked out why I don't get much value out of LLMs. The hardest and most time-consuming parts of my job involve distinguishing between ideas that are correct, and ideas that are plausible-sounding but wrong. Current AI is great at the latter type of ideas, and I don't need more of those.

April 28, 2024

Zed Decoded: Rope & SumTree (via) Text editors like Zed need in-memory data structures that are optimized for handling large strings where text can be inserted or deleted at any point without needing to copy the whole string.

Ropes are a classic, widely used data structure for this.

Zed have their own implementation of ropes in Rust, but it's backed by something even more interesting: a SumTree, described here as a thread-safe, snapshot-friendly, copy-on-write B+ tree where each leaf node contains multiple items and a Summary for each Item, and internal tree nodes contain a Summary of the items in its subtree.

These summaries allow for some very fast tree traversal operations, such as turning an offset in the file into a row and column coordinate and vice-versa. The summary itself can be anything, so each application of SumTree in Zed collects different summary information.
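To make the summary idea concrete, here is a heavily simplified sketch in Python. It is not Zed's implementation: it uses a plain binary tree rather than a B+ tree, has no copy-on-write or thread-safety machinery, and the names `Summary`, `Node`, `build` and `offset_to_point` are hypothetical. Each node caches a summary of the text beneath it, so an offset lookup can skip whole subtrees by adding their summaries instead of scanning their characters.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Summary:
    chars: int     # characters covered
    rows: int      # newline characters covered
    last_col: int  # characters after the final newline (all of them if none)


def summarize(text: str) -> Summary:
    return Summary(len(text), text.count("\n"), len(text) - (text.rfind("\n") + 1))


def add(a: Summary, b: Summary) -> Summary:
    # If b contains a newline, the column restarts inside b
    last_col = b.last_col if b.rows else a.last_col + b.last_col
    return Summary(a.chars + b.chars, a.rows + b.rows, last_col)


@dataclass(frozen=True)
class Node:
    summary: Summary
    text: Optional[str] = None     # leaves only
    left: Optional["Node"] = None  # internal nodes only
    right: Optional["Node"] = None


def build(text: str, chunk: int = 8) -> Node:
    """Split text into leaves of at most `chunk` characters (chunk >= 1)."""
    if len(text) <= chunk:
        return Node(summarize(text), text=text)
    mid = len(text) // 2
    left, right = build(text[:mid], chunk), build(text[mid:], chunk)
    return Node(add(left.summary, right.summary), left=left, right=right)


def offset_to_point(node: Node, offset: int) -> Tuple[int, int]:
    """Return the zero-based (row, column) for a character offset."""
    if not 0 <= offset <= node.summary.chars:
        raise IndexError("offset out of range")
    skipped = Summary(0, 0, 0)  # summary of everything to the left of `node`
    while node.text is None:
        left_chars = node.left.summary.chars
        if offset < left_chars:
            node = node.left
        else:
            # Skip the whole left subtree using only its cached summary
            skipped = add(skipped, node.left.summary)
            offset -= left_chars
            node = node.right
    point = add(skipped, summarize(node.text[:offset]))
    return point.rows, point.last_col
```

Only one leaf's text is ever scanned per lookup; everything else is summary arithmetic on the path from the root. Swapping in a different `Summary` type and `add` function gives a different index over the same tree shape.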

Uses in Zed include tracking highlight regions, code folding state, git blame information, project file trees and more - over 20 different classes and counting.

Zed co-founder Nathan Sobo calls SumTree "the soul of Zed".

Also notable: this detailed article is accompanied by an hour long video with a four-way conversation between Zed maintainers providing a tour of these data structures in the Zed codebase.

April 29, 2024

How do you accidentally run for President of Iceland? (via) Anna Andersen writes about a spectacular user interface design case-study from this year's Icelandic presidential election.

Running for President requires 1,500 endorsements. This year, those endorsements can be filed online through a government website.

The page for collecting endorsements originally had two sections - one for registering to collect endorsements, and another to submit your endorsement. The login link for the first came higher on the page, and at least 11 people ended up accidentally running for President!

The creator of a model can not ensure that a model is never used to do something harmful – any more so that the developer of a web browser, calculator, or word processor could. Placing liability on the creators of general purpose tools like these mean that, in practice, such tools can not be created at all, except by big businesses with well funded legal teams.

[...] Instead of regulating the development of AI models, the focus should be on regulating their applications, particularly those that pose high risks to public safety and security. Regulate the use of AI in high-risk areas such as healthcare, criminal justice, and critical infrastructure, where the potential for harm is greatest, would ensure accountability for harmful use, whilst allowing for the continued advancement of AI technology.

My notes on gpt2-chatbot.

There's a new, unlabeled and undocumented model on the LMSYS Chatbot Arena today called gpt2-chatbot. It's been giving some impressive responses - you can prompt it directly in the Direct Chat tab by selecting it from the big model dropdown menu.

It looks like a stealth new model preview. It's giving answers that are comparable to GPT-4 Turbo and in some cases better - my own experiments lead me to think it may have more "knowledge" baked into it, as ego prompts ("Who is Simon Willison?") and questions about things like lists of speakers at DjangoCon over the years seem to hallucinate less and return more specific details than before.

The lack of transparency here is both entertaining and infuriating. Lots of people are performing a parallel distributed "vibe check" and sharing results with each other, but it's annoying that even the most basic questions (What even IS this thing? Can it do RAG? What's its context length?) remain unanswered so far.

The system prompt appears to be the following - but system prompts just influence how the model behaves, they aren't guaranteed to contain truthful information:

You are ChatGPT, a large language model trained

by OpenAI, based on the GPT-4 architecture.

Knowledge cutoff: 2023-11

Current date: 2024-04-29

Image input capabilities: Enabled

Personality: v2

My best guess is that this is a preview of some kind of OpenAI "GPT 4.5" release. I don't think it's a big enough jump in quality to be a GPT-5.

Update: LMSYS do document their policy on using anonymized model names for tests of unreleased models.

Update May 7th: The model has been confirmed as belonging to OpenAI thanks to an error message that leaked details of the underlying API platform.

# All the code is wrapped in a main function that gets called at the bottom of the file, so that a truncated partial download doesn't end up executing half a script.

April 30, 2024

Why SQLite Uses Bytecode (via) Brand new SQLite architecture documentation by D. Richard Hipp explaining the trade-offs between a bytecode based query plan and a tree of objects.

SQLite uses the bytecode approach, which provides an important characteristic: SQLite can very easily execute queries incrementally, stopping after each row, for example. This is more useful for a local library database than for a network server where the assumption is that the entire query will be executed before results are returned over the wire.
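Both ideas can be poked at from Python's standard library sqlite3 module. This is a small sketch rather than anything from the SQLite documentation: `EXPLAIN` lists the bytecode program for a statement, and a deliberately endless recursive query (my own illustrative example) shows that rows are produced one step at a time.

```python
import itertools
import sqlite3

conn = sqlite3.connect(":memory:")

# EXPLAIN returns the bytecode program, one instruction per row:
# (addr, opcode, p1, p2, p3, p4, p5, comment)
program = conn.execute("EXPLAIN SELECT 1 + 1").fetchall()
opcodes = [row[1] for row in program]

# This recursive CTE has no LIMIT, so it would produce rows forever
# if the whole query had to finish before any results came back.
endless = conn.execute(
    "WITH RECURSIVE counter(n) AS ("
    "  SELECT 1 UNION ALL SELECT n + 1 FROM counter"
    ") SELECT n FROM counter"
)

# Pull exactly three rows, then stop stepping the statement
first_three = [n for (n,) in itertools.islice(endless, 3)]
endless.close()
```

The exact opcodes in `program` vary between SQLite versions, so treat the listing as something to explore rather than rely on.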

My approach to HTML web components. Some neat patterns here from Jeremy Keith, who is using Web Components extensively for progressive enhancement of existing markup.

The reactivity you get with full-on frameworks [like React and Vue] isn’t something that web components offer. But I do think web components can replace jQuery and other approaches to scripting the DOM.

Jeremy likes naming components with their element as a prefix (since all custom element names must contain at least one hyphen), and suggests building components under the single responsibility principle - so you can do things like <button-confirm><button-clipboard><button>....

He configures these buttons with data- attributes and has them communicate with each other using custom events.

Something I hadn't realized is that since the connectedCallback function on a custom element is fired any time that element is attached to a page, you can fetch() and then insert HTML content that includes elements and know that they will initialize themselves without needing any extra logic - great for the kind of pattern encouraged by systems such as HTMX.

How an empty S3 bucket can make your AWS bill explode (via) Maciej Pocwierz accidentally created an S3 bucket with a name that was already used as a placeholder value in a widely used piece of software. They saw 100 million PUT requests to their new bucket in a single day, racking up a big bill since AWS charges $5/million PUTs.

It turns out AWS charge that same amount for PUTs that result in a 403 authentication error, a policy that extends even to "requester pays" buckets!

So, if you know someone's S3 bucket name you can DDoS their AWS bill just by flooding them with meaningless unauthenticated PUT requests.
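For a sense of scale, here is the arithmetic using only the figures quoted above ($5 per million PUTs, 100 million requests in a day). The helper name `put_cost_usd` is hypothetical, and actual AWS pricing may differ by region and storage class.

```python
def put_cost_usd(requests: int, price_per_million_usd: float = 5.0) -> float:
    """Cost of a number of PUT requests at a flat per-million price.

    The default price is the $5/million figure quoted in the post,
    not a value looked up from a current AWS price list.
    """
    return requests / 1_000_000 * price_per_million_usd


one_day = put_cost_usd(100_000_000)  # 500.0 dollars for the day described
```

At that rate the bill grows by $500 for every day the traffic continues, whether or not a single request succeeds.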

AWS support refunded Maciej's bill as an exception here, but I'd like to see them reconsider this broken policy entirely.

Update from Jeff Barr:

We agree that customers should not have to pay for unauthorized requests that they did not initiate. We’ll have more to share on exactly how we’ll help prevent these charges shortly.

Performance analysis indicates that SQLite spends very little time doing bytecode decoding and dispatch. Most CPU cycles are consumed in walking B-Trees, doing value comparisons, and decoding records - all of which happens in compiled C code. Bytecode dispatch is using less than 3% of the total CPU time, according to my measurements.

So at least in the case of SQLite, compiling all the way down to machine code might provide a performance boost 3% or less. That's not very much, considering the size, complexity, and portability costs involved.
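One way to read that 3% figure: if bytecode dispatch accounts for a fraction of total CPU time, removing it entirely leaves the rest of the runtime untouched. A minimal sketch of that arithmetic, assuming everything else in the query runs at exactly the same speed; `best_case_speedup` is a hypothetical helper name.

```python
def best_case_speedup(fraction_removed: float) -> float:
    """Speedup factor if a given fraction of the runtime vanished completely."""
    if not 0 <= fraction_removed < 1:
        raise ValueError("fraction_removed must be in [0, 1)")
    return 1 / (1 - fraction_removed)


speedup = best_case_speedup(0.03)  # roughly 1.03x faster
time_saved = 1 - 1 / speedup       # fraction of the original runtime saved
```

Even in this best case the query finishes only about 3% sooner, which is the ceiling the quote is pointing at.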

We collaborate with open-source and commercial model providers to bring their unreleased models to community for preview testing.

Model providers can test their unreleased models anonymously, meaning the models' names will be anonymized. A model is considered unreleased if its weights are neither open, nor available via a public API or service.

— LMSYS