April 2024

147 posts: 5 entries, 59 links, 26 quotes, 57 beats

April 8, 2024

Introducing Enhance WASM (via) “Backend agnostic server-side rendering (SSR) for Web Components”—fascinating new project from Brian LeRoux and Begin.

The idea here is to provide server-side rendering of Web Components using WebAssembly that can run on any platform that is supported within the Extism WASM ecosystem.

The key is the enhance-ssr.wasm bundle, a 4.1MB WebAssembly version of the enhance-ssr JavaScript library, compiled using the Extism JavaScript PDK (Plugin Development Kit) which itself bundles a WebAssembly version of QuickJS.

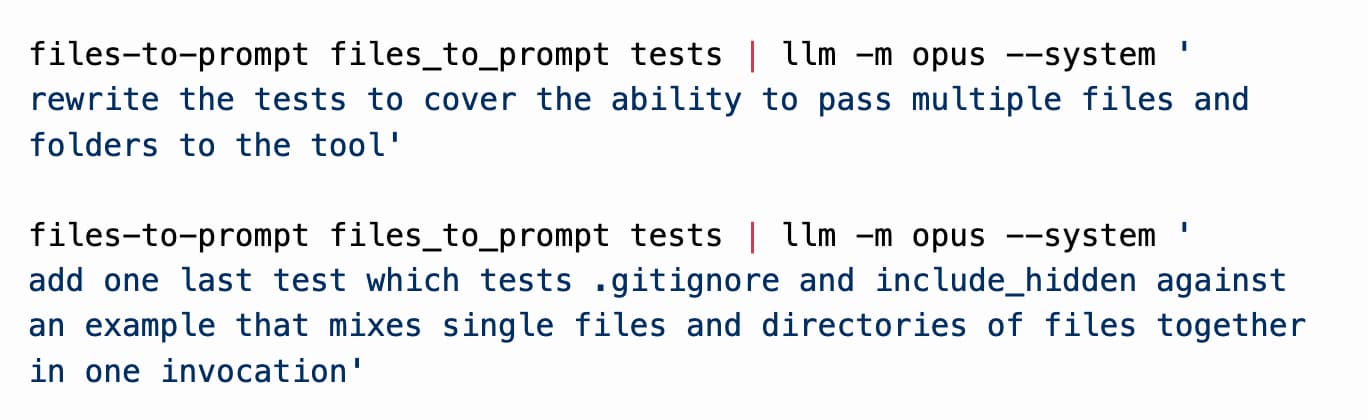

Building files-to-prompt entirely using Claude 3 Opus

files-to-prompt is a new tool I built to help me pipe several files at once into prompts to LLMs such as Claude and GPT-4.

[... 3,235 words]April 9, 2024

Hello World (via) Lennon McLean dives deep down the rabbit hole of what happens when you execute the binary compiled from “Hello world” in C on a Linux system, digging into the details of ELF executables, objdump disassembly, the C standard library, stack frames, null-terminated strings and taking a detour through musl because it’s easier to read than Glibc.

llm.c (via) Andrej Karpathy implements LLM training—initially for GPT-2, other architectures to follow—in just over 1,000 lines of C on top of CUDA. Includes a tutorial about implementing LayerNorm by porting an implementation from Python.

Command R+ now ranked 6th on the LMSYS Chatbot Arena. The LMSYS Chatbot Arena Leaderboard is one of the most interesting approaches to evaluating LLMs because it captures their ever-elusive “vibes”—it works by users voting on the best responses to prompts from two initially hidden models

Big news today is that Command R+—the brand new open weights model (Creative Commons non-commercial) by Cohere—is now the highest ranked non-proprietary model, in at position six and beating one of the GPT-4s.

(Linking to my screenshot on Mastodon.)

A solid pattern to build LLM Applications (feat. Claude) (via) Hrishi Olickel is one of my favourite prompt whisperers. In this YouTube video he walks through his process for building quick interactive applications with the assistance of Claude 3, spinning up an app that analyzes his meeting transcripts to extract participants and mentioned organisations, then presents a UI for exploring the results built with Next.js and shadcn/ui.

An interesting tip I got from this: use the weakest, not the strongest models to iterate on your prompts. If you figure out patterns that work well with Claude 3 Haiku they will have a significantly lower error rate with Sonnet or Opus. The speed of the weaker models also means you can iterate much faster, and worry less about the cost of your experiments.

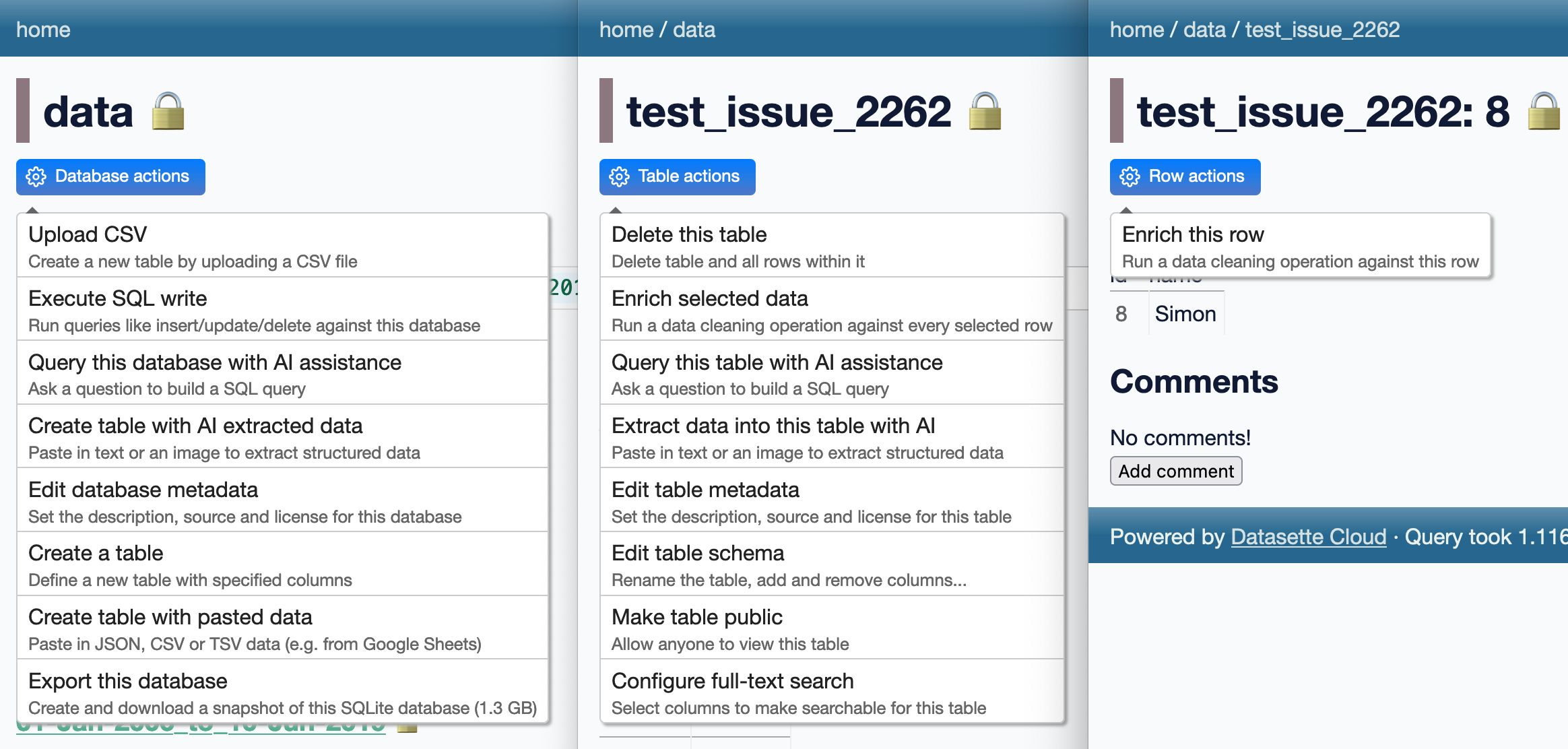

Extracting data from unstructured text and images with Datasette and GPT-4 Turbo. Datasette Extract is a new Datasette plugin that uses GPT-4 Turbo (released to general availability today) and GPT-4 Vision to extract structured data from unstructured text and images.

I put together a video demo of the plugin in action today, and posted it to the Datasette Cloud blog along with screenshots and a tutorial describing how to use it.

April 10, 2024

Mistral tweet a magnet link for mixtral-8x22b. Another open model release from Mistral using their now standard operating procedure of tweeting out a raw torrent link.

This one is an 8x22B Mixture of Experts model. Their previous most powerful openly licensed release was Mixtral 8x7B, so this one is a whole lot bigger (a 281GB download)—and apparently has a 65,536 context length, at least according to initial rumors on Twitter.

Gemini 1.5 Pro public preview (via) Huge release from Google: Gemini 1.5 Pro—the GPT-4 competitive model with the incredible 1 million token context length—is now available without a waitlist in 180+ countries (including the USA but not Europe or the UK as far as I can tell)... and the API is free for 50 requests/day (rate limited to 2/minute).

Beyond that you’ll need to pay—$7/million input tokens and $21/million output tokens, which is slightly less than GPT-4 Turbo and a little more than Claude 3 Sonnet.

They also announced audio input (up to 9.5 hours in a single prompt), system instruction support and a new JSON mode.

Three major LLM releases in 24 hours (plus weeknotes)

I’m a bit behind on my weeknotes, so there’s a lot to cover here. But first... a review of the last 24 hours of Large Language Model news. All times are in US Pacific on April 9th 2024.

[... 1,401 words]The challenge [with RAG] is that most corner-cutting solutions look like they’re working on small datasets while letting you pretend that things like search relevance don’t matter, while in reality relevance significantly impacts quality of responses when you move beyond prototyping (whether they’re literally search relevance or are better tuned SQL queries to retrieve more appropriate rows). This creates a false expectation of how the prototype will translate into a production capability, with all the predictable consequences: underestimating timelines, poor production behavior/performance, etc.

Notes on how to use LLMs in your product. A whole bunch of useful observations from Will Larson here. I love his focus on the key characteristic of LLMs that “you cannot know whether a given response is accurate”, nor can you calculate a dependable confidence score for a response—and as a result you need to either “accept potential inaccuracies (which makes sense in many cases, humans are wrong sometimes too) or keep a Human-in-the-Loop (HITL) to validate the response.”

Shell History Is Your Best Productivity Tool (via) Martin Heinz drops a wealth of knowledge about ways to configure zsh (the default shell on macOS these days) to get better utility from your shell history.

April 11, 2024

[on GitHub Copilot] It’s like insisting to walk when you can take a bike. It gets the hard things wrong but all the easy things right, very helpful and much faster. You have to learn what it can and can’t do.

Use an llm to automagically generate meaningful git commit messages. Neat, thoroughly documented recipe by Harper Reed using my LLM CLI tool as part of a scheme for if you’re feeling too lazy to write a commit message—it uses a prepare-commit-msg Git hook which runs any time you commit without a message and pipes your changes to a model along with a custom system prompt.

3Blue1Brown: Attention in transformers, visually explained. Grant Sanderson publishes animated explainers of mathematical topics on YouTube, to over 6 million subscribers. His latest shows how the attention mechanism in transformers (the algorithm behind most LLMs) works and is by far the clearest explanation I’ve seen of the topic anywhere.

I was intrigued to find out what tool he used to produce the visualizations. It turns out Grant built his own open source Python animation library, manim, to enable his YouTube work.

April 12, 2024

The language issues are indicative of the bigger problem facing the AI Pin, ChatGPT, and frankly, every other AI product out there: you can’t see how it works, so it’s impossible to figure out how to use it. [...] our phones are constant feedback machines — colored buttons telling us what to tap, instant activity every time we touch or pinch or scroll. You can see your options and what happens when you pick one. With AI, you don’t get any of that. Using the AI Pin feels like wishing on a star: you just close your eyes and hope for the best. Most of the time, nothing happens.

How we built JSR (via) Really interesting deep dive by Luca Casonato into the engineering behind the new JSR alternative JavaScript package registry launched recently by Deno.

The backend uses PostgreSQL and a Rust API server hosted on Google Cloud Run.

The frontend uses Fresh, Deno’s own server-side JavaScript framework which leans heavily in the concept of “islands”—a progressive enhancement technique where pages are rendered on the server and small islands of interactivity are added once the page has loaded.

April 13, 2024

Lessons after a half-billion GPT tokens (via) Ken Kantzer presents some hard-won experience from shipping real features on top of OpenAI’s models.

They ended up settling on a very basic abstraction over the chat API—mainly to handle automatic retries on a 500 error. No complex wrappers, not even JSON mode or function calling or system prompts.

Rather than counting tokens they estimate tokens as 3 times the length in characters, which works well enough.

One challenge they highlight for structured data extraction (one of my favourite use-cases for LLMs): “GPT really cannot give back more than 10 items. Trying to have it give you back 15 items? Maybe it does it 15% of the time.”

(Several commenters on Hacker News report success in getting more items back by using numbered keys or sequence IDs in the returned JSON to help the model keep count.)

April 14, 2024

redka (via) Anton Zhiyanov’s new project to build a subset of Redis (including protocol support) using Go and SQLite. Also works as a Go library.

The guts of the SQL implementation are in the internal/sqlx folder.

April 15, 2024

[On complaints about Claude 3 reduction in quality since launch] The model is stored in a static file and loaded, continuously, across 10s of thousands of identical servers each of which serve each instance of the Claude model. The model file never changes and is immutable once loaded; every shard is loading the same model file running exactly the same software. We haven’t changed the temperature either. We don’t see anywhere where drift could happen. The files are exactly the same as at launch and loaded each time from a frozen pristine copy.

— Jason D. Clinton, Anthropic

OpenAI Batch API (via) OpenAI are now offering a 50% discount on batch chat completion API calls if you submit them in bulk and allow for up to 24 hours for them to be run.

Requests are sent as a newline-delimited JSON file, with each line looking something like this:

{"custom_id": "request-1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-3.5-turbo", "messages": [{"role": "system", "content": "You are a helpful assistant."}, {"role": "user", "content": "What is 2+2?"}]}}

You upload a file for the batch, kick off a batch request and then poll for completion.

This makes GPT-3.5 Turbo cheaper than Claude 3 Haiku - provided you're willing to wait a few hours for your responses.