Monday, 5th August 2024

[On WebGPU in Firefox] There is a lot of work to do still to make sure we comply with the spec. in a way that's acceptable to ship in a browser. We're 90% of the way there in terms of functionality, but the last 10% of fixing up spec. changes in the last few years + being significantly more resourced-constrained (we have 3 full-time folks, Chrome has/had an order of magnitude more humans working on WebGPU) means we've got our work cut out for us. We're hoping to ship sometime in the next year, but I won't make promises here.

How to Get or Create in PostgreSQL (via) Get or create - for example to retrieve an existing tag record from a database table if it already exists or insert it if it doesn’t - is a surprisingly difficult operation.

Haki Benita uses it to illustrate a variety of interesting PostgreSQL concepts.

New to me: a pattern that runs INSERT INTO tags (name) VALUES (tag_name) RETURNING *; and then catches the constraint violation and returns a record instead has a disadvantage at scale: “The table contains a dead tuple for every attempt to insert a tag that already existed” - so until vacuum runs you can end up with significant table bloat!

Haki’s conclusion is that the best solution relies on an upcoming feature coming in PostgreSQL 17: the ability to combine the MERGE operation with a RETURNING clause:

WITH new_tags AS (

MERGE INTO tags

USING (VALUES ('B'), ('C')) AS t(name)

ON tags.name = t.name

WHEN NOT MATCHED THEN

INSERT (name) VALUES (t.name)

RETURNING *

)

SELECT * FROM tags WHERE name IN ('B', 'C')

UNION ALL

SELECT * FROM new_tags;

I wonder what the best pattern for this in SQLite is. Could it be as simple as this?

INSERT OR IGNORE INTO tags (name) VALUES ('B'), ('C');

The SQLite INSERT documentation doesn't currently provide extensive details for INSERT OR IGNORE, but there are some hints in this forum thread. This post by Rob Hoelz points out that INSERT OR IGNORE will silently ignore any constraint violation, so INSERT INTO tags (tag) VALUES ('C'), ('D') ON CONFLICT(tag) DO NOTHING may be a better option.

Leaked Documents Show Nvidia Scraping ‘A Human Lifetime’ of Videos Per Day to Train AI.

Samantha Cole at 404 Media reports on a huge leak of internal NVIDIA communications - mainly from a Slack channel - revealing details of how they have been collecting video training data for a new video foundation model called Cosmos. The data is mostly from YouTube, downloaded via yt-dlp using a rotating set of AWS IP addresses and consisting of millions (maybe even hundreds of millions) of videos.

The fact that companies scrape unlicensed data to train models isn't at all surprising. This article still provides a fascinating insight into what model training teams care about, with details like this from a project update via email:

As we measure against our desired distribution focus for the next week remains on cinematic, drone footage, egocentric, some travel and nature.

Or this from Slack:

Movies are actually a good source of data to get gaming-like 3D consistency and fictional content but much higher quality.

My intuition here is that the backlash against scraped video data will be even more intense than for static images used to train generative image models. Video is generally more expensive to create, and video creators (such as Marques Brownlee / MKBHD, who is mentioned in a Slack message here as a potential source of "tech product neviews - super high quality") have a lot of influence.

There was considerable uproar a few weeks ago over this story about training against just captions scraped from YouTube, and now we have a much bigger story involving the actual video content itself.

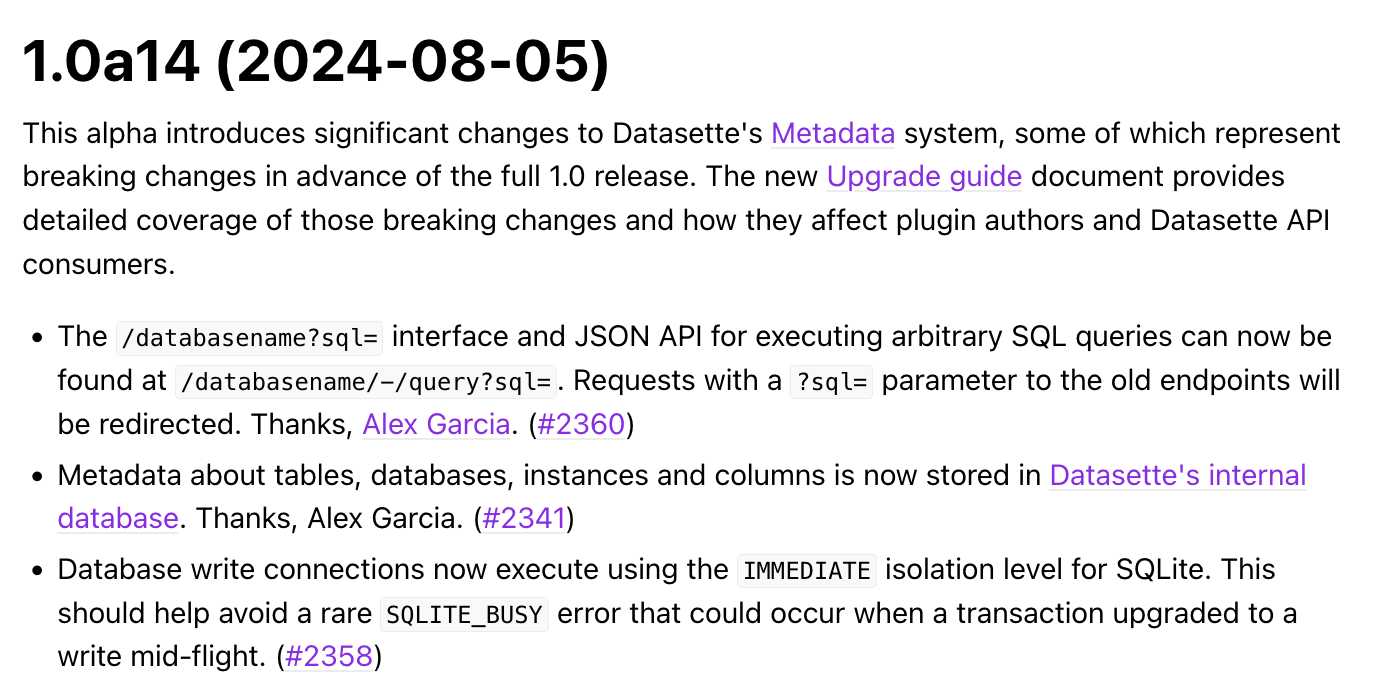

Datasette 1.0a14: The annotated release notes

Released today: Datasette 1.0a14. This alpha includes significant contributions from Alex Garcia, including some backwards-incompatible changes in the run-up to the 1.0 release.

[... 1,424 words]