GPT-5.2

11th December 2025

OpenAI reportedly declared a “code red” on the 1st of December in response to increasingly credible competition from the likes of Google’s Gemini 3. It’s less than two weeks later and they just announced GPT-5.2, calling it “the most capable model series yet for professional knowledge work”.

Key characteristics of GPT-5.2

The new model comes in two variants: GPT-5.2 and GPT-5.2 Pro. There’s no Mini variant yet.

GPT-5.2 is available via their UI in both “instant” and “thinking” modes, presumably still corresponding to the API concept of different reasoning effort levels.

The knowledge cut-off date for both variants is now August 31st 2025. This is significant—GPT 5.1 and 5 were both Sep 30, 2024 and GPT-5 mini was May 31, 2024.

Both of the 5.2 models have a 400,000 token context window and 128,000 max output tokens—no different from 5.1 or 5.

Pricing wise 5.2 is a rare increase—it’s 1.4x the cost of GPT 5.1, at $1.75/million input and $14/million output. GPT-5.2 Pro is $21.00/million input and a hefty $168.00/million output, putting it up there with their previous most expensive models o1 Pro and GPT-4.5.

So far the main benchmark results we have are self-reported by OpenAI. The most interesting ones are a 70.9% score on their GDPval “Knowledge work tasks” benchmark (GPT-5 got 38.8%) and a 52.9% on ARC-AGI-2 (up from 17.6% for GPT-5.1 Thinking).

The ARC Prize Twitter account provided this interesting note on the efficiency gains for GPT-5.2 Pro

A year ago, we verified a preview of an unreleased version of @OpenAI o3 (High) that scored 88% on ARC-AGI-1 at est. $4.5k/task

Today, we’ve verified a new GPT-5.2 Pro (X-High) SOTA score of 90.5% at $11.64/task

This represents a ~390X efficiency improvement in one year

GPT-5.2 can be accessed in OpenAI’s Codex CLI tool like this:

codex -m gpt-5.2

There are three new API models:

- gpt-5.2—I think this is what you get if you select “GPT-5.2 Thinking” in ChatGPT but I’m a little confused.

- gpt-5.2-chat-latest—the model used by ChatGPT for “GPT-5.2 Instant” mode. It’s priced the same as GPT-5.2 but has a reduced 128,000 context window with 16,384 max output tokens.

- gpt-5.2-pro

OpenAI have published a new GPT-5.2 Prompting Guide. An interesting note from that document is that compaction can now be run with a new dedicated server-side API:

For long-running, tool-heavy workflows that exceed the standard context window, GPT-5.2 with Reasoning supports response compaction via the

/responses/compactendpoint. Compaction performs a loss-aware compression pass over prior conversation state, returning encrypted, opaque items that preserve task-relevant information while dramatically reducing token footprint. This allows the model to continue reasoning across extended workflows without hitting context limits.

It’s better at vision

One note from the announcement that caught my eye:

GPT‑5.2 Thinking is our strongest vision model yet, cutting error rates roughly in half on chart reasoning and software interface understanding.

I had disappointing results from GPT-5 on an OCR task a while ago. I tried it against GPT-5.2 and it did much better:

llm -m gpt-5.2 ocr -a https://static.simonwillison.net/static/2025/ft.jpegHere’s the result from that, which cost 1,520 input and 1,022 for a total of 1.6968 cents.

Rendering some pelicans

For my classic “Generate an SVG of a pelican riding a bicycle” test:

llm -m gpt-5.2 "Generate an SVG of a pelican riding a bicycle"

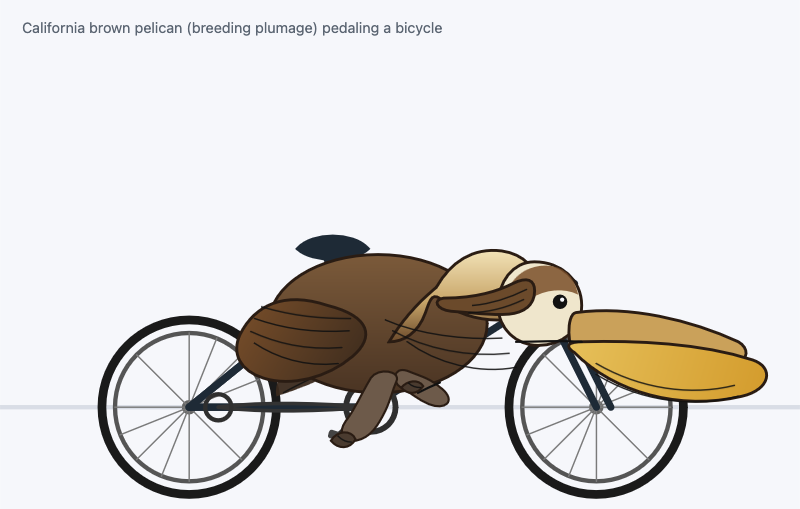

And for the more advanced alternative test, which tests instruction following in a little more depth:

llm -m gpt-5.2 "Generate an SVG of a California brown pelican riding a bicycle. The bicycle

must have spokes and a correctly shaped bicycle frame. The pelican must have its

characteristic large pouch, and there should be a clear indication of feathers.

The pelican must be clearly pedaling the bicycle. The image should show the full

breeding plumage of the California brown pelican."

Update 14th December 2025: I used GPT-5.2 running in Codex CLI to port a complex Python library to JavaScript. It ran without interference for nearly four hours and completed a complex task exactly to my specification.

More recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026