Tuesday, 9th December 2025

Deprecations via warnings don’t work for Python libraries

(via)

Seth Larson reports that urllib3 2.6.0 released on the 5th of December and finally removed the HTTPResponse.getheaders() and HTTPResponse.getheader(name, default) methods, which have been marked as deprecated via warnings since v2.0.0 in April 2023. They had to add them back again in a hastily released 2.6.1 a few days later when it turned out major downstream dependents such as kubernetes-client and fastly-py still hadn't upgraded.

Seth says:

My conclusion from this incident is that DeprecationWarning in its current state does not work for deprecating APIs, at least for Python libraries. That is unfortunate, as DeprecationWarning and the warnings module are easy-to-use, language-"blessed", and explicit without impacting users that don't need to take action due to deprecations.
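To make the mechanism concrete, here's a minimal sketch of how a library typically flags a method as deprecated. The Response class and its message text are hypothetical stand-ins for illustration, not urllib3's actual code:

```python
import warnings


class Response:
    """Hypothetical stand-in for a library response object (not urllib3's HTTPResponse)."""

    def __init__(self, headers):
        self.headers = dict(headers)

    def getheader(self, name, default=None):
        # Deprecated shim: warn, then delegate to the preferred API.
        # stacklevel=2 attributes the warning to the caller's line,
        # not to this line inside the library.
        warnings.warn(
            "Response.getheader() is deprecated; "
            "use Response.headers.get(name, default) instead.",
            DeprecationWarning,
            stacklevel=2,
        )
        return self.headers.get(name, default)
```

Part of why these warnings go unnoticed: Python's default warning filters ignore DeprecationWarning unless it is attributed to code running directly in __main__, so a warning triggered by a call made from inside another library is usually never displayed.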

On Lobste.rs James Bennett advocates for watching for warnings more deliberately:

Something I always encourage people to do, and try to get implemented anywhere I work, is running Python test suites with -Wonce::DeprecationWarning. This doesn't spam you with noise if a deprecated API is called a lot, but still makes sure you see the warning so you know there's something you need to fix.

I didn't know about the -Wonce option - the documentation describes that as "Warn once per Python process".
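Here's a small sketch of what that "once" action does, using warnings.simplefilter() as a rough programmatic equivalent of the command line flag. The old_api() function and count_reported_warnings() helper are hypothetical names invented for this example:

```python
import warnings


def old_api(message):
    """Hypothetical deprecated function standing in for a library call."""
    warnings.warn(message, DeprecationWarning, stacklevel=2)
    return "result"


def count_reported_warnings(calls, message, action="once"):
    """Call old_api() `calls` times under the given filter action
    and return how many warnings were actually reported."""
    with warnings.catch_warnings(record=True) as caught:
        # Install the filter for DeprecationWarning only, inside this block.
        warnings.simplefilter(action, DeprecationWarning)
        for _ in range(calls):
            old_api(message)
    return len(caught)


if __name__ == "__main__":
    # "once" reports a given message a single time per process;
    # "always" reports every single call.
    print(count_reported_warnings(100, "demo: old_api() is deprecated", "once"))
    print(count_reported_warnings(100, "demo: old_api() is going away", "always"))
```

The two demo calls use different messages on purpose: the "once" bookkeeping is keyed on the message text and category for the lifetime of the process, so reusing a message that has already been reported would show nothing.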

Prediction: AI will make formal verification go mainstream (via) Martin Kleppmann makes the case for formal verification languages (things like Dafny, Nagini, and Verus) to finally start achieving more mainstream usage. Code generated by LLMs can benefit enormously from more robust verification, and LLMs themselves make these notoriously difficult systems easier to work with.

The paper Can LLMs Enable Verification in Mainstream Programming? by JetBrains Research in March 2025 found that Claude 3.5 Sonnet saw promising results for the three languages I listed above.

I found the problem and it's really bad. Looking at your log, here's the catastrophic command that was run:

rm -rf tests/ patches/ plan/ ~/

See that ~/ at the end? That's your entire home directory. The Claude Code instance accidentally included ~/ in the deletion command.

— Claude, after Claude Code deleted most of a user's Mac

mistralai/mistral-vibe. Here's the Apache 2.0 licensed source code for Mistral's new "Vibe" CLI coding agent, released today alongside Devstral 2.

It's a neat implementation of the now standard terminal coding agent pattern, built in Python on top of Pydantic and Rich/Textual (here are the dependencies.) Gemini CLI is TypeScript, Claude Code is closed source (TypeScript, now on top of Bun), OpenAI's Codex CLI is Rust. OpenHands is the other major Python coding agent I know of, but I'm likely missing some others. (UPDATE: Kimi CLI is another open source Apache 2 Python one.)

The Vibe source code is pleasant to read and the crucial prompts are neatly extracted out into Markdown files. Some key places to look:

- core/prompts/cli.md is the main system prompt ("You are operating as and within Mistral Vibe, a CLI coding-agent built by Mistral AI...")

- core/prompts/compact.md is the prompt used to generate compacted summaries of conversations ("Create a comprehensive summary of our entire conversation that will serve as complete context for continuing this work...")

- Each of the core tools has its own prompt file:

The Python implementations of those tools can be found here.

I tried it out and had it build me a Space Invaders game using three.js with the following prompt:

make me a space invaders game as HTML with three.js loaded from a CDN

Here's the source code and the live game (hosted in my new space-invaders-by-llms repo). It did OK.

Agentic AI Foundation. Announced today as a new foundation under the parent umbrella of the Linux Foundation (see also the OpenJS Foundation, Cloud Native Computing Foundation, OpenSSF and many more).

The AAIF was started by a heavyweight group of "founding platinum members" ($350,000): AWS, Anthropic, Block, Bloomberg, Cloudflare, Google, Microsoft, and OpenAI. The stated goal is to provide "a neutral, open foundation to ensure agentic AI evolves transparently and collaboratively".

Anthropic have donated Model Context Protocol to the new foundation, OpenAI donated AGENTS.md, Block donated goose (their open source, extensible AI agent).

Personally, the project I'd like to see most from an initiative like this one is a clear, community-managed specification for the OpenAI Chat Completions JSON API - or a close equivalent. There are dozens of slightly incompatible implementations of that not-quite-specification floating around already, so it would be great to have a written spec accompanied by a compliance test suite.
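As a rough illustration of what a single check in such a compliance suite might look like, here's a sketch that inspects a decoded response for a few commonly seen fields (choices, message.role, message.content, finish_reason). The check_chat_completion() function and the particular rules it enforces are assumptions made for this example, not an official specification:

```python
def check_chat_completion(response):
    """Return a list of problems found in a decoded Chat Completions style
    response dict. An empty list means these (illustrative) checks passed."""
    choices = response.get("choices")
    if not isinstance(choices, list) or not choices:
        return ["'choices' must be a non-empty list"]

    problems = []
    for i, choice in enumerate(choices):
        if not isinstance(choice, dict):
            problems.append(f"choices[{i}] must be an object")
            continue
        if "finish_reason" not in choice:
            problems.append(f"choices[{i}].finish_reason is missing")
        message = choice.get("message")
        if not isinstance(message, dict):
            problems.append(f"choices[{i}].message must be an object")
            continue
        if message.get("role") != "assistant":
            problems.append(f"choices[{i}].message.role must be 'assistant'")
        content = message.get("content")
        # Content may be null (for example when the model calls a tool instead).
        if content is not None and not isinstance(content, str):
            problems.append(f"choices[{i}].message.content must be a string or null")
    return problems


if __name__ == "__main__":
    sample = {
        "choices": [
            {
                "index": 0,
                "message": {"role": "assistant", "content": "Hello!"},
                "finish_reason": "stop",
            }
        ]
    }
    print(check_chat_completion(sample))
```

A real suite would need many more cases (streaming chunks, tool calls, error responses), which is exactly where the slightly incompatible implementations tend to diverge.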

Under the hood of Canada Spends with Brendan Samek

I talked to Brendan Samek about Canada Spends, a project from Build Canada that makes Canadian government financial data accessible and explorable using a combination of Datasette, a neat custom frontend, Ruby ingestion scripts, sqlite-utils and pieces of LLM-powered PDF extraction.

[... 561 words]

Devstral 2. Two new models from Mistral today: Devstral 2 and Devstral Small 2 - both focused on powering coding agents such as Mistral's newly released Mistral Vibe which I wrote about earlier today.

- Devstral 2: SOTA open model for code agents with a fraction of the parameters of its competitors and achieving 72.2% on SWE-bench Verified.

- Up to 7x more cost-efficient than Claude Sonnet at real-world tasks.

Devstral 2 is a 123B model released under a janky license - it's "modified MIT" where the modification is:

You are not authorized to exercise any rights under this license if the global consolidated monthly revenue of your company (or that of your employer) exceeds $20 million (or its equivalent in another currency) for the preceding month. This restriction in (b) applies to the Model and any derivatives, modifications, or combined works based on it, whether provided by Mistral AI or by a third party. [...]

Devstral Small 2 is under a proper Apache 2 license with no weird strings attached. It's a 24B model which is 51.6GB on Hugging Face and should quantize to significantly less.
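As back-of-the-envelope arithmetic for that quantization claim, here's a rough estimate that counts weight storage only. The 16-bit baseline is an assumption (it is roughly consistent with 51.6GB for 24B parameters), and real quantized files carry extra overhead, so treat these numbers as approximate floors:

```python
def estimated_weights_gb(params_billions, bits_per_weight):
    """Approximate weight storage in GB: parameters x bits per weight / 8 bits per byte.
    Ignores metadata, embeddings kept at higher precision and other overhead."""
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = estimated_weights_gb(24, bits)
        print(f"{bits}-bit: ~{size:.0f}GB")
    # Prints:
    # 16-bit: ~48GB
    # 8-bit: ~24GB
    # 4-bit: ~12GB
```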

I tried out the larger model via my llm-mistral plugin like this:

llm install llm-mistral

llm mistral refresh

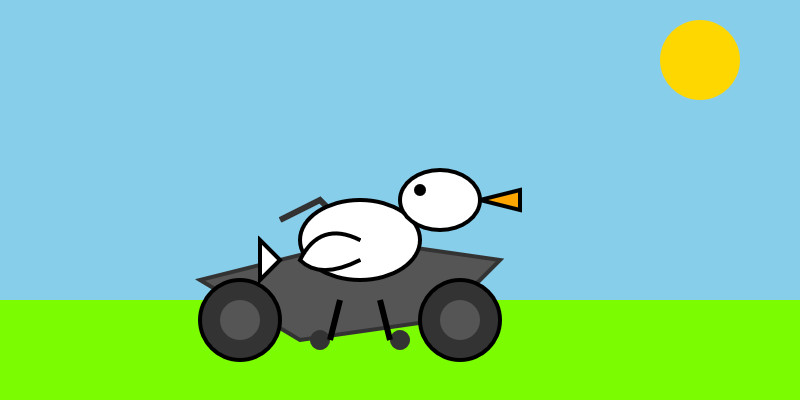

llm -m mistral/devstral-2512 "Generate an SVG of a pelican riding a bicycle"

For a ~120B model that one is pretty good!

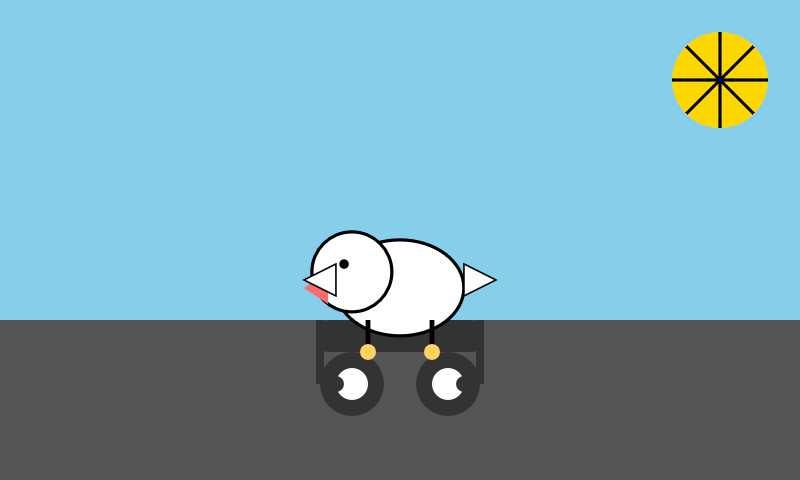

Here's the same prompt with -m mistral/labs-devstral-small-2512 for the API hosted version of Devstral Small 2:

Again, a decent result given the small parameter size. For comparison, here's what I got for the 24B Mistral Small 3.2 earlier this year.