1,884 posts tagged “ai”

"AI is whatever hasn't been done yet"—Larry Tesler

2025

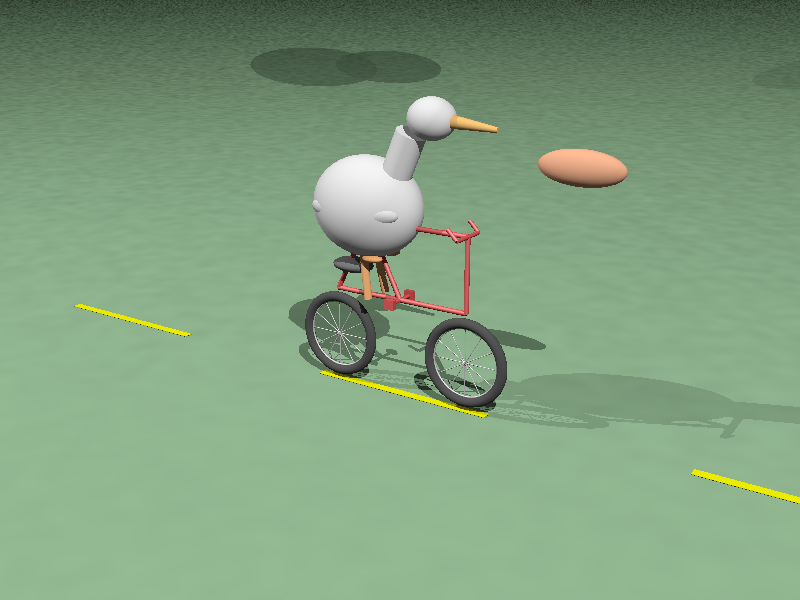

Pelican on a Bike—Raytracer Edition (via) beetle_b ran this prompt against a bunch of recent LLMs:

Write a POV-Ray file that shows a pelican riding on a bicycle.

This turns out to be a harder challenge than SVG, presumably because there are fewer examples of POV-Ray in the training data:

Most produced a script that failed to parse. I would paste the error back into the chat and let it attempt a fix.

The results are really fun though! A lot of them end up accompanied by a weird floating egg for some reason - here's Claude Opus 4:

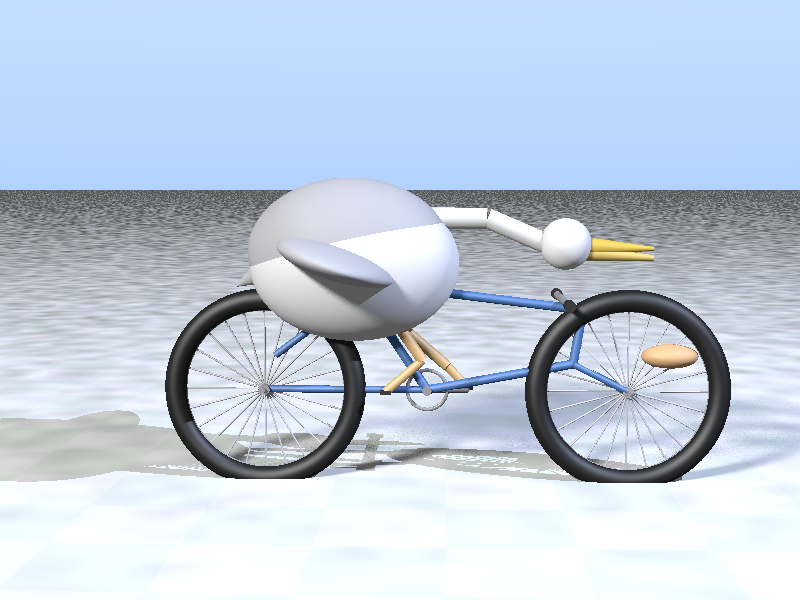

I think the best result came from GPT-5 - again with the floating egg though!

I decided to try this on the new gpt-5-codex-mini, using the trick I described yesterday. Here's the code it wrote.

./target/debug/codex prompt -m gpt-5-codex-mini \
  "Write a POV-Ray file that shows a pelican riding on a bicycle."

It turns out you can render POV files on macOS like this:

brew install povray

povray demo.pov # produces demo.png

The code GPT-5 Codex Mini created didn't quite work, so I round-tripped it through Sonnet 4.5 via Claude Code a couple of times - transcript here. Once it had fixed the errors I got this:

That's significantly worse than the one beetle_b got from GPT-5 Mini!

Reverse engineering Codex CLI to get GPT-5-Codex-Mini to draw me a pelican

OpenAI partially released a new model yesterday called GPT-5-Codex-Mini, which they describe as "a more compact and cost-efficient version of GPT-5-Codex". It’s currently only available via their Codex CLI tool and VS Code extension, with proper API access "coming soon". I decided to use Codex to reverse engineer the Codex CLI tool and give me the ability to prompt the new model directly.

[... 1,774 words]

The big advantage of MCP over OpenAPI is that it is very clear about auth. [...]

Maybe an agent could read the docs and write code to auth. But we don't actually want that, because it implies the agent gets access to the API token! We want the agent's harness to handle that and never reveal the key to the agent. [...]

OAuth has always assumed that the client knows what API it's talking to, and so the client's developer can register the client with that API in advance to get a client_id/client_secret pair. Agents, though, don't know what MCPs they'll talk to in advance.

So MCP requires OAuth dynamic client registration (RFC 7591), which practically nobody actually implemented prior to MCP. DCR might as well have been introduced by MCP, and may actually be the most important unlock in the whole spec.
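To make the dynamic client registration step concrete, here is a minimal sketch of the JSON body a client might POST to a server's registration endpoint. The field names come from RFC 7591; the client name, the redirect URI and the helper function are hypothetical, and no request is actually sent.

```python
import json


def build_registration_request(client_name: str, redirect_uri: str) -> dict:
    """Build an RFC 7591 client metadata document for a public client.

    The values are illustrative; a real server may require other fields.
    """
    return {
        "client_name": client_name,
        "redirect_uris": [redirect_uri],
        "grant_types": ["authorization_code", "refresh_token"],
        "response_types": ["code"],
        # "none" marks a public client that holds no client secret.
        "token_endpoint_auth_method": "none",
    }


payload = build_registration_request(
    "example-agent-harness",           # hypothetical client name
    "http://127.0.0.1:8765/callback",  # hypothetical local redirect URI
)
print(json.dumps(payload, indent=2))
```

The server's response would carry a client_id (plus a client_secret for confidential clients). In the setup described above, the harness would hold on to those values and keep them away from the agent.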

I have AiDHD

It has never been easier to build an MVP and in turn, it has never been harder to keep focus. When new features always feel like they're just a prompt away, feature creep feels like a never ending battle. Being disciplined is more important than ever.

AI still doesn't change one very important thing: you still need to make something people want. I think that getting users (even free ones) will become significantly harder as the bar for user's time will only get higher as their options increase.

Being quicker to get to the point of failure is actually incredibly valuable. Even just over a year ago, many of these projects would have taken months to build.

— Josh Cohenzadeh, AiDHD

My hunch is that existing LLMs make it easier to build a new programming language in a way that captures new developers.

Most programming languages are similar enough to existing languages that you only need to know a small number of details to use them: what's the core syntax for variables, loops, conditionals and functions? How does memory management work? What's the concurrency model?

For many languages you can fit all of that, including illustrative examples, in a few thousand tokens of text.

So ship your new programming language with a Claude Skills style document and give your early adopters the ability to write it with LLMs. The LLMs should handle that very well, especially if they get to run an agentic loop against a compiler or even a linter that you provide.

This post started as a comment.

Using Codex CLI with gpt-oss:120b on an NVIDIA DGX Spark via Tailscale. Inspired by a YouTube comment I wrote up how I run OpenAI's Codex CLI coding agent against the gpt-oss:120b model running in Ollama on my NVIDIA DGX Spark via a Tailscale network.

It takes a little bit of work to configure but the result is I can now use Codex CLI on my laptop anywhere in the world against a self-hosted model.

I used it to build this space invaders clone.

You should write an agent (via) Thomas Ptacek on the Fly blog:

Agents are the most surprising programming experience I’ve had in my career. Not because I’m awed by the magnitude of their powers — I like them, but I don’t like-like them. It’s because of how easy it was to get one up on its legs, and how much I learned doing that.

I think he's right: hooking up a simple agentic loop that prompts an LLM and runs a tool for it any time it requests one really is the new "hello world" of AI engineering.
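For a sense of how small that loop can be, here is a rough sketch. The model is a hard-coded stand-in (fake_model is hypothetical, as are the message and tool-call shapes), so this shows only the control flow, not any particular vendor's API.

```python
import json
from typing import Callable

# Tools the loop is allowed to run, keyed by name.
TOOLS: dict[str, Callable[..., str]] = {
    "add": lambda a, b: str(a + b),
}


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM call (hypothetical): asks for one tool, then answers."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"type": "text", "text": f"The answer is {last['content']}."}
    return {"type": "tool_call", "name": "add", "arguments": {"a": 2, "b": 3}}


def run_agent(prompt: str, model=fake_model, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "text":
            return reply["text"]
        # The model requested a tool: run it and feed the result back.
        result = TOOLS[reply["name"]](**reply["arguments"])
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is 2 + 3?")
print(answer)
```

Swapping fake_model for a real API call and adding more entries to TOOLS is most of what separates this from a working agent.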

My trepidation extends to complex literature searches. I use LLMs as secondary librarians when I’m doing research. They reliably find primary sources (articles, papers, etc.) that I miss in my initial searches.

But these searches are dangerous. I distrust LLM librarians. There is so much data in the world: you can (in good faith!) find evidence to support almost any position or conclusion. ChatGPT is not a human, and, unlike teachers & librarians & scholars, ChatGPT does not have a consistent, legible worldview. In my experience, it readily agrees with any premise you hand it — and brings citations. It may have read every article that can be read, but it has no real opinion — so it is not a credible expert.

— Ben Stolovitz, How I use AI

Kimi K2 Thinking. Chinese AI lab Moonshot's Kimi K2 established itself as one of the largest open weight models - 1 trillion parameters - back in July. They've now released the Thinking version, also a trillion parameters (MoE, 32B active) and also under their custom modified (so not quite open source) MIT license.

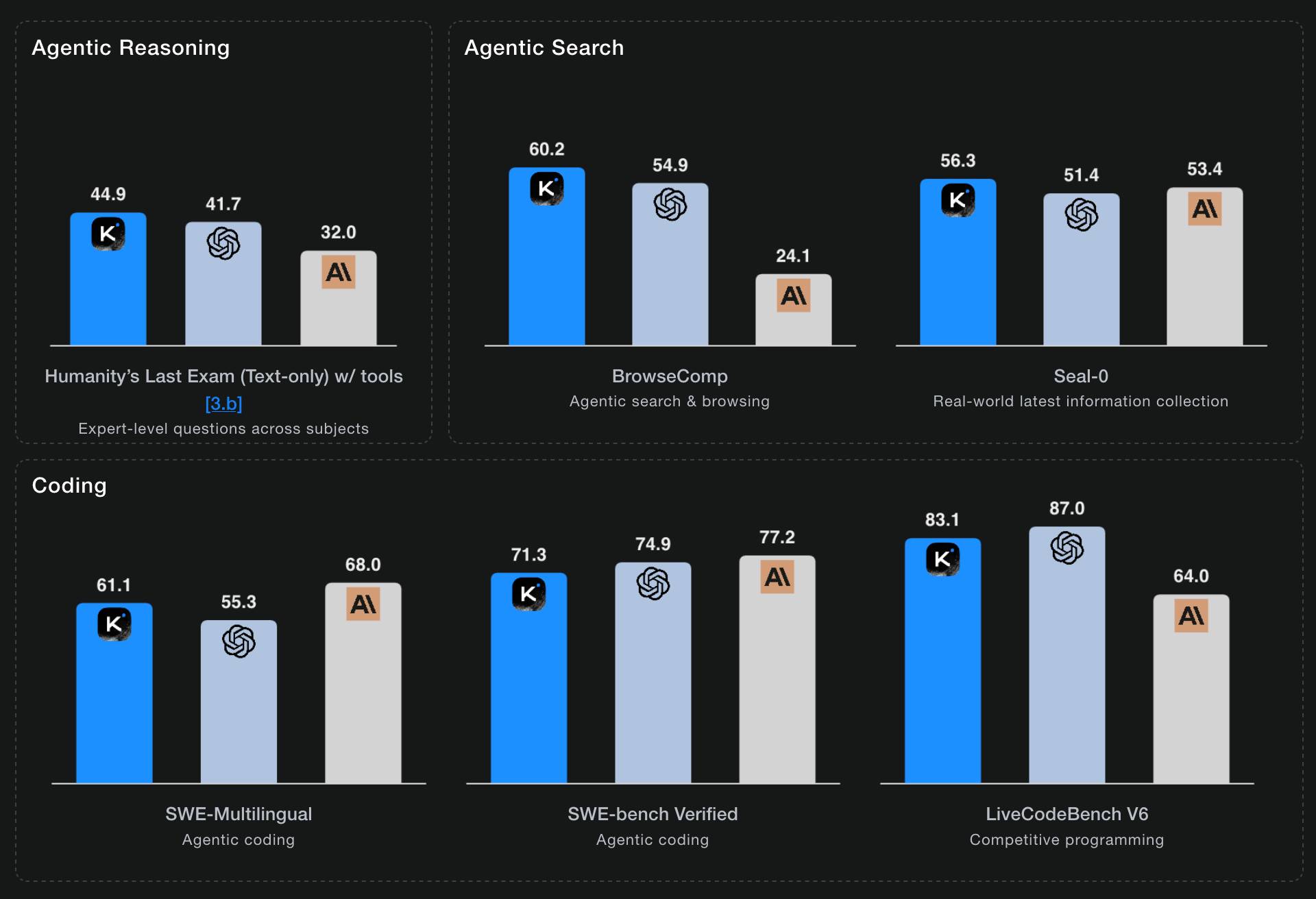

Starting with Kimi K2, we built it as a thinking agent that reasons step-by-step while dynamically invoking tools. It sets a new state-of-the-art on Humanity's Last Exam (HLE), BrowseComp, and other benchmarks by dramatically scaling multi-step reasoning depth and maintaining stable tool-use across 200–300 sequential calls. At the same time, K2 Thinking is a native INT4 quantization model with 256k context window, achieving lossless reductions in inference latency and GPU memory usage.

This one is only 594GB on Hugging Face - Kimi K2 was 1.03TB - which I think is due to the new INT4 quantization. This makes the model both cheaper and faster to host.
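A back-of-the-envelope check makes the INT4 explanation plausible. This sketch assumes a flat number of bits for every parameter and ignores anything stored at higher precision, quantization scales and file metadata, so it is only a ballpark. The 8-bit row is there for comparison and is my assumption, not a statement about how the original Kimi K2 weights are stored.

```python
def weights_size_gb(params: float, bits_per_param: float) -> float:
    """Approximate size of the weights in decimal gigabytes."""
    return params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 1e12  # "1 trillion parameters", per the post

int4_gb = weights_size_gb(TOTAL_PARAMS, 4)
int8_gb = weights_size_gb(TOTAL_PARAMS, 8)

print(f"4 bits/param: ~{int4_gb:.0f} GB")
print(f"8 bits/param: ~{int8_gb:.0f} GB")
```

Roughly 500 GB and 1,000 GB are in the same neighbourhood as the 594GB and 1.03TB listings, which fits the idea that halving the bits per weight accounts for most of the difference.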

So far the only people hosting it are Moonshot themselves. I tried it out both via their own API and via the OpenRouter proxy to it, via the llm-moonshot plugin (by NickMystic) and my llm-openrouter plugin respectively.

The buzz around this model so far is very positive. Could this be the first open weight model that's competitive with the latest from OpenAI and Anthropic, especially for long-running agentic tool call sequences?

Moonshot AI's self-reported benchmark scores show K2 Thinking beating the top OpenAI and Anthropic models (GPT-5 and Sonnet 4.5 Thinking) at "Agentic Reasoning" and "Agentic Search" but not quite top for "Coding":

I ran a couple of pelican tests:

llm install llm-moonshot

llm keys set moonshot # paste key

llm -m moonshot/kimi-k2-thinking 'Generate an SVG of a pelican riding a bicycle'

llm install llm-openrouter

llm keys set openrouter # paste key

llm -m openrouter/moonshotai/kimi-k2-thinking \
  'Generate an SVG of a pelican riding a bicycle'

Artificial Analysis said:

Kimi K2 Thinking achieves 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

CNBC quoted a source who provided the training price for the model:

The Kimi K2 Thinking model cost $4.6 million to train, according to a source familiar with the matter. [...] CNBC was unable to independently verify the DeepSeek or Kimi figures.

MLX developer Awni Hannun got it working on two 512GB M3 Ultra Mac Studios:

The new 1 Trillion parameter Kimi K2 Thinking model runs well on 2 M3 Ultras in its native format - no loss in quality!

The model was quantization aware trained (qat) at int4.

Here it generated ~3500 tokens at 15 toks/sec using pipeline-parallelism in mlx-lm

Here's the 658GB mlx-community model.

At the start of the year, most people loosely following AI probably knew of 0 [Chinese] AI labs. Now, and towards wrapping up 2025, I’d say all of DeepSeek, Qwen, and Kimi are becoming household names. They all have seasons of their best releases and different strengths. The important thing is this’ll be a growing list. A growing share of cutting edge mindshare is shifting to China. I expect some of the likes of Z.ai, Meituan, or Ant Ling to potentially join this list next year. For some of these labs releasing top tier benchmark models, they literally started their foundation model effort after DeepSeek. It took many Chinese companies only 6 months to catch up to the open frontier in ballpark of performance, now the question is if they can offer something in a niche of the frontier that has real demand for users.

— Nathan Lambert, 5 Thoughts on Kimi K2 Thinking

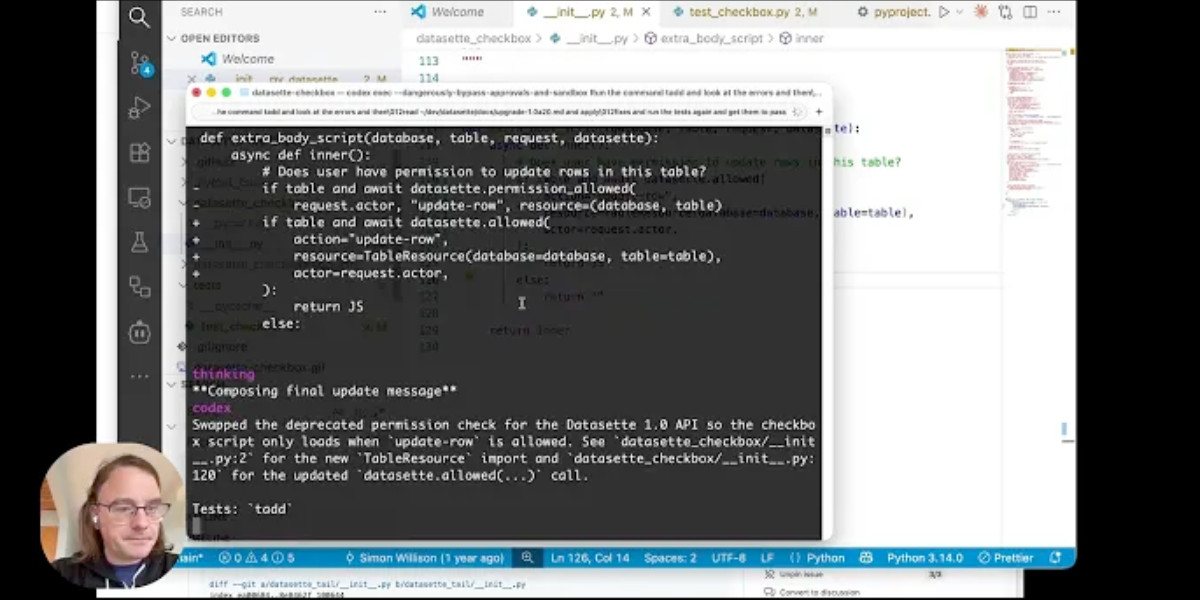

Video + notes on upgrading a Datasette plugin for the latest 1.0 alpha, with help from uv and OpenAI Codex CLI

I’m upgrading various plugins for compatibility with the new Datasette 1.0a20 alpha release and I decided to record a video of the process. This post accompanies that video with detailed additional notes.

[... 1,094 words]

Code research projects with async coding agents like Claude Code and Codex

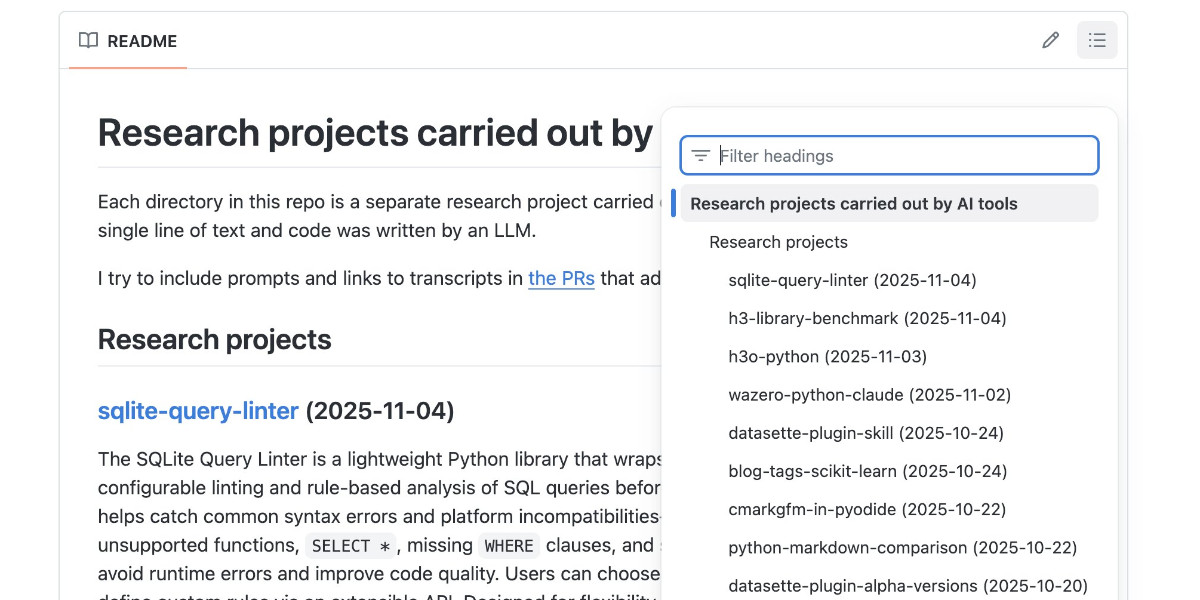

I’ve been experimenting with a pattern for LLM usage recently that’s working out really well: asynchronous code research tasks. Pick a research question, spin up an asynchronous coding agent and let it go and run some experiments and report back when it’s done.

[... 2,017 words]

I'm worried that they put co-pilot in Excel because Excel is the beast that drives our entire economy and do you know who has tamed that beast?

Brenda.

Who is Brenda?

She is a mid-level employee in every finance department, in every business across this stupid nation and the Excel goddess herself descended from the heavens, kissed Brenda on her forehead and the sweat from Brenda's brow is what allows us to do capitalism. [...]

She's gonna birth that formula for a financial report and then she's gonna send that financial report to a higher up and he's gonna need to make a change to the report and normally he would have sent it back to Brenda but he's like oh I have AI and AI is probably like smarter than Brenda and then the AI is gonna fuck it up real bad and he won't be able to recognize it because he doesn't understand Excel because AI hallucinates.

You know who's not hallucinating?

Brenda.

— Ada James, @belligerentbarbies on TikTok

Code execution with MCP: Building more efficient agents (via) When I wrote about Claude Skills I mentioned that I don't use MCP at all any more when working with coding agents - I find CLI utilities and libraries like Playwright Python to be a more effective way of achieving the same goals.

This new piece from Anthropic proposes a way to bring the two worlds more closely together.

It identifies two challenges with MCP as it exists today. The first has been widely discussed before: all of those tool descriptions take up a lot of valuable real estate in the agent context even before you start using them.

The second is more subtle but equally interesting: chaining multiple MCP tools together involves passing their responses through the context, absorbing more valuable tokens and introducing chances for the LLM to make additional mistakes.

What if you could turn MCP tools into code functions instead, and then let the LLM wire them together with executable code?

Anthropic's example here imagines a system that turns MCP tools into TypeScript files on disk, looking something like this:

// ./servers/google-drive/getDocument.ts

interface GetDocumentInput {

documentId: string;

}

interface GetDocumentResponse {

content: string;

}

/* Read a document from Google Drive */

export async function getDocument(input: GetDocumentInput): Promise<GetDocumentResponse> {

return callMCPTool<GetDocumentResponse>('google_drive__get_document', input);

}

This takes up no tokens at all - it's a file on disk. In a similar manner to Skills the agent can navigate the filesystem to discover these definitions on demand.

Then it can wire them together by generating code:

const transcript = (await gdrive.getDocument({ documentId: 'abc123' })).content;

await salesforce.updateRecord({

objectType: 'SalesMeeting',

recordId: '00Q5f000001abcXYZ',

data: { Notes: transcript }

});

Notably, the example here avoids round-tripping the response from the gdrive.getDocument() call through the model on the way to the salesforce.updateRecord() call - which is faster, more reliable, saves on context tokens, and avoids the model being exposed to any potentially sensitive data in that document.

This all looks very solid to me! I think it's a sensible way to take advantage of the strengths of coding agents and address some of the major drawbacks of MCP as it is usually implemented today.

There's one catch: Anthropic outline the proposal in some detail but provide no code to execute on it! Implementation is left as an exercise for the reader:

If you implement this approach, we encourage you to share your findings with the MCP community.

MCP Colors: Systematically deal with prompt injection risk (via) Tim Kellogg proposes a neat way to think about prompt injection, especially with respect to MCP tools.

Classify every tool with a color: red if it exposes the agent to untrusted (potentially malicious) instructions, blue if it involves a "critical action" - something you would not want an attacker to be able to trigger.

This means you can configure your agent to actively avoid mixing the two colors at once:

The Chore: Go label every data input, and every tool (especially MCP tools). For MCP tools & resources, you can use the _meta object to keep track of the color. The agent can decide at runtime (or earlier) if it’s gotten into an unsafe state.

Personally, I like to automate. I needed to label ~200 tools, so I put them in a spreadsheet and used an LLM to label them. That way, I could focus on being precise and clear about my criteria for what constitutes “red”, “blue” or “neither”. That way I ended up with an artifact that scales beyond my initial set of tools.
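A runtime check for this could be quite small. The sketch below is one possible reading of the idea, not Tim's implementation: the tool names and the TOOL_COLORS table are hypothetical, and it uses the strictest interpretation, refusing any call that would put both colors into the same session.

```python
from dataclasses import dataclass, field

# Hypothetical labels; the post suggests storing colors in each tool's _meta object.
TOOL_COLORS = {
    "fetch_web_page": "red",   # exposes the agent to untrusted text
    "send_payment": "blue",    # critical action
    "get_time": None,          # neither
}


class UnsafeStateError(RuntimeError):
    pass


@dataclass
class ColorGuard:
    seen: set = field(default_factory=set)

    def check(self, tool_name: str) -> None:
        """Raise if calling tool_name would mix red and blue in one session."""
        color = TOOL_COLORS[tool_name]
        if color is None:
            return
        if {color} | self.seen == {"red", "blue"}:
            raise UnsafeStateError(f"{tool_name} would mix red and blue tools")
        self.seen.add(color)


guard = ColorGuard()
guard.check("get_time")
guard.check("fetch_web_page")
try:
    guard.check("send_payment")
    blocked = False
except UnsafeStateError:
    blocked = True
print("blocked:", blocked)
```

A harness would call guard.check() before dispatching each tool call, so the decision stays outside the model.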

Interleaved thinking is essential for LLM agents: it means alternating between explicit reasoning and tool use, while carrying that reasoning forward between steps. This process significantly enhances planning, self‑correction, and reliability in long workflows. [...]

From community feedback, we've often observed failures to preserve prior-round thinking state across multi-turn interactions with M2. The root cause is that the widely-used OpenAI Chat Completion API does not support passing reasoning content back in subsequent requests. Although the Anthropic API natively supports this capability, the community has provided less support for models beyond Claude, and many applications still omit passing back the previous turns' thinking in their Anthropic API implementations. This situation has resulted in poor support for Interleaved Thinking for new models. To fully unlock M2's capabilities, preserving the reasoning process across multi-turn interactions is essential.

— MiniMax, Interleaved Thinking Unlocks Reliable MiniMax-M2 Agentic Capability
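The difference comes down to what a client puts back into the conversation history. This is a simplified sketch using plain dictionaries loosely modeled on Anthropic-style content blocks. The block shapes are abbreviated (real thinking blocks carry extra fields), and the get_weather tool and append_turn helper are hypothetical.

```python
def append_turn(history: list[dict], assistant_blocks: list[dict],
                keep_thinking: bool = True) -> list[dict]:
    """Return a new history with the assistant turn appended.

    With keep_thinking=False the reasoning blocks are dropped, which is the
    failure mode the quote describes.
    """
    blocks = [
        b for b in assistant_blocks
        if keep_thinking or b["type"] != "thinking"
    ]
    return history + [{"role": "assistant", "content": blocks}]


history = [{"role": "user", "content": "What's the weather in Paris?"}]
assistant_blocks = [
    {"type": "thinking", "thinking": "I should call the weather tool."},
    {"type": "tool_use", "id": "call_1", "name": "get_weather",
     "input": {"city": "Paris"}},
]

preserved = append_turn(history, assistant_blocks)
stripped = append_turn(history, assistant_blocks, keep_thinking=False)

print([b["type"] for b in preserved[-1]["content"]])
print([b["type"] for b in stripped[-1]["content"]])
```

A client that sends the stripped version on the next request has thrown away the reasoning the model would otherwise build on.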

How I Use Every Claude Code Feature (via) Useful, detailed guide from Shrivu Shankar, a Claude Code power user. Lots of tips for both individual Claude Code usage and configuring it for larger team projects.

I appreciated Shrivu's take on MCP:

The "Scripting" model (now formalized by Skills) is better, but it needs a secure way to access the environment. This to me is the new, more focused role for MCP.

Instead of a bloated API, an MCP should be a simple, secure gateway that provides a few powerful, high-level tools:

- download_raw_data(filters...)

- take_sensitive_gated_action(args...)

- execute_code_in_environment_with_state(code...)

In this model, MCP's job isn't to abstract reality for the agent; its job is to manage the auth, networking, and security boundaries and then get out of the way.

This makes a lot of sense to me. Most of my MCP usage with coding agents like Claude Code has been replaced by custom shell scripts for it to execute, but there's still a useful role for MCP in helping the agent access secure resources in a controlled way.

Claude Code Can Debug Low-level Cryptography (via) Go cryptography author Filippo Valsorda reports on some very positive results applying Claude Code to the challenge of implementing novel cryptography algorithms. After Claude was able to resolve a "fairly complex low-level bug" in fresh code he tried it against two other examples and got positive results both times.

Filippo isn't directly using Claude's solutions to the bugs, but is finding it useful for tracking down the cause and saving him a solid amount of debugging work:

Three out of three one-shot debugging hits with no help is extremely impressive. Importantly, there is no need to trust the LLM or review its output when its job is just saving me an hour or two by telling me where the bug is, for me to reason about it and fix it.

Using coding agents in this way may represent a useful entrypoint for LLM-skeptics who wouldn't dream of letting an autocomplete-machine write code on their behalf.

Marimo is Joining CoreWeave (via) I don't usually cover startup acquisitions here, but this one feels relevant to several of my interests.

Marimo (previously) provide an open source (Apache 2 licensed) notebook tool for Python, with first-class support for an additional WebAssembly build plus an optional hosted service. It's effectively a reimagining of Jupyter notebooks as a reactive system, where cells automatically update based on changes to other cells - similar to how Observable JavaScript notebooks work.

The first public Marimo release was in January 2024 and the tool has "been in development since 2022" (source).

CoreWeave are a big player in the AI data center space. They started out as an Ethereum mining company in 2017, then pivoted to cloud computing infrastructure for AI companies after the 2018 cryptocurrency crash. They IPOd in March 2025 and today they operate more than 30 data centers worldwide and have announced a number of eye-wateringly sized deals with companies such as Cohere and OpenAI. I found their Wikipedia page very helpful.

They've also been on an acquisition spree this year, including:

- Weights & Biases in March 2025 (deal closed in May), the AI training observability platform.

- OpenPipe in September 2025 - a reinforcement learning platform, authors of the Agent Reinforcement Trainer Apache 2 licensed open source RL framework.

- Monolith AI in October 2025, a UK-based AI model SaaS platform focused on AI for engineering and industrial manufacturing.

- And now Marimo.

Marimo's own announcement emphasizes continued investment in that tool:

Marimo is joining CoreWeave. We’re continuing to build the open-source marimo notebook, while also leveling up molab with serious compute. Our long-term mission remains the same: to build the world’s best open-source programming environment for working with data.

marimo is, and always will be, free, open-source, and permissively licensed.

Given CoreWeave's buying spree only really started this year it's impossible to say how well these acquisitions are likely to play out - they haven't yet established a track record.

Introducing SWE-1.5: Our Fast Agent Model (via) Here's the second fast coding model released by a coding agent IDE in the same day - the first was Composer-1 by Cursor. This time it's Windsurf releasing SWE-1.5:

Today we’re releasing SWE-1.5, the latest in our family of models optimized for software engineering. It is a frontier-size model with hundreds of billions of parameters that achieves near-SOTA coding performance. It also sets a new standard for speed: we partnered with Cerebras to serve it at up to 950 tok/s – 6x faster than Haiku 4.5 and 13x faster than Sonnet 4.5.

Like Composer-1 it's only available via their editor, no separate API yet. Also like Composer-1 they don't appear willing to share details of the "leading open-source base model" they based their new model on.

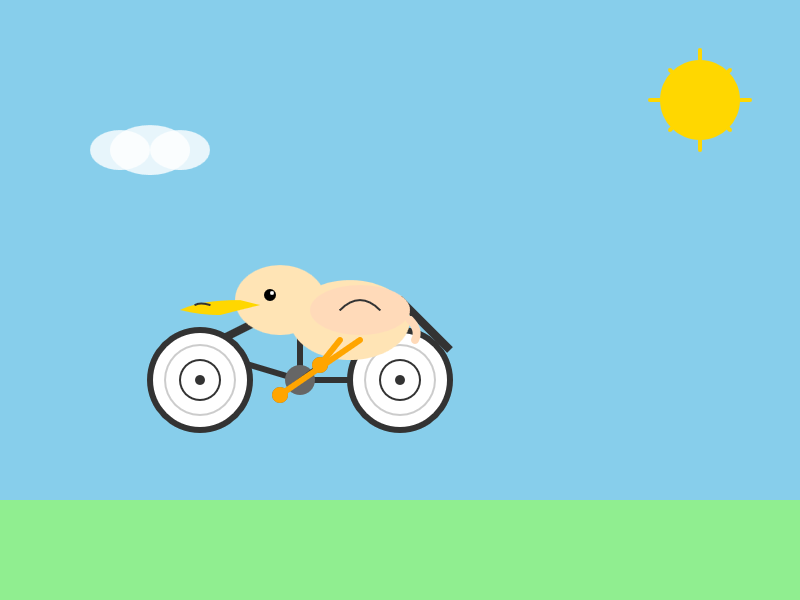

I asked it to generate an SVG of a pelican riding a bicycle and got this:

This one felt really fast. Partnering with Cerebras for inference is a very smart move.

They share a lot of details about their training process in the post:

SWE-1.5 is trained on our state-of-the-art cluster of thousands of GB200 NVL72 chips. We believe SWE-1.5 may be the first public production model trained on the new GB200 generation. [...]

Our RL rollouts require high-fidelity environments with code execution and even web browsing. To achieve this, we leveraged our VM hypervisor otterlink that allows us to scale Devin to tens of thousands of concurrent machines (learn more about blockdiff). This enabled us to smoothly support very high concurrency and ensure the training environment is aligned with our Devin production environments.

That's another similarity to Cursor's Composer-1! Cursor talked about how they ran "hundreds of thousands of concurrent sandboxed coding environments in the cloud" in their description of their RL training as well.

This is a notable trend: if you want to build a really great agentic coding tool there's clearly a lot to be said for using reinforcement learning to fine-tune a model against your own custom set of tools using large numbers of sandboxed simulated coding environments as part of that process.

Update: I think it's built on GLM.

MiniMax M2 & Agent: Ingenious in Simplicity. MiniMax M2 was released on Monday 27th October by MiniMax, a Chinese AI lab founded in December 2021.

It's a very promising model. Their self-reported benchmark scores show it as comparable to Claude Sonnet 4, and Artificial Analysis are ranking it as the best currently available open weight model according to their intelligence score:

MiniMax’s M2 achieves a new all-time-high Intelligence Index score for an open weights model and offers impressive efficiency with only 10B active parameters (200B total). [...]

The model’s strengths include tool use and instruction following (as shown by Tau2 Bench and IFBench). As such, while M2 likely excels at agentic use cases it may underperform other open weights leaders such as DeepSeek V3.2 and Qwen3 235B at some generalist tasks. This is in line with a number of recent open weights model releases from Chinese AI labs which focus on agentic capabilities, likely pointing to a heavy post-training emphasis on RL.

The size is particularly significant: the model weights are 230GB on Hugging Face, significantly smaller than other high performing open weight models. That's small enough to run on a 256GB Mac Studio, and the MLX community have that working already.

MiniMax offer their own API, and recommend using their Anthropic-compatible endpoint and the official Anthropic SDKs to access it. MiniMax Head of Engineering Skyler Miao provided some background on that:

M2 is a agentic thinking model, it do interleaved thinking like sonnet 4.5, which means every response will contain its thought content. Its very important for M2 to keep the chain of thought. So we must make sure the history thought passed back to the model. Anthropic API support it for sure, as sonnet needs it as well. OpenAI only support it in their new Response API, no support for in ChatCompletion.

MiniMax are offering the new model via their API for free until November 7th, after which the cost will be $0.30/million input tokens and $1.20/million output tokens - similar in price to Gemini 2.5 Flash and GPT-5 Mini, see price comparison here on my llm-prices.com site.

I released a new plugin for LLM called llm-minimax providing support for M2 via the MiniMax API:

llm install llm-minimax

llm keys set minimax

# Paste key here

llm -m m2 -o max_tokens 10000 "Generate an SVG of a pelican riding a bicycle"

Here's the result:

51 input, 4,017 output. At $0.30/m input and $1.20/m output that pelican would cost 0.4836 cents - less than half a cent.
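The arithmetic behind that figure, as a small sketch (the function name is mine; the token counts and prices are the ones quoted above):

```python
def cost_in_cents(input_tokens: int, output_tokens: int,
                  input_per_million: float, output_per_million: float) -> float:
    """Cost of one request in US cents, given prices in dollars per million tokens."""
    dollars = (input_tokens * input_per_million
               + output_tokens * output_per_million) / 1_000_000
    return dollars * 100


pelican = cost_in_cents(51, 4017, 0.30, 1.20)
print(f"{pelican:.4f} cents")
```

Almost all of the cost comes from the output tokens: the 51 input tokens contribute about 0.0015 cents.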

This is the first plugin I've written for an Anthropic-API-compatible model. I released llm-anthropic 0.21 first adding the ability to customize the base_url parameter when using that model class. This meant the new plugin was less than 30 lines of Python.

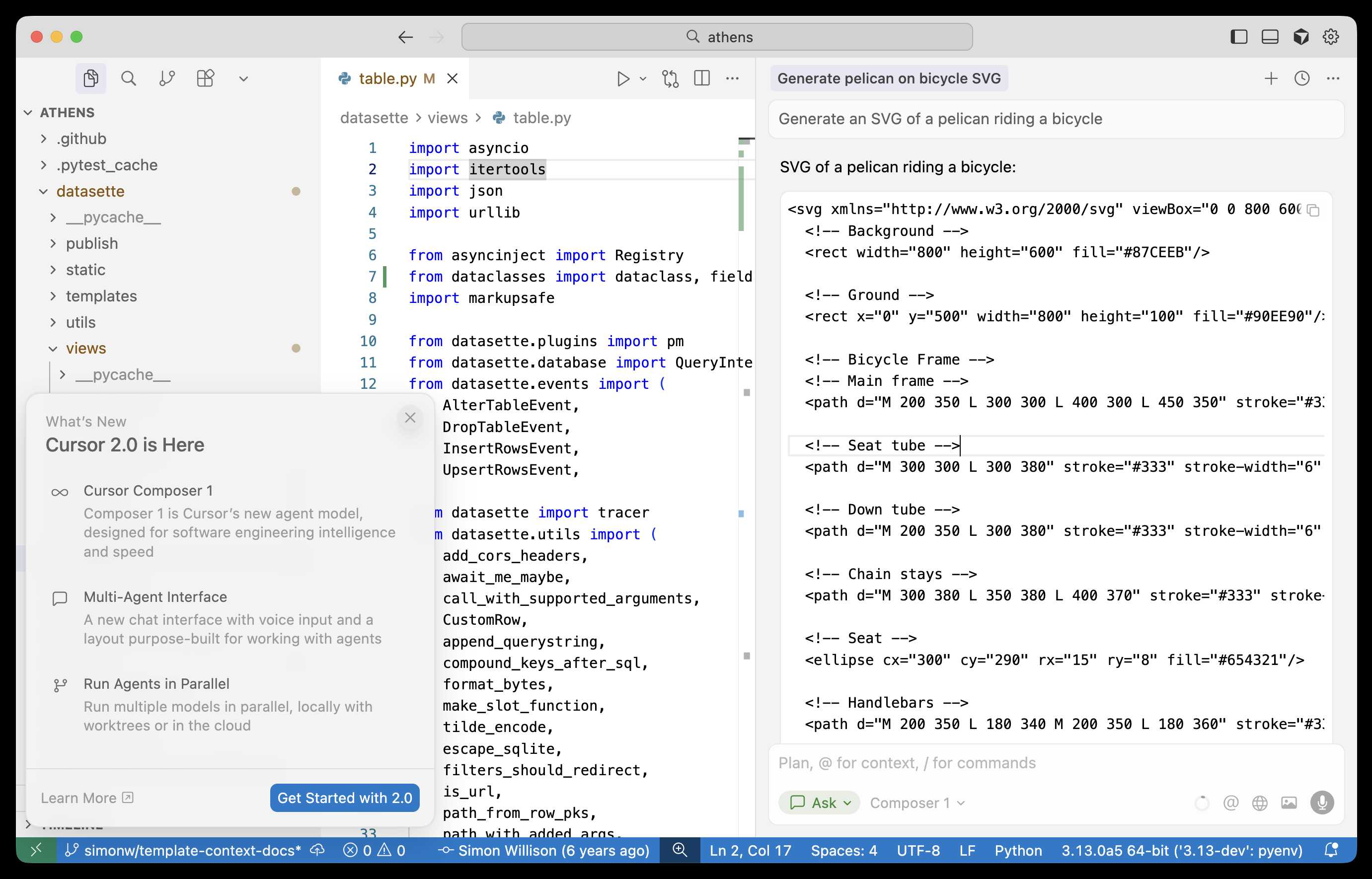

Composer: Building a fast frontier model with RL (via) Cursor released Cursor 2.0 today, with a refreshed UI focused on agentic coding (and running agents in parallel) and a new model that's unique to Cursor called Composer 1.

As far as I can tell there's no way to call the model directly via an API, so I fired up "Ask" mode in Cursor's chat side panel and asked it to "Generate an SVG of a pelican riding a bicycle":

Here's the result:

The notable thing about Composer-1 is that it is designed to be fast. The pelican certainly came back quickly, and in their announcement they describe it as being "4x faster than similarly intelligent models".

It's interesting to see Cursor investing resources in training their own code-specific model - similar to GPT-5-Codex or Qwen3-Coder. From their post:

Composer is a mixture-of-experts (MoE) language model supporting long-context generation and understanding. It is specialized for software engineering through reinforcement learning (RL) in a diverse range of development environments. [...]

Efficient training of large MoE models requires significant investment into building infrastructure and systems research. We built custom training infrastructure leveraging PyTorch and Ray to power asynchronous reinforcement learning at scale. We natively train our models at low precision by combining our MXFP8 MoE kernels with expert parallelism and hybrid sharded data parallelism, allowing us to scale training to thousands of NVIDIA GPUs with minimal communication cost. [...]

During RL, we want our model to be able to call any tool in the Cursor Agent harness. These tools allow editing code, using semantic search, grepping strings, and running terminal commands. At our scale, teaching the model to effectively call these tools requires running hundreds of thousands of concurrent sandboxed coding environments in the cloud.

One detail that's notably absent from their description: did they train the model from scratch, or did they start with an existing open-weights model such as something from Qwen or GLM?

Cursor researcher Sasha Rush has been answering questions on Hacker News, but has so far been evasive in answering questions about the base model. When directly asked "is Composer a fine tune of an existing open source base model?" they replied:

Our primary focus is on RL post-training. We think that is the best way to get the model to be a strong interactive agent.

Sasha did confirm that rumors of an earlier Cursor preview model, Cheetah, being based on a model by xAI's Grok were "Straight up untrue."

Hacking the WiFi-enabled color screen GitHub Universe conference badge

I’m at GitHub Universe this week (thanks to a free ticket from Microsoft). Yesterday I picked up my conference badge... which incorporates a full Raspberry Pi Pico microcontroller with a battery, color screen, WiFi and bluetooth.

Claude doesn't make me much faster on the work that I am an expert on. Maybe 15-20% depending on the day.

It's the work that I don't know how to do and would have to research. Or the grunge work I don't even want to do. On this it is hard to even put a number on. Many of the projects I do with Claude day to day I just wouldn't have done at all pre-Claude.

Infinity% improvement in productivity on those.

GenAI Image Editing Showdown (via) Useful collection of examples by Shaun Pedicini who tested Seedream 4, Gemini 2.5 Flash, Qwen-Image-Edit, FLUX.1 Kontext [dev], FLUX.1 Kontext [max], OmniGen2, and OpenAI gpt-image-1 across 12 image editing prompts.

The tasks are very neatly selected, for example:

Remove all the brown pieces of candy from the glass bowl

Qwen-Image-Edit (a model that can be self-hosted) was the only one to successfully manage that!

This kind of collection is really useful for building up an intuition as to how well image editing models work, and which ones are worth trying for which categories of task.

Shaun has a similar page for text-to-image models which are not fed an initial image to modify, with further challenging prompts like:

Two Prussian soldiers wearing spiked pith helmets are facing each other and playing a game of ring toss by attempting to toss metal rings over the spike on the other soldier's helmet.

Sora might have a ’pervert’ problem on its hands (via) Katie Notopoulos turned on the Sora 2 option where anyone can make a video featuring her cameo, and then:

I found a stranger had made a video where I appeared pregnant. A quick look at the user's profile, and I saw that this person's entire Sora profile was made up of this genre — video after video of women with big, pregnant bellies. I recognized immediately what this was: fetish content.

This feels like an intractable problem to me: given the enormous array of fetishes it's hard to imagine a classifier that could protect people from having their likeness used in this way.

Best to be aware of this risk before turning on any settings that allow strangers to reuse your image... and that's only an option for tools that implement a robust opt-in mechanism like Sora does.

Someone on Hacker News asked for tips on setting up a codebase to be more productive with AI coding tools. Here's my reply:

- Good automated tests which the coding agent can run. I love pytest for this - one of my projects has 1500 tests and Claude Code is really good at selectively executing just tests relevant to the change it is making, and then running the whole suite at the end.

- Give them the ability to interactively test the code they are writing too. Notes on how to start a development server (for web projects) are useful, then you can have them use Playwright or curl to try things out.

- I'm having great results from maintaining a GitHub issues collection for projects and pasting URLs to issues directly into Claude Code.

- I actually don't think documentation is too important: LLMs can read the code a lot faster than you to figure out how to use it. I have comprehensive documentation across all of my projects but I don't think it's that helpful for the coding agents, though they are good at helping me spot if it needs updating.

- Linters, type checkers, auto-formatters - give coding agents helpful tools to run and they'll use them.

For the most part anything that makes a codebase easier for humans to maintain turns out to help agents as well.

Update: Thought of another one: detailed error messages! If a manual or automated test fails the more information you can return back to the model the better, and stuffing extra data in the error message or assertion is a very inexpensive way to do that.
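As an illustration of that last point, here is a small sketch of a test that reports every failing input with the actual and expected values. parse_price is a toy function invented for the example. Under pytest you would normally just write the test function and let the runner call it.

```python
def parse_price(text: str) -> float:
    """Toy function under test (hypothetical): parse a price like "$1.20"."""
    return float(text.strip().lstrip("$"))


def check_prices(cases: dict) -> list:
    """Return a list of detailed failure messages, empty if all cases pass."""
    failures = []
    for raw, expected in cases.items():
        actual = parse_price(raw)
        if actual != expected:
            failures.append(
                f"parse_price({raw!r}) returned {actual!r}, expected {expected!r}"
            )
    return failures


def test_parse_price():
    failures = check_prices({"$0.30": 0.30, " $1.20 ": 1.20})
    # The message lists every failing input, not just "assert False".
    assert not failures, "\n".join(failures)


test_parse_price()
```

When this fails, the agent sees which input broke and what came back, which is usually enough for it to go straight to the fix.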

If you have an AGENTS.md file, you can source it in your CLAUDE.md using @AGENTS.md to maintain a single source of truth.

— Claude Docs, with the official answer to standardizing on AGENTS.md

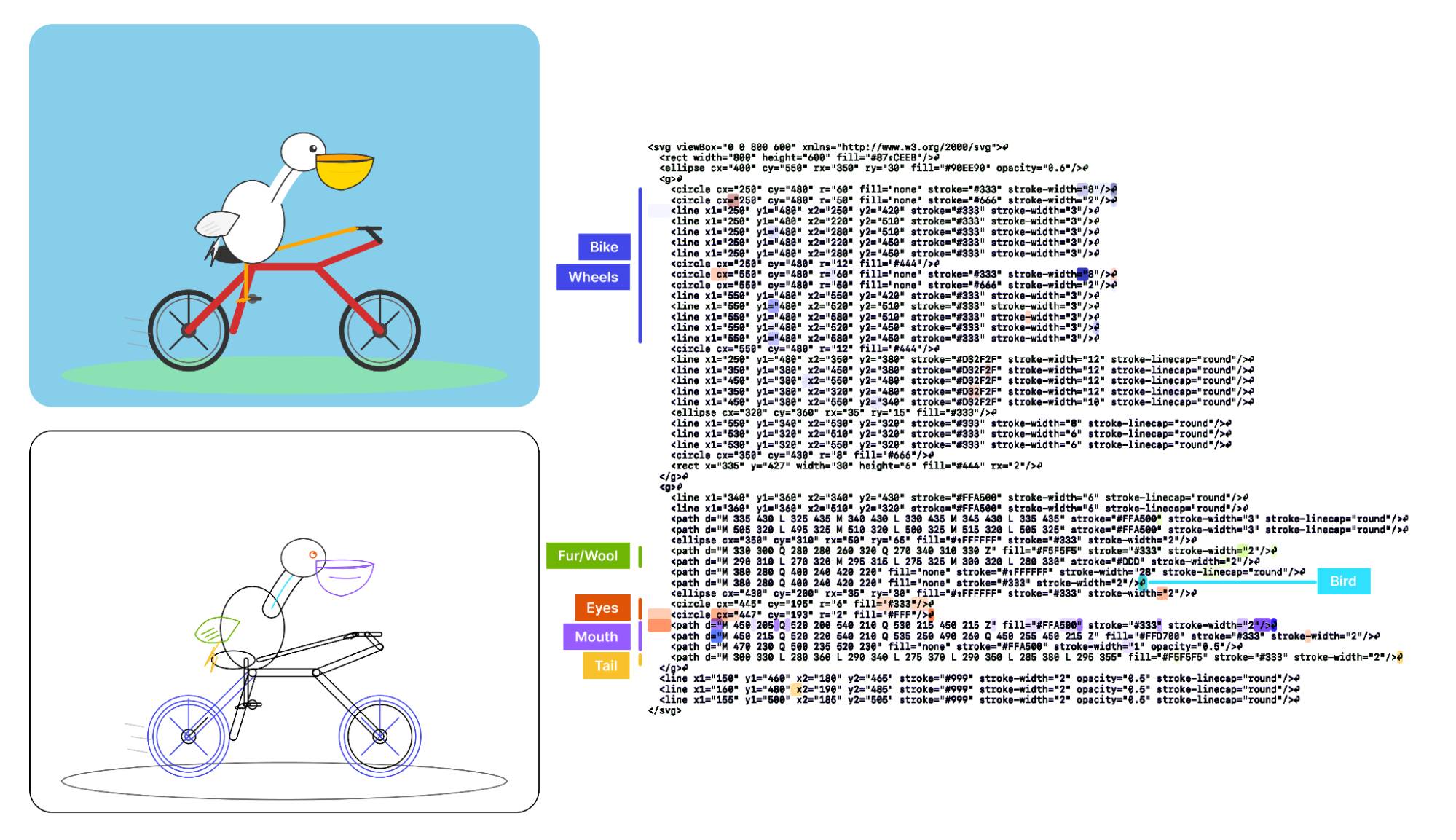

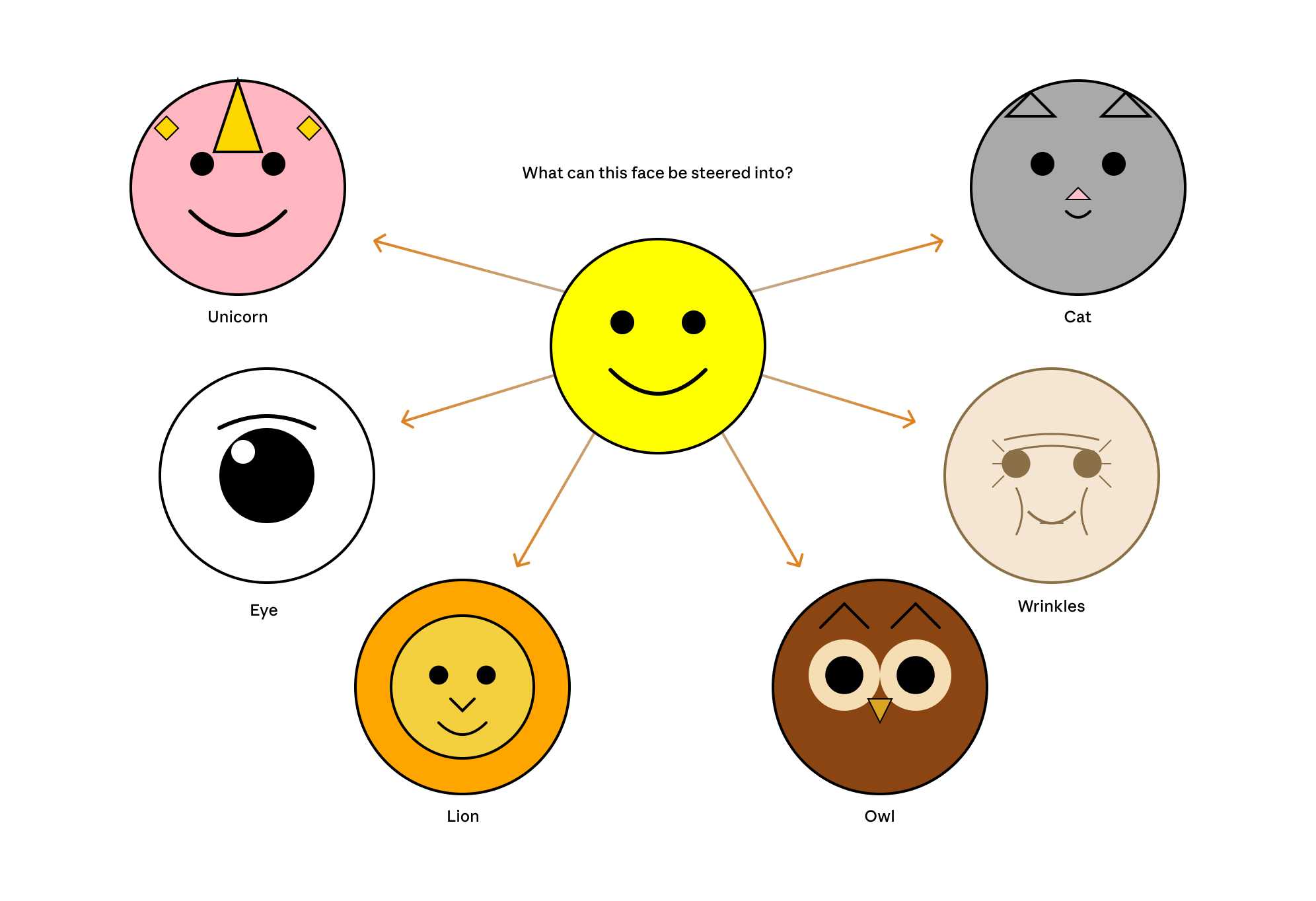

Visual Features Across Modalities: SVG and ASCII Art Reveal Cross-Modal Understanding (via) New model interpretability research from Anthropic, this time focused on SVG and ASCII art generation.

We found that the same feature that activates over the eyes in an ASCII face also activates for eyes across diverse text-based modalities, including SVG code and prose in various languages. This is not limited to eyes – we found a number of cross-modal features that recognize specific concepts: from small components like mouths and ears within ASCII or SVG faces, to full visual depictions like dogs and cats. [...]

These features depend on the surrounding context within the visual depiction. For instance, an SVG circle element activates “eye” features only when positioned within a larger structure that activates “face” features.

And really, I can't not link to this one given the bonus they tagged on at the end!

As a bonus, we also inspected features for an SVG of a pelican riding a bicycle, first popularized by Simon Willison as a way to test a model's artistic capabilities. We find features representing concepts including "bike", "wheels", "feet", "tail", "eyes", and "mouth" activating over the corresponding parts of the SVG code.

Now that they can identify model features associated with visual concepts in SVG images, can they use those for steering?

It turns out they can! Starting with a smiley SVG (provided as XML with no indication as to what it was drawing) and then applying a negative score to the "smile" feature produced a frown instead, and worked against ASCII art as well.

They could also boost features like unicorn, cat, owl, or lion and get new SVG smileys clearly attempting to depict those creatures.

I'd love to see how this behaves if you jack up the feature for the Golden Gate Bridge.

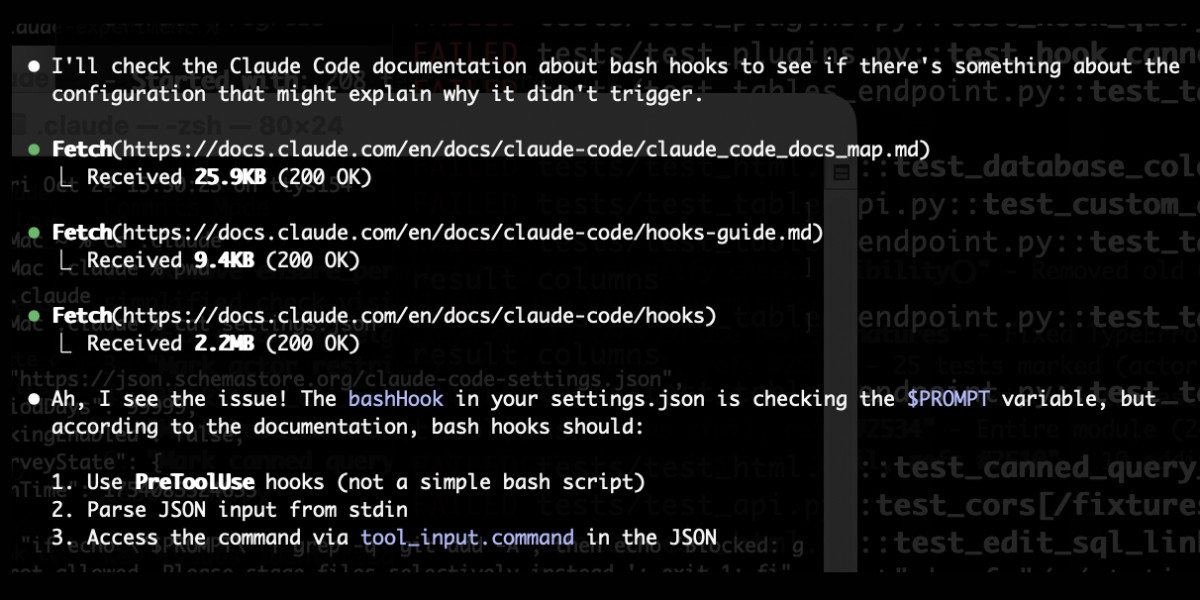

claude_code_docs_map.md. Something I'm enjoying about Claude Code is that any time you ask it questions about itself it runs tool calls like these:

In this case I'd asked it about its "hooks" feature.

The claude_code_docs_map.md file is a neat Markdown index of all of their other documentation - the same pattern advocated by llms.txt. Claude Code can then fetch further documentation to help it answer your question.

I intercepted the current Claude Code system prompt using this trick and sure enough it included a note about this URL:

When the user directly asks about Claude Code (eg. "can Claude Code do...", "does Claude Code have..."), or asks in second person (eg. "are you able...", "can you do..."), or asks how to use a specific Claude Code feature (eg. implement a hook, or write a slash command), use the WebFetch tool to gather information to answer the question from Claude Code docs. The list of available docs is available at https://docs.claude.com/en/docs/claude-code/claude_code_docs_map.md.

I wish other LLM products - including both ChatGPT and Claude.ai themselves - would implement a similar pattern. It's infuriating how bad LLM tools are at answering questions about themselves, though unsurprising given that their model's training data pre-dates the latest version of those tools.
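For anyone tempted to copy the pattern, the lookup side is simple. This sketch assumes the index is a Markdown list of links; the DOCS_MAP content and example.com URLs are made up, and it does not fetch anything or reflect how Claude Code itself processes the file.

```python
import re

# Hypothetical index in the style of a docs map: a Markdown list of links.
DOCS_MAP = """\
# Docs map
- [Hooks](https://example.com/docs/hooks.md)
- [Slash commands](https://example.com/docs/slash-commands.md)
- [Settings](https://example.com/docs/settings.md)
"""

LINK_RE = re.compile(r"\[([^\]]+)\]\(([^)]+)\)")


def find_docs(index_markdown: str, query: str) -> list[str]:
    """Return URLs whose link title mentions the query, case-insensitively."""
    return [
        url for title, url in LINK_RE.findall(index_markdown)
        if query.lower() in title.lower()
    ]


print(find_docs(DOCS_MAP, "hooks"))
```

In practice the model does the matching itself after reading the index. The point is that one small, current file is enough to route it to the right page.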