4 posts tagged “anthony-shaw”

2025

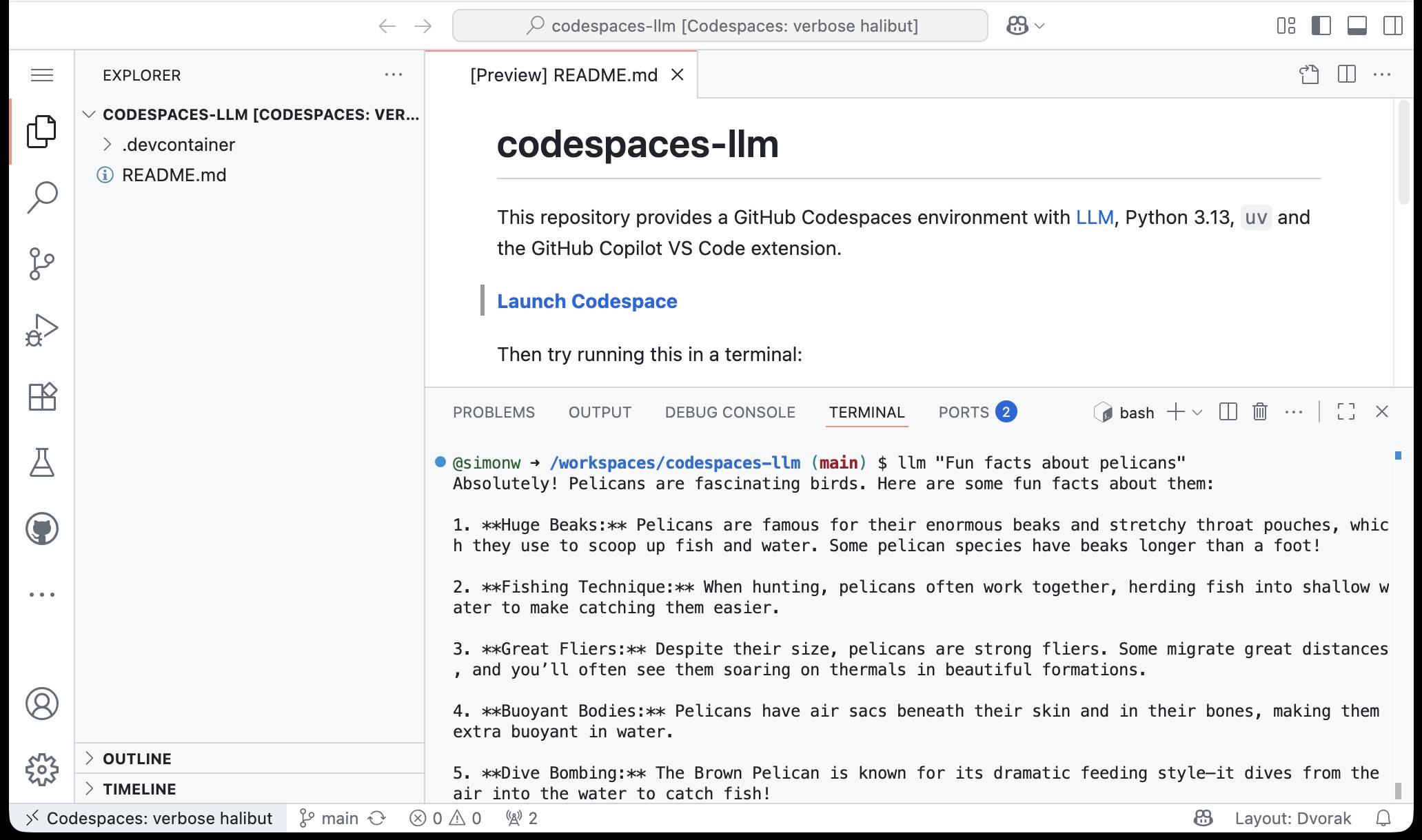

simonw/codespaces-llm. GitHub Codespaces provides full development environments in your browser, and is free to use for anyone with a GitHub account. Each environment has a full Linux container and a browser-based UI using VS Code.

I found out today that GitHub Codespaces come with a GITHUB_TOKEN environment variable... and that token works as an API key for accessing LLMs in the GitHub Models collection, which includes dozens of models from OpenAI, Microsoft, Mistral, xAI, DeepSeek, Meta and more.

Anthony Shaw's llm-github-models plugin for my LLM tool allows it to talk directly to GitHub Models. I filed a suggestion that it could pick up that GITHUB_TOKEN variable automatically and Anthony shipped v0.18.0 with that feature a few hours later.

... which means you can now run the following in any Python-enabled Codespaces container and get a working llm command:

pip install llm

llm install llm-github-models

llm models default github/gpt-4.1

llm "Fun facts about pelicans"

Setting the default model to github/gpt-4.1 means you get free (albeit rate-limited) access to that OpenAI model.
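The automatic pickup of GITHUB_TOKEN described above can be sketched roughly like this. This is a minimal illustration of the fallback idea, not the plugin's actual code: the function name `resolve_github_key` and its parameters are hypothetical, and the only detail taken from the text is the `GITHUB_TOKEN` variable name.

```python
import os


def resolve_github_key(explicit_key=None, environ=None):
    """Return an API key, preferring an explicit value over the environment.

    Hypothetical helper for illustration only; the real plugin may resolve
    keys differently.
    """
    # Allow a dict to be injected so the lookup can be exercised in tests.
    env = os.environ if environ is None else environ
    if explicit_key:
        return explicit_key
    token = env.get("GITHUB_TOKEN")
    if token:
        return token
    raise RuntimeError("No key supplied and GITHUB_TOKEN is not set")
```

Inside a Codespaces container the second branch would be the one that fires, which is why no separate key setup step appears in the commands above.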

To save you from needing to even run that sequence of commands I've created a new GitHub repository, simonw/codespaces-llm, which pre-installs and runs those commands for you.

Anyone with a GitHub account can use this URL to launch a new Codespaces instance with a configured llm terminal command ready to use:

codespaces.new/simonw/codespaces-llm?quickstart=1

While putting this together I wrote up what I've learned about devcontainers so far as a TIL: Configuring GitHub Codespaces using devcontainers.

llm-github-models 0.15. Anthony Shaw's llm-github-models plugin just got an upgrade: it now supports LLM 0.26 tool use for a subset of the models hosted on the GitHub Models API, contributed by Caleb Brose.

The neat thing about this GitHub Models plugin is that it picks up an API key from your GITHUB_TOKEN - and if you're running LLM within a GitHub Actions worker the API key provided by the worker should be enough to start executing prompts!

I tried it out against Cohere Command A via GitHub Models like this (transcript here):

llm install llm-github-models

llm keys set github

# Paste key here

llm -m github/cohere-command-a -T llm_time 'What time is it?' --td

We now have seven LLM plugins that provide tool support, covering OpenAI, Anthropic, Gemini, Mistral, Ollama, llama-server and now GitHub Models.

I had some notes in a GitHub issue thread in a private repository that I wanted to export as Markdown. I realized that I could get them using a combination of several recent projects.

Here's what I ran:

export GITHUB_TOKEN="$(llm keys get github)"

llm -f issue:https://github.com/simonw/todos/issues/170 \
  -m echo --no-log | jq .prompt -r > notes.md

I have a GitHub personal access token stored in my LLM keys, for use with Anthony Shaw's llm-github-models plugin.

My own llm-fragments-github plugin expects an optional GITHUB_TOKEN environment variable, so I set that first - here's an issue to have it use the github key instead.

With that set, the issue: fragment loader can take a URL to a private GitHub issue thread and load it via the API using the token, then concatenate the comments together as Markdown. Here's the code for that.
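As a rough sketch of the "concatenate the comments together as Markdown" step, assuming the issue and its comments have already been fetched as dictionaries shaped like the GitHub REST API responses (`title`, `body`, `user.login`). The function name `issue_to_markdown` and the exact Markdown layout are assumptions for illustration, not the plugin's real output format.

```python
def issue_to_markdown(issue, comments):
    """Join an issue body and its comments into one Markdown document.

    Hypothetical helper; the heading and separator layout is an assumption.
    """
    parts = [
        f"# {issue['title']}",
        "",
        f"*Posted by @{issue['user']['login']}*",
        "",
        issue.get("body") or "",  # body can be null for empty issues
    ]
    for comment in comments:
        parts += [
            "",
            "---",
            "",
            f"### Comment by @{comment['user']['login']}",
            "",
            comment.get("body") or "",
        ]
    return "\n".join(parts)
```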

Fragments are meant to be used as input to LLMs. I built an llm-echo plugin recently which adds a fake LLM called "echo" which simply echoes its input back out again.

Adding --no-log prevents that junk data from being stored in my LLM log database.

The output is JSON with a "prompt" key holding the original prompt. I use jq .prompt to extract it, then -r to get it as raw text (not a "JSON string").

... and I write the result to notes.md.
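For anyone without jq installed, the extraction step can be approximated in Python. This is a small sketch that assumes only what is stated above, namely that the echo output is a JSON object with a "prompt" key; the function name `extract_prompt` is made up for the example.

```python
import json


def extract_prompt(echo_output: str) -> str:
    """Return the raw prompt text, like `jq .prompt -r` does for this output."""
    # json.loads decodes escapes such as \n, which is what jq's -r flag
    # achieves by printing the string without JSON quoting.
    return json.loads(echo_output)["prompt"]
```

It could be wired up as a script that reads standard input and writes the result to notes.md, in place of the jq stage of the pipeline.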

2024

Python 3.13 gets a JIT. “In late December 2023 (Christmas Day to be precise), CPython core developer Brandt Bucher submitted a little pull-request to the Python 3.13 branch adding a JIT compiler.”

Anthony Shaw does a deep dive into this new experimental JIT, explaining how it differs from other JITs. It’s an implementation of a copy-and-patch JIT, an idea that only emerged in 2021. This makes it architecturally much simpler than a traditional JIT, allowing it to compile faster and take advantage of existing LLVM tools on different architectures.

So far it’s providing a 2-9% performance improvement, but the real impact will be from the many future optimizations it enables.
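To make the copy-and-patch idea more concrete, here is a toy sketch. It is not how CPython implements it: the real stencils are machine code produced at build time with LLVM, while everything below (the opcodes, the hole marker, the byte layout) is invented for illustration. What it does share with the real technique is the shape: each instruction has a pre-built template with a hole, and compilation just copies the template and patches the hole with the operand.

```python
import struct

# Made-up 4-byte placeholder marking where an operand gets patched in.
HOLE = b"\xde\xad\xbe\xef"

# Hypothetical opcodes for a toy stack machine.
OP_PUSH, OP_ADD, OP_MUL = 0x01, 0x02, 0x03

# One pre-built "stencil" per instruction; only LOAD_CONST has a hole.
STENCILS = {
    "LOAD_CONST": bytes([OP_PUSH]) + HOLE,
    "BINARY_ADD": bytes([OP_ADD]),
    "BINARY_MULTIPLY": bytes([OP_MUL]),
}


def compile_program(instructions):
    """Copy each instruction's stencil and patch its hole with the operand."""
    code = bytearray()
    for name, operand in instructions:
        stencil = bytearray(STENCILS[name])  # copy
        hole_at = stencil.find(HOLE)
        if hole_at != -1:
            # patch: overwrite the placeholder with a little-endian int32
            stencil[hole_at:hole_at + 4] = struct.pack("<i", operand)
        code += stencil
    return bytes(code)


def run(code):
    """Execute the patched toy code and return the top of the stack."""
    stack, pc = [], 0
    while pc < len(code):
        op = code[pc]
        pc += 1
        if op == OP_PUSH:
            (value,) = struct.unpack_from("<i", code, pc)
            stack.append(value)
            pc += 4
        elif op == OP_ADD:
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == OP_MUL:
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown opcode {op:#x}")
    return stack.pop()


# (2 + 3) * 4
program = [
    ("LOAD_CONST", 2),
    ("LOAD_CONST", 3),
    ("BINARY_ADD", None),
    ("LOAD_CONST", 4),
    ("BINARY_MULTIPLY", None),
]
print(run(compile_program(program)))  # prints 20
```

The compile step contains no per-instruction code generation logic, only a lookup, a copy and a patch, which is the simplicity the article credits for fast compilation.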