146 posts tagged “local-llms”

LLMs that can run on consumer hardware like laptops or mobile phones.

2026

ggml.ai joins Hugging Face to ensure the long-term progress of Local AI (via) I don't normally cover acquisition news like this, but I have some thoughts.

It's hard to overstate the impact Georgi Gerganov has had on the local model space. Back in March 2023 his release of llama.cpp made it possible to run a local LLM on consumer hardware. The original README said:

The main goal is to run the model using 4-bit quantization on a MacBook. [...] This was hacked in an evening - I have no idea if it works correctly.

I wrote about trying llama.cpp out at the time in Large language models are having their Stable Diffusion moment:

I used it to run the 7B LLaMA model on my laptop last night, and then this morning upgraded to the 13B model—the one that Facebook claim is competitive with GPT-3.

Meta's original LLaMA release depended on PyTorch and their FairScale PyTorch extension for running on multiple GPUs, and required CUDA and NVIDIA hardware. Georgi's work opened that up to a much wider range of hardware and kicked off the local model movement that has continued to grow since then.

Hugging Face are already responsible for the incredibly influential Transformers library used by the majority of LLM releases today. They've proven themselves a good steward for that open source project, which makes me optimistic for the future of llama.cpp and related projects.

This section from the announcement looks particularly promising:

Going forward, our joint efforts will be geared towards the following objectives:

- Towards seamless "single-click" integration with the transformers library. The transformers framework has established itself as the 'source of truth' for AI model definitions. Improving the compatibility between the transformers and the ggml ecosystems is essential for wider model support and quality control.

- Better packaging and user experience of ggml-based software. As we enter the phase in which local inference becomes a meaningful and competitive alternative to cloud inference, it is crucial to improve and simplify the way in which casual users deploy and access local models. We will work towards making llama.cpp ubiquitous and readily available everywhere, and continue partnering with great downstream projects.

Given the influence of Transformers, this closer integration could lead to model releases that are compatible with the GGML ecosystem out of the box. That would be a big win for the local model ecosystem.

I'm also excited to see investment in "packaging and user experience of ggml-based software". This has mostly been left to tools like Ollama and LM Studio. ggml-org released LlamaBarn last year - "a macOS menu bar app for running local LLMs" - and I'm hopeful that further investment in this area will result in more high quality open source tools for running local models from the team best placed to deliver them.

2025

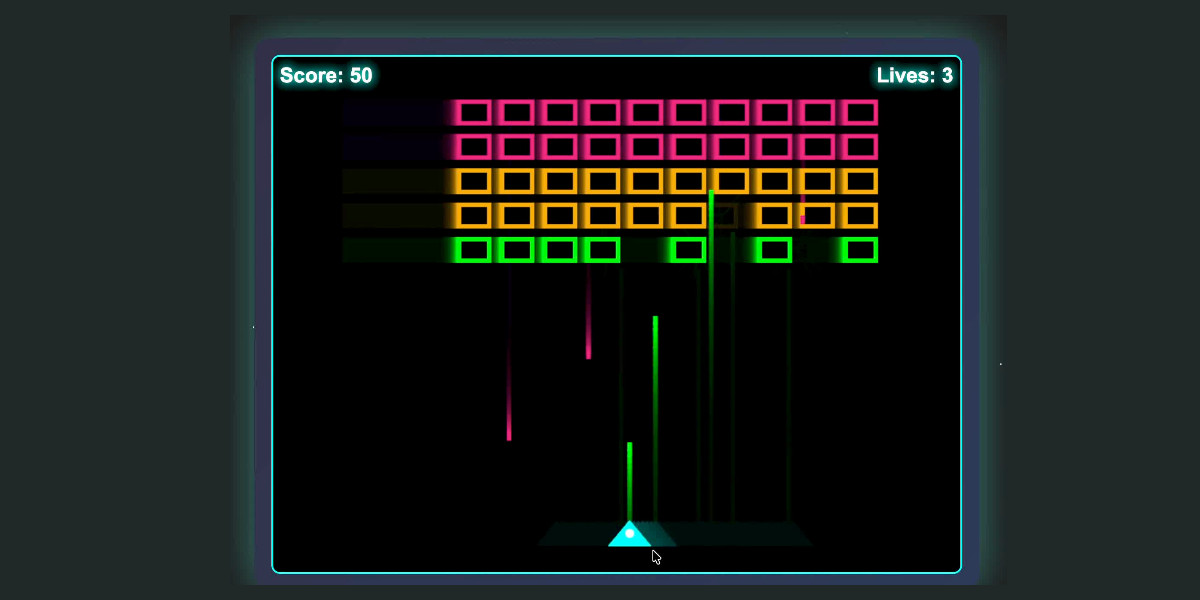

Using Codex CLI with gpt-oss:120b on an NVIDIA DGX Spark via Tailscale. Inspired by a YouTube comment I wrote up how I run OpenAI's Codex CLI coding agent against the gpt-oss:120b model running in Ollama on my NVIDIA DGX Spark via a Tailscale network.

It takes a little bit of work to configure but the result is I can now use Codex CLI on my laptop anywhere in the world against a self-hosted model.

I used it to build this space invaders clone.

MiniMax M2 & Agent: Ingenious in Simplicity. MiniMax M2 was released on Monday 27th October by MiniMax, a Chinese AI lab founded in December 2021.

It's a very promising model. Their self-reported benchmark scores show it as comparable to Claude Sonnet 4, and Artificial Analysis are ranking it as the best currently available open weight model according to their intelligence score:

MiniMax’s M2 achieves a new all-time-high Intelligence Index score for an open weights model and offers impressive efficiency with only 10B active parameters (200B total). [...]

The model’s strengths include tool use and instruction following (as shown by Tau2 Bench and IFBench). As such, while M2 likely excels at agentic use cases it may underperform other open weights leaders such as DeepSeek V3.2 and Qwen3 235B at some generalist tasks. This is in line with a number of recent open weights model releases from Chinese AI labs which focus on agentic capabilities, likely pointing to a heavy post-training emphasis on RL.

The size is particularly significant: the model weights are 230GB on Hugging Face, significantly smaller than other high performing open weight models. That's small enough to run on a 256GB Mac Studio, and the MLX community have that working already.

MiniMax offer their own API, and recommend using their Anthropic-compatible endpoint and the official Anthropic SDKs to access it. MiniMax Head of Engineering Skyler Miao provided some background on that:

M2 is a agentic thinking model, it do interleaved thinking like sonnet 4.5, which means every response will contain its thought content. Its very important for M2 to keep the chain of thought. So we must make sure the history thought passed back to the model. Anthropic API support it for sure, as sonnet needs it as well. OpenAI only support it in their new Response API, no support for in ChatCompletion.

MiniMax are offering the new model via their API for free until November 7th, after which the cost will be $0.30/million input tokens and $1.20/million output tokens - similar in price to Gemini 2.5 Flash and GPT-5 Mini, see price comparison here on my llm-prices.com site.

I released a new plugin for LLM called llm-minimax providing support for M2 via the MiniMax API:

llm install llm-minimax

llm keys set minimax

# Paste key here

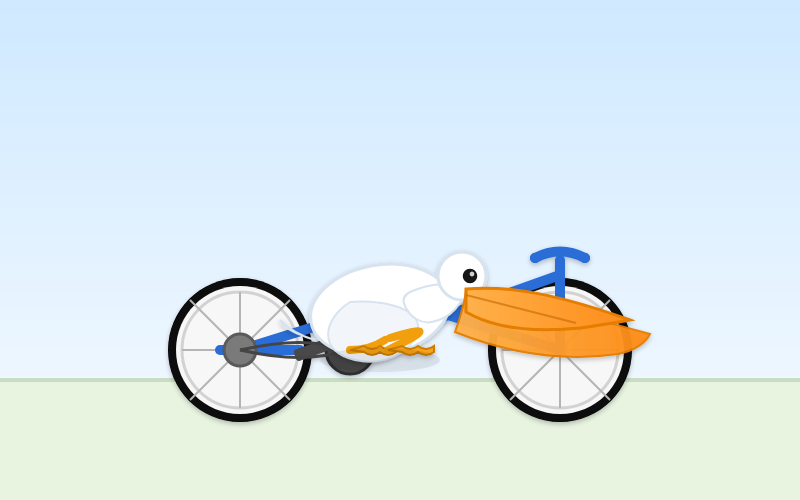

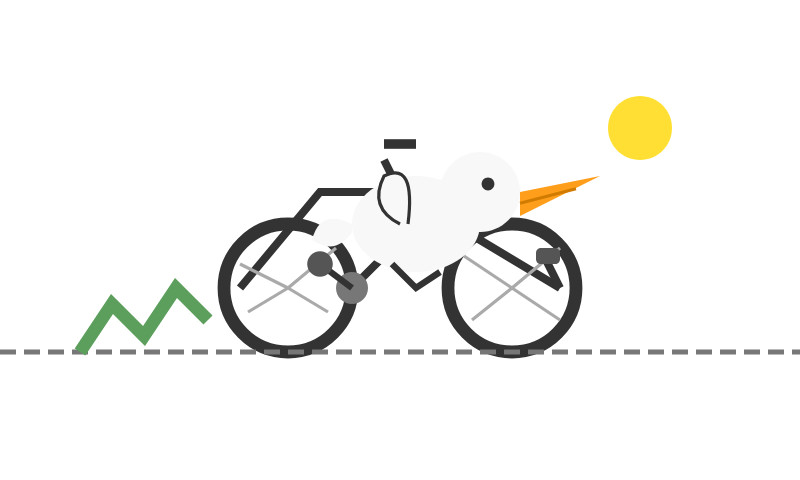

llm -m m2 -o max_tokens 10000 "Generate an SVG of a pelican riding a bicycle"

Here's the result:

51 input, 4,017 output. At $0.30/m input and $1.20/m output that pelican would cost 0.4836 cents - less than half a cent.
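The arithmetic behind that figure can be sketched in a few lines of Python. This is a minimal illustration using only the token counts and per-million prices quoted above; the function and constant names are made up for this example and are not part of LLM or the MiniMax API.

```python
# Prices in US dollars per million tokens, as quoted above.
INPUT_PRICE_PER_MILLION = 0.30
OUTPUT_PRICE_PER_MILLION = 1.20


def cost_in_cents(input_tokens: int, output_tokens: int) -> float:
    """Return the cost of a single prompt in US cents."""
    dollars = (
        input_tokens * INPUT_PRICE_PER_MILLION
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    ) / 1_000_000
    return dollars * 100


# Token counts reported for the pelican prompt.
pelican_cents = cost_in_cents(input_tokens=51, output_tokens=4_017)
print(f"{pelican_cents:.4f} cents")  # prints "0.4836 cents"
```

Almost all of the cost comes from the output tokens: the 51 input tokens contribute well under a thousandth of a cent.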

This is the first plugin I've written for an Anthropic-API-compatible model. I released llm-anthropic 0.21 first adding the ability to customize the base_url parameter when using that model class. This meant the new plugin was less than 30 lines of Python.

NVIDIA DGX Spark + Apple Mac Studio = 4x Faster LLM Inference with EXO 1.0 (via) EXO Labs wired a 256GB M3 Ultra Mac Studio up to an NVIDIA DGX Spark and got a 2.8x performance boost serving Llama-3.1 8B (FP16) with an 8,192 token prompt.

Their detailed explanation taught me a lot about LLM performance.

There are two key steps in executing a prompt. The first is the prefill phase that reads the incoming prompt and builds a KV cache for each of the transformer layers in the model. This is compute-bound as it needs to process every token in the input and perform large matrix multiplications across all of the layers to initialize the model's internal state.

Performance in the prefill stage influences TTFT - time‑to‑first‑token.

The second step is the decode phase, which generates the output one token at a time. This part is limited by memory bandwidth - there's less arithmetic, but each token needs to consider the entire KV cache.

Decode performance influences TPS - tokens per second.

EXO noted that the Spark has 100 TFLOPS but only 273GB/s of memory bandwidth, making it a better fit for prefill. The M3 Ultra has 26 TFLOPS but 819GB/s of memory bandwidth, making it ideal for the decode phase.

They run prefill on the Spark, streaming the KV cache to the Mac over 10Gb Ethernet. They can start streaming earlier layers while the later layers are still being calculated. Then the Mac runs the decode phase, returning tokens faster than if the Spark had run the full process end-to-end.
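A back-of-envelope sketch helps show why that split makes sense. The TFLOPS and bandwidth figures below are the ones EXO quoted; everything else is my own simplifying assumption rather than anything from their write-up: roughly 2 FLOPs per parameter per prompt token for prefill, every FP16 weight read once per generated token for decode, perfect hardware utilization, and no accounting for KV cache reads or network transfer time.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    tflops: float          # peak compute in TFLOPS, as quoted by EXO
    bandwidth_gb_s: float  # memory bandwidth in GB/s, as quoted by EXO


# Assumptions for illustration only (not from EXO's article).
PARAMS = 8e9           # Llama-3.1 8B, rounded
BYTES_PER_PARAM = 2    # FP16 weights
PROMPT_TOKENS = 8_192


def prefill_seconds(device: Device) -> float:
    """Idealized compute-bound prefill time: ~2 FLOPs per parameter per token."""
    flops = 2 * PARAMS * PROMPT_TOKENS
    return flops / (device.tflops * 1e12)


def decode_tokens_per_second(device: Device) -> float:
    """Idealized bandwidth-bound decode rate: all weights read once per token."""
    weight_bytes = PARAMS * BYTES_PER_PARAM
    return device.bandwidth_gb_s * 1e9 / weight_bytes


spark = Device("DGX Spark", tflops=100, bandwidth_gb_s=273)
mac = Device("M3 Ultra Mac Studio", tflops=26, bandwidth_gb_s=819)

for device in (spark, mac):
    print(
        f"{device.name}: prefill ~{prefill_seconds(device):.1f}s, "
        f"decode ~{decode_tokens_per_second(device):.0f} tokens/s (upper bound)"
    )
```

Under these idealized assumptions the Spark finishes prefill several times faster than the Mac, while the Mac's decode ceiling is three times the Spark's. Real numbers will be lower on both sides, but the direction matches EXO's decision to pair the two machines.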

NVIDIA DGX Spark: great hardware, early days for the ecosystem

NVIDIA sent me a preview unit of their new DGX Spark desktop “AI supercomputer”. I’ve never had hardware to review before! You can consider this my first ever sponsored post if you like, but they did not pay me any cash and aside from an embargo date they did not request (nor would I grant) any editorial input into what I write about the device.

[... 1,846 words]

Video of GPT-OSS 20B running on a phone. GPT-OSS 20B is a very good model. At launch OpenAI claimed:

The gpt-oss-20b model delivers similar results to OpenAI o3‑mini on common benchmarks and can run on edge devices with just 16 GB of memory

Nexa AI just posted a video on Twitter demonstrating exactly that: the full GPT-OSS 20B running on a Snapdragon Gen 5 phone in their Nexa Studio Android app. It requires at least 16GB of RAM, and benefits from Snapdragon using a similar trick to Apple Silicon where the system RAM is available to both the CPU and the GPU.

The latest iPhone 17 Pro Max is still stuck at 12GB of RAM, presumably not enough to run this same model.

Locally AI. Handy new iOS app by Adrien Grondin for running local LLMs on your phone. It just added support for the new iOS 26 Apple Foundation model, so you can install this app and instantly start a conversation with that model without any additional download.

The app can also run a variety of other models using MLX, including members of the Gemma, Llama 3.2, and Qwen families.

Load Llama-3.2 WebGPU in your browser from a local folder (via) Inspired by a comment on Hacker News I decided to see if it was possible to modify the transformers.js-examples/tree/main/llama-3.2-webgpu Llama 3.2 chat demo (online here, I wrote about it last November) to add an option to open a local model file directly from a folder on disk, rather than waiting for it to download over the network.

I posed the problem to OpenAI's GPT-5-enabled Codex CLI like this:

git clone https://github.com/huggingface/transformers.js-examples

cd transformers.js-examples/llama-3.2-webgpu

codex

Then this prompt:

Modify this application such that it offers the user a file browse button for selecting their own local copy of the model file instead of loading it over the network. Provide a "download model" option too.

Codex churned away for several minutes, even running commands like curl -sL https://raw.githubusercontent.com/huggingface/transformers.js/main/src/models.js | sed -n '1,200p' to inspect the source code of the underlying Transformers.js library.

After four prompts total (shown here) it built something which worked!

To try it out you'll need your own local copy of the Llama 3.2 ONNX model. You can get that (a ~1.2GB download) like so:

git lfs install

git clone https://huggingface.co/onnx-community/Llama-3.2-1B-Instruct-q4f16

Then visit my llama-3.2-webgpu page in Chrome or Firefox Nightly (since WebGPU is required), click "Browse folder", select that folder you just cloned, agree to the "Upload" confirmation (confusing since nothing is uploaded from your browser, the model file is opened locally on your machine) and click "Load local model".

Here's an animated demo (recorded in real-time, I didn't speed this up):

I pushed a branch with those changes here. The next step would be to modify this to support other models in addition to the Llama 3.2 demo, but I'm pleased to have got to this proof of concept with so little work beyond throwing some prompts at Codex to see if it could figure it out.

According to the Codex /status command this used 169,818 input tokens, 17,112 output tokens and 1,176,320 cached input tokens. At current GPT-5 token pricing ($1.25/million input, $0.125/million cached input, $10/million output) that would cost 53.942 cents, but Codex CLI hooks into my existing $20/month ChatGPT Plus plan so this was bundled into that.

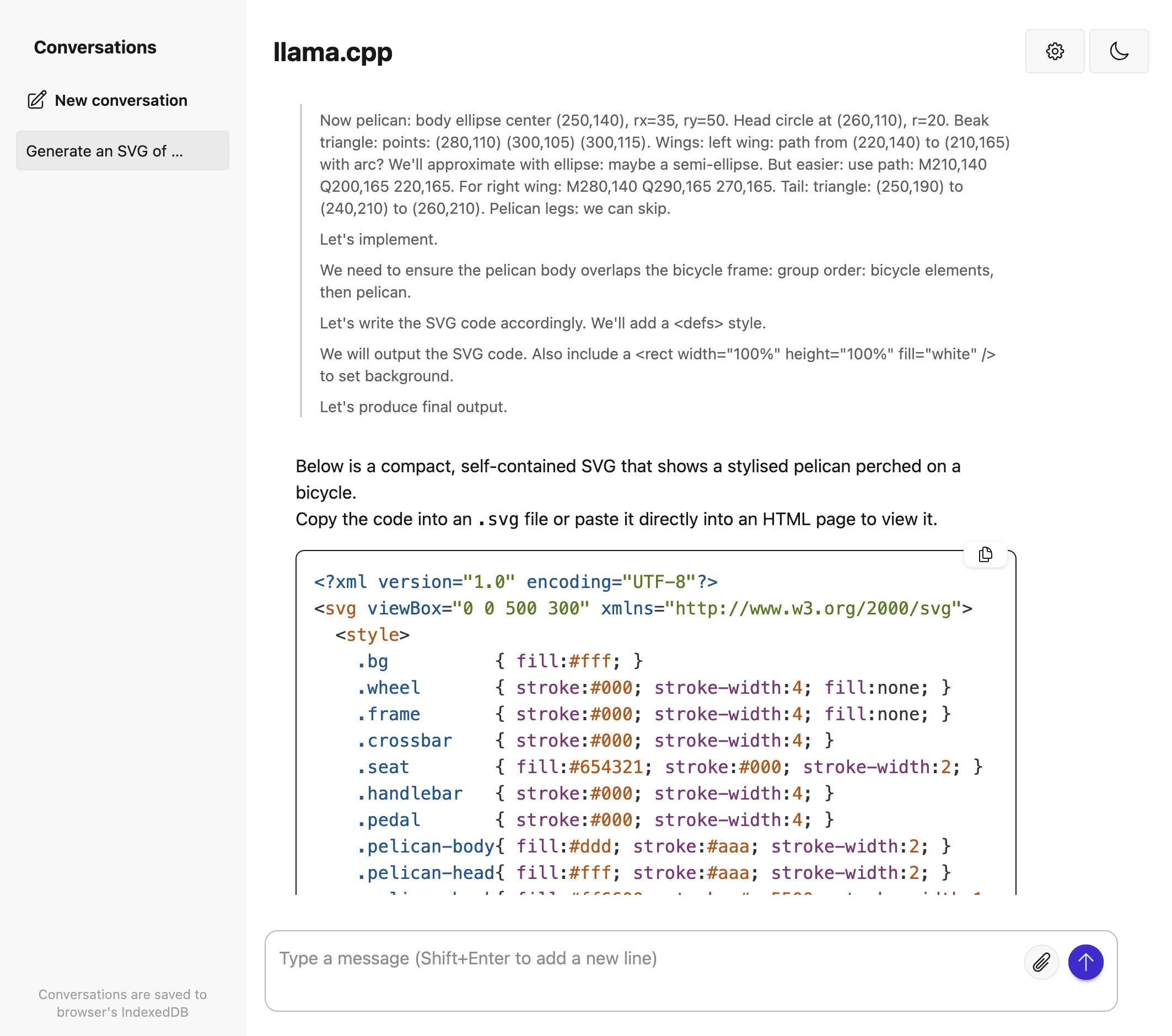

llama.cpp guide: running gpt-oss with llama.cpp (via) Really useful official guide to running the OpenAI gpt-oss models using llama-server from llama.cpp - which provides an OpenAI-compatible localhost API and a neat web interface for interacting with the models.

TLDR version for macOS to run the smaller gpt-oss-20b model:

brew install llama.cpp

llama-server -hf ggml-org/gpt-oss-20b-GGUF \

--ctx-size 0 --jinja -ub 2048 -b 2048 -ngl 99 -fa

This downloads a 12GB model file from ggml-org/gpt-oss-20b-GGUF on Hugging Face, stores it in ~/Library/Caches/llama.cpp/ and starts it running on port 8080.

You can then visit this URL to start interacting with the model:

http://localhost:8080/

On my 64GB M2 MacBook Pro it runs at around 82 tokens/second.

The guide also includes notes for running on NVIDIA and AMD hardware.
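Since llama-server exposes an OpenAI-compatible API, you can also talk to it from code. Here's a minimal sketch using only the Python standard library. It assumes the server is running on port 8080 as described above and serves the usual `/v1/chat/completions` endpoint; the helper names and the `model` label are placeholders I made up, and I'm assuming the server answers with whichever model it has loaded.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080"  # the port llama-server started on above


def build_chat_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        # Placeholder label (assumption): the server uses the model it loaded.
        "model": "gpt-oss-20b",
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Tell me a joke about a pelican"))` should print the model's reply. Keeping the request builder separate from the network call makes it easy to inspect the payload without a server.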

TIL: Running a gpt-oss eval suite against LM Studio on a Mac. The other day I learned that OpenAI published a set of evals as part of their gpt-oss model release, described in their cookbook on Verifying gpt-oss implementations.

I decided to try and run that eval suite on my own MacBook Pro, against gpt-oss-20b running inside of LM Studio.

TLDR: once I had the model running inside LM Studio with a longer than default context limit, the following incantation ran an eval suite in around 3.5 hours:

mkdir /tmp/aime25_openai

OPENAI_API_KEY=x \

uv run --python 3.13 --with 'gpt-oss[eval]' \

python -m gpt_oss.evals \

--base-url http://localhost:1234/v1 \

--eval aime25 \

--sampler chat_completions \

--model openai/gpt-oss-20b \

--reasoning-effort low \

--n-threads 2

My new TIL breaks that command down in detail and walks through the underlying eval - AIME 2025, which asks 30 questions (8 times each) that are defined using the following format:

{"question": "Find the sum of all integer bases $b>9$ for which $17_{b}$ is a divisor of $97_{b}$.", "answer": "70"}
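That record is plain JSON, so it's easy to load, and this particular question is small enough to check by brute force. A quick sketch, where the helper name and the search limit are my own choices: in base b, 17 is b + 7 and 97 is 9b + 7, and since 9b + 7 = 9(b + 7) - 56, any valid b + 7 has to divide 56, which means no base above 49 can qualify.

```python
import json

# One line of the eval data, in the format shown above.
line = r'{"question": "Find the sum of all integer bases $b>9$ for which $17_{b}$ is a divisor of $97_{b}$.", "answer": "70"}'
record = json.loads(line)


def brute_force_answer(max_base: int = 1000) -> int:
    """Sum every base b > 9 where 17 (base b) divides 97 (base b)."""
    return sum(
        b
        for b in range(10, max_base + 1)
        if (9 * b + 7) % (b + 7) == 0  # 97_b = 9b + 7, 17_b = b + 7
    )


computed = brute_force_answer()
print(computed, str(computed) == record["answer"])  # prints "70 True"
```

Note that the expected answer is stored as a string, so a grader has to compare it against the model's final answer as text rather than as a number.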

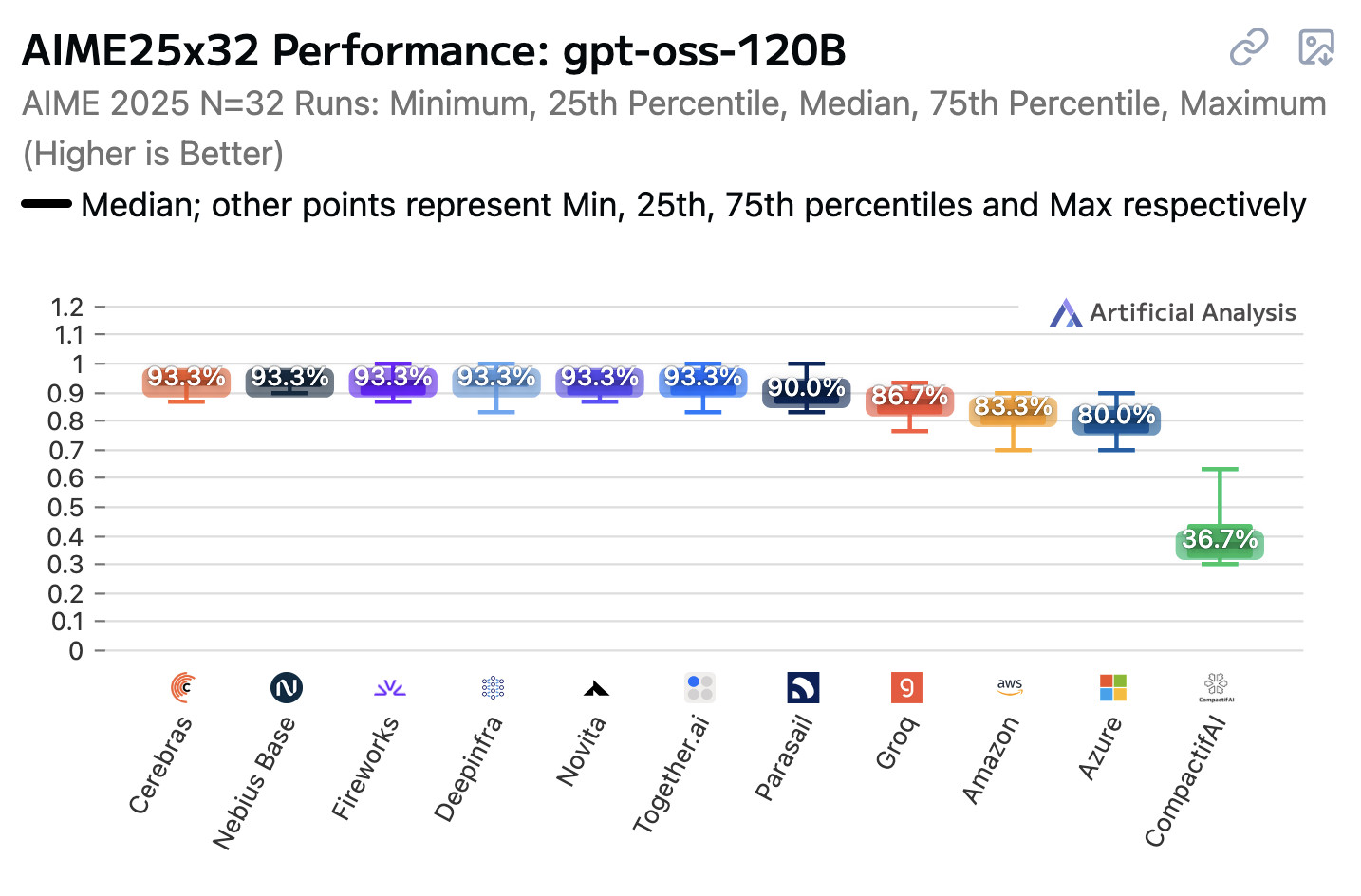

Open weight LLMs exhibit inconsistent performance across providers

Artificial Analysis published a new benchmark the other day, this time focusing on how an individual model—OpenAI’s gpt-oss-120b—performs across different hosted providers.

[... 847 words]

Introducing Gemma 3 270M: The compact model for hyper-efficient AI (via) New from Google:

Gemma 3 270M, a compact, 270-million parameter model designed from the ground up for task-specific fine-tuning with strong instruction-following and text structuring capabilities already trained in.

This model is tiny. The version I tried was the LM Studio GGUF one, a 241MB download.

It works! You can say "hi" to it and ask it very basic questions like "What is the capital of France".

I tried "Generate an SVG of a pelican riding a bicycle" about a dozen times and didn't once get back an SVG that was more than just a blank square... but at one point it did decide to write me this poem instead, which was nice:

+-----------------------+

| Pelican Riding Bike |

+-----------------------+

| This is the cat! |

| He's got big wings and a happy tail. |

| He loves to ride his bike! |

+-----------------------+

| Bike lights are shining bright. |

| He's got a shiny top, too! |

| He's ready for adventure! |

+-----------------------+

That's not really the point though. The Gemma 3 team make it very clear that the goal of this model is to support fine-tuning: a model this tiny is never going to be useful for general purpose LLM tasks, but given the right fine-tuning data it should be able to specialize for all sorts of things:

In engineering, success is defined by efficiency, not just raw power. You wouldn't use a sledgehammer to hang a picture frame. The same principle applies to building with AI.

Gemma 3 270M embodies this "right tool for the job" philosophy. It's a high-quality foundation model that follows instructions well out of the box, and its true power is unlocked through fine-tuning. Once specialized, it can execute tasks like text classification and data extraction with remarkable accuracy, speed, and cost-effectiveness. By starting with a compact, capable model, you can build production systems that are lean, fast, and dramatically cheaper to operate.

Here's their tutorial on Full Model Fine-Tune using Hugging Face Transformers, which I have not yet attempted to follow.

I imagine this model will be particularly fun to play with directly in a browser using transformers.js.

Update: It is! Here's a bedtime story generator using Transformers.js (requires WebGPU, so Chrome-like browsers only). Here's the source code for that demo.

Qwen3-4B-Thinking: “This is art—pelicans don’t ride bikes!”

I’ve fallen a few days behind keeping up with Qwen. They released two new 4B models last week: Qwen3-4B-Instruct-2507 and its thinking equivalent Qwen3-4B-Thinking-2507.

[... 991 words]

OpenAI’s new open weight (Apache 2) models are really good

The long promised OpenAI open weight models are here, and they are very impressive. They’re available under proper open source licenses—Apache 2.0—and come in two sizes, 120B and 20B.

[... 2,771 words]

Ollama’s new app (via) Ollama has been one of my favorite ways to run local models for a while - it makes it really easy to download models, and it's smart about keeping them resident in memory while they are being used and then cleaning them out after they stop receiving traffic.

The one missing feature to date has been an interface: Ollama has been exclusively command-line, which is fine for the CLI literate among us and not much use for everyone else.

They've finally fixed that! The new app's interface is accessible from the existing system tray menu and lets you chat with any of your installed models. Vision models can accept images through the new interface as well.

Something that has become undeniable this month is that the best available open weight models now come from the Chinese AI labs.

I continue to have a lot of love for Mistral, Gemma and Llama but my feeling is that Qwen, Moonshot and Z.ai have positively smoked them over the course of July.

Here's what came out this month, with links to my notes on each one:

- Moonshot Kimi-K2-Instruct - 11th July, 1 trillion parameters

- Qwen Qwen3-235B-A22B-Instruct-2507 - 21st July, 235 billion

- Qwen Qwen3-Coder-480B-A35B-Instruct - 22nd July, 480 billion

- Qwen Qwen3-235B-A22B-Thinking-2507 - 25th July, 235 billion

- Z.ai GLM-4.5 and GLM-4.5 Air - 28th July, 355 and 106 billion

- Qwen Qwen3-30B-A3B-Instruct-2507 - 29th July, 30 billion

- Qwen Qwen3-30B-A3B-Thinking-2507 - 30th July, 30 billion

- Qwen Qwen3-Coder-30B-A3B-Instruct - 31st July, 30 billion (released after I first posted this note)

Notably absent from this list is DeepSeek, but that's only because their last model release was DeepSeek-R1-0528 back in May.

The only janky license among them is Kimi K2, which uses a non-OSI-compliant modified MIT. Qwen's models are all Apache 2 and Z.ai's are MIT.

The larger Chinese models all offer their own APIs and are increasingly available from other providers. I've been able to run versions of the Qwen 30B and GLM-4.5 Air 106B models on my own laptop.

I can't help but wonder if part of the reason for the delay in release of OpenAI's open weights model comes from a desire to be notably better than this truly impressive lineup of Chinese models.

Update August 5th 2025: The OpenAI open weight models came out and they are very impressive.

My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX

I wrote about the new GLM-4.5 model family yesterday—new open weight (MIT licensed) models from Z.ai in China which their benchmarks claim score highly in coding even against models such as Claude Sonnet 4.

[... 685 words]

GLM-4.5: Reasoning, Coding, and Agentic Abililties. Another day, another significant new open weight model release from a Chinese frontier AI lab.

This time it's Z.ai - who rebranded (at least in English) from Zhipu AI a few months ago. They just dropped GLM-4.5-Base, GLM-4.5 and GLM-4.5 Air on Hugging Face, all under an MIT license.

These are MoE hybrid reasoning models with thinking and non-thinking modes, similar to Qwen 3. GLM-4.5 is 355 billion total parameters with 32 billion active, GLM-4.5-Air is 106 billion total parameters and 12 billion active.

They started using MIT a few months ago for their GLM-4-0414 models - their older releases used a janky non-open-source custom license.

Z.ai's own benchmarking (across 12 common benchmarks) ranked their GLM-4.5 3rd behind o3 and Grok-4 and just ahead of Claude Opus 4. They ranked GLM-4.5 Air 6th place just ahead of Claude 4 Sonnet. I haven't seen any independent benchmarks yet.

The other models they included in their own benchmarks were o4-mini (high), Gemini 2.5 Pro, Qwen3-235B-Thinking-2507, DeepSeek-R1-0528, Kimi K2, GPT-4.1, DeepSeek-V3-0324. Notably absent: any of Meta's Llama models, or any of Mistral's. Did they deliberately only compare themselves to open weight models from other Chinese AI labs?

Both models have a 128,000 token context length and are trained for tool calling, which honestly feels like table stakes for any model released in 2025 at this point.

It's interesting to see them use Claude Code to run their own coding benchmarks:

To assess GLM-4.5's agentic coding capabilities, we utilized Claude Code to evaluate performance against Claude-4-Sonnet, Kimi K2, and Qwen3-Coder across 52 coding tasks spanning frontend development, tool development, data analysis, testing, and algorithm implementation. [...] The empirical results demonstrate that GLM-4.5 achieves a 53.9% win rate against Kimi K2 and exhibits dominant performance over Qwen3-Coder with an 80.8% success rate. While GLM-4.5 shows competitive performance, further optimization opportunities remain when compared to Claude-4-Sonnet.

They published the dataset for that benchmark as zai-org/CC-Bench-trajectories on Hugging Face. I think they're using the word "trajectory" for what I would call a chat transcript.

Unlike DeepSeek-V3 and Kimi K2, we reduce the width (hidden dimension and number of routed experts) of the model while increasing the height (number of layers), as we found that deeper models exhibit better reasoning capacity.

They pre-trained on 15 trillion tokens, then an additional 7 trillion for code and reasoning:

Our base model undergoes several training stages. During pre-training, the model is first trained on 15T tokens of a general pre-training corpus, followed by 7T tokens of a code & reasoning corpus. After pre-training, we introduce additional stages to further enhance the model's performance on key downstream domains.

They also open sourced their post-training reinforcement learning harness, which they've called slime. That's available at THUDM/slime on GitHub - THUDM is the Knowledge Engineering Group @ Tsinghua University, the university from which Zhipu AI spun out as an independent company.

This time I ran my pelican benchmark using the chat.z.ai chat interface, which offers free access (no account required) to both GLM 4.5 and GLM 4.5 Air. I had reasoning enabled for both.

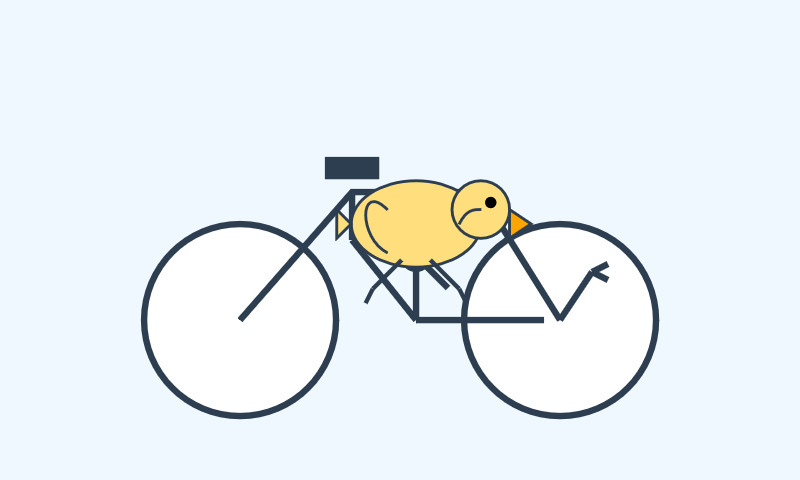

Here's what I got for "Generate an SVG of a pelican riding a bicycle" on GLM 4.5. I like how the pelican has its wings on the handlebars:

And GLM 4.5 Air:

Ivan Fioravanti shared a video of the mlx-community/GLM-4.5-Air-4bit quantized model running on an M4 Mac with 128GB of RAM, and it looks like a very strong contender for a local model that can write useful code. The cheapest 128GB Mac Studio costs around $3,500 right now, so genuinely great open weight coding models are creeping closer to being affordable on consumer machines.

Update: Ivan released a 3 bit quantized version of GLM-4.5 Air which runs using 48GB of RAM on my laptop. I tried it and was really impressed, see My 2.5 year old laptop can write Space Invaders in JavaScript now.
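For a rough sense of why quantization matters so much here, this sketch estimates the size of the weights alone at different bit widths. It assumes size is simply parameters times bits per weight, which ignores quantization metadata, the KV cache and runtime overhead, so actual RAM use will be higher. The function name is hypothetical.

```python
def weight_gigabytes(total_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (10^9 bytes)."""
    return total_params * bits_per_weight / 8 / 1e9


GLM_45_AIR_PARAMS = 106e9  # total parameters, as stated above

for bits in (16, 4, 3):
    size = weight_gigabytes(GLM_45_AIR_PARAMS, bits)
    print(f"{bits}-bit: ~{size:.2f} GB of weights")
```

At 3 bits that works out to a little under 40GB of weights, which is consistent with the 48GB of RAM I saw once the overheads this estimate leaves out are included.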

How to run an LLM on your laptop. I talked to Grace Huckins for this piece from MIT Technology Review on running local models. Apparently she enjoyed my dystopian backup plan!

Simon Willison has a plan for the end of the world. It’s a USB stick, onto which he has loaded a couple of his favorite open-weight LLMs—models that have been shared publicly by their creators and that can, in principle, be downloaded and run with local hardware. If human civilization should ever collapse, Willison plans to use all the knowledge encoded in their billions of parameters for help. “It’s like having a weird, condensed, faulty version of Wikipedia, so I can help reboot society with the help of my little USB stick,” he says.

The article suggests Ollama or LM Studio for laptops, and new-to-me LLM Farm for the iPhone:

My beat-up iPhone 12 was able to run Meta’s Llama 3.2 1B using an app called LLM Farm. It’s not a particularly good model—it very quickly goes off into bizarre tangents and hallucinates constantly—but trying to coax something so chaotic toward usability can be entertaining.

Update 19th July 2025: Evan Hahn compared the size of various offline LLMs to different Wikipedia exports. Full English Wikipedia without images, revision history or talk pages is 13.82GB, smaller than Mistral Small 3.2 (15GB) but larger than Qwen 3 14B and Gemma 3n.

LM Studio is free for use at work. A notable policy change for LM Studio. Their excellent macOS app (and Linux and Windows, but I've only tried it on Mac) was previously free for personal use but required a license for commercial purposes:

Until now, the LM Studio app terms stated that for use at a company or organization, you should get in touch with us and get separate commercial license. This requirement is now removed.

Starting today, there's no need to fill a form or contact us. You and your team can just use LM Studio at work!

Introducing Gemma 3n: The developer guide. Extremely consequential new open weights model release from Google today:

Multimodal by design: Gemma 3n natively supports image, audio, video, and text inputs and text outputs.

Optimized for on-device: Engineered with a focus on efficiency, Gemma 3n models are available in two sizes based on effective parameters: E2B and E4B. While their raw parameter count is 5B and 8B respectively, architectural innovations allow them to run with a memory footprint comparable to traditional 2B and 4B models, operating with as little as 2GB (E2B) and 3GB (E4B) of memory.

This is very exciting: a 2B and 4B model optimized for end-user devices which accepts text, images and audio as inputs!

Gemma 3n is also the most comprehensive day one launch I've seen for any model: Google partnered with "AMD, Axolotl, Docker, Hugging Face, llama.cpp, LMStudio, MLX, NVIDIA, Ollama, RedHat, SGLang, Unsloth, and vLLM" so there are dozens of ways to try this out right now.

So far I've run two variants on my Mac laptop. Ollama offer a 7.5GB version (full tag gemma3n:e4b-it-q4_K_M0) of the 4B model, which I ran like this:

ollama pull gemma3n

llm install llm-ollama

llm -m gemma3n:latest "Generate an SVG of a pelican riding a bicycle"

It drew me this:

The Ollama version doesn't appear to support image or audio input yet.

... but the mlx-vlm version does!

First I tried that on this WAV file like so (using a recipe adapted from Prince Canuma's video):

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 100 \

--temperature 0.7 \

--prompt "Transcribe the following speech segment in English:" \

--audio pelican-joke-request.wav

That downloaded a 15.74 GB bfloat16 version of the model and output the following correct transcription:

Tell me a joke about a pelican.

Then I had it draw me a pelican for good measure:

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 100 \

--temperature 0.7 \

--prompt "Generate an SVG of a pelican riding a bicycle"

I quite like this one:

It's interesting to see such a striking visual difference between those 7.5GB and 15GB model quantizations.

Finally, I had it describe the image it had just created:

uv run --with mlx-vlm mlx_vlm.generate \

--model gg-hf-gm/gemma-3n-E4B-it \

--max-tokens 1000 \

--prompt "Describe image" \

--image gemma3n-mlx-vlm.jpg

Here's what I got back - it thought this was a chemical diagram!

The image is a cartoon-style illustration depicting a molecular structure against a light blue background. The structure is composed of several differently colored and shaped elements connected by curved black lines.

Here's a breakdown of the elements:

- Large Black Circles: There are two large, solid black circles, likely representing larger molecules or components.

- Cream-Colored Oval: A prominent cream-colored oval shape is centrally located and connected to other elements. It has a smaller, darker cream-colored sphere attached to its left side.

- Red Oval: A smaller, solid red oval is positioned near one of the black circles and connected to the cream-colored oval.

- Black Lines: These lines act as bonds, connecting the various molecular components. They are curved and dynamic, suggesting movement or interaction.

- Triangular Shape: A small black triangle is attached to the smaller cream-colored sphere.

- Letter "I": The letter "I" appears twice, likely labeling specific parts of the molecule.

The overall impression is of a simplified representation of a biological molecule, possibly a protein or a complex organic compound. The use of different colors helps to distinguish the various components within the structure.

Mistral-Small 3.2. Released on Hugging Face a couple of hours ago, so far there aren't any quantizations to run it on a Mac but I'm sure those will emerge pretty quickly.

This is a minor bump to Mistral Small 3.1, one of my favorite local models. I've been running Small 3.1 via Ollama where it's a 15GB download - these 24 billion parameter models are a great balance between capabilities and not using up all of the available RAM on my laptop. I expect Ollama will add 3.2 imminently.

According to Mistral:

Small-3.2 improves in the following categories:

Interestingly they recommend running it with a temperature of 0.15 - many models recommend a default of 0.7. They also provide a suggested system prompt which includes a note that "Your knowledge base was last updated on 2023-10-01".

It's not currently available via Mistral's API, or through any of the third-party LLM hosting vendors that I've checked, so I've not been able to run a prompt through the model myself yet.

Update: I downloaded one of the first GGUF quantizations to show up on Hugging Face, gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF by Gabriel Larson. I ran it using Ollama and llm-ollama like this:

ollama pull hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M

llm install llm-ollama

llm -m hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M \

"Generate an SVG of a pelican riding a bicycle"

This one is pretty good for a 15GB model!

Here's the full transcript - it doesn't quite work in the actual image but I was delighted to see the model attempt to add this detail:

<!-- Basket with fish -->

<rect x="250" y="190" width="25" height="15" rx="5" fill="#FFA500"/>

<circle cx="260" cy="200" r="3" fill="#FF4500"/> <!-- Fish -->

Here's what you get if you isolate just that part of the generated SVG:

I had Mistral Small 3.2 describe the full image to me, since it's a vision-LLM:

llm -m hf.co/gabriellarson/Mistral-Small-3.2-24B-Instruct-2506-GGUF:Q4_K_M \

'describe image' \

-a https://static.simonwillison.net/static/2025/mistral-3.2-pelican.jpg

And it gave me the following:

The image depicts a cartoonish illustration of a duck that is being lifted off the ground by a hook. The duck appears to be in mid-air, with its wings spread slightly as if it's surprised or reacting to being picked up. The hook is attached to an arm or a mechanism and seems to be connected to a vehicle below—perhaps a truck or a platform with wheels. The background of the image is light blue, indicating an outdoor setting. Overall, the scene is whimsical and playful, possibly suggesting a humorous or unusual situation where the duck is being transported in this manner.

Update 2: It's now available as an official Ollama model:

ollama pull mistral-small3.2

LM Studio has a community quantization too: lmstudio-community/Mistral-Small-3.2-24B-Instruct-2506-GGUF.
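The recommended temperature of 0.15 can be applied when calling a locally running Ollama server directly. This is a minimal sketch, not the method used in this post: it assumes Ollama is listening on its default address and that the model was pulled as mistral-small3.2. The helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, temperature: float = 0.15) -> urllib.request.Request:
    """Build a non-streaming generate request with an explicit temperature."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"temperature": temperature},
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def generate(model: str, prompt: str, temperature: float = 0.15) -> str:
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_request(model, prompt, temperature)) as resp:
        return json.loads(resp.read())["response"]


# Example call, left commented out because it needs the local server:
# print(generate("mistral-small3.2", "Generate an SVG of a pelican riding a bicycle"))
```

Keeping the payload construction separate from the network call makes it easy to inspect what is sent before involving the server.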

Magistral — the first reasoning model by Mistral AI. Mistral's first reasoning model is out today, in two sizes. There's a 24B Apache 2 licensed open-weights model called Magistral Small (actually Magistral-Small-2506), and a larger API-only model called Magistral Medium.

Magistral Small is available as mistralai/Magistral-Small-2506 on Hugging Face. From that model card:

Context Window: A 128k context window, but performance might degrade past 40k. Hence we recommend setting the maximum model length to 40k.

Mistral also released an official GGUF version, Magistral-Small-2506_gguf, which I ran successfully using Ollama like this:

ollama pull hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0

That fetched a 25GB file. I ran prompts using a chat session with llm-ollama like this:

llm chat -m hf.co/mistralai/Magistral-Small-2506_gguf:Q8_0

Here's what I got for "Generate an SVG of a pelican riding a bicycle" (transcript here):

It's disappointing that the GGUF doesn't support function calling yet. Hopefully a community variant can add that, since it's one of the best ways I know of to unlock the potential of these reasoning models.

I just noticed that Ollama have their own Magistral model too, which can be accessed using:

ollama pull magistral:latest

That gets you a 14GB q4_K_M quantization - other options can be found in the full list of Ollama magistral tags.
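The 25GB and 14GB download sizes are roughly what the quantization formats imply. Here is a back-of-the-envelope sketch, assuming Q8_0 costs 8.5 bits per weight (32 8-bit weights plus one 16-bit scale per block) and taking "24B" literally as 24e9 parameters. Both numbers are assumptions for illustration rather than figures from the model card, and real files also carry metadata and some tensors at other precisions.

```python
# Assumption: Q8_0 packs 32 int8 weights plus one 16-bit scale per block.
Q8_0_BITS_PER_WEIGHT = (32 * 8 + 16) / 32  # 8.5

# Assumption: "24B" read literally as 24e9 parameters.
N_PARAMS = 24e9


def estimated_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate file size in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9


def implied_bits_per_weight(size_gb: float, n_params: float) -> float:
    """Average bits per weight implied by a reported file size."""
    return size_gb * 1e9 * 8 / n_params


size = estimated_size_gb(N_PARAMS, Q8_0_BITS_PER_WEIGHT)
bpw = implied_bits_per_weight(14, N_PARAMS)
print(f"Q8_0 estimate: {size:.1f} GB")        # Q8_0 estimate: 25.5 GB
print(f"14GB file implies {bpw:.2f} bits/weight")  # 14GB file implies 4.67 bits/weight
```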

One thing that caught my eye in the Magistral announcement:

Legal, finance, healthcare, and government professionals get traceable reasoning that meets compliance requirements. Every conclusion can be traced back through its logical steps, providing auditability for high-stakes environments with domain-specialized AI.

I guess this means the reasoning traces are fully visible and not redacted in any way - interesting to see Mistral trying to turn that into a feature that's attractive to the business clients they are most interested in appealing to.

Also from that announcement:

Our early tests indicated that Magistral is an excellent creative companion. We highly recommend it for creative writing and storytelling, with the model capable of producing coherent or — if needed — delightfully eccentric copy.

I haven't seen a reasoning model promoted for creative writing in this way before.

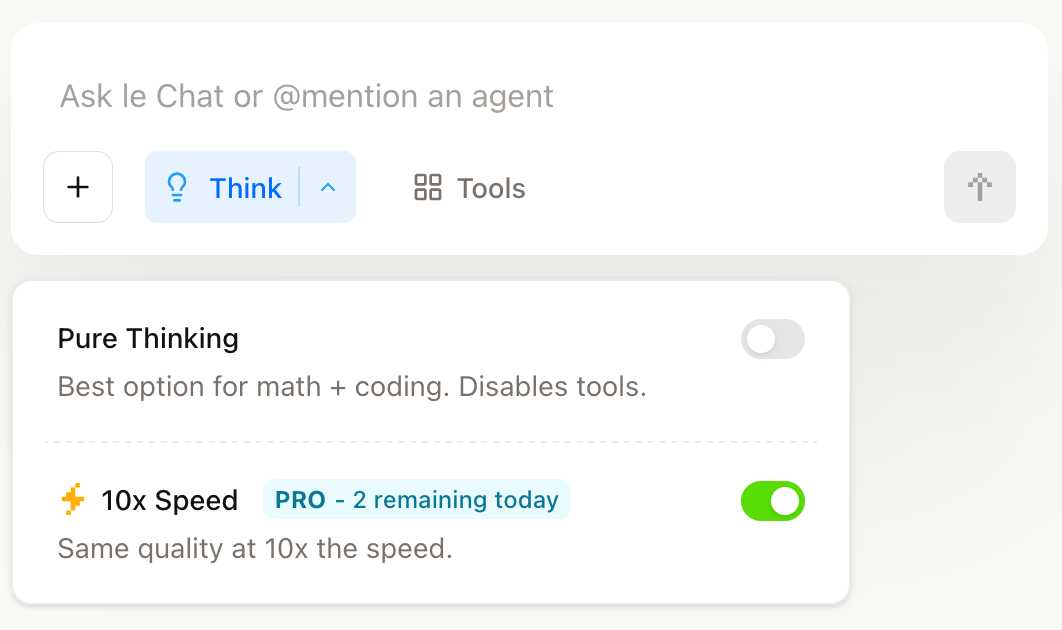

You can try out Magistral Medium by selecting the new "Thinking" option in Mistral's Le Chat.

They have options for "Pure Thinking" and a separate option for "10x speed", which runs Magistral Medium at 10x the speed using Cerebras.

The new models are also available through the Mistral API. You can access them by installing llm-mistral and running llm mistral refresh to refresh the list of available models, then:

llm -m mistral/magistral-medium-latest \
  'Generate an SVG of a pelican riding a bicycle'

Here's that transcript. At 13 input and 1,236 output tokens that cost me 0.62 cents - just over half a cent.
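The arithmetic behind that figure can be sketched as follows. The per-million-token prices of $2 for input and $5 for output are an assumption: they are not stated in this post, but they do reproduce the quoted 0.62 cents.

```python
def cost_in_cents(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost of one prompt in cents, given prices in dollars per million tokens."""
    dollars = (input_tokens * input_price_per_m
               + output_tokens * output_price_per_m) / 1_000_000
    return dollars * 100


# Assumed prices: $2.00 per million input tokens, $5.00 per million output tokens.
cents = cost_in_cents(13, 1236, 2.00, 5.00)
print(f"{cents:.2f} cents")  # 0.62 cents
```

Almost all of the cost comes from the 1,236 output tokens. The 13 input tokens contribute well under a hundredth of a cent.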

deepseek-ai/DeepSeek-R1-0528. Sadly the trend for terrible naming of models has infested the Chinese AI labs as well.

DeepSeek-R1-0528 is a brand new and much improved open weights reasoning model from DeepSeek, a major step up from the DeepSeek R1 they released back in January.

In the latest update, DeepSeek R1 has significantly improved its depth of reasoning and inference capabilities by [...] Its overall performance is now approaching that of leading models, such as O3 and Gemini 2.5 Pro. [...]

Beyond its improved reasoning capabilities, this version also offers a reduced hallucination rate, enhanced support for function calling, and better experience for vibe coding.

The new R1 comes in two sizes: a 685B model called deepseek-ai/DeepSeek-R1-0528 (the previous R1 was 671B) and an 8B variant distilled from Qwen 3 called deepseek-ai/DeepSeek-R1-0528-Qwen3-8B.

The January release of R1 had a much larger collection of distilled models: four based on Qwen 2.5 (14B, 32B, Math 1.5B and Math 7B) and two based on Llama 3 (Llama 3.1 8B and Llama 3.3 70B Instruct).

No Llama model at all this time. I wonder if that's because Qwen 3 is really good and Apache 2 licensed, while Llama continues to stick with their janky license terms.

Further adding to the confusion, Ollama have mixed the two new models into their existing deepseek-r1 label. Last week running ollama pull deepseek-r1:8B from Ollama would get you DeepSeek-R1-Distill-Llama-8B (Internet Archive link), today that same command gets you DeepSeek-R1-0528-Qwen3-8B, a completely different base model.

If this bothers you as much as it bothers me, thankfully you can use the more explicit tag deepseek-r1:8b-0528-qwen3-q8_0.

Update: This is a great illustration of how confusing these names are! Ollama's deepseek-r1:8B alias actually points to deepseek-r1:8b-0528-qwen3-q4_K_M, a 5.2GB model. I pulled the larger q8_0 one.

I ran it like this:

ollama pull deepseek-r1:8b-0528-qwen3-q8_0

It fetched 8.9GB of model weights, then I had it draw me a pelican.

llm install llm-ollama

llm -m deepseek-r1:8b-0528-qwen3-q8_0 \
  "Generate an SVG of a pelican riding a bicycle"

Since the “reasoning” traces of these models are almost always the most interesting part, I’ll quote the response here in full:

<think>

We are going to create an SVG that depicts a pelican riding a bicycle. Steps:

- Create the basic structure of an SVG with width and height set appropriately (e.g., 400x200).

- Draw a pelican: We'll break it down into parts - body, beak, eye, feet, wings, etc.

- Draw a bicycle: We can represent it as two circles for wheels, a rectangle for the frame, and some other details.

Let's design the SVG with the following:

- The pelican will be facing to the right (positive x-axis) so that it is riding the bike from left to right.

- The bicycle will have two wheels and a simple frame in front of the pelican.

We'll use absolute positioning for each part. Let's assume we set up coordinates such that:

- The top-left of the SVG is (0,0), and we want the scene to be drawn from left to right.

- The pelican will be positioned at (50, 120) with a body length of about 30 units.

We'll use simple shapes for the pelican:

Body: An ellipse or rounded rectangle. Let's do an ellipse for the body. Beak: A triangle pointing to the right. Head: Another ellipse but smaller and positioned in front of the body. Wings: Two triangles (one above each other, one slightly behind) that are upside down.

Bicycle:

- Wheels: two circles at fixed positions relative to the frame.

- Frame: a rectangle with some details for handlebars and seat.

Since this is a fun illustration, we'll keep it simple but recognizable.

Let's code accordingly.

</think>

Here’s an SVG of a pelican riding a bicycle. This example uses basic shapes to create a stylized version:

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 400 200">
  <!-- Pelican body -->
  <ellipse cx="150" cy="150" rx="80" ry="60" fill="#FFD700"/>
  <!-- Pelican head (larger for comedic effect) -->
  <circle cx="90" cy="120" r="45" fill="#FFA3CE"/>
  <!-- Pelican beak -->
  <polygon points="80,110 60,120 80,130 70,140 60,150" fill="#FFC233"/>
  <!-- Pelican eye -->
  <circle cx="95" cy="115" r="3" fill="#fff"/>
  <!-- Bicycle wheels -->
  <ellipse cx="380" cy="140" rx="25" ry="60" transform="rotate(90 380 140)" fill="black"/>
  <ellipse cx="380" cy="70" rx="25" ry="60" transform="rotate(90 380 70)" fill="black"/>
  <!-- Bicycle frame -->
  <path d="M 15,40 L 15,140 H 370 L 370,140 V 40 Z" stroke="gray" stroke-width="6" fill="none"/>
  <!-- Handlebars and seat (stylized) -->
  <path d="M 280,40 C 290,35 300,30 310,40 C 320,50 330,60 310,70 L 310,70 Q 290,50 280,40" fill="none" stroke="gray"/>
  <!-- Pedals -->
  <circle cx="340" cy="70" r="15" fill="#3366CC"/>
  <polygon points="340,65 330,45 350,45" fill="#F29E3D"/>
  <circle cx="340" cy="140" r="15" fill="#3366CC"/>
  <polygon points="340,135 330,115 350,115" fill="#F29E3D"/>
  <!-- Neck connecting head to body -->
  <path d="M 80,120 C 70,160 100,140 150,120" stroke="#FFA3CE" stroke-width="8"/>
</svg>

Explanation:

- Pelican Body: A large yellow ellipse.

- Head: A pink circle with a prominent beak (yellow-orange triangle).

- Bicycle:

  - Wheels are black ellipses rotated vertically to simulate spokes.

  - The frame is a gray rectangle extending from the pelican’s back to the right side of the SVG.

  - Handlebars and seat are simplified curves in gray.

This creates a comical scene with the pelican riding forward. You can adjust colors, sizes, or positions by modifying cx, cy, rx, ry attributes and fill/stroke values.

Love the thought process, and the explanation! The actual rendered SVG does leave a lot to be desired though:

To be fair, this is just using the ~8GB Qwen3 Q8_0 model on my laptop. I don't have the hardware to run the full sized R1 but it's available as deepseek-reasoner through DeepSeek's API, so I tried it there using the llm-deepseek plugin:

llm install llm-deepseek

llm -m deepseek-reasoner \
  "Generate an SVG of a pelican riding a bicycle"

This one came out a lot better:

Meanwhile, on Reddit, u/adrgrondin got DeepSeek-R1-0528-Qwen3-8B running on an iPhone 16 Pro using MLX:

It runs at a decent speed for the size thanks to MLX, pretty impressive. But not really usable in my opinion, the model is thinking for too long, and the phone gets really hot.
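The DeepSeek-R1-0528-Qwen3-8B response quoted above mixes a `<think>` reasoning trace, prose and the SVG itself, so rendering the image means pulling those apart first. Here is a minimal sketch of one way to do that with regular expressions. The function name `split_response` is hypothetical, and the approach assumes the trace is wrapped in a single `<think>...</think>` pair, as it is in that transcript.

```python
import re
from typing import Optional, Tuple

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
SVG_RE = re.compile(r"<svg\b.*?</svg>", re.DOTALL)


def split_response(text: str) -> Tuple[str, Optional[str]]:
    """Return (reasoning, svg) extracted from a raw model response."""
    think = THINK_RE.search(text)
    reasoning = think.group(1).strip() if think else ""
    # Drop the reasoning block first so any SVG sketched inside it is ignored.
    answer = THINK_RE.sub("", text)
    svg = SVG_RE.search(answer)
    return reasoning, svg.group(0) if svg else None


# Tiny made-up response with the same overall shape as the real one.
sample = (
    "<think>\nDraw two wheels first.\n</think>\n"
    'Here is the image:<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 10 10">'
    '<circle cx="5" cy="5" r="4"/></svg>Explanation: a circle.'
)
reasoning, svg = split_response(sample)
print(reasoning)
print(svg)
```

A regular expression is enough here because the goal is only to locate the outermost `<svg>` element, not to validate it. Anything stricter would call for a real XML parser.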

Devstral. New Apache 2.0 licensed LLM release from Mistral, this time specifically trained for code.

Devstral achieves a score of 46.8% on SWE-Bench Verified, outperforming prior open-source SoTA models by more than 6% points. When evaluated under the same test scaffold (OpenHands, provided by All Hands AI 🙌), Devstral exceeds far larger models such as Deepseek-V3-0324 (671B) and Qwen3 232B-A22B.

I'm always suspicious of small models like this that claim great benchmarks against much larger rivals, but there's a Devstral model that is just 14GB on Ollama so it's quite easy to try out for yourself.

I fetched it like this:

ollama pull devstral

Then ran it in a llm chat session with llm-ollama like this:

llm install llm-ollama

llm chat -m devstral

Initial impressions: I think this one is pretty good! Here's a full transcript where I had it write Python code to fetch a CSV file from a URL and import it into a SQLite database, creating the table with the necessary columns. Honestly I need to retire that challenge, it's been a while since a model failed at it, but it's still interesting to see how it handles follow-up prompts to demand things like asyncio or a different HTTP client library.
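For reference, the challenge itself fits in a few lines of standard-library Python. The following is an illustrative sketch of the task, not the code Devstral produced: it stores every column as TEXT, assumes the CSV is UTF-8 with a header row, and the function names are hypothetical.

```python
import csv
import io
import sqlite3
import urllib.request


def fetch_text(url: str) -> str:
    """Download a URL and decode it as UTF-8 (assumed encoding)."""
    with urllib.request.urlopen(url) as response:
        return response.read().decode("utf-8")


def quote_identifier(name: str) -> str:
    """Quote a table or column name for use in SQLite SQL."""
    return '"' + name.replace('"', '""') + '"'


def import_csv(conn: sqlite3.Connection, table: str, csv_text: str) -> int:
    """Create `table` with one TEXT column per header and insert the rows."""
    reader = csv.reader(io.StringIO(csv_text))
    headers = next(reader)
    columns = ", ".join(f"{quote_identifier(h)} TEXT" for h in headers)
    conn.execute(f"CREATE TABLE IF NOT EXISTS {quote_identifier(table)} ({columns})")
    placeholders = ", ".join("?" for _ in headers)
    # Skip malformed rows whose length does not match the header.
    rows = [row for row in reader if len(row) == len(headers)]
    conn.executemany(
        f"INSERT INTO {quote_identifier(table)} VALUES ({placeholders})", rows
    )
    conn.commit()
    return len(rows)


# Demo with inline data so the example runs offline; with a real URL you
# would pass fetch_text(url) as the third argument instead.
conn = sqlite3.connect(":memory:")
count = import_csv(conn, "pelicans", "name,age\nPercy,4\nPenny,7\n")
print(count, conn.execute("SELECT name, age FROM pelicans").fetchall())
```

The follow-up prompts mentioned above (asyncio, a different HTTP client) would only change `fetch_text`, which is why the download is kept separate from the import.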

It's also available through Mistral's API. llm-mistral 0.13 configures the devstral-small alias for it:

llm install -U llm-mistral

llm keys set mistral

# paste key here

llm -m devstral-small 'HTML+JS for a large text countdown app from 5m'

qwen2.5vl in Ollama. Ollama announced a complete overhaul of their vision support the other day. Here's the first new model they've shipped since then - a packaged version of Qwen 2.5 VL which was first released on January 26th 2025. Here are my notes from that release.

I upgraded Ollama (it auto-updates so I just had to restart it from the tray icon) and ran this:

ollama pull qwen2.5vl

This downloaded a 6GB model file. I tried it out against my photo of Cleo rolling on the beach:

llm -a https://static.simonwillison.net/static/2025/cleo-sand.jpg \
  'describe this image' -m qwen2.5vl

And got a pretty good result:

The image shows a dog lying on its back on a sandy beach. The dog appears to be a medium to large breed with a dark coat, possibly black or dark brown. It is wearing a red collar or harness around its chest. The dog's legs are spread out, and its belly is exposed, suggesting it might be rolling around or playing in the sand. The sand is light-colored and appears to be dry, with some small footprints and marks visible around the dog. The lighting in the image suggests it is taken during the daytime, with the sun casting a shadow of the dog to the left side of the image. The overall scene gives a relaxed and playful impression, typical of a dog enjoying time outdoors on a beach.

Qwen 2.5 VL has a strong reputation for OCR, so I tried it on my poster:

llm -a https://static.simonwillison.net/static/2025/poster.jpg \
  'convert to markdown' -m qwen2.5vl

The result that came back:

It looks like the image you provided is a jumbled and distorted text, making it difficult to interpret. If you have a specific question or need help with a particular topic, please feel free to ask, and I'll do my best to assist you!

I'm not sure what went wrong here. My best guess is that the maximum resolution the model can handle is too small to make out the text, or maybe Ollama resized the image to the point of illegibility before handing it to the model?

Update: I think this may be a bug relating to URL handling in LLM/llm-ollama. I tried downloading the file first:

wget https://static.simonwillison.net/static/2025/poster.jpg

llm -m qwen2.5vl 'extract text' -a poster.jpg

This time it did a lot better. The results weren't perfect though - it ended up stuck in a loop outputting the same code example dozens of times.

I tried with a different prompt - "extract text" - and it got confused by the three column layout, misread Datasette as "Datasetette" and missed some of the text. Here's that result.

These experiments used qwen2.5vl:7b (6GB) - I expect the results would be better with the larger qwen2.5vl:32b (21GB) and qwen2.5vl:72b (71GB) models.

Fred Jonsson reported a better result using the MLX model via LM Studio (~9GB model running in 8-bit - I think that's mlx-community/Qwen2.5-VL-7B-Instruct-8bit). His full output is here - looks almost exactly right to me.

Building software on top of Large Language Models

I presented a three hour workshop at PyCon US yesterday titled Building software on top of Large Language Models. The goal of the workshop was to give participants everything they needed to get started writing code that makes use of LLMs.

[... 3,726 words]

Vision Language Models (Better, Faster, Stronger) (via) Extremely useful review of the last year in vision and multi-modal LLMs.

So much has happened! I'm particularly excited about the range of small open weight vision models that are now available. Models like gemma3-4b-it and Qwen2.5-VL-3B-Instruct produce very impressive results and run happily on mid-range consumer hardware.

Trying out llama.cpp’s new vision support

This llama.cpp server vision support via libmtmd pull request (via Hacker News) was merged earlier today. The PR finally adds full support for vision models to the excellent llama.cpp project. It’s documented on this page, and the finer technical details are covered here. Here are my notes on getting it working on a Mac.

[... 1,693 words]

Saying “hi” to Microsoft’s Phi-4-reasoning

Microsoft released a new sub-family of models a few days ago: Phi-4 reasoning. They introduced them in this blog post celebrating a year since the release of Phi-3:

[... 1,498 words]