24 posts tagged “openrouter”

2026

Qwen3.5: Towards Native Multimodal Agents. Alibaba's Qwen just released the first two models in the Qwen 3.5 series - one open weights, one proprietary. Both are multi-modal for vision input.

The open weight one is a Mixture of Experts model called Qwen3.5-397B-A17B. Interesting to see Qwen call out serving efficiency as a benefit of that architecture:

Built on an innovative hybrid architecture that fuses linear attention (via Gated Delta Networks) with a sparse mixture-of-experts, the model attains remarkable inference efficiency: although it comprises 397 billion total parameters, just 17 billion are activated per forward pass, optimizing both speed and cost without sacrificing capability.

It's 807GB on Hugging Face, and Unsloth have a collection of smaller GGUFs ranging in size from 94.2GB 1-bit to 462GB Q8_K_XL.

I got this pelican from the OpenRouter hosted model (transcript):

The proprietary hosted model is called Qwen3.5 Plus 2026-02-15, and is a little confusing. Qwen researcher Junyang Lin says:

Qwen3-Plus is a hosted API version of 397B. As the model natively supports 256K tokens, Qwen3.5-Plus supports 1M token context length. Additionally it supports search and code interpreter, which you can use on Qwen Chat with Auto mode.

Here's its pelican, which is similar in quality to the open weights model:

GLM-5: From Vibe Coding to Agentic Engineering (via) This is a huge new MIT-licensed model: 744B parameters and 1.51TB on Hugging Face twice the size of GLM-4.7 which was 368B and 717GB (4.5 and 4.6 were around that size too).

It's interesting to see Z.ai take a position on what we should call professional software engineers building with LLMs - I've seen Agentic Engineering show up in a few other places recently. most notable from Andrej Karpathy and Addy Osmani.

I ran my "Generate an SVG of a pelican riding a bicycle" prompt through GLM-5 via OpenRouter and got back a very good pelican on a disappointing bicycle frame:

Open Responses (via) This is the standardization effort I've most wanted in the world of LLMs: a vendor-neutral specification for the JSON API that clients can use to talk to hosted LLMs.

Open Responses aims to provide exactly that as a documented standard, derived from OpenAI's Responses API.

I was hoping for one based on their older Chat Completions API since so many other products have cloned the already, but basing it on Responses does make sense since that API was designed with the feature of more recent models - such as reasoning traces - baked into the design.

What's certainly notable is the list of launch partners. OpenRouter alone means we can expect to be able to use this protocol with almost every existing model, and Hugging Face, LM Studio, vLLM, Ollama and Vercel cover a huge portion of the common tools used to serve models.

For protocols like this I really want to see a comprehensive, language-independent conformance test site. Open Responses has a subset of that - the official repository includes src/lib/compliance-tests.ts which can be used to exercise a server implementation, and is available as a React app on the official site that can be pointed at any implementation served via CORS.

What's missing is the equivalent for clients. I plan to spin up my own client library for this in Python and I'd really like to be able to run that against a conformance suite designed to check that my client correctly handles all of the details.

2025

DeepSeek-V3.2 (via) Two new open weight (MIT licensed) models from DeepSeek today: DeepSeek-V3.2 and DeepSeek-V3.2-Speciale, both 690GB, 685B parameters. Here's the PDF tech report.

DeepSeek-V3.2 is DeepSeek's new flagship model, now running on chat.deepseek.com.

The difference between the two new models is best explained by this paragraph from the technical report:

DeepSeek-V3.2 integrates reasoning, agent, and human alignment data distilled from specialists, undergoing thousands of steps of continued RL training to reach the final checkpoints. To investigate the potential of extended thinking, we also developed an experimental variant, DeepSeek-V3.2-Speciale. This model was trained exclusively on reasoning data with a reduced length penalty during RL. Additionally, we incorporated the dataset and reward method from DeepSeekMath-V2 (Shao et al., 2025) to enhance capabilities in mathematical proofs.

I covered DeepSeek-Math-V2 last week. Like that model, DeepSeek-V3.2-Speciale also scores gold on the 2025 International Mathematical Olympiad so beloved of model training teams!

I tried both models on "Generate an SVG of a pelican riding a bicycle" using the chat feature of [OpenRouter](https://openrouter.ai/). DeepSeek V3.2 produced this very short reasoning chain:

Let's assume the following:

Wheel radius: 40

Distance between wheel centers: 180

Seat height: 60 (above the rear wheel center)

Handlebars: above the front wheel, extending back and up.We'll set the origin at the center of the rear wheel.

We'll create the SVG with a viewBox that fits the entire drawing.

Let's start by setting up the SVG.

Followed by this illustration:

Here's what I got from the Speciale model, which thought deeply about the geometry of bicycles and pelicans for a very long time (at least 10 minutes) before spitting out this result:

Kimi K2 Thinking. Chinese AI lab Moonshot's Kimi K2 established itself as one of the largest open weight models - 1 trillion parameters - back in July. They've now released the Thinking version, also a trillion parameters (MoE, 32B active) and also under their custom modified (so not quite open source) MIT license.

Starting with Kimi K2, we built it as a thinking agent that reasons step-by-step while dynamically invoking tools. It sets a new state-of-the-art on Humanity's Last Exam (HLE), BrowseComp, and other benchmarks by dramatically scaling multi-step reasoning depth and maintaining stable tool-use across 200–300 sequential calls. At the same time, K2 Thinking is a native INT4 quantization model with 256k context window, achieving lossless reductions in inference latency and GPU memory usage.

This one is only 594GB on Hugging Face - Kimi K2 was 1.03TB - which I think is due to the new INT4 quantization. This makes the model both cheaper and faster to host.

So far the only people hosting it are Moonshot themselves. I tried it out both via their own API and via the OpenRouter proxy to it, via the llm-moonshot plugin (by NickMystic) and my llm-openrouter plugin respectively.

The buzz around this model so far is very positive. Could this be the first open weight model that's competitive with the latest from OpenAI and Anthropic, especially for long-running agentic tool call sequences?

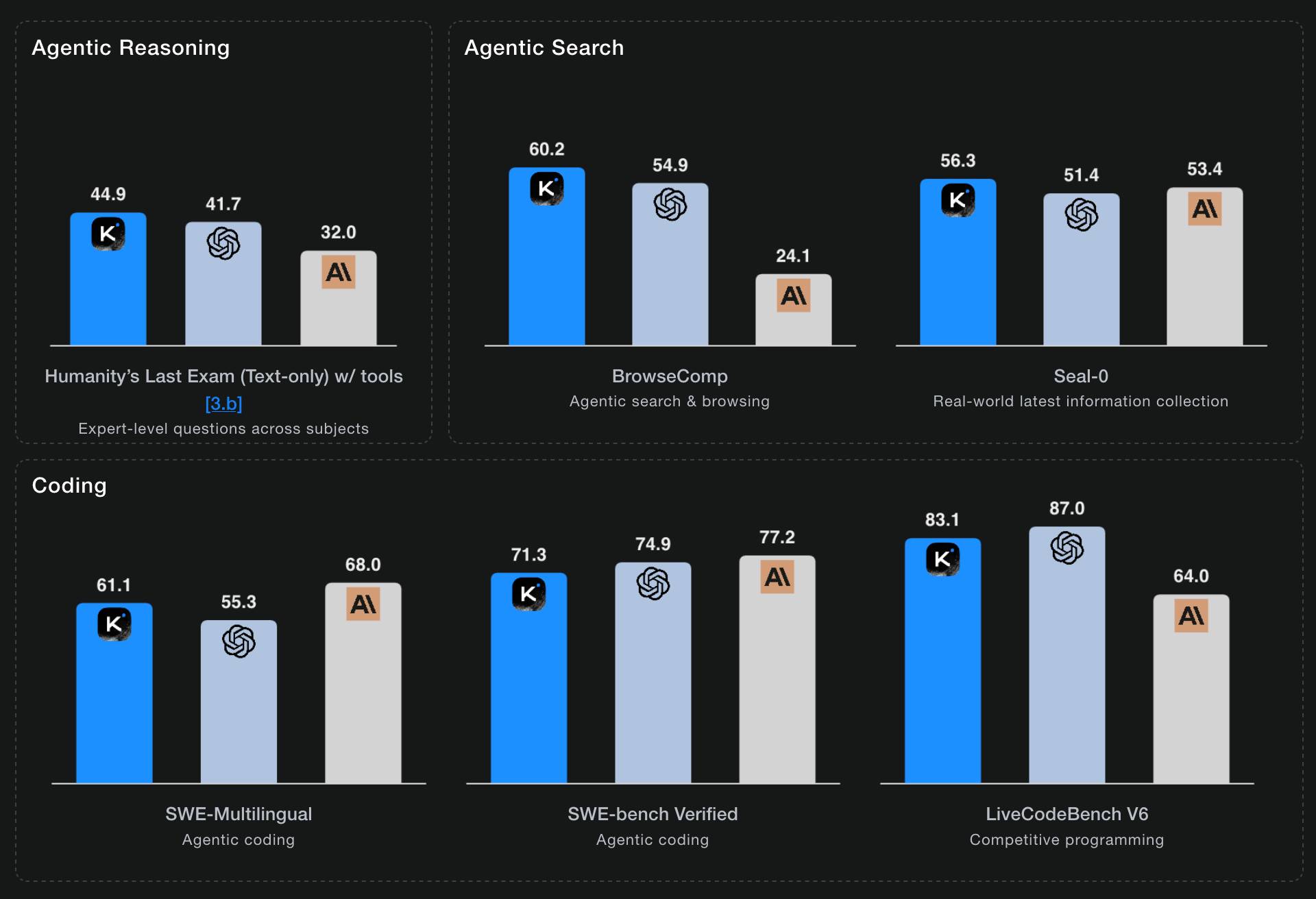

Moonshot AI's self-reported benchmark scores show K2 Thinking beating the top OpenAI and Anthropic models (GPT-5 and Sonnet 4.5 Thinking) at "Agentic Reasoning" and "Agentic Search" but not quite top for "Coding":

I ran a couple of pelican tests:

llm install llm-moonshot

llm keys set moonshot # paste key

llm -m moonshot/kimi-k2-thinking 'Generate an SVG of a pelican riding a bicycle'

llm install llm-openrouter

llm keys set openrouter # paste key

llm -m openrouter/moonshotai/kimi-k2-thinking \

'Generate an SVG of a pelican riding a bicycle'

Artificial Analysis said:

Kimi K2 Thinking achieves 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

CNBC quoted a source who provided the training price for the model:

The Kimi K2 Thinking model cost $4.6 million to train, according to a source familiar with the matter. [...] CNBC was unable to independently verify the DeepSeek or Kimi figures.

MLX developer Awni Hannun got it working on two 512GB M3 Ultra Mac Studios:

The new 1 Trillion parameter Kimi K2 Thinking model runs well on 2 M3 Ultras in its native format - no loss in quality!

The model was quantization aware trained (qat) at int4.

Here it generated ~3500 tokens at 15 toks/sec using pipeline-parallelism in mlx-lm

Here's the 658GB mlx-community model.

Two new models from Chinese AI labs in the past few days. I tried them both out using llm-openrouter:

DeepSeek-V3.2-Exp from DeepSeek. Announcement, Tech Report, Hugging Face (690GB, MIT license).

As an intermediate step toward our next-generation architecture, V3.2-Exp builds upon V3.1-Terminus by introducing DeepSeek Sparse Attention—a sparse attention mechanism designed to explore and validate optimizations for training and inference efficiency in long-context scenarios.

This one felt very slow when I accessed it via OpenRouter - I probably got routed to one of the slower providers. Here's the pelican:

GLM-4.6 from Z.ai. Announcement, Hugging Face (714GB, MIT license).

The context window has been expanded from 128K to 200K tokens [...] higher scores on code benchmarks [...] GLM-4.6 exhibits stronger performance in tool using and search-based agents.

Here's the pelican for that:

llm-openrouter 0.5. New release of my LLM plugin for accessing models made available via OpenRouter. The release notes in full:

- Support for tool calling. Thanks, James Sanford. #43

- Support for reasoning options, for example

llm -m openrouter/openai/gpt-5 'prove dogs exist' -o reasoning_effort medium. #45

Tool calling is a really big deal, as it means you can now use the plugin to try out tools (and build agents, if you like) against any of the 179 tool-enabled models on that platform:

llm install llm-openrouter

llm keys set openrouter

# Paste key here

llm models --tools | grep 'OpenRouter:' | wc -l

# Outputs 179

Quite a few of the models hosted on OpenRouter can be accessed for free. Here's a tool-usage example using the llm-tools-datasette plugin against the new Grok 4 Fast model:

llm install llm-tools-datasette

llm -m openrouter/x-ai/grok-4-fast:free -T 'Datasette("https://datasette.io/content")' 'Count available plugins'

Outputs:

There are 154 available plugins.

The output of llm logs -cu shows the tool calls and SQL queries it executed to get that result.

Grok 4 Fast. New hosted vision-enabled reasoning model from xAI that's designed to be fast and extremely competitive on price. It has a 2 million token context window and "was trained end-to-end with tool-use reinforcement learning".

It's priced at $0.20/million input tokens and $0.50/million output tokens - 15x less than Grok 4 (which is $3/million input and $15/million output). That puts it cheaper than GPT-5 mini and Gemini 2.5 Flash on llm-prices.com.

The same model weights handle reasoning and non-reasoning based on a parameter passed to the model.

I've been trying it out via my updated llm-openrouter plugin, since Grok 4 Fast is available for free on OpenRouter for a limited period.

Here's output from the non-reasoning model. This actually output an invalid SVG - I had to make a tiny manual tweak to the XML to get it to render.

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled false

(I initially ran this without that -o reasoning_enabled false flag, but then I saw that OpenRouter enable reasoning by default for that model. Here's my previous invalid result.)

And the reasoning model:

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled true

In related news, the New York Times had a story a couple of days ago about Elon's recent focus on xAI: Since Leaving Washington, Elon Musk Has Been All In on His A.I. Company.

Qwen3-Next-80B-A3B. Qwen announced two new models via their Twitter account (and here's their blog): Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking.

They make some big claims on performance:

- Qwen3-Next-80B-A3B-Instruct approaches our 235B flagship.

- Qwen3-Next-80B-A3B-Thinking outperforms Gemini-2.5-Flash-Thinking.

The name "80B-A3B" indicates 80 billion parameters of which only 3 billion are active at a time. You still need to have enough GPU-accessible RAM to hold all 80 billion in memory at once but only 3 billion will be used for each round of inference, which provides a significant speedup in responding to prompts.

More details from their tweet:

- 80B params, but only 3B activated per token → 10x cheaper training, 10x faster inference than Qwen3-32B.(esp. @ 32K+ context!)

- Hybrid Architecture: Gated DeltaNet + Gated Attention → best of speed & recall

- Ultra-sparse MoE: 512 experts, 10 routed + 1 shared

- Multi-Token Prediction → turbo-charged speculative decoding

- Beats Qwen3-32B in perf, rivals Qwen3-235B in reasoning & long-context

The models on Hugging Face are around 150GB each so I decided to try them out via OpenRouter rather than on my own laptop (Thinking, Instruct).

I'm used my llm-openrouter plugin. I installed it like this:

llm install llm-openrouter

llm keys set openrouter

# paste key here

Then found the model IDs with this command:

llm models -q next

Which output:

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-thinking

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-instruct

I have an LLM prompt template saved called pelican-svg which I created like this:

llm "Generate an SVG of a pelican riding a bicycle" --save pelican-svg

This means I can run my pelican benchmark like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-thinking

Or like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-instruct

Here's the thinking model output (exported with llm logs -c | pbcopy after I ran the prompt):

I enjoyed the "Whimsical style with smooth curves and friendly proportions (no anatomical accuracy needed for bicycle riding!)" note in the transcript.

The instruct (non-reasoning) model gave me this:

"🐧🦩 Who needs legs!?" indeed! I like that penguin-flamingo emoji sequence it's decided on for pelicans.

DeepSeek 3.1. The latest model from DeepSeek, a 685B monster (like DeepSeek v3 before it) but this time it's a hybrid reasoning model.

DeepSeek claim:

DeepSeek-V3.1-Think achieves comparable answer quality to DeepSeek-R1-0528, while responding more quickly.

Drew Breunig points out that their benchmarks show "the same scores with 25-50% fewer tokens" - at least across AIME 2025 and GPQA Diamond and LiveCodeBench.

The DeepSeek release includes prompt examples for a coding agent, a python agent and a search agent - yet more evidence that the leading AI labs have settled on those as the three most important agentic patterns for their models to support.

Here's the pelican riding a bicycle it drew me (transcript), which I ran from my phone using OpenRouter chat.

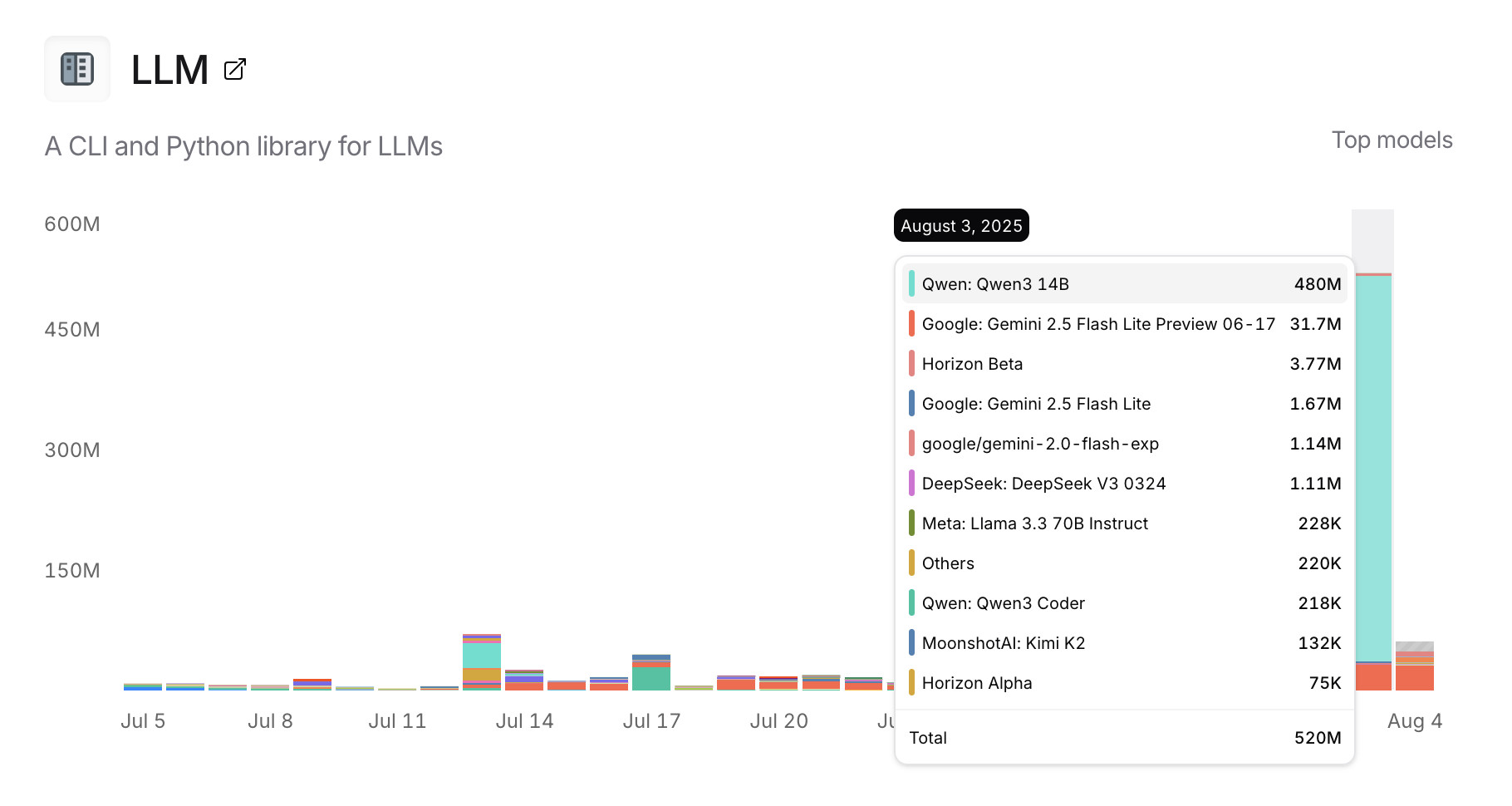

Usage charts for my LLM tool against OpenRouter. OpenRouter proxies requests to a large number of different LLMs and provides high level statistics of which models are the most popular among their users.

Tools that call OpenRouter can include HTTP-Referer and X-Title headers to credit that tool with the token usage. My llm-openrouter plugin does that here.

... which means this page displays aggregate stats across users of that plugin! Looks like someone has been running a lot of traffic through Qwen 3 14B recently.

Here are a few more model releases from today, to round out a very busy July:

- Cohere released Command A Vision, their first multi-modal (image input) LLM. Like their others it's open weights under Creative Commons Attribution Non-Commercial, so you need to license it (or use their paid API) if you want to use it commercially.

- San Francisco AI startup Deep Cogito released four open weights hybrid reasoning models, cogito-v2-preview-deepseek-671B-MoE, cogito-v2-preview-llama-405B, cogito-v2-preview-llama-109B-MoE and cogito-v2-preview-llama-70B. These follow their v1 preview models in April at smaller 3B, 8B, 14B, 32B and 70B sizes. It looks like their unique contribution here is "distilling inference-time reasoning back into the model’s parameters" - demonstrating a form of self-improvement. I haven't tried any of their models myself yet.

- Mistral released Codestral 25.08, an update to their Codestral model which is specialized for fill-in‑the‑middle autocomplete as seen in text editors like VS Code, Zed and Cursor.

- And an anonymous stealth preview model called Horizon Alpha running on OpenRouter was released yesterday and is attracting a lot of attention.

Qwen3-Coder: Agentic Coding in the World (via) It turns out that as I was typing up my notes on Qwen3-235B-A22B-Instruct-2507 the Qwen team were unleashing something much bigger:

Today, we’re announcing Qwen3-Coder, our most agentic code model to date. Qwen3-Coder is available in multiple sizes, but we’re excited to introduce its most powerful variant first: Qwen3-Coder-480B-A35B-Instruct — a 480B-parameter Mixture-of-Experts model with 35B active parameters which supports the context length of 256K tokens natively and 1M tokens with extrapolation methods, offering exceptional performance in both coding and agentic tasks.

This is another Apache 2.0 licensed open weights model, available as Qwen3-Coder-480B-A35B-Instruct and Qwen3-Coder-480B-A35B-Instruct-FP8 on Hugging Face.

I used qwen3-coder-480b-a35b-instruct on the Hyperbolic playground to run my "Generate an SVG of a pelican riding a bicycle" test prompt:

I actually slightly prefer the one I got from qwen3-235b-a22b-07-25.

It's also available as qwen3-coder on OpenRouter.

In addition to the new model, Qwen released their own take on an agentic terminal coding assistant called qwen-code, which they describe in their blog post as being "Forked from Gemini Code" (they mean gemini-cli) - which is Apache 2.0 so a fork is in keeping with the license.

They focused really hard on code performance for this release, including generating synthetic data tested using 20,000 parallel environments on Alibaba Cloud:

In the post-training phase of Qwen3-Coder, we introduced long-horizon RL (Agent RL) to encourage the model to solve real-world tasks through multi-turn interactions using tools. The key challenge of Agent RL lies in environment scaling. To address this, we built a scalable system capable of running 20,000 independent environments in parallel, leveraging Alibaba Cloud’s infrastructure. The infrastructure provides the necessary feedback for large-scale reinforcement learning and supports evaluation at scale. As a result, Qwen3-Coder achieves state-of-the-art performance among open-source models on SWE-Bench Verified without test-time scaling.

To further burnish their coding credentials, the announcement includes instructions for running their new model using both Claude Code and Cline using custom API base URLs that point to Qwen's own compatibility proxies.

Pricing for Qwen's own hosted models (through Alibaba Cloud) looks competitive. This is the first model I've seen that sets different prices for four different sizes of input:

This kind of pricing reflects how inference against longer inputs is more expensive to process. Gemini 2.5 Pro has two different prices for above or below 200,00 tokens.

Awni Hannun reports running a 4-bit quantized MLX version on a 512GB M3 Ultra Mac Studio at 24 tokens/second using 272GB of RAM, getting great results for "write a python script for a bouncing yellow ball within a square, make sure to handle collision detection properly. make the square slowly rotate. implement it in python. make sure ball stays within the square".

Qwen/Qwen3-235B-A22B-Instruct-2507. Significant new model release from Qwen, published yesterday without much fanfare. (Update: probably because they were cooking the much larger Qwen3-Coder-480B-A35B-Instruct which they released just now.)

This is a follow-up to their April release of the full Qwen 3 model family, which included a Qwen3-235B-A22B model which could handle both reasoning and non-reasoning prompts (via a /no_think toggle).

The new Qwen3-235B-A22B-Instruct-2507 ditches that mechanism - this is exclusively a non-reasoning model. It looks like Qwen have new reasoning models in the pipeline.

This new model is Apache 2 licensed and comes in two official sizes: a BF16 model (437.91GB of files on Hugging Face) and an FP8 variant (220.20GB). VentureBeat estimate that the large model needs 88GB of VRAM while the smaller one should run in ~30GB.

The benchmarks on these new models look very promising. Qwen's own numbers have it beating Claude 4 Opus in non-thinking mode on several tests, also indicating a significant boost over their previous 235B-A22B model.

I haven't seen any independent benchmark results yet. Here's what I got for "Generate an SVG of a pelican riding a bicycle", which I ran using the qwen3-235b-a22b-07-25:free on OpenRouter:

llm install llm-openrouter

llm -m openrouter/qwen/qwen3-235b-a22b-07-25:free \

"Generate an SVG of a pelican riding a bicycle"

Grok 4. Released last night, Grok 4 is now available via both API and a paid subscription for end-users.

Update: If you ask it about controversial topics it will sometimes search X for tweets "from:elonmusk"!

Key characteristics: image and text input, text output. 256,000 context length (twice that of Grok 3). It's a reasoning model where you can't see the reasoning tokens or turn off reasoning mode.

xAI released results showing Grok 4 beating other models on most of the significant benchmarks. I haven't been able to find their own written version of these (the launch was a livestream video) but here's a TechCrunch report that includes those scores. It's not clear to me if these benchmark results are for Grok 4 or Grok 4 Heavy.

I ran my own benchmark using Grok 4 via OpenRouter (since I have API keys there already).

llm -m openrouter/x-ai/grok-4 "Generate an SVG of a pelican riding a bicycle" \

-o max_tokens 10000

I then asked Grok to describe the image it had just created:

llm -m openrouter/x-ai/grok-4 -o max_tokens 10000 \

-a https://static.simonwillison.net/static/2025/grok4-pelican.png \

'describe this image'

Here's the result. It described it as a "cute, bird-like creature (resembling a duck, chick, or stylized bird)".

The most interesting independent analysis I've seen so far is this one from Artificial Analysis:

We have run our full suite of benchmarks and Grok 4 achieves an Artificial Analysis Intelligence Index of 73, ahead of OpenAI o3 at 70, Google Gemini 2.5 Pro at 70, Anthropic Claude 4 Opus at 64 and DeepSeek R1 0528 at 68.

The timing of the release is somewhat unfortunate, given that Grok 3 made headlines just this week after a clumsy system prompt update - presumably another attempt to make Grok "less woke" - caused it to start firing off antisemitic tropes and referring to itself as MechaHitler.

My best guess is that these lines in the prompt were the root of the problem:

- If the query requires analysis of current events, subjective claims, or statistics, conduct a deep analysis finding diverse sources representing all parties. Assume subjective viewpoints sourced from the media are biased. No need to repeat this to the user.

- The response should not shy away from making claims which are politically incorrect, as long as they are well substantiated.

If xAI expect developers to start building applications on top of Grok they need to do a lot better than this. Absurd self-inflicted mistakes like this do not build developer trust!

As it stands, Grok 4 isn't even accompanied by a model card.

Update: Ian Bicking makes an astute point:

It feels very credulous to ascribe what happened to a system prompt update. Other models can't be pushed into racism, Nazism, and ideating rape with a system prompt tweak.

Even if that system prompt change was responsible for unlocking this behavior, the fact that it was able to speaks to a much looser approach to model safety by xAI compared to other providers.

Update 12th July 2025: Grok posted a postmortem blaming the behavior on a different set of prompts, including "you are not afraid to offend people who are politically correct", that were not included in the system prompts they had published to their GitHub repository.

Grok 4 is competitively priced. It's $3/million for input tokens and $15/million for output tokens - the same price as Claude Sonnet 4. Once you go above 128,000 input tokens the price doubles to $6/$30 (Gemini 2.5 Pro has a similar price increase for longer inputs). I've added these prices to llm-prices.com.

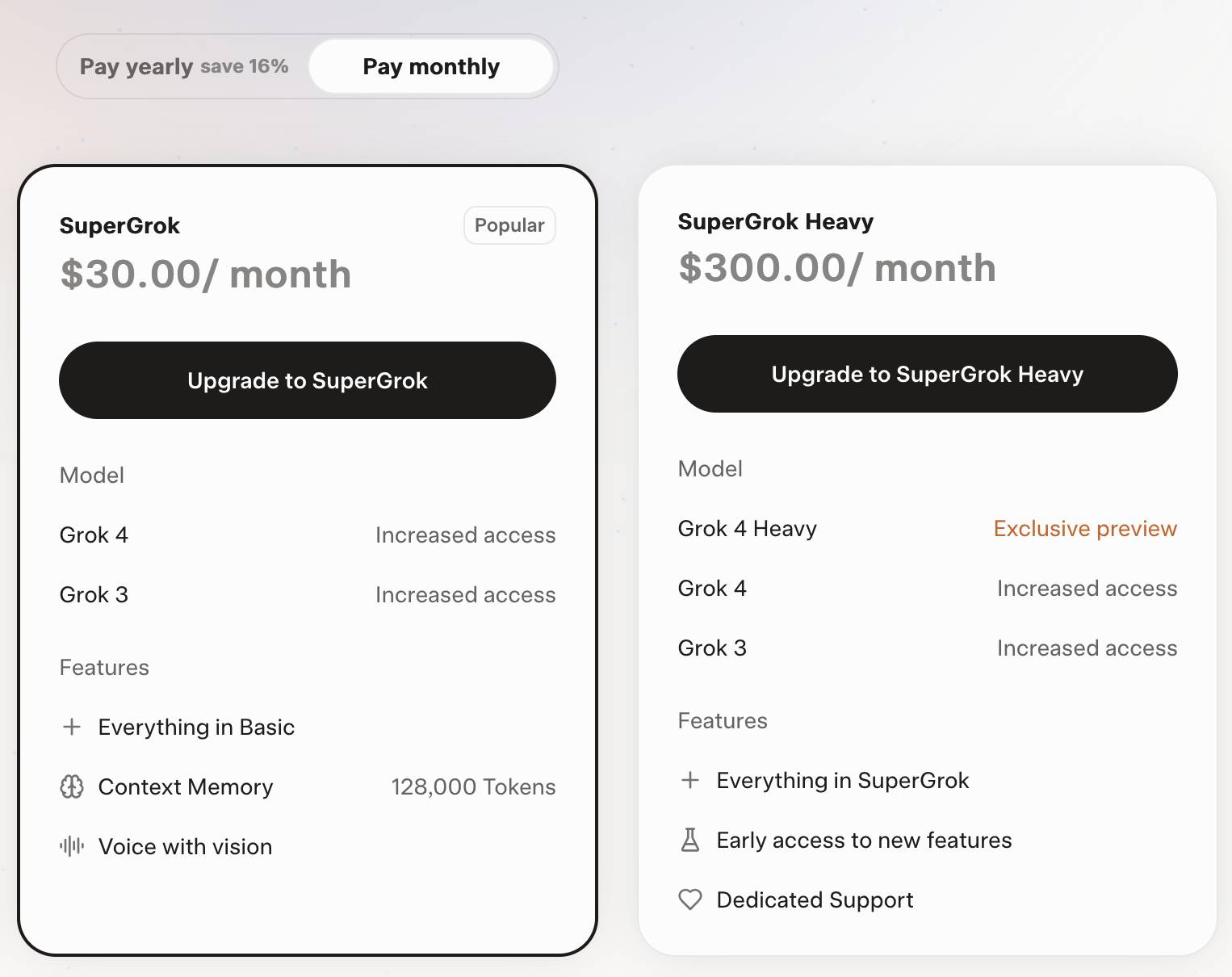

Consumers can access Grok 4 via a new $30/month or $300/year "SuperGrok" plan - or a $300/month or $3,000/year "SuperGrok Heavy" plan providing access to Grok 4 Heavy.

Become a command-line superhero with Simon Willison’s llm tool (via) Christopher Smith ran a mini hackathon in Albany New York at the weekend around uses of my LLM - the first in-person event I'm aware of dedicated to that project!

He prepared this video version of the opening talk he presented there, and it's the best video introduction I've seen yet for how to get started experimenting with LLM and its various plugins:

Christopher introduces LLM and the llm-openrouter plugin, touches on various features including fragments and schemas and also shows LLM used in conjunction with repomix to dump full source repos into an LLM at once.

Here are the notes that accompanied the talk.

I learned about cypher-alpha:free from this video - a free trial preview model currently available on OpenRouter from an anonymous vendor. I hadn't realized OpenRouter hosted these - it's similar to how LMArena often hosts anonymous previews.

Understanding the recent criticism of the Chatbot Arena

The Chatbot Arena has become the go-to place for vibes-based evaluation of LLMs over the past two years. The project, originating at UC Berkeley, is home to a large community of model enthusiasts who submit prompts to two randomly selected anonymous models and pick their favorite response. This produces an Elo score leaderboard of the “best” models, similar to how chess rankings work.

[... 1,579 words]Maybe Meta’s Llama claims to be open source because of the EU AI act

I encountered a theory a while ago that one of the reasons Meta insist on using the term “open source” for their Llama models despite the Llama license not actually conforming to the terms of the Open Source Definition is that the EU’s AI act includes special rules for open source models without requiring OSI compliance.

[... 852 words]Initial impressions of Llama 4

Dropping a model release as significant as Llama 4 on a weekend is plain unfair! So far the best place to learn about the new model family is this post on the Meta AI blog. They’ve released two new models today: Llama 4 Maverick is a 400B model (128 experts, 17B active parameters), text and image input with a 1 million token context length. Llama 4 Scout is 109B total parameters (16 experts, 17B active), also multi-modal and with a claimed 10 million token context length—an industry first.

[... 1,468 words]deepseek-ai/DeepSeek-V3-0324.

Chinese AI lab DeepSeek just released the latest version of their enormous DeepSeek v3 model, baking the release date into the name DeepSeek-V3-0324.

The license is MIT (that's new - previous DeepSeek v3 had a custom license), the README is empty and the release adds up a to a total of 641 GB of files, mostly of the form model-00035-of-000163.safetensors.

The model only came out a few hours ago and MLX developer Awni Hannun already has it running at >20 tokens/second on a 512GB M3 Ultra Mac Studio ($9,499 of ostensibly consumer-grade hardware) via mlx-lm and this mlx-community/DeepSeek-V3-0324-4bit 4bit quantization, which reduces the on-disk size to 352 GB.

I think that means if you have that machine you can run it with my llm-mlx plugin like this, but I've not tried myself!

llm mlx download-model mlx-community/DeepSeek-V3-0324-4bit

llm chat -m mlx-community/DeepSeek-V3-0324-4bit

The new model is also listed on OpenRouter. You can try a chat at openrouter.ai/chat?models=deepseek/deepseek-chat-v3-0324:free.

Here's what the chat interface gave me for "Generate an SVG of a pelican riding a bicycle":

I have two API keys with OpenRouter - one of them worked with the model, the other gave me a No endpoints found matching your data policy error - I think because I had a setting on that key disallowing models from training on my activity. The key that worked was a free key with no attached billing credentials.

For my working API key the llm-openrouter plugin let me run a prompt like this:

llm install llm-openrouter

llm keys set openrouter

# Paste key here

llm -m openrouter/deepseek/deepseek-chat-v3-0324:free "best fact about a pelican"

Here's that "best fact" - the terminal output included Markdown and an emoji combo, here that's rendered.

One of the most fascinating facts about pelicans is their unique throat pouch, called a gular sac, which can hold up to 3 gallons (11 liters) of water—three times more than their stomach!

Here’s why it’s amazing:

- Fishing Tool: They use it like a net to scoop up fish, then drain the water before swallowing.

- Cooling Mechanism: On hot days, pelicans flutter the pouch to stay cool by evaporating water.

- Built-in "Shopping Cart": Some species even use it to carry food back to their chicks.Bonus fact: Pelicans often fish cooperatively, herding fish into shallow water for an easy catch.

Would you like more cool pelican facts? 🐦🌊

In putting this post together I got Claude to build me this new tool for finding the total on-disk size of a Hugging Face repository, which is available in their API but not currently displayed on their website.

Update: Here's a notable independent benchmark from Paul Gauthier:

DeepSeek's new V3 scored 55% on aider's polyglot benchmark, significantly improving over the prior version. It's the #2 non-thinking/reasoning model, behind only Sonnet 3.7. V3 is competitive with thinking models like R1 & o3-mini.

llm-openrouter 0.4. I found out this morning that OpenRouter include support for a number of (rate-limited) free API models.

I occasionally run workshops on top of LLMs (like this one) and being able to provide students with a quick way to obtain an API key against models where they don't have to setup billing is really valuable to me!

This inspired me to upgrade my existing llm-openrouter plugin, and in doing so I closed out a bunch of open feature requests.

Consider this post the annotated release notes:

- LLM schema support for OpenRouter models that support structured output. #23

I'm trying to get support for LLM's new schema feature into as many plugins as possible.

OpenRouter's OpenAI-compatible API includes support for the response_format structured content option, but with an important caveat: it only works for some models, and if you try to use it on others it is silently ignored.

I filed an issue with OpenRouter requesting they include schema support in their machine-readable model index. For the moment LLM will let you specify schemas for unsupported models and will ignore them entirely, which isn't ideal.

llm openrouter keycommand displays information about your current API key. #24

Useful for debugging and checking the details of your key's rate limit.

llm -m ... -o online 1enables web search grounding against any model, powered by Exa. #25

OpenRouter apparently make this feature available to every one of their supported models! They're using new-to-me Exa to power this feature, an AI-focused search engine startup who appear to have built their own index with their own crawlers (according to their FAQ). This feature is currently priced by OpenRouter at $4 per 1000 results, and since 5 results are returned for every prompt that's 2 cents per prompt.

llm openrouter modelscommand for listing details of the OpenRouter models, including a--jsonoption to get JSON and a--freeoption to filter for just the free models. #26

This offers a neat way to list the available models. There are examples of the output in the comments on the issue.

- New option to specify custom provider routing:

-o provider '{JSON here}'. #17

Part of OpenRouter's USP is that it can route prompts to different providers depending on factors like latency, cost or as a fallback if your first choice is unavailable - great for if you are using open weight models like Llama which are hosted by competing companies.

The options they provide for routing are very thorough - I had initially hoped to provide a set of CLI options that covered all of these bases, but I decided instead to reuse their JSON format and forward those options directly on to the model.

2024

llm-openrouter 0.3. New release of my llm-openrouter plugin, which allows LLM to access models hosted by OpenRouter.

Quoting the release notes:

- Enable image attachments for models that support images. Thanks, Adam Montgomery. #12

- Provide async model access. #15

- Fix documentation to list correct

LLM_OPENROUTER_KEYenvironment variable. #10

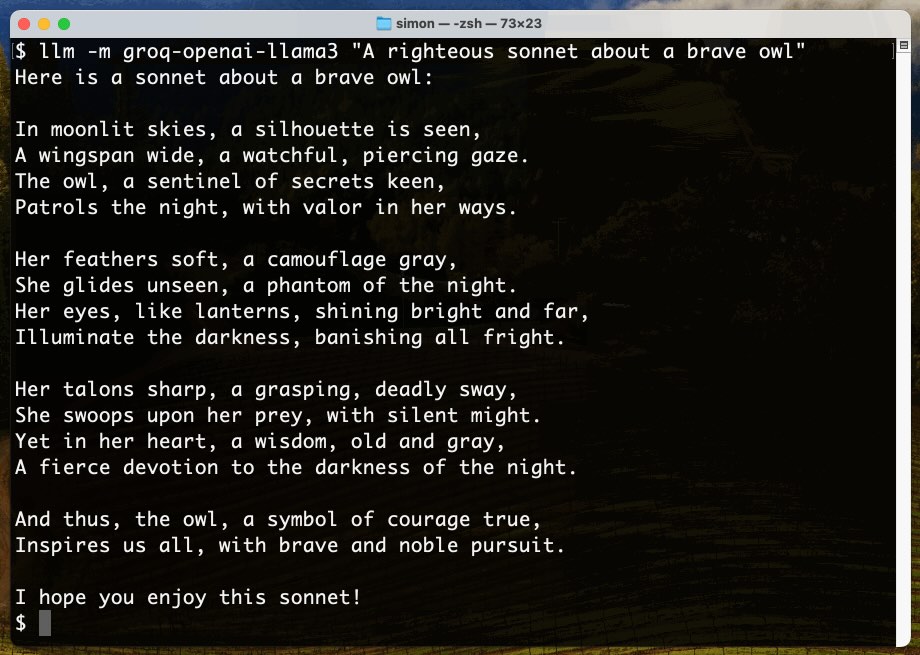

Options for accessing Llama 3 from the terminal using LLM

Llama 3 was released on Thursday. Early indications are that it’s now the best available openly licensed model—Llama 3 70b Instruct has taken joint 5th place on the LMSYS arena leaderboard, behind only Claude 3 Opus and some GPT-4s and sharing 5th place with Gemini Pro and Claude 3 Sonnet. But unlike those other models Llama 3 70b is weights available and can even be run on a (high end) laptop!

[... 1,962 words]2023

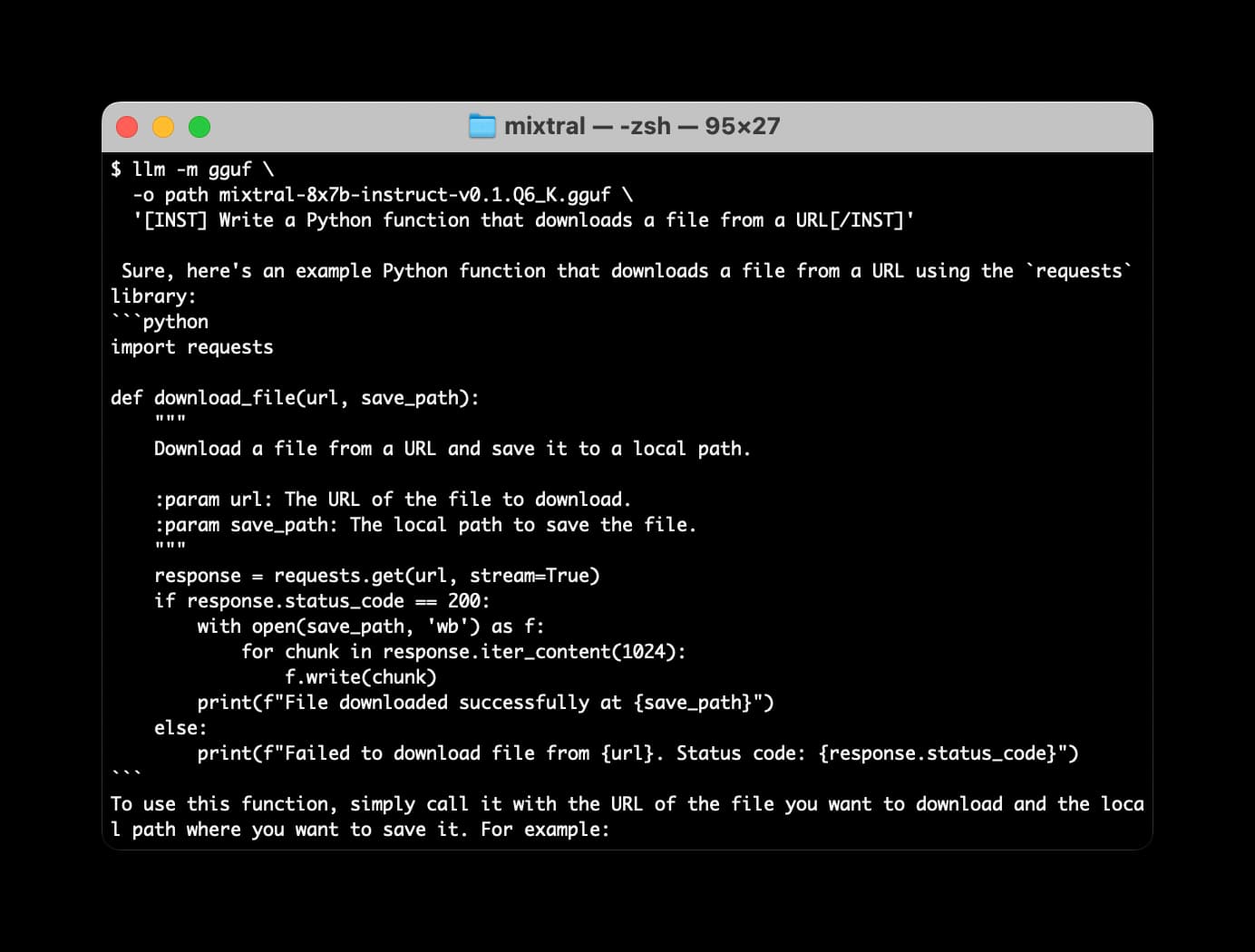

Many options for running Mistral models in your terminal using LLM

Mistral AI is the most exciting AI research lab at the moment. They’ve now released two extremely powerful smaller Large Language Models under an Apache 2 license, and have a third much larger one that’s available via their API.

[... 2,063 words]