January 2024

75 posts: 8 entries, 57 links, 10 quotes

Jan. 14, 2024

How We Executed a Critical Supply Chain Attack on PyTorch (via) Report on a now-handled supply chain attack against PyTorch which took advantage of GitHub Actions, stealing credentials from some self-hosted task runners.

The researchers first submitted a typo fix to the PyTorch repo, which gave them status as a “contributor” to that repo and meant that their future pull requests would have workflows executed without needing manual approval.

Their mitigation suggestion is to switch the option from ‘Require approval for first-time contributors’ to ‘Require approval for all outside collaborators’.

I think GitHub could help protect against this kind of attack by making it more obvious when you approve a PR to run workflows in a way that grants that contributor future access rights. I’d like an “approve this time only” button separate from “approve this run and allow future runs from user X”.

Making a Discord bot with PHP (via) Building bots for Discord used to require a long-running process that stayed connected, but a more recent change introduced slash commands via webhooks, making it much easier to write a bot that is backed by a simple request/response HTTP endpoint. Stuart Langridge explores how to build these in PHP here, but the same pattern in Python should be quite straightforward.

Jan. 15, 2024

SQLite 3.45. Released today. The big new feature is JSONB support, a new, specific-to-SQLite binary internal representation of JSON which can provide up to a 3x performance improvement for JSON-heavy operations, plus a 5-10% saving in terms of bytes stored on disk.
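Here's a minimal sketch of what using JSONB from Python might look like. It assumes the `sqlite3` module is linked against SQLite 3.45 or later for the `jsonb()` call, and falls back to the plain-text `json()` function on older builds. The `roundtrip` helper name is made up for this illustration.

```python
import sqlite3


def roundtrip(doc: str) -> str:
    """Store a JSON string in SQLite's form and read it back as text.

    On SQLite 3.45+ the value passes through the binary JSONB encoding;
    on older versions (an assumed fallback) it only goes through json().
    """
    conn = sqlite3.connect(":memory:")
    try:
        if sqlite3.sqlite_version_info >= (3, 45, 0):
            # jsonb() converts text JSON to the binary representation,
            # json() converts it back to minified text JSON.
            sql = "select json(jsonb(?))"
        else:
            sql = "select json(?)"
        return conn.execute(sql, (doc,)).fetchone()[0]
    finally:
        conn.close()
```

Calling `roundtrip('{"a": [1, 2]}')` should hand back equivalent JSON text either way, so the same calling code can work across SQLite versions.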

Slashing Data Transfer Costs in AWS by 99% (via) Brilliant trick by Daniel Kleinstein. If you have data in two availability zones in the same AWS region, transferring a TB will cost you $10 in ingress and $10 in egress at the inter-zone rates charged by AWS.

But... transferring data to an S3 bucket in that same region is free (aside from S3 storage costs). And buckets are available with free transfer to all availability zones in their region, which means that TB of data can be transferred between availability zones for mere cents of S3 storage costs provided you delete the data as soon as it’s transferred.
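The arithmetic can be sketched roughly like this. The inter-zone rate comes from the $10 per TB figures above. The S3 storage price of $0.023 per GB-month is my assumption (it varies by region and storage class), and request charges are ignored entirely.

```python
# Assumed prices in USD; check current AWS pricing before relying on these.
INTER_AZ_RATE_PER_GB = 0.01       # charged once on egress and once on ingress
S3_STORAGE_PER_GB_MONTH = 0.023   # assumption: S3 Standard in a typical region
HOURS_PER_MONTH = 730


def direct_transfer_cost(gb: float) -> float:
    """Cost of sending data straight between two availability zones."""
    return gb * INTER_AZ_RATE_PER_GB * 2


def s3_relay_cost(gb: float, hours_stored: float) -> float:
    """Storage-only cost of parking the data in S3 until it is downloaded."""
    return gb * S3_STORAGE_PER_GB_MONTH * hours_stored / HOURS_PER_MONTH
```

Under those assumptions `direct_transfer_cost(1000)` comes to about $20, while `s3_relay_cost(1000, 1)` for a TB held for one hour is a few cents.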

Jan. 16, 2024

Daniel Situnayake explains TinyML in a Hacker News comment. Daniel worked on TensorFlow Lite at Google and co-wrote the TinyML O’Reilly book. He just posted a multi-paragraph comment on Hacker News explaining the term and describing some of the recent innovations in that space.

“TinyML means running machine learning on low power embedded devices, like microcontrollers, with constrained compute and memory.”

You likely have a TinyML system in your pocket right now: every cellphone has a low power DSP chip running a deep learning model for keyword spotting, so you can say "Hey Google" or "Hey Siri" and have it wake up on-demand without draining your battery. It’s an increasingly pervasive technology. [...]

It’s astonishing what is possible today: real time computer vision on microcontrollers, on-device speech transcription, denoising and upscaling of digital signals. Generative AI is happening, too, assuming you can find a way to squeeze your models down to size. We are an unsexy field compared to our hype-fueled neighbors, but the entire world is already filling up with this stuff and it’s only the very beginning. Edge AI is being rapidly deployed in a ton of fields: medical sensing, wearables, manufacturing, supply chain, health and safety, wildlife conservation, sports, energy, built environment—we see new applications every day.

On being listed in the court document as one of the artists whose work was used to train Midjourney, alongside 4,000 of my closest friends (via) Poignant webcomic from Cat and Girl.

I want to make my little thing and put it out in the world and hope that sometimes it means something to somebody else.

Without exploiting anyone.

And without being exploited.

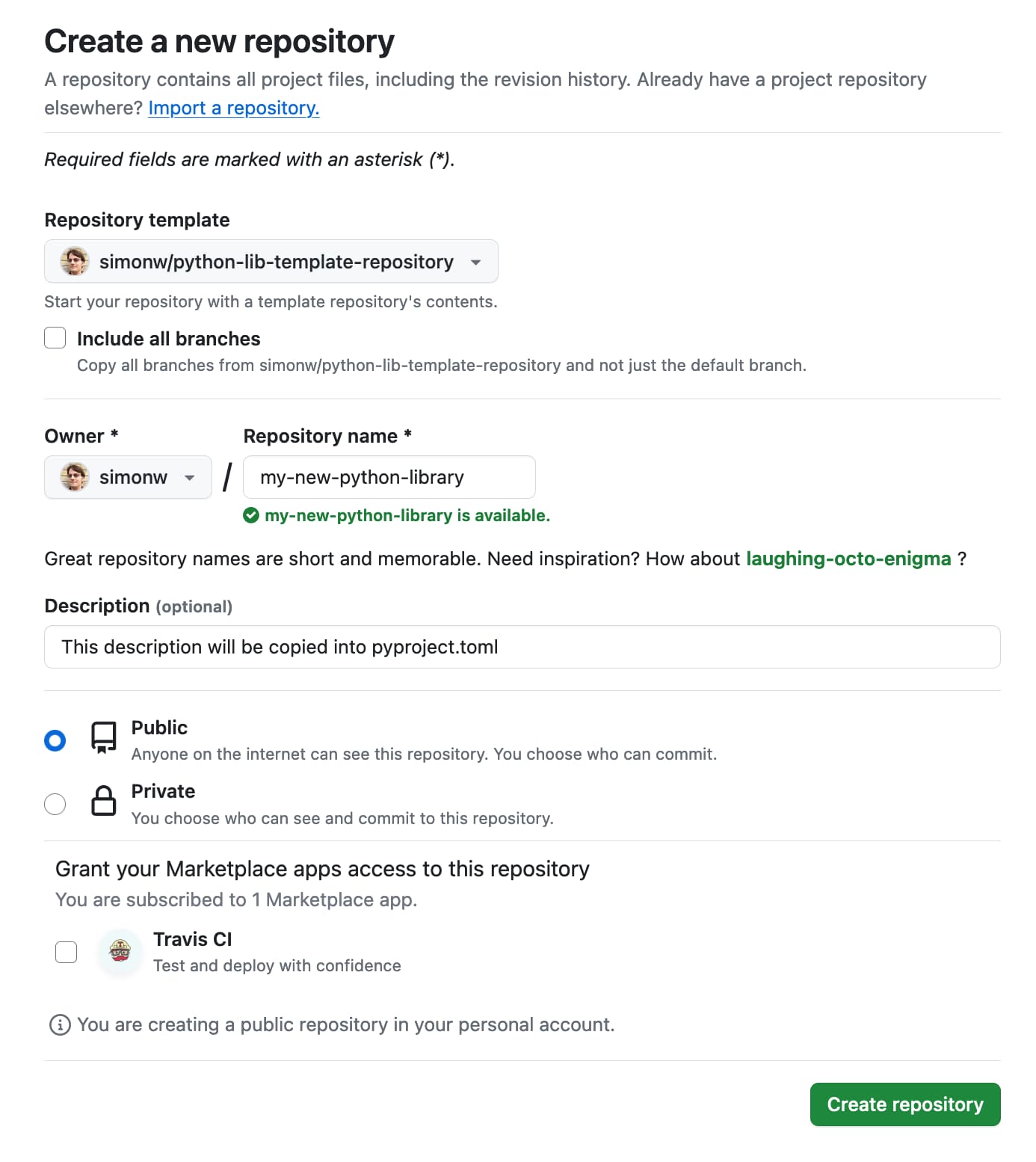

Publish Python packages to PyPI with a python-lib cookiecutter template and GitHub Actions

I use cookiecutter to start almost all of my Python projects. It helps me quickly generate a skeleton of a project with my preferred directory structure and configured tools.

[... 686 words]

Jan. 17, 2024

Open Source LLMs with Simon Willison. I was invited to the Oxide and Friends weekly audio show (previously on Twitter Spaces, now broadcast using Discord) to talk about open source LLMs, and to respond to a very poorly considered op-ed calling for them to be regulated as “uniquely dangerous”. It was a really fun conversation, now available to listen to as a podcast or YouTube audio-only video.

Talking about Open Source LLMs on Oxide and Friends

I recorded an episode of the Oxide and Friends podcast on Monday, talking with Bryan Cantrill and Adam Leventhal about Open Source LLMs.

[... 1,995 words]

Jan. 18, 2024

Tools are the things we build that we don't ship - but that very much affect the artifact that we develop.

It can be tempting to either shy away from developing tooling entirely or (in larger organizations) to dedicate an entire organization to it.

In my experience, tooling should be built by those using it.

This is especially true for tools that improve the artifact by improving understanding: the best time to develop a debugger is when debugging!

Jan. 19, 2024

AWS Fixes Data Exfiltration Attack Angle in Amazon Q for Business. An indirect prompt injection (where the AWS Q bot consumes malicious instructions) could result in Q outputting a markdown link to a malicious site that exfiltrated the previous chat history in a query string.

Amazon fixed it by preventing links from being output at all—apparently Microsoft 365 Chat uses the same mitigation.
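A rough sketch of that kind of mitigation, in Python: strip markdown links and images from model output before rendering it, keeping only the visible label. This is an illustration of the idea, not Amazon's or Microsoft's actual implementation, and the function name is hypothetical. It doesn't attempt to handle bare URLs or HTML.

```python
import re

# Matches [label](target) and ![alt](target); the target is discarded.
MARKDOWN_LINK = re.compile(r"!?\[([^\]]*)\]\([^)]*\)")


def strip_markdown_links(text: str) -> str:
    """Replace markdown links and images with their label text only."""
    return MARKDOWN_LINK.sub(r"\1", text)
```

With this in place an injected `[click here](https://attacker.example/?q=...)` would render as plain “click here”, so there is no URL for the chat history to leak through.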

Jan. 20, 2024

DSF calls for applicants for a Django Fellow. The Django Software Foundation employs contractors to manage code reviews and releases, responsibly handle security issues, coach new contributors, triage tickets and more.

This is the Django Fellows program, which is now ten years old and has proven enormously impactful.

Mariusz Felisiak is moving on after five years and the DSF are calling for new applicants, open to anywhere in the world.

And now, in Anno Domini 2024, Google has lost its edge in search. There are plenty of things it can’t find. There are compelling alternatives. To me this feels like a big inflection point, because around the stumbling feet of the Big Tech dinosaurs, the Web’s mammals, agile and flexible, still scurry. They exhibit creative energy and strongly-flavored voices, and those voices still sometimes find and reinforce each other without being sock puppets of shareholder-value-focused private empires.

— Tim Bray

Jan. 21, 2024

NYT Flash-based visualizations work again. The New York Times are using the open source Ruffle Flash emulator - built using Rust, compiled to WebAssembly - to get their old archived data visualization interactives working again.

Weeknotes: datasette-test, datasette-build, PSF board retreat

I wrote about Page caching and custom templates in my last weeknotes. This week I wrapped up that work, modifying datasette-edit-templates to be compatible with the jinja2_environment_from_request() plugin hook. This means you can edit templates directly in Datasette itself and have those served either for the full instance or just for the instance when served from a specific domain (the Datasette Cloud case).

[... 757 words]

Jan. 22, 2024

We estimate the supply-side value of widely-used OSS is $4.15 billion, but that the demand-side value is much larger at $8.8 trillion. We find that firms would need to spend 3.5 times more on software than they currently do if OSS did not exist. [...] Further, 96% of the demand-side value is created by only 5% of OSS developers.

— The Value of Open Source Software, Harvard Business School Strategy Unit

Python packaging must be getting better—a datapoint (via) Luke Plant reports on a recent project he developed on Linux using a requirements.txt file and some complex binary dependencies—Qt5 and VTK—and when he tried to run it on Windows... it worked! No modifications required.

I think Python’s packaging system has never been more effective... provided you know how to use it. The learning curve is still too high, which I think accounts for the bulk of complaints about it today.

Jan. 23, 2024

Prompt Lookup Decoding (via) Really neat LLM optimization trick by Apoorv Saxena, who observed that it’s common for sequences of tokens in LLM input to be reflected by the output—snippets included in a summarization, for example.

Apoorv’s code performs a simple search for such prefixes and uses them to populate a set of suggested candidate IDs during LLM token generation.

The result appears to provide around a 2.4x speed-up in generating outputs!
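To make the idea concrete, here's a simplified pure-Python sketch of the lookup step. It is my own illustration rather than Apoorv's code: it takes the last few token IDs, looks for an earlier occurrence of the same n-gram, and proposes the tokens that followed it as candidates. The function name and default parameters are assumptions.

```python
def find_candidate_tokens(token_ids, ngram_size=3, num_candidates=10):
    """Propose likely next tokens by matching the trailing n-gram earlier on.

    token_ids is the full sequence so far (prompt plus generated tokens).
    Returns up to num_candidates token IDs, or an empty list if no match.
    """
    if len(token_ids) <= ngram_size:
        return []
    tail = token_ids[-ngram_size:]
    # Stop before the final position so the tail never matches itself.
    for start in range(len(token_ids) - ngram_size):
        if token_ids[start:start + ngram_size] == tail:
            end = start + ngram_size
            candidates = token_ids[end:end + num_candidates]
            if candidates:
                return candidates
    return []
```

In a real decoder those candidates would still be verified by the model in a single forward pass, with any that don't match what the model would have produced being thrown away.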

The Open Source Sustainability Crisis (via) Chad Whitacre: “What is Open Source sustainability? Why do I say it is in crisis? My answers are that sustainability is when people are getting paid without jumping through hoops, and we’re in a crisis because people aren’t and they’re burning out.”

I really like Chad’s focus on “jumping through hoops” in this piece. It’s possible to build a financially sustainable project today, but it requires picking one or more activities that aren’t directly aligned with working on the core project: raising VC and starting a company, building a hosted SaaS platform and becoming a sysadmin, publishing books and courses and becoming a content author.

The dream is that open source maintainers can invest all of their effort in their projects and make a good living from that work.

Jan. 24, 2024

Find a level of abstraction that works for what you need to do. When you have trouble there, look beneath that abstraction. You won’t be seeing how things really work, you’ll be seeing a lower-level abstraction that could be helpful. Sometimes what you need will be an abstraction one level up. Is your Python loop too slow? Perhaps you need a C loop. Or perhaps you need numpy array operations.

You (probably) don’t need to learn C.

Google Research: Lumiere. The latest in text-to-video from Google Research, described as “a text-to-video diffusion model designed for synthesizing videos that portray realistic, diverse and coherent motion”.

Most existing text-to-video models generate keyframes and then use other models to fill in the gaps, which frequently leads to a lack of coherency. Lumiere “generates the full temporal duration of the video at once”, which avoids this problem.

Disappointingly but unsurprisingly the paper doesn’t go into much detail on the training data, beyond stating “We train our T2V model on a dataset containing 30M videos along with their text caption. The videos are 80 frames long at 16 fps (5 seconds)”.

The examples of “stylized generation” which combine a text prompt with a single reference image for style are particularly impressive.

Django Chat: Datasette, LLMs, and Django. I’m the guest on the latest episode of the Django Chat podcast. We talked about Datasette, LLMs, the New York Times OpenAI lawsuit, the Python Software Foundation and all sorts of other topics.

Jan. 25, 2024

Fairly Trained launches certification for generative AI models that respect creators’ rights. I've been using the term "vegan models" for a while to describe machine learning models that have been trained in a way that avoids using unlicensed, copyrighted data. Fairly Trained is a new non-profit initiative that aims to encourage such models through a "certification" stamp of approval.

The team is led by Ed Newton-Rex, who was previously VP of Audio at Stability AI before leaving over ethical concerns with the way models were being trained.

Inside .git. This single diagram filled in all sorts of gaps in my mental model of how git actually works under the hood.

iOS 17.4 Introduces Alternative App Marketplaces With No Commission in EU. The most exciting detail tucked away in this story about new EU policies from iOS 17.4 onwards: “Apple is giving app developers in the EU access to NFC and allowing for alternative browser engines, so WebKit will not be required for third-party browser apps.”

Finally, browser engine competition on iOS! I really hope this results in a future worldwide policy allowing such engines.

Portable EPUBs. Will Crichton digs into the reasons people still prefer PDF over HTML as a format for sharing digital documents, concluding that the key issues are that HTML documents are not fully self-contained and may not be rendered consistently.

He proposes “Portable EPUBs” as the solution, defining a subset of the existing EPUB standard with some additional restrictions around avoiding loading extra assets over a network, sticking to a smaller (as-yet undefined) subset of HTML and encouraging interactive components to be built using self-contained Web Components.

Will also built his own lightweight EPUB reading system, called Bene—which is used to render this Portable EPUBs article. It provides a “download” link in the top right which produces the .epub file itself.

There’s a lot to like here. I’m constantly infuriated at the number of documents out there that are PDFs but really should be web pages (academic papers are a particularly bad example here), so I’m very excited by any initiatives that might help push things in the other direction.

Jan. 26, 2024

Did an AI write that hour-long “George Carlin” special? I’m not convinced. Two weeks ago "Dudesy", a comedy podcast which claims to be controlled and written by an AI, released an extremely poor taste hour long YouTube video called "George Carlin: I’m Glad I’m Dead". They used voice cloning to produce a stand-up comedy set featuring the late George Carlin, claiming to also use AI to write all of the content after training it on everything in the Carlin back catalog.

Unsurprisingly this has resulted in a massive amount of angry coverage, including from Carlin's own daughter (the Carlin estate have filed a lawsuit). Resurrecting people without their permission is clearly abhorrent.

But... did AI even write this? The author of this Ars Technica piece, Kyle Orland, started digging in.

It turns out the Dudesy podcast has been running with this premise since it launched in early 2022 - long before any LLM was capable of producing a well-crafted joke. The structure of the Carlin set goes way beyond anything I've seen from even GPT-4. And in a follow-up podcast episode, Dudesy co-star Chad Kultgen gave an O. J. Simpson-style "if I did it" semi-confession that described a much more likely authorship process.

I think this is a case of a human-pretending-to-be-an-AI - an interesting twist, given that the story started out being about an-AI-imitating-a-human.

I consulted with Kyle on this piece, and got a couple of neat quotes in there:

Either they have genuinely trained a custom model that can generate jokes better than any model produced by any other AI researcher in the world... or they're still doing the same bit they started back in 2022 [...]

The real story here is… everyone is ready to believe that AI can do things, even if it can't. In this case, it's pretty clear what's going on if you look at the wider context of the show in question. But anyone without that context, [a viewer] is much more likely to believe that the whole thing was AI-generated… thanks to the massive ramp up in the quality of AI output we have seen in the past 12 months.

Update 27th January 2024: The NY Times confirmed via a spokesperson for the podcast that the entire special had been written by Chad Kultgen, not by an AI.

Exploring codespaces as temporary dev containers (via) DJ Adams shows how to use GitHub Codespaces without interacting with their web UI at all: you can run “gh codespace create --repo ...” to create a new instance, then SSH directly into it using “gh codespace ssh --codespace codespacename”.

This turns Codespaces into an extremely convenient way to spin up a scratch on-demand Linux container where you pay for just the time that the machine spends running.
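If you wanted to script this from Python, a small sketch might build the two `gh` command lines quoted above as argument lists. The helper names are hypothetical and the repo and codespace names are placeholders you would supply yourself. Nothing here runs `gh`; you could pass the lists to `subprocess.run()` once the GitHub CLI is installed and authenticated.

```python
import shlex


def create_command(repo: str) -> list[str]:
    """Argument list for creating a codespace from a repository."""
    return ["gh", "codespace", "create", "--repo", repo]


def ssh_command(codespace_name: str) -> list[str]:
    """Argument list for opening an SSH session to a named codespace."""
    return ["gh", "codespace", "ssh", "--codespace", codespace_name]


def as_shell(args: list[str]) -> str:
    """Render an argument list as a safely quoted shell string for display."""
    return shlex.join(args)
```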

LLM 0.13: The annotated release notes

I just released LLM 0.13, the latest version of my LLM command-line tool for working with Large Language Models—both via APIs and running models locally using plugins.

[... 1,278 words]