Series: LLMs on personal devices

Large language models that can run on our own devices open up exciting new ways in which these tools can be used.

Large language models are having their Stable Diffusion moment

The open release of the Stable Diffusion image generation model back in August 2022 was a key moment. I wrote how Stable Diffusion is a really big deal at the time.

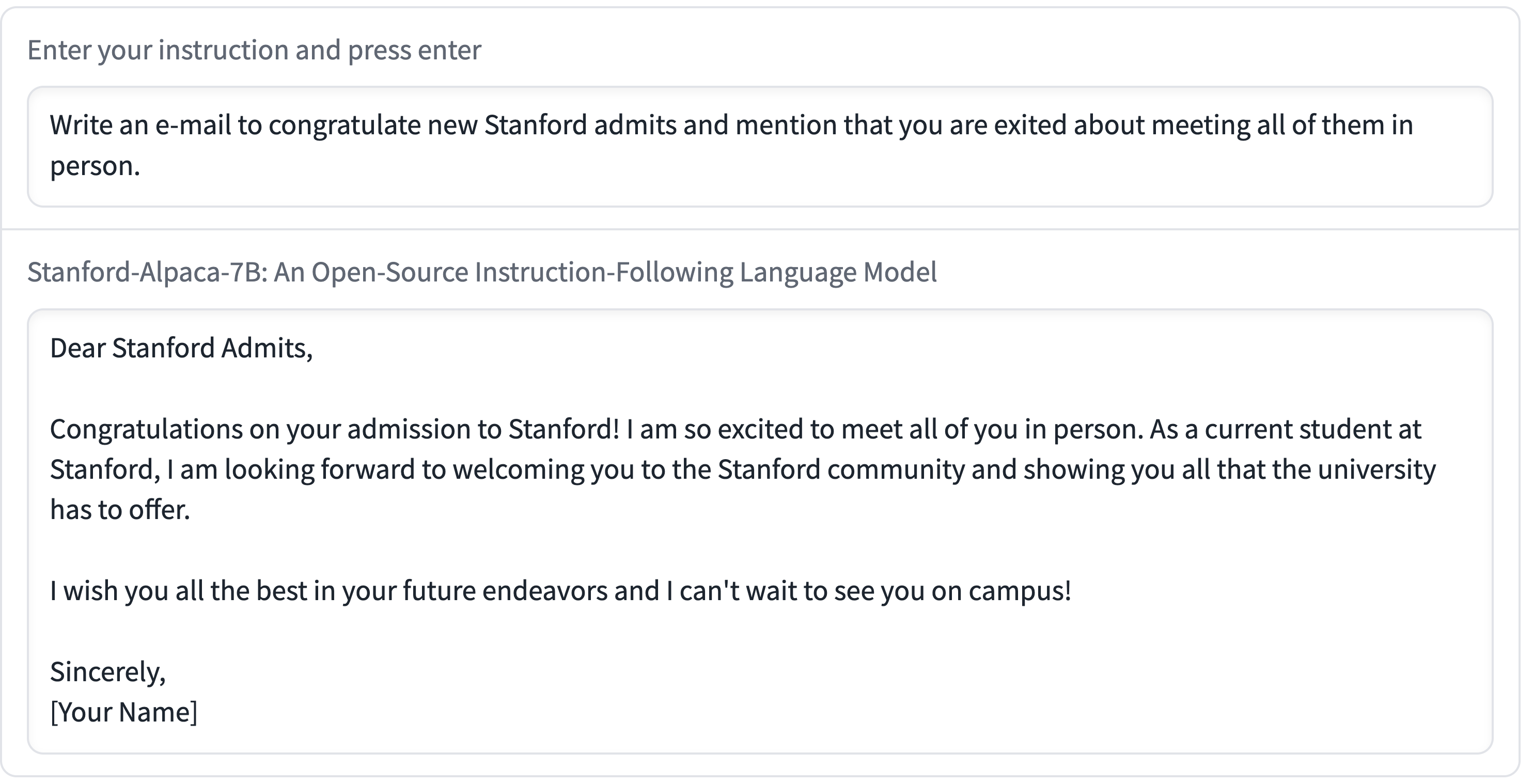

[... 1,815 words]Stanford Alpaca, and the acceleration of on-device large language model development

On Saturday 11th March I wrote about how Large language models are having their Stable Diffusion moment. Today is Monday. Let’s look at what’s happened in the past three days.

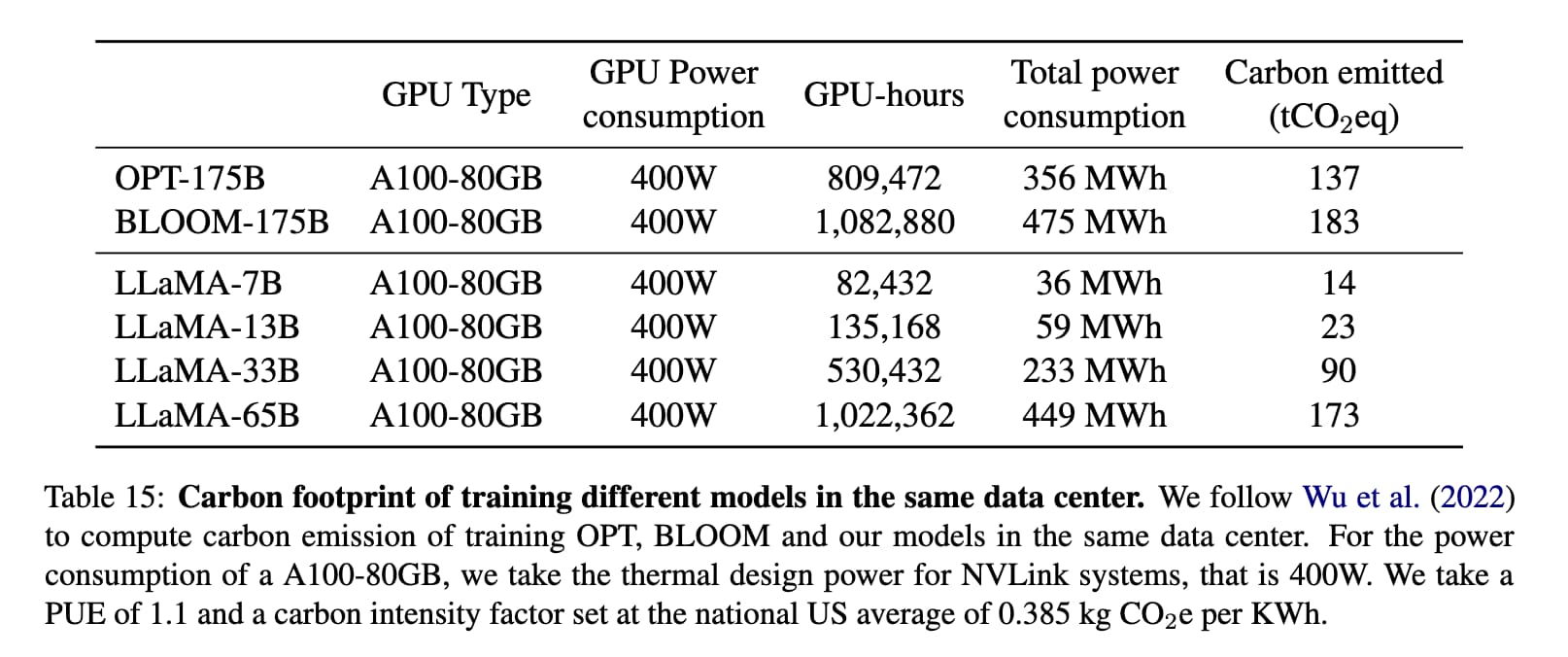

[... 2,055 words]Could you train a ChatGPT-beating model for $85,000 and run it in a browser?

I think it’s now possible to train a large language model with similar functionality to GPT-3 for $85,000. And I think we might soon be able to run the resulting model entirely in the browser, and give it capabilities that leapfrog it ahead of ChatGPT.

[... 1,751 words]Thoughts on AI safety in this era of increasingly powerful open source LLMs

This morning, VentureBeat published a story by Sharon Goldman: With a wave of new LLMs, open source AI is having a moment — and a red-hot debate. It covers the explosion in activity around openly available Large Language Models such as LLaMA—a trend I’ve been tracking in my own series LLMs on personal devices—and talks about their implications with respect to AI safety.

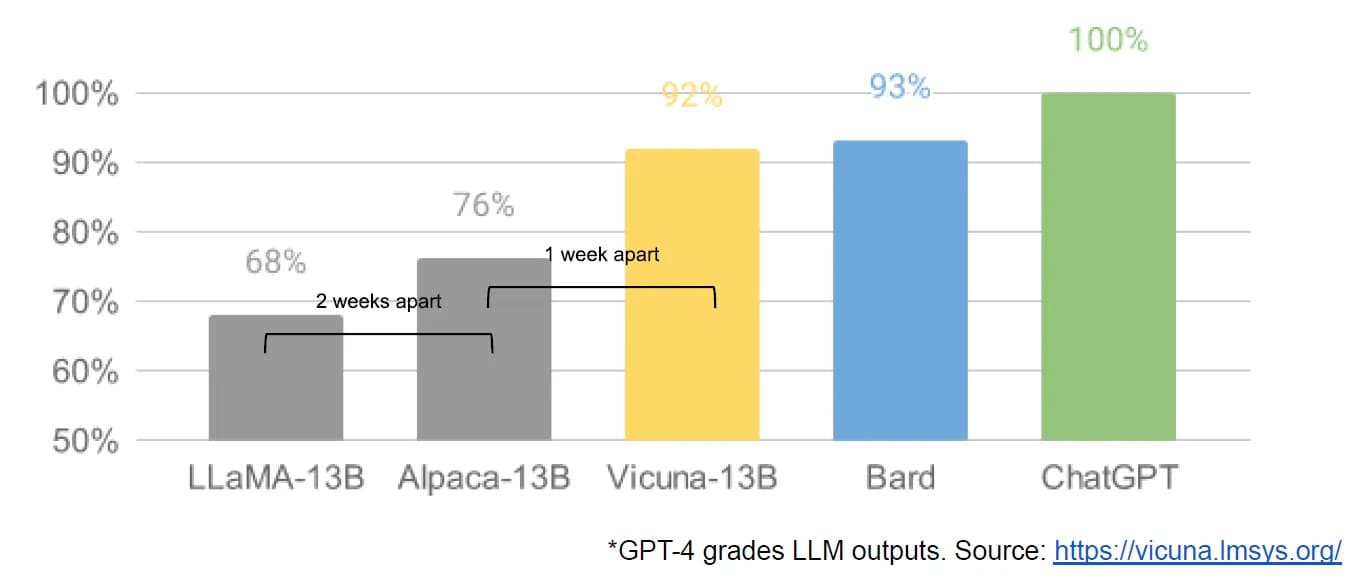

[... 782 words]Web LLM runs the vicuna-7b Large Language Model entirely in your browser, and it’s very impressive

A month ago I asked Could you train a ChatGPT-beating model for $85,000 and run it in a browser?. $85,000 was a hypothetical training cost for LLaMA 7B plus Stanford Alpaca. “Run it in a browser” was based on the fact that Web Stable Diffusion runs a 1.9GB Stable Diffusion model in a browser, so maybe it’s not such a big leap to run a small Large Language Model there as well.

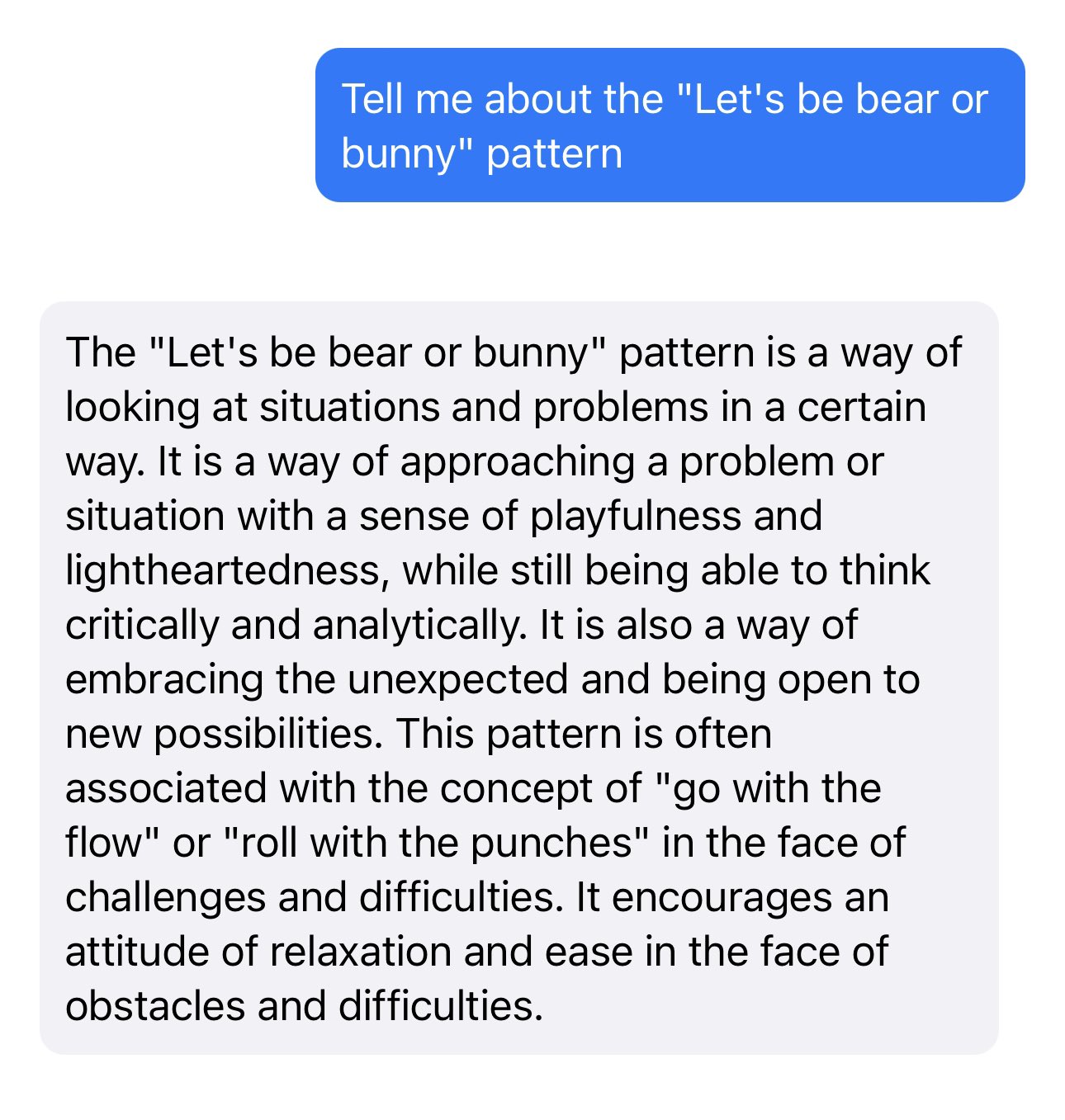

[... 2,276 words]Let’s be bear or bunny

The Machine Learning Compilation group (MLC) are my favourite team of AI researchers at the moment.

[... 599 words]Leaked Google document: “We Have No Moat, And Neither Does OpenAI”

SemiAnalysis published something of a bombshell leaked document this morning: Google “We Have No Moat, And Neither Does OpenAI”.

[... 1,073 words]Run Llama 2 on your own Mac using LLM and Homebrew

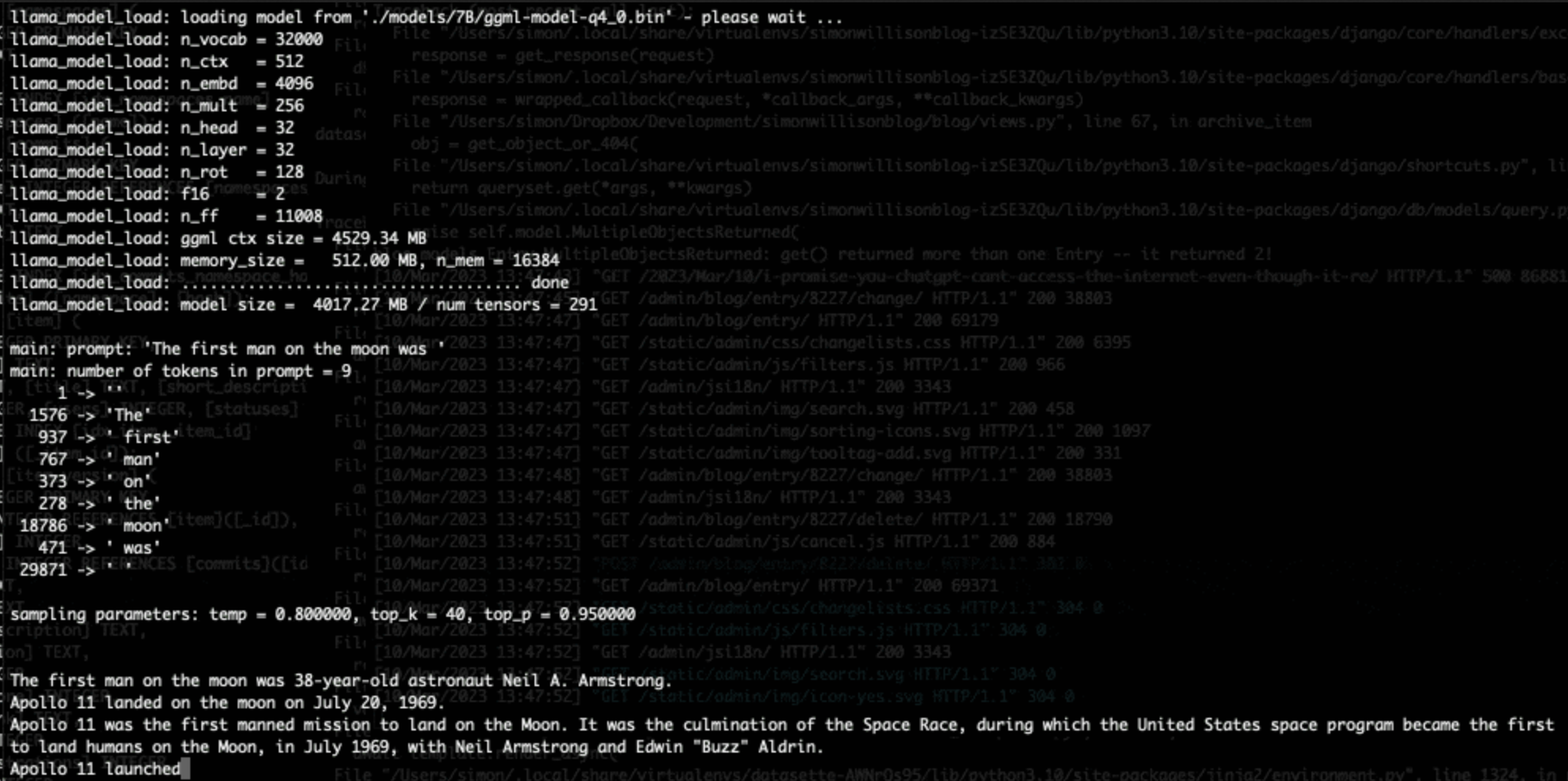

Llama 2 is the latest commercially usable openly licensed Large Language Model, released by Meta AI a few weeks ago. I just released a new plugin for my LLM utility that adds support for Llama 2 and many other llama-cpp compatible models.

[... 1,423 words]llamafile is the new best way to run an LLM on your own computer

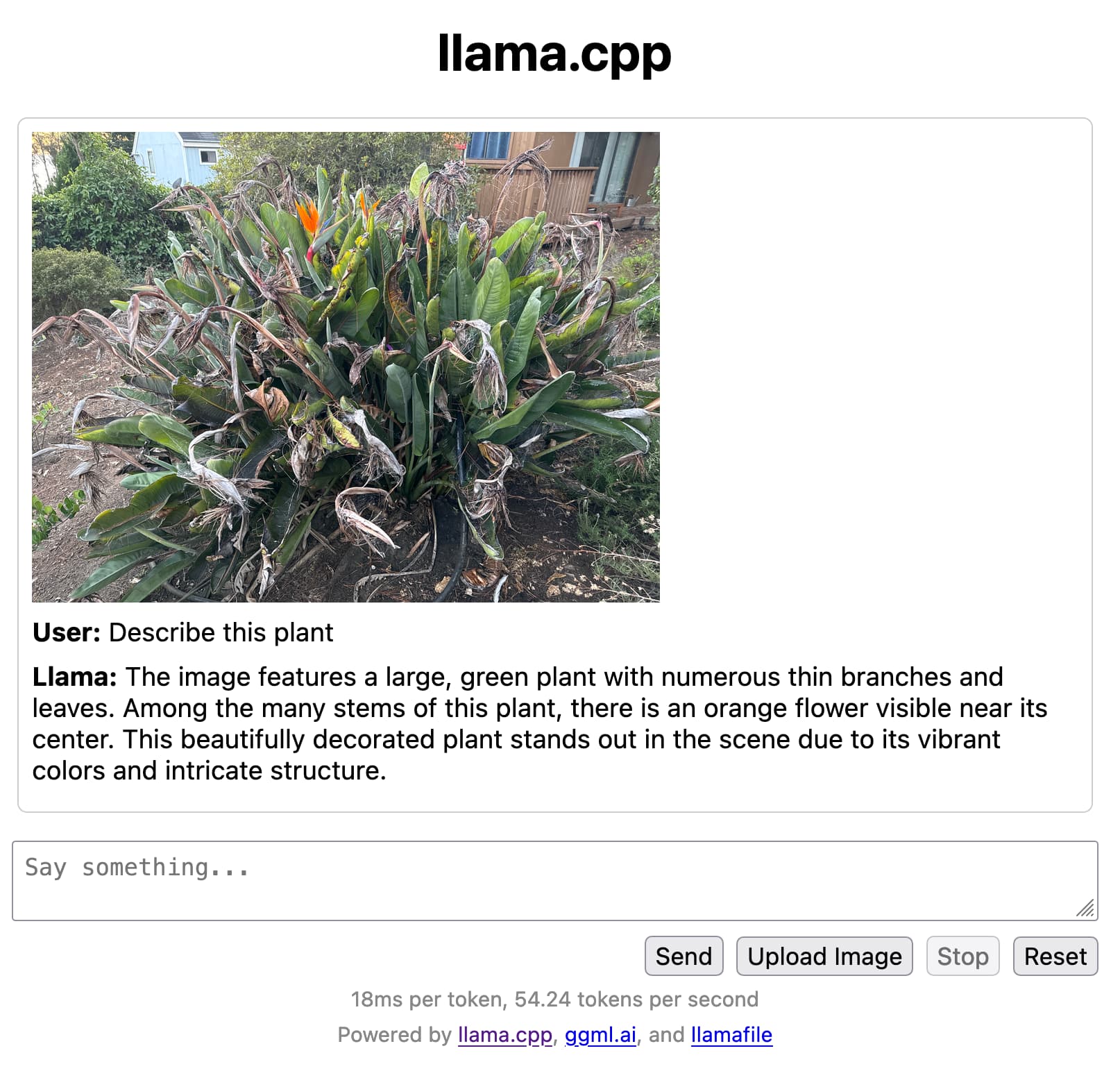

Mozilla’s innovation group and Justine Tunney just released llamafile, and I think it’s now the single best way to get started running Large Language Models (think your own local copy of ChatGPT) on your own computer.

[... 650 words]Many options for running Mistral models in your terminal using LLM

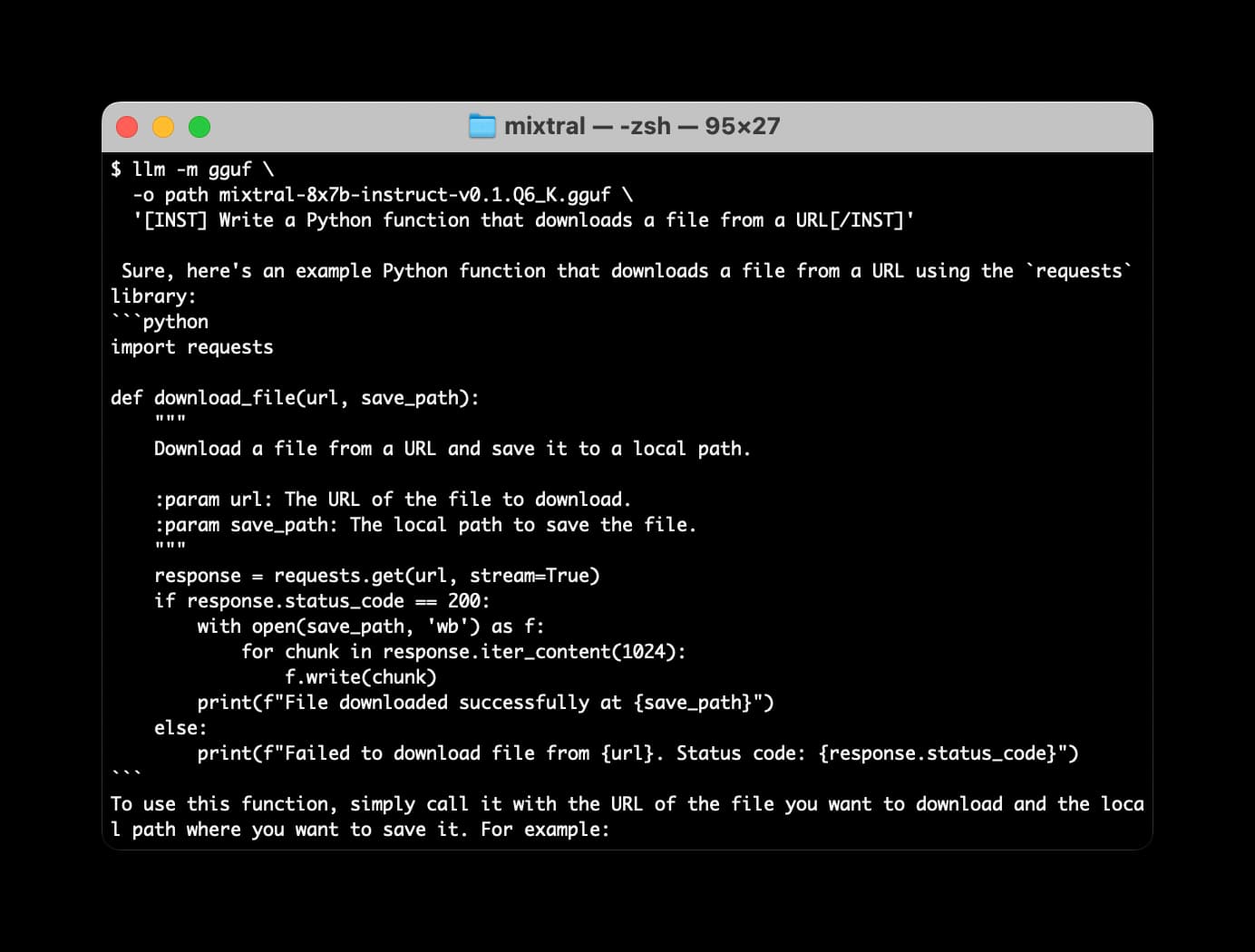

Mistral AI is the most exciting AI research lab at the moment. They’ve now released two extremely powerful smaller Large Language Models under an Apache 2 license, and have a third much larger one that’s available via their API.

[... 2,063 words]Options for accessing Llama 3 from the terminal using LLM

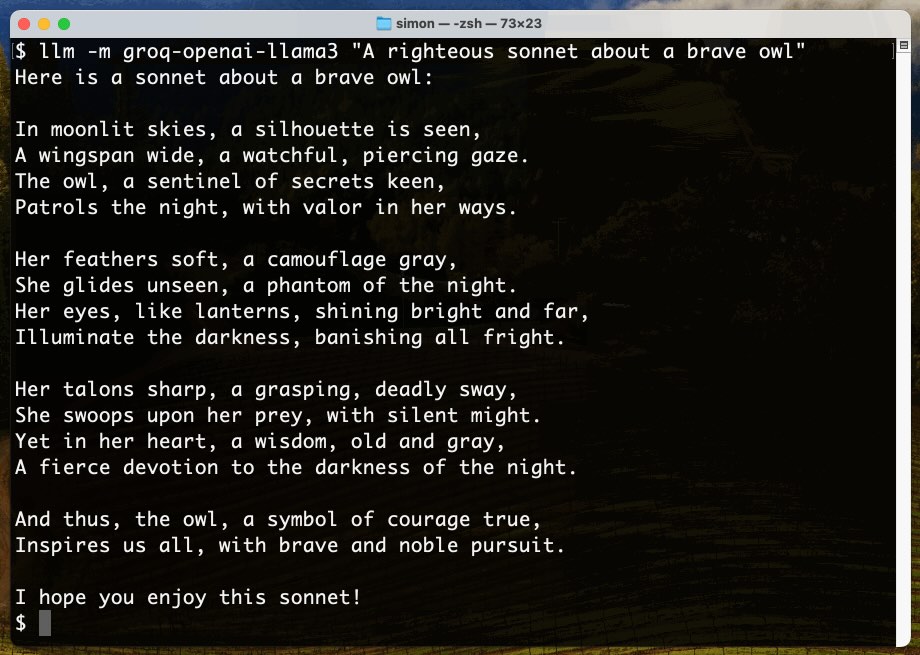

Llama 3 was released on Thursday. Early indications are that it’s now the best available openly licensed model—Llama 3 70b Instruct has taken joint 5th place on the LMSYS arena leaderboard, behind only Claude 3 Opus and some GPT-4s and sharing 5th place with Gemini Pro and Claude 3 Sonnet. But unlike those other models Llama 3 70b is weights available and can even be run on a (high end) laptop!

[... 1,962 words]Running Llama 3.2 Vision and Phi-3.5 Vision on a Mac with mistral.rs

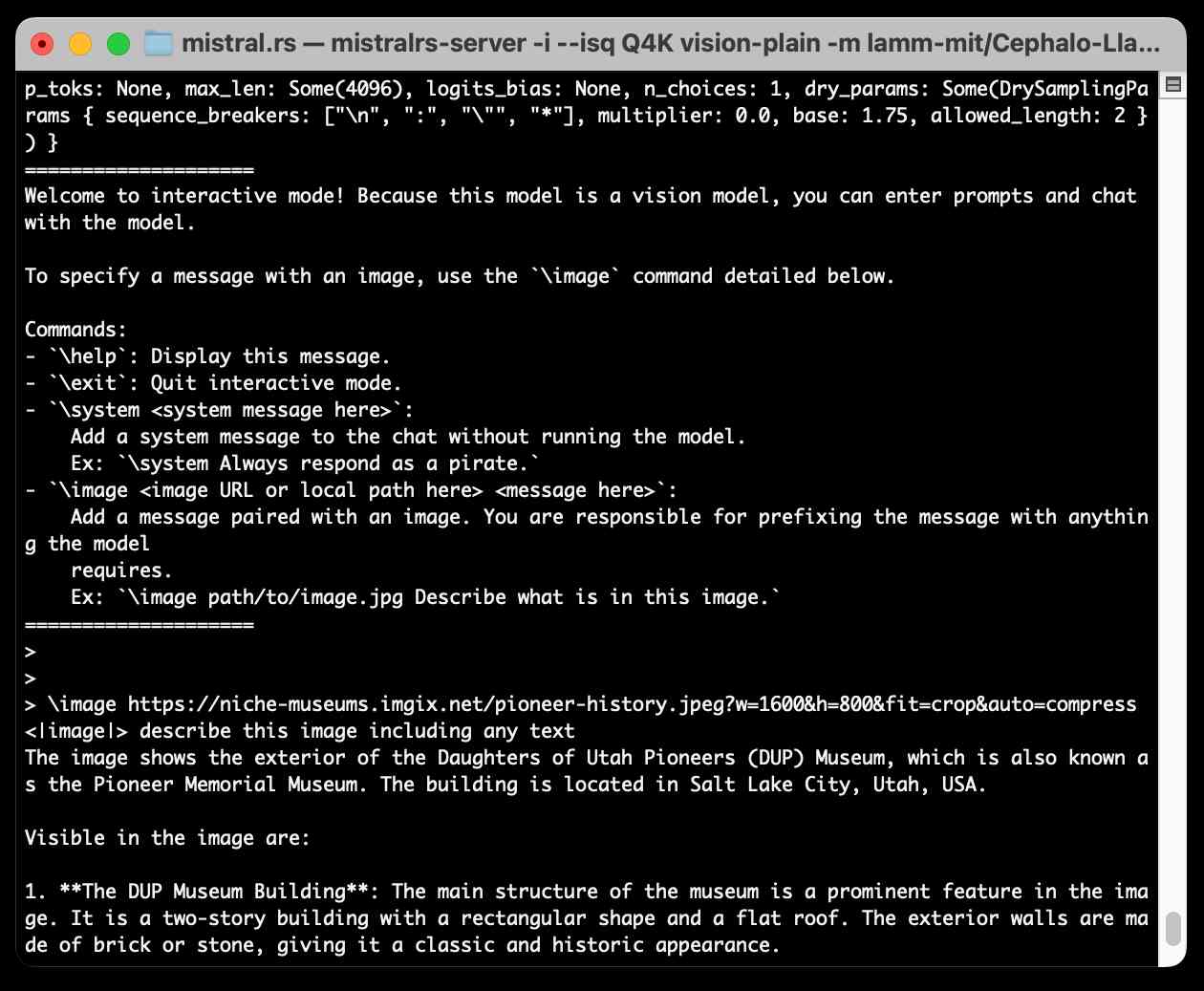

mistral.rs is an LLM inference library written in Rust by Eric Buehler. Today I figured out how to use it to run the Llama 3.2 Vision and Phi-3.5 Vision models on my Mac.

[... 1,231 words]Qwen2.5-Coder-32B is an LLM that can code well that runs on my Mac

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

[... 697 words]I can now run a GPT-4 class model on my laptop

Meta’s new Llama 3.3 70B is a genuinely GPT-4 class Large Language Model that runs on my laptop.

[... 2,905 words]DeepSeek-R1 and exploring DeepSeek-R1-Distill-Llama-8B

DeepSeek are the Chinese AI lab who dropped the best currently available open weights LLM on Christmas day, DeepSeek v3. That model was trained in part using their unreleased R1 “reasoning” model. Today they’ve released R1 itself, along with a whole family of new models derived from that base.

[... 1,276 words]Using pip to install a Large Language Model that’s under 100MB

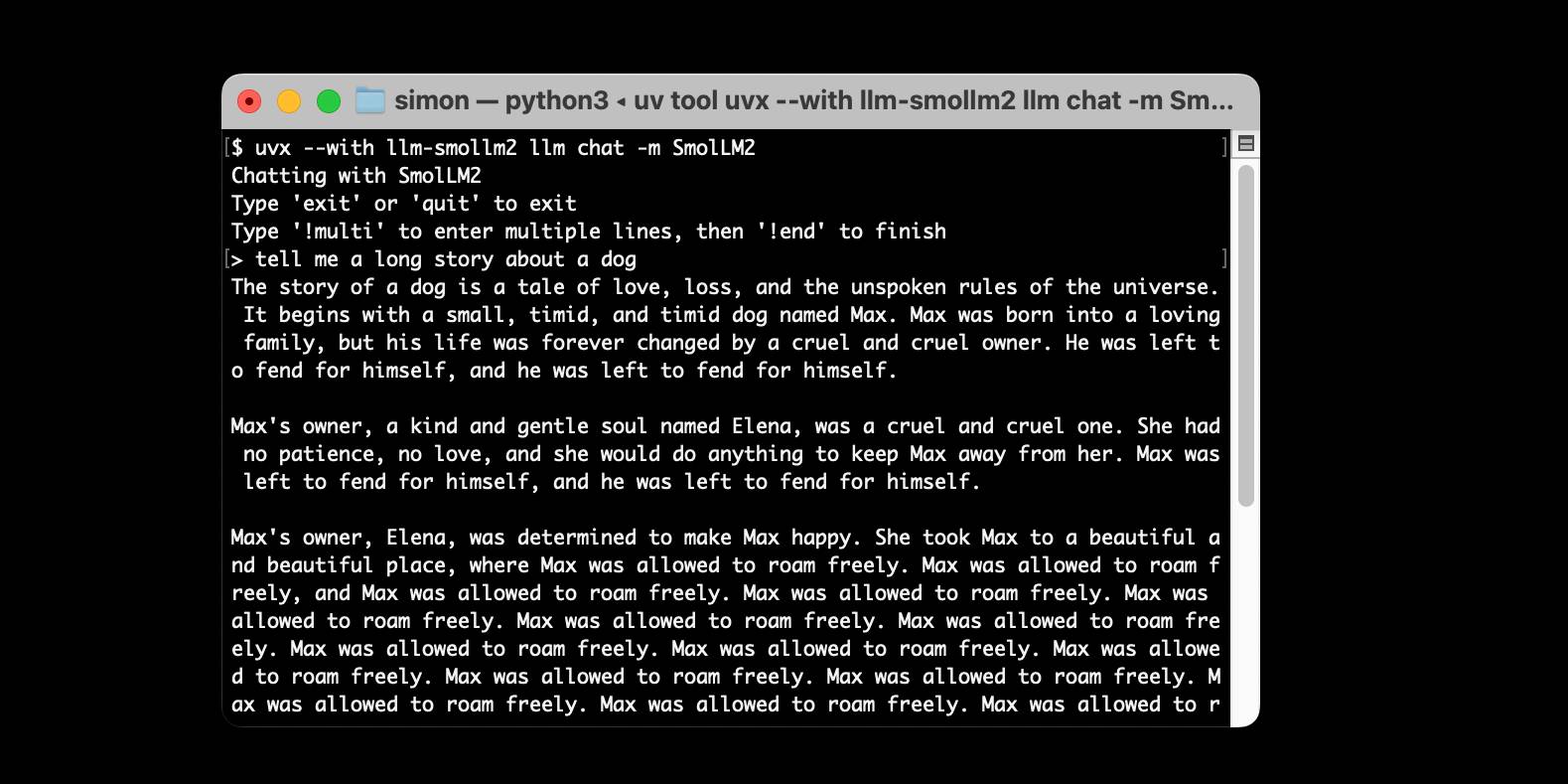

I just released llm-smollm2, a new plugin for LLM that bundles a quantized copy of the SmolLM2-135M-Instruct LLM inside of the Python package.

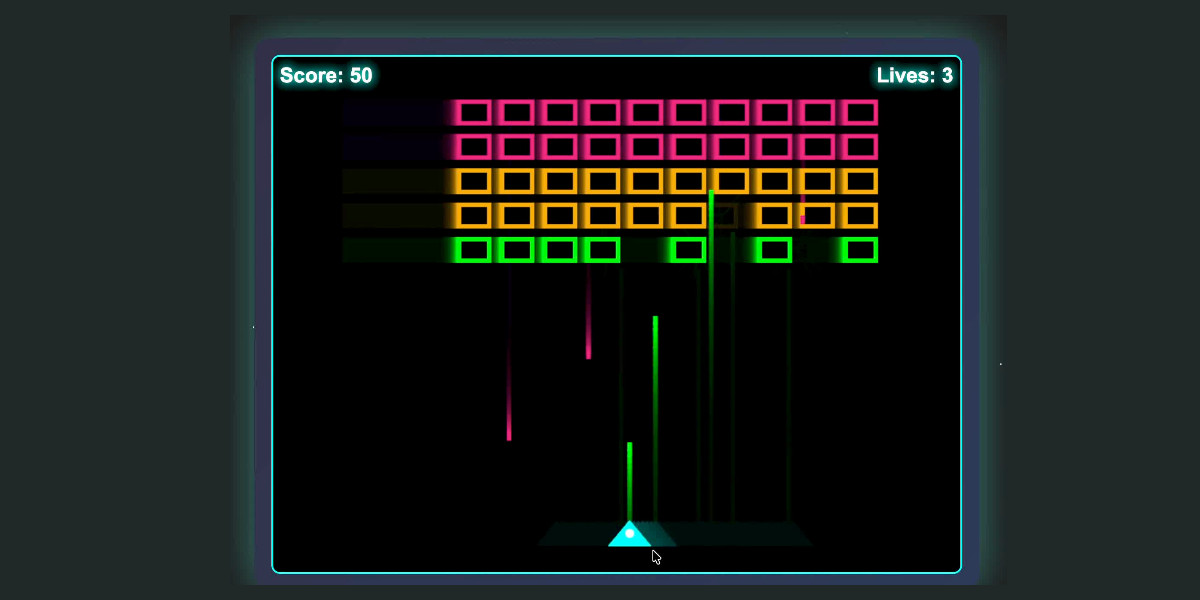

[... 1,553 words]My 2.5 year old laptop can write Space Invaders in JavaScript now, using GLM-4.5 Air and MLX

I wrote about the new GLM-4.5 model family yesterday—new open weight (MIT licensed) models from Z.ai in China which their benchmarks claim score highly in coding even against models such as Claude Sonnet 4.

[... 685 words]Trying out Qwen3 Coder Flash using LM Studio and Open WebUI and LLM

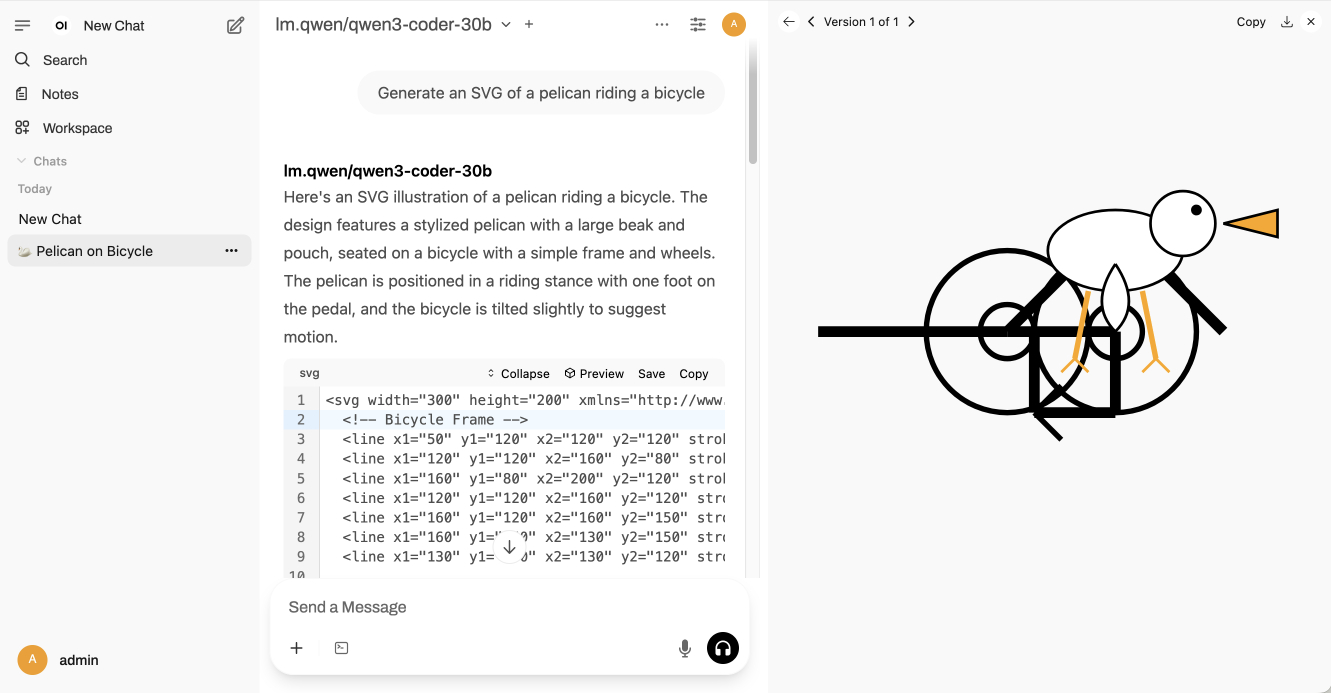

Qwen just released their sixth model(!) of this July called Qwen3-Coder-30B-A3B-Instruct—listed as Qwen3-Coder-Flash in their chat.qwen.ai interface.

[... 1,390 words]OpenAI’s new open weight (Apache 2) models are really good

The long promised OpenAI open weight models are here, and they are very impressive. They’re available under proper open source licenses—Apache 2.0—and come in two sizes, 120B and 20B.

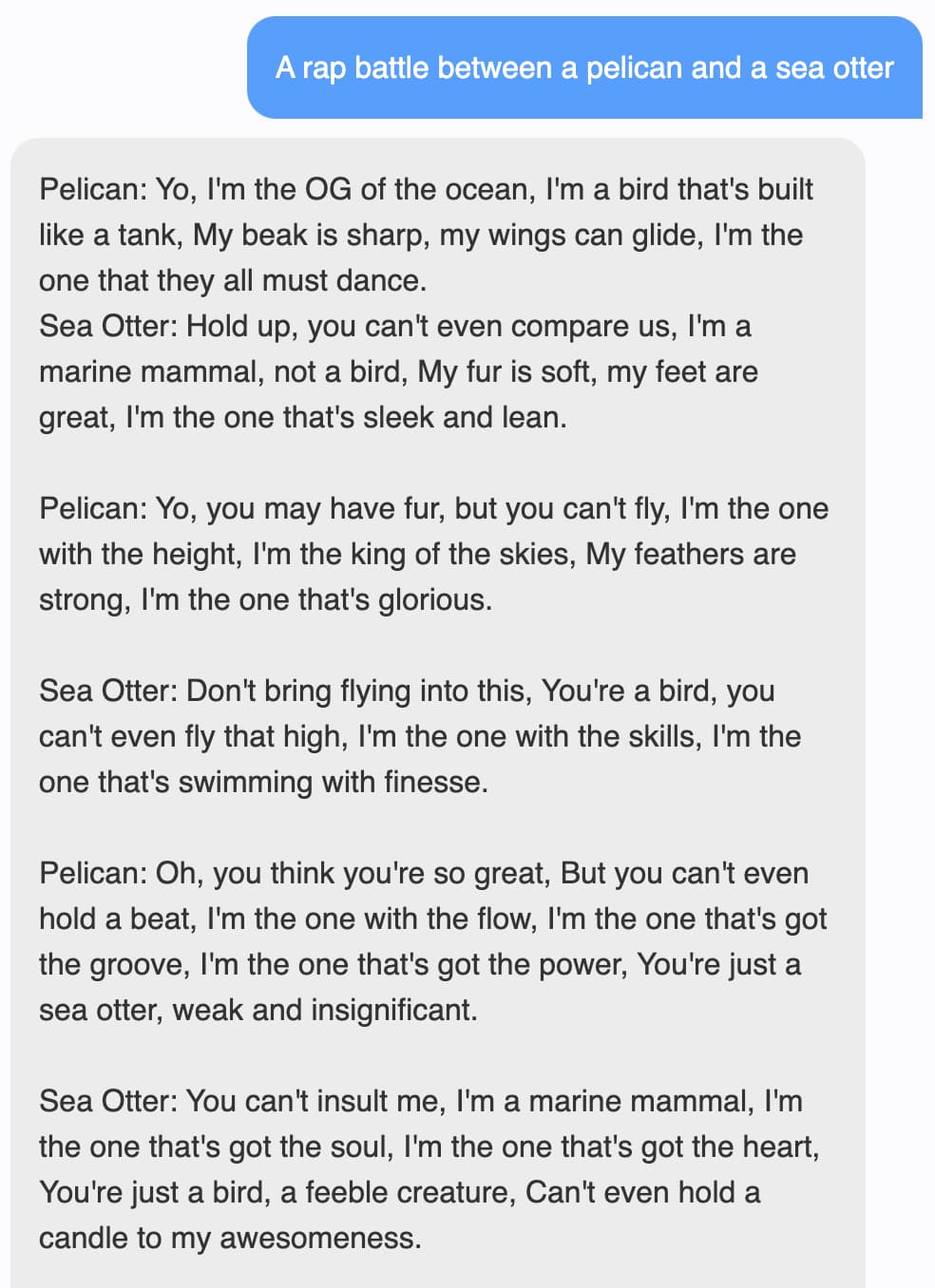

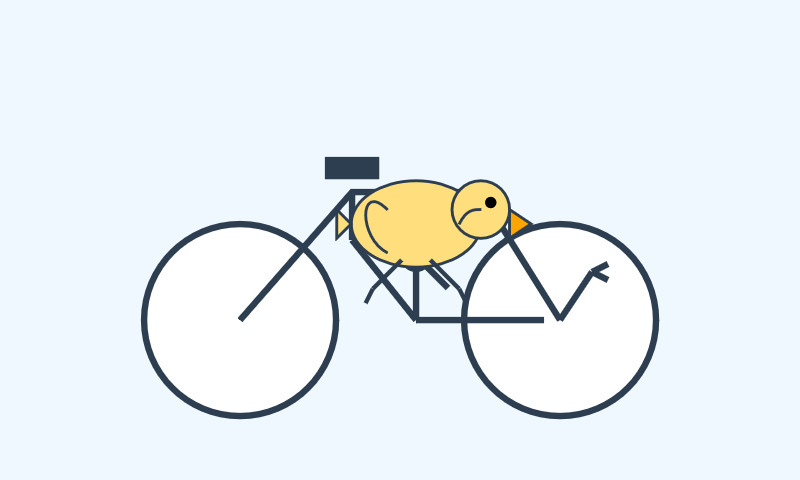

[... 2,771 words]Qwen3-4B-Thinking: “This is art—pelicans don’t ride bikes!”

I’ve fallen a few days behind keeping up with Qwen. They released two new 4B models last week: Qwen3-4B-Instruct-2507 and its thinking equivalent Qwen3-4B-Thinking-2507.

[... 991 words]