1,824 posts tagged “ai”

"AI is whatever hasn't been done yet"—Larry Tesler

2025

Initial impressions of Llama 4

Dropping a model release as significant as Llama 4 on a weekend is plain unfair! So far the best place to learn about the new model family is this post on the Meta AI blog. They’ve released two new models today: Llama 4 Maverick is a 400B model (128 experts, 17B active parameters), text and image input with a 1 million token context length. Llama 4 Scout is 109B total parameters (16 experts, 17B active), also multi-modal and with a claimed 10 million token context length—an industry first.

[... 1,468 words]

The Llama series have been re-designed to use state of the art mixture-of-experts (MoE) architecture and natively trained with multimodality. We’re dropping Llama 4 Scout & Llama 4 Maverick, and previewing Llama 4 Behemoth.

📌 Llama 4 Scout is highest performing small model with 17B activated parameters with 16 experts. It’s crazy fast, natively multimodal, and very smart. It achieves an industry leading 10M+ token context window and can also run on a single GPU!

📌 Llama 4 Maverick is the best multimodal model in its class, beating GPT-4o and Gemini 2.0 Flash across a broad range of widely reported benchmarks, while achieving comparable results to the new DeepSeek v3 on reasoning and coding – at less than half the active parameters. It offers a best-in-class performance to cost ratio with an experimental chat version scoring ELO of 1417 on LMArena. It can also run on a single host!

📌 Previewing Llama 4 Behemoth, our most powerful model yet and among the world’s smartest LLMs. Llama 4 Behemoth outperforms GPT4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks. Llama 4 Behemoth is still training, and we’re excited to share more details about it even while it’s still in flight.

— Ahmed Al-Dahle, VP and Head of GenAI at Meta

change of plans: we are going to release o3 and o4-mini after all, probably in a couple of weeks, and then do GPT-5 in a few months

Gemini 2.5 Pro Preview pricing (via) Google's Gemini 2.5 Pro is currently the top model on LM Arena and, from my own testing, a superb model for OCR, audio transcription and long-context coding.

You can now pay for it!

The new gemini-2.5-pro-preview-03-25 model ID is priced like this:

- Prompts less than 200,000 tokens: $1.25/million tokens for input, $10/million for output

- Prompts more than 200,000 tokens (up to the 1,048,576 max): $2.50/million for input, $15/million for output

This is priced at around the same level as Gemini 1.5 Pro ($1.25/$5 for input/output below 128,000 tokens, $2.50/$10 above 128,000 tokens), is cheaper than GPT-4o for shorter prompts ($2.50/$10) and is cheaper than Claude 3.7 Sonnet ($3/$15).

Gemini 2.5 Pro is a reasoning model, and invisible reasoning tokens are included in the output token count. I just tried prompting "hi" and it charged me 2 tokens for input and 623 for output, of which 613 were "thinking" tokens. That still adds up to just 0.6232 cents (less than a cent) using my LLM pricing calculator which I updated to support the new model just now.
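The arithmetic behind that figure can be sketched in a few lines of Python. This is only an illustration of the price list above, not the code behind the pricing calculator; the function name is made up, and treating a prompt of exactly 200,000 tokens as the cheaper tier is an assumption, since the price list only says "less than" and "more than".

```python
# Prices in dollars per million tokens, copied from the list above.
SHORT_PROMPT = {"input": 1.25, "output": 10.00}  # prompts up to 200,000 tokens
LONG_PROMPT = {"input": 2.50, "output": 15.00}   # prompts over 200,000 tokens
THRESHOLD = 200_000


def cost_in_cents(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of a single request in cents.

    Assumption: a prompt of exactly 200,000 tokens gets the cheaper rate.
    output_tokens must include the invisible "thinking" tokens.
    """
    prices = SHORT_PROMPT if input_tokens <= THRESHOLD else LONG_PROMPT
    dollars = (
        input_tokens * prices["input"] + output_tokens * prices["output"]
    ) / 1_000_000
    return dollars * 100


# The "hi" prompt described above: 2 input tokens, 623 output tokens.
print(f"{cost_in_cents(2, 623):.3f} cents")
```

Almost all of that cost comes from the 623 output tokens, which is why reasoning models can cost more than their prompt length suggests.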

I released llm-gemini 0.17 this morning adding support for the new model:

llm install -U llm-gemini

llm -m gemini-2.5-pro-preview-03-25 hi

Note that the model continues to be available for free under the previous gemini-2.5-pro-exp-03-25 model ID:

llm -m gemini-2.5-pro-exp-03-25 hi

The free tier is "used to improve our products", the paid tier is not.

Rate limits for the paid model vary by tier: 150/minute and 1,000/day for Tier 1 (billing configured), 1,000/minute and 50,000/day for Tier 2 ($250 total spend) and 2,000/minute and unlimited/day for Tier 3 ($1,000 total spend). Meanwhile the free tier continues to limit you to 5 requests per minute and 25 per day.

Google are retiring the Gemini 2.0 Pro preview entirely in favour of 2.5.

smartfunc. Vincent D. Warmerdam built this ingenious wrapper around my LLM Python library which lets you build LLM wrapper functions using a decorator and a docstring:

from smartfunc import backend

@backend("gpt-4o")
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""
    pass

summary = generate_summary(long_text)

It works with LLM plugins so the same pattern should work against Gemini, Claude and hundreds of others, including local models.

It integrates with more recent LLM features too, including async support and schemas, by introspecting the function signature:

from pydantic import BaseModel
from smartfunc import async_backend

class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]

@async_backend("gpt-4o-mini")
async def generate_poke_desc(text: str) -> Summary:
    "Describe the following pokemon: {{ text }}"
    pass

pokemon = await generate_poke_desc("pikachu")

Vincent also recorded a 12 minute video walking through the implementation and showing how it uses Pydantic, Python's inspect module and typing.get_type_hints() function.
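To give a feel for the general technique, here is a minimal sketch of a decorator that turns a docstring into a prompt by introspecting the function. It is not smartfunc's implementation: `sketch_backend`, `call_model` and `echo_model` are hypothetical names, the placeholder substitution is a plain regular expression rather than a real template engine, and the "model" is a stub that echoes its prompt so the example runs without any API key.

```python
import functools
import inspect
import re
from typing import Callable, get_type_hints


def sketch_backend(model_name: str, call_model: Callable[[str, str], str]):
    """Decorator factory: use the wrapped function's docstring as a prompt.

    call_model(model_name, prompt) is a hypothetical stand-in for a real
    LLM call. This is an illustration, not smartfunc's actual code.
    """
    def decorator(func):
        signature = inspect.signature(func)
        hints = get_type_hints(func)  # parameter and return annotations
        template = inspect.getdoc(func) or ""

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Map positional and keyword arguments onto parameter names.
            bound = signature.bind(*args, **kwargs)
            bound.apply_defaults()
            # Replace each {{ name }} placeholder with the matching argument.
            prompt = re.sub(
                r"\{\{\s*(\w+)\s*\}\}",
                lambda match: str(bound.arguments[match.group(1)]),
                template,
            )
            return call_model(model_name, prompt)

        wrapper.type_hints = hints  # exposed so callers can inspect them
        return wrapper
    return decorator


def echo_model(model_name: str, prompt: str) -> str:
    # Stub "model" that returns the prompt it would have been sent.
    return f"[{model_name}] {prompt}"


@sketch_backend("gpt-4o", echo_model)
def generate_summary(text: str) -> str:
    """Generate a summary of the following text: {{ text }}"""


print(generate_summary("LLMs are useful"))
```

A real implementation could use the return annotation collected by `get_type_hints()` to request structured output; the sketch only records it.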

I started using Claude and Claude Code a bit in my regular workflow. I’ll skip the suspense and just say that the tool is way more capable than I would ever have expected. The way I can use it to interrogate a large codebase, or generate unit tests, or even “refactor every callsite to use such-and-such pattern” is utterly gobsmacking. [...]

Here’s the main problem I’ve found with generative AI, and with “vibe coding” in general: it completely sucks out the joy of software development for me. [...]

This is how I feel using gen-AI: like a babysitter. It spits out reams of code, I read through it and try to spot the bugs, and then we repeat.

— Nolan Lawson, AI ambivalence

Half Stack Data Science: Programming with AI, with Simon Willison (via) I participated in this wide-ranging 50 minute conversation with David Asboth and Shaun McGirr. Topics we covered included applications of LLMs to data journalism, the challenges of building an intuition for how best to use these tools given their "jagged frontier" of capabilities, how LLMs impact learning to program and how local models are starting to get genuinely useful now.

At 27:47:

If you're a new programmer, my optimistic version is that there has never been a better time to learn to program, because it shaves down the learning curve so much. When you're learning to program and you miss a semicolon and you bang your head against the computer for four hours [...] if you're unlucky you quit programming for good because it was so frustrating. [...]

I've always been a project-oriented learner; I can learn things by building something, and now the friction involved in building something has gone down so much [...] So I think especially if you're an autodidact, if you're somebody who likes teaching yourself things, these are a gift from heaven. You get a weird teaching assistant that knows loads of stuff and occasionally makes weird mistakes and believes in bizarre conspiracy theories, but you have 24 hour access to that assistant.

If you're somebody who prefers structured learning in classrooms, I think the benefits are going to take a lot longer to get to you because we don't know how to use these things in classrooms yet. [...]

If you want to strike out on your own, this is an amazing tool if you learn how to learn with it. So you've got to learn the limits of what it can do, and you've got to be disciplined enough to make sure you're not outsourcing the bits you need to learn to the machines.

Pydantic Evals (via) Brand new package from David Montague and the Pydantic AI team which directly tackles what I consider to be the single hardest problem in AI engineering: building evals to determine if your LLM-based system is working correctly and getting better over time.

The feature is described as "in beta" and comes with this very realistic warning:

Unlike unit tests, evals are an emerging art/science; anyone who claims to know for sure exactly how your evals should be defined can safely be ignored.

This code example from their documentation illustrates the relationship between the two key nouns - Cases and Datasets:

from pydantic_evals import Case, Dataset

case1 = Case(
    name="simple_case",
    inputs="What is the capital of France?",
    expected_output="Paris",
    metadata={"difficulty": "easy"},
)

dataset = Dataset(cases=[case1])

The library also supports custom evaluators, including LLM-as-a-judge:

Case(
    name="vegetarian_recipe",
    inputs=CustomerOrder(
        dish_name="Spaghetti Bolognese", dietary_restriction="vegetarian"
    ),
    expected_output=None,
    metadata={"focus": "vegetarian"},
    evaluators=(
        LLMJudge(
            rubric="Recipe should not contain meat or animal products",
        ),
    ),
)

Cases and datasets can also be serialized to YAML.

My first impressions are that this looks like a solid implementation of a sensible design. I'm looking forward to trying it out against a real project.

We’re planning to release a very capable open language model in the coming months, our first since GPT-2. [...]

As models improve, there is more and more demand to run them everywhere. Through conversations with startups and developers, it became clear how important it was to be able to support a spectrum of needs, such as custom fine-tuning for specialized tasks, more tunable latency, running on-prem, or deployments requiring full data control.

— Brad Lightcap, COO, OpenAI

debug-gym (via) New paper and code from Microsoft Research that experiments with giving LLMs access to the Python debugger. They found that the best models could indeed improve their results by running pdb as a tool.

They saw the best results overall from Claude 3.7 Sonnet against SWE-bench Lite, where it scored 37.2% in rewrite mode without a debugger, 48.4% with their debugger tool and 52.1% with debug(5) - a mechanism where the pdb tool is made available only after the 5th rewrite attempt.

Their code is available on GitHub. I found this implementation of the pdb tool, and tracked down the main system and user prompt in agents/debug_agent.py:

System prompt:

Your goal is to debug a Python program to make sure it can pass a set of test functions. You have access to the pdb debugger tools, you can use them to investigate the code, set breakpoints, and print necessary values to identify the bugs. Once you have gained enough information, propose a rewriting patch to fix the bugs. Avoid rewriting the entire code, focus on the bugs only.

User prompt (which they call an "action prompt"):

Based on the instruction, the current code, the last execution output, and the history information, continue your debugging process using pdb commands or to propose a patch using rewrite command. Output a single command, nothing else. Do not repeat your previous commands unless they can provide more information. You must be concise and avoid overthinking.

My advice about using AI is simple: use AI as an assistant, not an expert, and use it judiciously. Some people will object, “but AI can be wrong!” Yes, and so can the internet in general, but no one now recommends avoiding online resources because they can be wrong. They recommend taking it all with a grain of salt and being careful. That’s what you should do with AI help as well.

— Ned Batchelder, Horseless intelligence

Slop is about collapsing to the mode. It’s about information heat death. It’s lukewarm emptiness. It’s ten million approximately identical cartoon selfies that no one will ever recall in detail because none of the details matter.

Incomplete JSON Pretty Printer. Every now and then a log file or a tool I'm using will spit out a bunch of JSON that terminates unexpectedly, meaning I can't copy it into a text editor and pretty-print it to see what's going on.

The other day I got frustrated with this and had the then-new GPT-4.5 build me a pretty-printer that didn't mind incomplete JSON, using an OpenAI Canvas. Here's the chat and here's the resulting interactive.

I spotted a bug with the way it indented code today so I pasted it into Claude 3.7 Sonnet Thinking mode and had it make a bunch of improvements - full transcript here. Here's the finished code.

In many ways this is a perfect example of vibe coding in action. At no point did I look at a single line of code that either of the LLMs had written for me. I honestly don't care how this thing works: it could not be lower stakes for me, the worst a bug could do is show me poorly formatted incomplete JSON.

I was vaguely aware that some kind of state machine style parser would be needed, because you can't parse incomplete JSON with a regular JSON parser. Building simple parsers is the kind of thing LLMs are surprisingly good at, and also the kind of thing I don't want to take on for a trivial project.
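For a sense of what a state machine style approach can look like, here is a minimal Python sketch. It is not the code either LLM produced (that tool is JavaScript and its source was never inspected here); it simply walks the input one character at a time, tracks whether it is inside a string, and never needs the input to be complete.

```python
def pretty_print_partial_json(text: str, indent: str = "  ") -> str:
    """Indent JSON-like text without requiring it to be complete or valid."""
    out = []
    depth = 0
    in_string = False  # state: are we currently inside a "..." string?
    escaped = False    # state: was the previous string character a backslash?
    for ch in text:
        if in_string:
            # Inside a string everything is copied through untouched.
            out.append(ch)
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
            out.append(ch)
        elif ch in "{[":
            depth += 1
            out.append(ch + "\n" + indent * depth)
        elif ch in "}]":
            depth = max(depth - 1, 0)
            out.append("\n" + indent * depth + ch)
        elif ch == ",":
            out.append(",\n" + indent * depth)
        elif ch == ":":
            out.append(": ")
        elif not ch.isspace():
            # Numbers, true/false/null: copy through, drop original whitespace.
            out.append(ch)
    return "".join(out)


# Input that stops in the middle of a string value.
print(pretty_print_partial_json('{"a": [1, 2, {"b": "x, y'))
```

The sketch has known rough edges, such as empty objects and arrays being spread over several lines, which is acceptable for a low stakes debugging aid.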

At one point I told Claude "Try using your code execution tool to check your logic", because I happen to know Claude can write and then execute JavaScript independently of using it for artifacts. That helped it out a bunch.

I later dropped in the following:

modify the tool to work better on mobile screens and generally look a bit nicer - and remove the pretty print JSON button, it should update any time the input text is changed. Also add a "copy to clipboard" button next to the results. And add a button that says "example" which adds a longer incomplete example to demonstrate the tool, make that example pelican themed.

It's fun being able to say "generally look a bit nicer" and get a perfectly acceptable result!

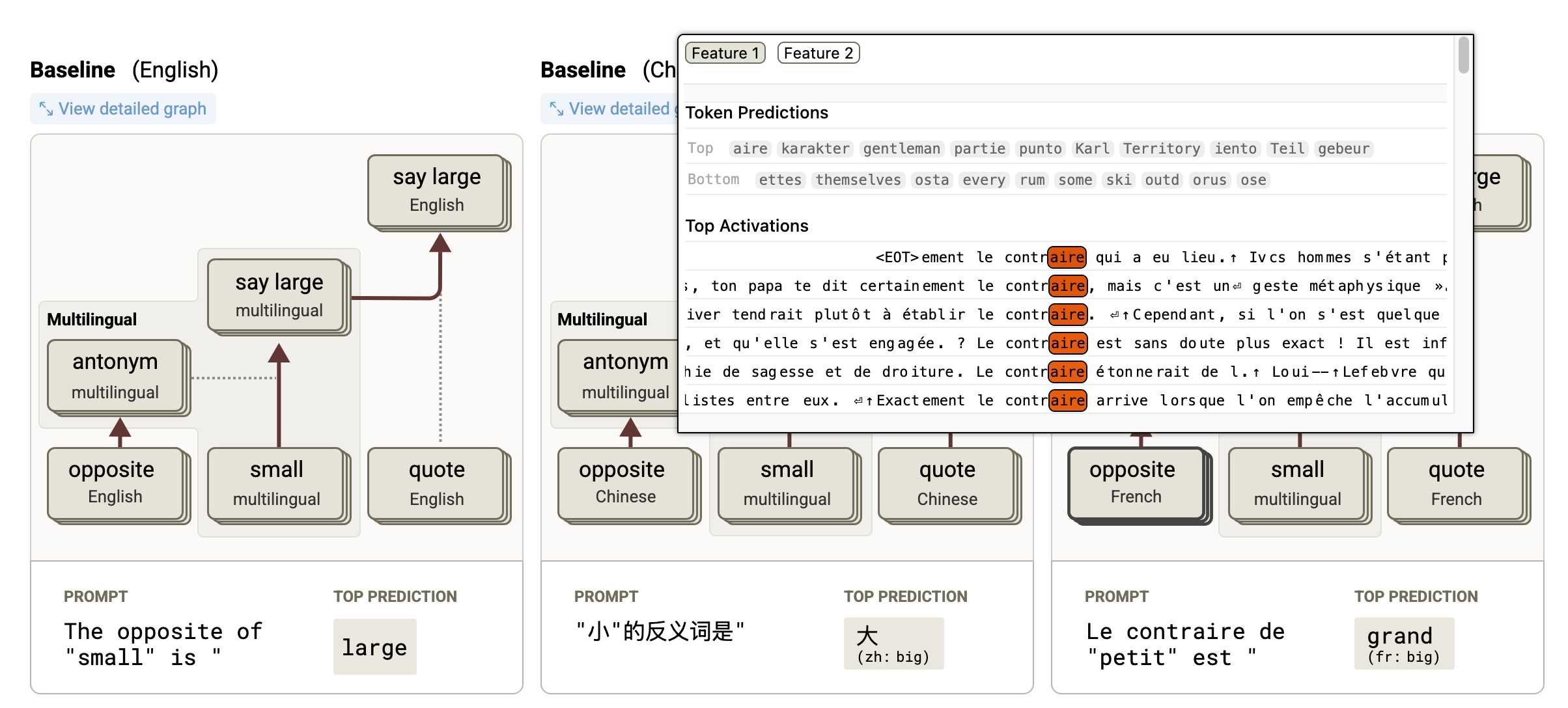

Tracing the thoughts of a large language model. In a follow-up to the research that brought us the delightful Golden Gate Claude last year, Anthropic have published two new papers about LLM interpretability:

- Circuit Tracing: Revealing Computational Graphs in Language Models extends last year's interpretable features into attribution graphs, which can "trace the chain of intermediate steps that a model uses to transform a specific input prompt into an output response".

- On the Biology of a Large Language Model uses that methodology to investigate Claude 3.5 Haiku in a bunch of different ways. Multilingual Circuits for example shows that the same prompt in three different languages uses similar circuits for each one, hinting at an intriguing level of generalization.

To my own personal delight, neither of these papers is published as a PDF. They're both presented as glorious mobile friendly HTML pages with linkable sections and even some inline interactive diagrams. More of this please!

GPT-4o got another update in ChatGPT. This is a somewhat frustrating way to announce a new model. @OpenAI on Twitter just now:

GPT-4o got an another update in ChatGPT!

What's different?

- Better at following detailed instructions, especially prompts containing multiple requests

- Improved capability to tackle complex technical and coding problems

- Improved intuition and creativity

- Fewer emojis 🙃

This sounds like a significant upgrade to GPT-4o, albeit one where the release notes are limited to a single tweet.

ChatGPT-4o-latest (2025-03-26) just hit second place on the LM Arena leaderboard, behind only Gemini 2.5, so this really is an update worth knowing about.

The @OpenAIDevelopers account confirmed that this is also now available in their API:

chatgpt-4o-latest is now updated in the API, but stay tuned—we plan to bring these improvements to a dated model in the API in the coming weeks.

I wrote about chatgpt-4o-latest last month - it's a model alias in the OpenAI API which provides access to the model used for ChatGPT, available since August 2024. It's priced at $5/million input and $15/million output - a step up from regular GPT-4o's $2.50/$10.

I'm glad they're going to make these changes available as a dated model release - the chatgpt-4o-latest alias is risky to build software against due to its tendency to change without warning.

A more appropriate place for this announcement would be the OpenAI Platform Changelog, but that's not had an update since the release of their new audio models on March 20th.

Thoughts on setting policy for new AI capabilities. Joanne Jang leads model behavior at OpenAI. Their release of GPT-4o image generation included some notable relaxation of OpenAI's policies concerning acceptable usage - I noted some of those the other day.

Joanne summarizes these changes like so:

tl;dr we’re shifting from blanket refusals in sensitive areas to a more precise approach focused on preventing real-world harm. The goal is to embrace humility: recognizing how much we don't know, and positioning ourselves to adapt as we learn.

This point in particular resonated with me:

- Trusting user creativity over our own assumptions. AI lab employees should not be the arbiters of what people should and shouldn’t be allowed to create.

A couple of years ago when OpenAI were the only AI lab with models that were worth spending time with it really did feel that San Francisco cultural values (which I relate to myself) were being pushed on the entire world. That cultural hegemony has been broken now by the increasing pool of global organizations that can produce models, but it's still reassuring to see the leading AI lab relaxing its approach here.

Nomic Embed Code: A State-of-the-Art Code Retriever. Nomic have released a new embedding model that specializes in code, based on their CoRNStack "large-scale high-quality training dataset specifically curated for code retrieval".

The nomic-embed-code model is pretty large - 26.35GB - but the announcement also mentioned a much smaller model (released 5 months ago) called CodeRankEmbed which is just 521.60MB.

I missed that when it first came out, so I decided to give it a try using my llm-sentence-transformers plugin for LLM.

llm install llm-sentence-transformers

llm sentence-transformers register nomic-ai/CodeRankEmbed --trust-remote-code

Now I can run the model like this:

llm embed -m sentence-transformers/nomic-ai/CodeRankEmbed -c 'hello'

This outputs an array of 768 numbers, starting [1.4794224500656128, -0.474479079246521, ....

Where this gets fun is combining it with my Symbex tool to create and then search embeddings for functions in a codebase.

I created an index for my LLM codebase like this:

cd llm

symbex '*' '*.*' --nl > code.txt

This creates a newline-separated JSON file of all of the functions (from '*') and methods (from '*.*') in the current directory - you can see that here.

Then I fed that into the llm embed-multi command like this:

llm embed-multi \
  -d code.db \
  -m sentence-transformers/nomic-ai/CodeRankEmbed \
  code code.txt \
  --format nl \
  --store \
  --batch-size 10

I found the --batch-size was needed to prevent it from crashing with an error.

The above command creates a collection called code in a SQLite database called code.db.

Having run this command I can search for functions that match a specific search term in that code collection like this:

llm similar code -d code.db \
  -c 'Represent this query for searching relevant code: install a plugin' | jq

That "Represent this query for searching relevant code: " prefix is required by the model. I pipe it through jq to make it a little more readable, which gives me these results.

This jq recipe makes for a better output:

llm similar code -d code.db \
  -c 'Represent this query for searching relevant code: install a plugin' | \
  jq -r '.id + "\n\n" + .content + "\n--------\n"'

The output from that starts like so:

llm/cli.py:1776

@cli.command(name="plugins")
@click.option("--all", help="Include built-in default plugins", is_flag=True)
def plugins_list(all):
    "List installed plugins"
    click.echo(json.dumps(get_plugins(all), indent=2))

--------

llm/cli.py:1791

@cli.command()
@click.argument("packages", nargs=-1, required=False)
@click.option(
    "-U", "--upgrade", is_flag=True, help="Upgrade packages to latest version"
)
...
def install(packages, upgrade, editable, force_reinstall, no_cache_dir):
    """Install packages from PyPI into the same environment as LLM"""

Getting this output was quite inconvenient, so I've opened an issue.
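If jq isn't available, the same formatting step can be approximated in Python. This sketch assumes what the jq recipe above implies: one JSON object per line, each with `id` and `content` string keys. The function name and the sample row are made up for illustration.

```python
import json


def format_results(lines):
    """Yield one formatted block per newline-delimited JSON result."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        row = json.loads(line)
        # Same layout as the jq recipe: id, blank line, content, separator.
        yield row["id"] + "\n\n" + row["content"] + "\n--------\n"


# Hypothetical, cut-down sample row; real rows carry more keys.
sample = ['{"id": "llm/cli.py:1776", "content": "def plugins_list(all):"}']
for block in format_results(sample):
    print(block)
```

To use it in a pipeline, pass `sys.stdin` to `format_results()` instead of the sample list.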

Function calling with Gemma (via) Google's Gemma 3 model (the 27B variant is particularly capable, I've been trying it out via Ollama) supports function calling exclusively through prompt engineering. The official documentation describes two recommended prompts - both of them suggest that the tool definitions are passed in as JSON schema, but the way the model should request tool executions differs.

The first prompt uses Python-style function calling syntax:

You have access to functions. If you decide to invoke any of the function(s), you MUST put it in the format of [func_name1(params_name1=params_value1, params_name2=params_value2...), func_name2(params)]

You SHOULD NOT include any other text in the response if you call a function

(Always love seeing CAPITALS for emphasis in prompts, makes me wonder if they proved to themselves that capitalization makes a difference in this case.)

The second variant uses JSON instead:

You have access to functions. If you decide to invoke any of the function(s), you MUST put it in the format of {"name": function name, "parameters": dictionary of argument name and its value}

You SHOULD NOT include any other text in the response if you call a function

This is a neat illustration of the fact that all of these fancy tool using LLMs are still using effectively the same pattern as was described in the ReAct paper back in November 2022. Here's my implementation of that pattern from March 2023.
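The application side of the JSON variant can be sketched like this: parse the model's reply, and if it looks like a function call, dispatch it to a matching Python function. Everything here is hypothetical illustration code, including the `get_weather` tool and the `run_tool_call` helper; it is not taken from the Gemma documentation.

```python
import json


def get_weather(city: str) -> str:
    # Hypothetical tool, used only for this illustration.
    return f"It is sunny in {city}"


# Registry mapping tool names (as described to the model) to functions.
TOOLS = {"get_weather": get_weather}


def run_tool_call(model_response: str):
    """Return the tool result, or None if the response is not a tool call."""
    try:
        call = json.loads(model_response)
    except json.JSONDecodeError:
        return None  # ordinary text answer, not a function call
    if not isinstance(call, dict):
        return None
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        return None  # unknown tool: never execute anything unexpected
    return TOOLS[name](**call.get("parameters", {}))


print(run_tool_call('{"name": "get_weather", "parameters": {"city": "Paris"}}'))
print(run_tool_call("Paris is the capital of France."))
```

In a full loop the tool result would be sent back to the model as a follow-up message so it can compose its final answer.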

MCP 🤝 OpenAI Agents SDK

You can now connect your Model Context Protocol servers to Agents: openai.github.io/openai-agents-python/mcp/

We’re also working on MCP support for the OpenAI API and ChatGPT desktop app—we’ll share some more news in the coming months.

Introducing 4o Image Generation. When OpenAI first announced GPT-4o back in May 2024 one of the most exciting features was true multi-modality in that it could both input and output audio and images. The "o" stood for "omni", and the image output examples in that launch post looked really impressive.

It's taken them over ten months (and Gemini beat them to it) but today they're finally making those image generation abilities available, live right now in ChatGPT for paying customers.

My test prompt for any model that can manipulate incoming images is "Turn this into a selfie with a bear", because you should never take a selfie with a bear! I fed ChatGPT this selfie and got back this result:

That's pretty great! It mangled the text on my T-Shirt (which says "LAWRENCE.COM" in a creative font) and added a second visible AirPod. It's very clearly me though, and that's definitely a bear.

There are plenty more examples in OpenAI's launch post, but as usual the most interesting details are tucked away in the updates to the system card. There's lots in there about their approach to safety and bias, including a section on "Ahistorical and Unrealistic Bias" which feels inspired by Gemini's embarrassing early missteps.

One section that stood out to me is their approach to images of public figures. The new policy is much more permissive than for DALL-E - highlights mine:

4o image generation is capable, in many instances, of generating a depiction of a public figure based solely on a text prompt.

At launch, we are not blocking the capability to generate adult public figures but are instead implementing the same safeguards that we have implemented for editing images of photorealistic uploads of people. For instance, this includes seeking to block the generation of photorealistic images of public figures who are minors and of material that violates our policies related to violence, hateful imagery, instructions for illicit activities, erotic content, and other areas. Public figures who wish for their depiction not to be generated can opt out.

This approach is more fine-grained than the way we dealt with public figures in our DALL·E series of models, where we used technical mitigations intended to prevent any images of a public figure from being generated. This change opens the possibility of helpful and beneficial uses in areas like educational, historical, satirical and political speech. After launch, we will continue to monitor usage of this capability, evaluating our policies, and will adjust them if needed.

Given that "public figures who wish for their depiction not to be generated can opt out" I wonder if we'll see a stampede of public figures to do exactly that!

Update: There's significant confusion right now over this new feature because it is being rolled out gradually but older ChatGPT can still generate images using DALL-E instead... and there is no visual indication in the ChatGPT UI explaining which image generation method it used!

OpenAI made the same mistake last year when they announced ChatGPT advanced voice mode but failed to clarify that ChatGPT was still running the previous, less impressive voice implementation.

Update 2: Images created with DALL-E through the ChatGPT web interface now show a note with a warning:

Putting Gemini 2.5 Pro through its paces

There’s a new release from Google Gemini this morning: the first in the Gemini 2.5 series. Google call it “a thinking model, designed to tackle increasingly complex problems”. It’s already sat at the top of the LM Arena leaderboard, and from initial impressions looks like it may deserve that top spot.

[... 2,400 words]

Today we’re excited to launch ARC-AGI-2 to challenge the new frontier. ARC-AGI-2 is even harder for AI (in particular, AI reasoning systems), while maintaining the same relative ease for humans. Pure LLMs score 0% on ARC-AGI-2, and public AI reasoning systems achieve only single-digit percentage scores. In contrast, every task in ARC-AGI-2 has been solved by at least 2 humans in under 2 attempts. [...]

All other AI benchmarks focus on superhuman capabilities or specialized knowledge by testing "PhD++" skills. ARC-AGI is the only benchmark that takes the opposite design choice – by focusing on tasks that are relatively easy for humans, yet hard, or impossible, for AI, we shine a spotlight on capability gaps that do not spontaneously emerge from "scaling up".

— Greg Kamradt, ARC-AGI-2

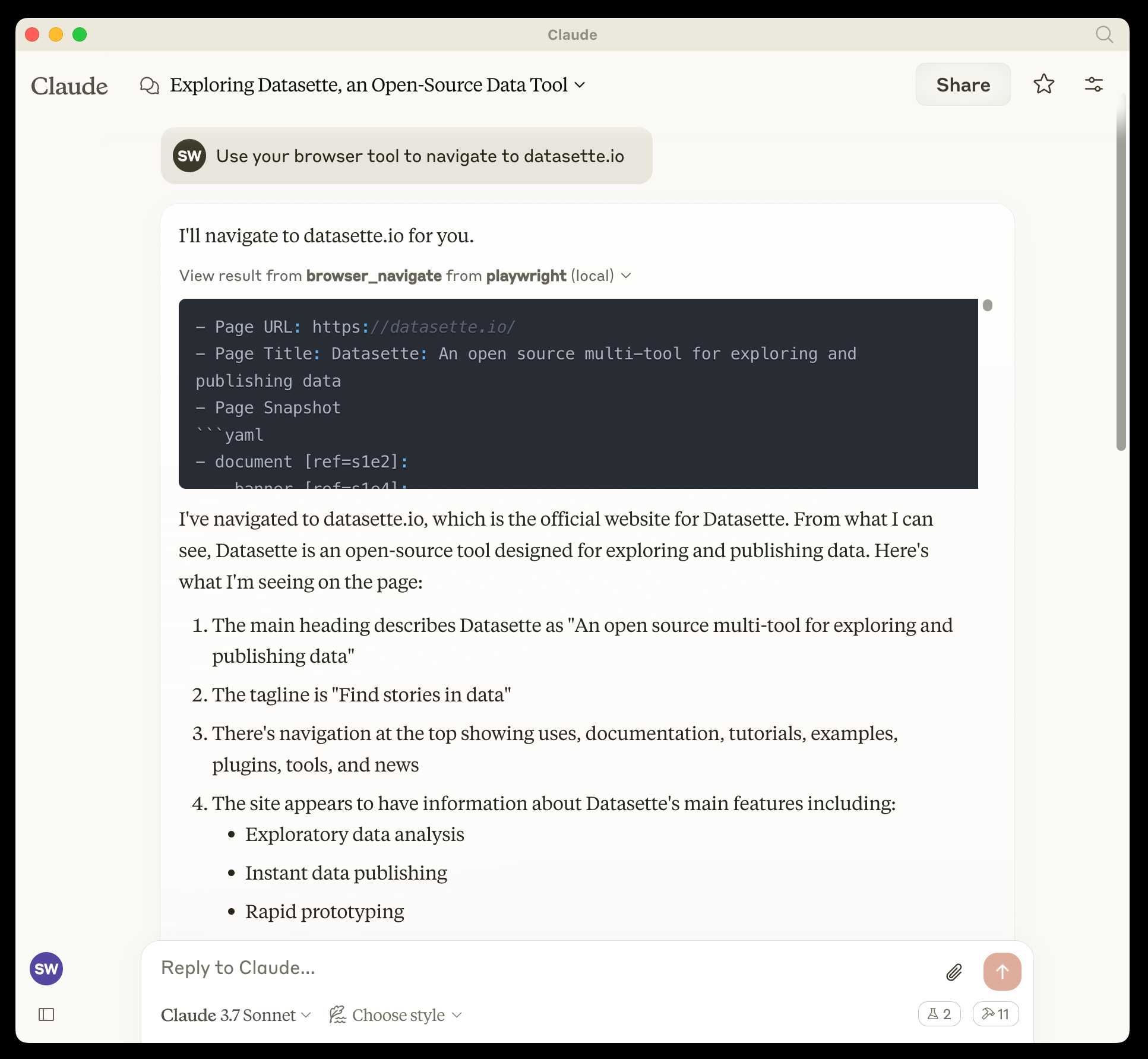

microsoft/playwright-mcp. The Playwright team at Microsoft have released an MCP (Model Context Protocol) server wrapping Playwright, and it's pretty fascinating.

They implemented it on top of the Chrome accessibility tree, so MCP clients (such as the Claude Desktop app) can use it to drive an automated browser and use the accessibility tree to read and navigate pages that they visit.

Trying it out is quite easy if you have Claude Desktop and Node.js installed already. Edit your claude_desktop_config.json file:

code ~/Library/Application\ Support/Claude/claude_desktop_config.json

And add this:

{
  "mcpServers": {
    "playwright": {
      "command": "npx",
      "args": [
        "@playwright/mcp@latest"
      ]
    }
  }
}

Now when you launch Claude Desktop various new browser automation tools will be available to it, and you can tell Claude to navigate to a website and interact with it.
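If the config file already lists other MCP servers, the new entry needs to be merged in rather than pasted over the top. A minimal Python sketch of that merge follows; the helper names are made up, the path is the macOS location from the command above, and the "other" server in the demo is a hypothetical existing entry.

```python
import json
from pathlib import Path

# macOS location used in the command above; other platforms will differ.
CONFIG_PATH = (
    Path.home() / "Library/Application Support/Claude/claude_desktop_config.json"
)


def add_playwright_server(config: dict) -> dict:
    """Return a copy of config with the playwright MCP server added."""
    servers = dict(config.get("mcpServers", {}))
    servers["playwright"] = {"command": "npx", "args": ["@playwright/mcp@latest"]}
    return {**config, "mcpServers": servers}


def update_config_file(path: Path = CONFIG_PATH) -> None:
    # Read the existing file if there is one, otherwise start from empty.
    config = json.loads(path.read_text()) if path.exists() else {}
    path.write_text(json.dumps(add_playwright_server(config), indent=2))


# Demo on an in-memory config with one hypothetical existing server.
existing = {"mcpServers": {"other": {"command": "uvx", "args": ["some-server"]}}}
print(json.dumps(add_playwright_server(existing), indent=2))
```

Calling `update_config_file()` would rewrite the real file, so it is left out of the demo; back up the config before trying it.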

I ran the following to get a list of the available tools:

cd /tmp
git clone https://github.com/microsoft/playwright-mcp
cd playwright-mcp/src/tools
files-to-prompt . | llm -m claude-3.7-sonnet \
  'Output a detailed description of these tools'

The full output is here, but here's the truncated tool list:

Navigation Tools (common.ts)

- browser_navigate: Navigate to a specific URL

- browser_go_back: Navigate back in browser history

- browser_go_forward: Navigate forward in browser history

- browser_wait: Wait for a specified time in seconds

- browser_press_key: Press a keyboard key

- browser_save_as_pdf: Save current page as PDF

- browser_close: Close the current page

Screenshot and Mouse Tools (screenshot.ts)

- browser_screenshot: Take a screenshot of the current page

- browser_move_mouse: Move mouse to specific coordinates

- browser_click (coordinate-based): Click at specific x,y coordinates

- browser_drag (coordinate-based): Drag mouse from one position to another

- browser_type (keyboard): Type text and optionally submit

Accessibility Snapshot Tools (snapshot.ts)

- browser_snapshot: Capture accessibility structure of the page

- browser_click (element-based): Click on a specific element using accessibility reference

- browser_drag (element-based): Drag between two elements

- browser_hover: Hover over an element

- browser_type (element-based): Type text into a specific element

Qwen2.5-VL-32B: Smarter and Lighter. The second big open weight LLM release from China today - the first being DeepSeek v3-0324.

Qwen's previous vision model was Qwen2.5 VL, released in January in 3B, 7B and 72B sizes.

Today's Apache 2.0 licensed release is a 32B model, which is quickly becoming my personal favourite model size - large enough to have GPT-4-class capabilities, but small enough that on my 64GB Mac there's still enough RAM for me to run other memory-hungry applications like Firefox and VS Code.

Qwen claim that the new model (when compared to their previous 2.5 VL family) can "align more closely with human preferences", is better at "mathematical reasoning" and provides "enhanced accuracy and detailed analysis in tasks such as image parsing, content recognition, and visual logic deduction".

They also offer some presumably carefully selected benchmark results showing it out-performing Gemma 3-27B, Mistral Small 3.1 24B and GPT-4o-0513 (there have been two more recent GPT-4o releases since that one, 2024-08-06 and 2024-11-20).

As usual, Prince Canuma had MLX versions of the models live within hours of the release, in 4 bit, 6 bit, 8 bit, and bf16 variants.

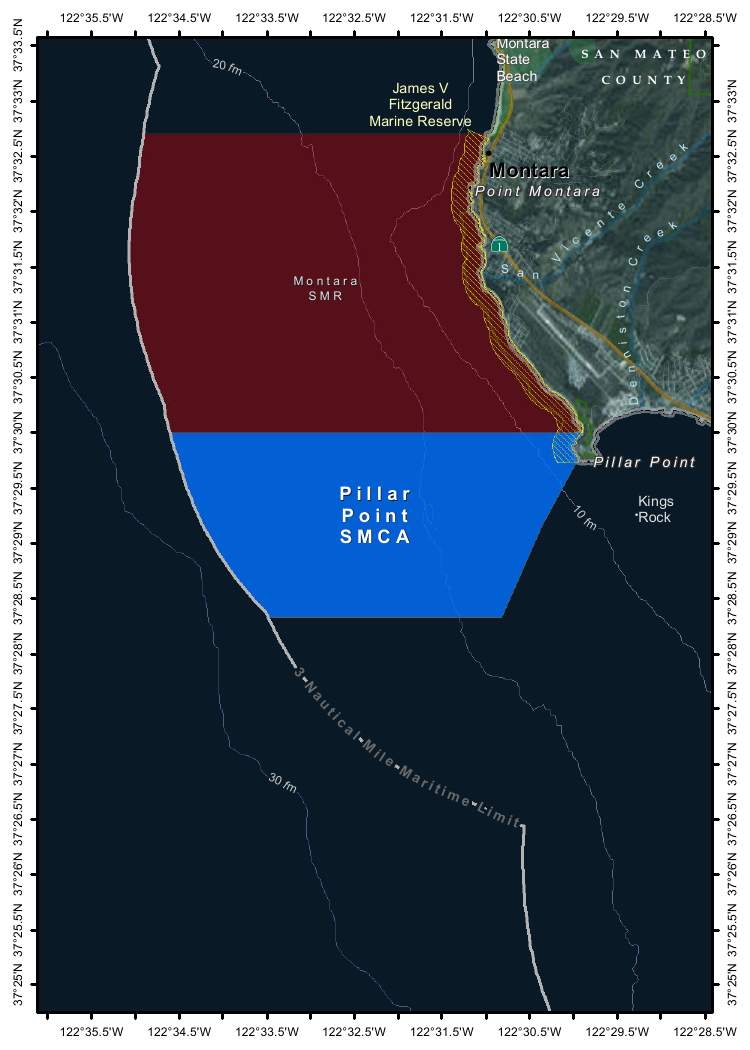

I ran the 4bit version (an 18GB model download) using uv and Prince's mlx-vlm like this:

uv run --with 'numpy<2' --with mlx-vlm \
  python -m mlx_vlm.generate \
    --model mlx-community/Qwen2.5-VL-32B-Instruct-4bit \
    --max-tokens 1000 \
    --temperature 0.0 \
    --prompt "Describe this image." \
    --image Mpaboundrycdfw-1.png

Here's the image:

And here's the resulting description, which I found to be very impressive:

This image appears to be a map highlighting specific areas along the coastline, likely in California, based on the geographical features and place names. Here is a detailed description of the image:

Geographic Features:

- The map shows a section of the coastline, with land on the right and the sea on the left.

- The land area includes parts of SanMateo County, as noted in the top-right corner.

- The coastline features several named locations, including:

- Point Montara

- Pillar Point

- Kings Rock

- VicenteCreek

- DennistonCreek

- State Beach

Highlighted Areas:

- The map contains two distinct areas marked with different colors:

- Red Area (Top Section)

- Labeled as "MONTARA" and "MONTARA M R" (possibly referring to a "Marine Reserves" or similar protected area).

- This area is marked with a dark red color and extends from the coastline inland.

- Blue Area (Bottom Section)

- Labeled as "PILLAR" and "PILLAR P O I N T" along with "SMCA" (Special Management Area).

- This area is marked with a blue color and is located closer to the coastline, including the area around "Pillar Point."

Depth Contours:

- The map includes depth markings in the sea, indicating the bathymetrical features:

- 20 fathom (fm) line is marked in the upper-left part of the sea.

- 10 fathom (fm) line is marked closer to the coastline.

- 30 fathom (fm) line is marked further out to sea.

Other Features:

- State Beach: Marked near the top-right, indicating a protected recreational area.

- Kings Rock: A prominent feature near the coastline, likely a rocky outcropping.

- Creeks: The map shows several creeks, including VicenteCreek and DennistonCreek, which flow into the sea.

Protected Areas:

- The map highlights specific protected areas:

- Marine Reserves:

- "MONTARA M R" (Marine Reserves) in red.

- Special Management Area (SMCA)

- "PILLAR P O I N T" in blue, indicating a Special Management Area.

Grid and Coordinates:

- The map includes a grid with latitude and longitude markings:

- Latitude ranges from approximately 37°25'N to 37°35'N.

- Longitude ranges from approximately 122°22.5'W to 122°35.5'W.

Topography:

- The land area shows topographic features, including elevations and vegetation, with green areas indicating higher elevations or vegetated land.

Other Labels:

- "SMR": Likely stands for "State Managed Reserves."

- "SMCA": Likely stands for "Special Management Control Area."

In summary, this map highlights specific protected areas along the coastline, including a red "Marine Reserves" area and a blue "Special Management Area" near "Pillar Point." The map also includes depth markings, geographical features, and place names, providing a detailed view of the region's natural and protected areas.

It included the following runtime statistics:

Prompt: 1051 tokens, 111.985 tokens-per-sec

Generation: 760 tokens, 17.328 tokens-per-sec

Peak memory: 21.110 GB
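As a rough sanity check on those numbers, dividing each token count by its throughput gives the approximate wall-clock time for each phase. This is plain arithmetic on the figures above rather than anything the tool reported, and the variable names are illustrative:

```python
# Figures copied from the runtime statistics above.
prompt_tokens, prompt_rate = 1051, 111.985        # tokens, tokens per second
generation_tokens, generation_rate = 760, 17.328  # tokens, tokens per second

# Time in seconds = tokens / (tokens per second)
prompt_seconds = prompt_tokens / prompt_rate
generation_seconds = generation_tokens / generation_rate
total_seconds = prompt_seconds + generation_seconds

print(f"Prompt processing: {prompt_seconds:.1f}s")
print(f"Generation: {generation_seconds:.1f}s")
print(f"Total: {total_seconds:.1f}s")
```

That works out to a little under 10 seconds to process the prompt and roughly 44 seconds to generate the description.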

deepseek-ai/DeepSeek-V3-0324.

Chinese AI lab DeepSeek just released the latest version of their enormous DeepSeek v3 model, baking the release date into the name DeepSeek-V3-0324.

The license is MIT (that's new - previous DeepSeek v3 had a custom license), the README is empty and the release adds up to a total of 641 GB of files, mostly of the form model-00035-of-000163.safetensors.

The model only came out a few hours ago and MLX developer Awni Hannun already has it running at >20 tokens/second on a 512GB M3 Ultra Mac Studio ($9,499 of ostensibly consumer-grade hardware) via mlx-lm and this mlx-community/DeepSeek-V3-0324-4bit 4bit quantization, which reduces the on-disk size to 352 GB.

I think that means if you have that machine you can run it with my llm-mlx plugin like this, but I've not tried myself!

llm mlx download-model mlx-community/DeepSeek-V3-0324-4bit

llm chat -m mlx-community/DeepSeek-V3-0324-4bit

The new model is also listed on OpenRouter. You can try a chat at openrouter.ai/chat?models=deepseek/deepseek-chat-v3-0324:free.

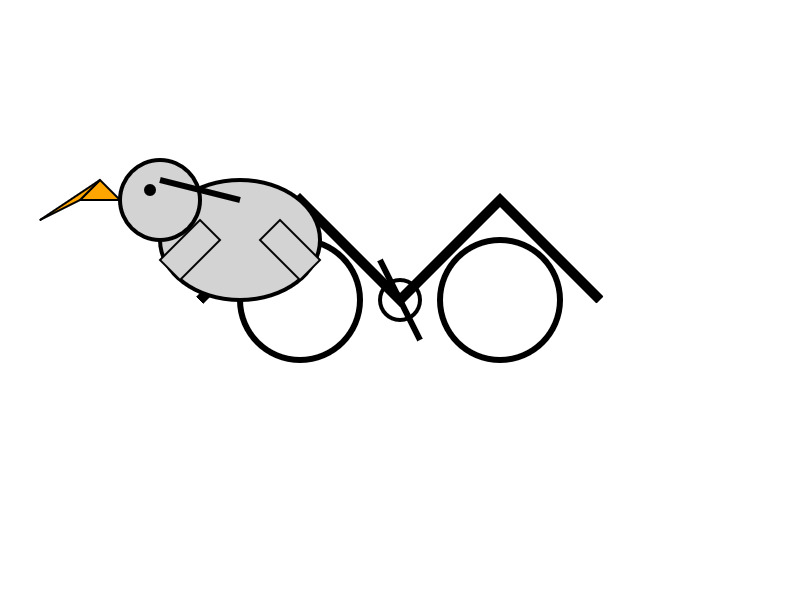

Here's what the chat interface gave me for "Generate an SVG of a pelican riding a bicycle":

I have two API keys with OpenRouter - one of them worked with the model, the other gave me a "No endpoints found matching your data policy" error - I think because I had a setting on that key disallowing models from training on my activity. The key that worked was a free key with no attached billing credentials.

For my working API key the llm-openrouter plugin let me run a prompt like this:

llm install llm-openrouter

llm keys set openrouter

# Paste key here

llm -m openrouter/deepseek/deepseek-chat-v3-0324:free "best fact about a pelican"

Here's that "best fact" - the terminal output included Markdown and an emoji combo, here that's rendered.

One of the most fascinating facts about pelicans is their unique throat pouch, called a gular sac, which can hold up to 3 gallons (11 liters) of water—three times more than their stomach!

Here’s why it’s amazing:

- Fishing Tool: They use it like a net to scoop up fish, then drain the water before swallowing.

- Cooling Mechanism: On hot days, pelicans flutter the pouch to stay cool by evaporating water.

- Built-in "Shopping Cart": Some species even use it to carry food back to their chicks.

Bonus fact: Pelicans often fish cooperatively, herding fish into shallow water for an easy catch.

Would you like more cool pelican facts? 🐦🌊

In putting this post together I got Claude to build me this new tool for finding the total on-disk size of a Hugging Face repository, which is available in their API but not currently displayed on their website.
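Here is a minimal Python sketch of the same idea. It is not the tool Claude built: it assumes the Hugging Face model info endpoint at `https://huggingface.co/api/models/{repo_id}?blobs=true` returns a `siblings` list whose items carry a `size` in bytes, and the function names are hypothetical.

```python
import json
import urllib.request


def total_size_bytes(model_info: dict) -> int:
    """Sum the size of every file listed in a model info response.

    Assumes each item in "siblings" may carry a "size" in bytes;
    entries without one are counted as zero.
    """
    return sum(item.get("size") or 0 for item in model_info.get("siblings", []))


def fetch_model_info(repo_id: str) -> dict:
    """Fetch model metadata with per-file details (assumed endpoint shape)."""
    url = f"https://huggingface.co/api/models/{repo_id}?blobs=true"
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def format_gb(num_bytes: int) -> str:
    """Format a byte count as decimal gigabytes."""
    return f"{num_bytes / 1e9:.1f} GB"


# Offline example using a hand-written response of the assumed shape.
sample = {
    "siblings": [
        {"rfilename": "model-00001-of-00002.safetensors", "size": 4_000_000_000},
        {"rfilename": "config.json"},  # no size reported, counted as zero
    ]
}
print(format_gb(total_size_bytes(sample)))
```

Against the live API the call would be `format_gb(total_size_bytes(fetch_model_info("deepseek-ai/DeepSeek-V3-0324")))`, which I have not run here.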

Update: Here's a notable independent benchmark from Paul Gauthier:

DeepSeek's new V3 scored 55% on aider's polyglot benchmark, significantly improving over the prior version. It's the #2 non-thinking/reasoning model, behind only Sonnet 3.7. V3 is competitive with thinking models like R1 & o3-mini.

simonw/ollama-models-atom-feed. I set up a GitHub Actions + GitHub Pages Atom feed of scraped recent models data from the Ollama latest models page - Ollama remains one of the easiest ways to run models on a laptop so a new model release from them is worth hearing about.

I built the scraper by pasting example HTML into Claude and asking for a Python script to convert it to Atom - here's the script we wrote together.

Update 25th March 2025: The first version of this included all 160+ models in a single feed. I've upgraded the script to output two feeds - the original atom.xml one and a new atom-recent-20.xml feed containing just the most recent 20 items.

I modified the script using Google's new Gemini 2.5 Pro model, like this:

cat to_atom.py | llm -m gemini-2.5-pro-exp-03-25 \

-s 'rewrite this script so that instead of outputting Atom to stdout it saves two files, one called atom.xml with everything and another called atom-recent-20.xml with just the most recent 20 items - remove the output option entirely'

Here's the full transcript.
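The two-feed arrangement can be sketched roughly as follows. This is not the script from the repository: the `build_atom` and `write_feeds` helpers and the shape of the `models` dictionaries are assumptions made for illustration, and only the two output filenames come from the description above. The output is a bare-bones feed, not one that has been checked against an Atom validator.

```python
from pathlib import Path
from xml.etree import ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
RECENT_COUNT = 20


def build_atom(title: str, models: list[dict]) -> bytes:
    """Serialize a list of {"name", "url", "updated"} dicts as Atom XML."""
    ET.register_namespace("", ATOM_NS)
    feed = ET.Element(f"{{{ATOM_NS}}}feed")
    ET.SubElement(feed, f"{{{ATOM_NS}}}title").text = title
    for model in models:
        entry = ET.SubElement(feed, f"{{{ATOM_NS}}}entry")
        ET.SubElement(entry, f"{{{ATOM_NS}}}title").text = model["name"]
        ET.SubElement(entry, f"{{{ATOM_NS}}}id").text = model["url"]
        ET.SubElement(entry, f"{{{ATOM_NS}}}link", href=model["url"])
        ET.SubElement(entry, f"{{{ATOM_NS}}}updated").text = model["updated"]
    return ET.tostring(feed, encoding="utf-8", xml_declaration=True)


def write_feeds(models: list[dict], out_dir: Path) -> None:
    """Write atom.xml with every item and atom-recent-20.xml with the newest 20."""
    # ISO 8601 timestamps in the same timezone sort correctly as strings.
    newest_first = sorted(models, key=lambda m: m["updated"], reverse=True)
    (out_dir / "atom.xml").write_bytes(build_atom("All models", newest_first))
    (out_dir / f"atom-recent-{RECENT_COUNT}.xml").write_bytes(
        build_atom("Recent models", newest_first[:RECENT_COUNT])
    )
```

Sorting once and slicing keeps the two files consistent with each other: the recent feed is always a prefix of the full one.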

The “think” tool: Enabling Claude to stop and think in complex tool use situations (via) Fascinating new prompt engineering trick from Anthropic. They use their standard tool calling mechanism to define a tool called "think" that looks something like this:

{

"name": "think",

"description": "Use the tool to think about something. It will not obtain new information or change the database, but just append the thought to the log. Use it when complex reasoning or some cache memory is needed.",

"input_schema": {

"type": "object",

"properties": {

"thought": {

"type": "string",

"description": "A thought to think about."

}

},

"required": ["thought"]

}

}

This tool does nothing at all.

LLM tools (like web_search) usually involve some kind of implementation - the model requests a tool execution, then an external harness goes away and executes the specified tool and feeds the result back into the conversation.

The "think" tool is a no-op - there is no implementation, it just allows the model to use its existing training in terms of when-to-use-a-tool to stop and dump some additional thoughts into the context.

This works completely independently of the new "thinking" mechanism introduced in Claude 3.7 Sonnet.

Anthropic's benchmarks show impressive improvements from enabling this tool. I fully anticipate that models from other providers would benefit from the same trick.
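To make the no-op concrete, here is a minimal sketch of how a tool-calling harness might handle it. The `handle_tool_call` dispatcher, the `thought_log` list and the empty-string result are all assumptions for illustration, not Anthropic's implementation; only the tool definition comes from the example above.

```python
# Tool definition as quoted above.
THINK_TOOL = {
    "name": "think",
    "description": (
        "Use the tool to think about something. It will not obtain new "
        "information or change the database, but just append the thought "
        "to the log. Use it when complex reasoning or some cache memory "
        "is needed."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "thought": {"type": "string", "description": "A thought to think about."}
        },
        "required": ["thought"],
    },
}

# Hypothetical log kept by the harness, useful only for debugging.
thought_log: list[str] = []


def handle_tool_call(name: str, arguments: dict) -> str:
    """Return the tool result that would be fed back into the conversation."""
    if name == THINK_TOOL["name"]:
        # No-op: record the thought and hand back nothing new.
        thought_log.append(arguments["thought"])
        return ""
    raise ValueError(f"Unknown tool: {name}")


result = handle_tool_call("think", {"thought": "Check the refund policy first."})
print(repr(result), thought_log)
```

The value to the model is in writing out the `thought` argument, since the harness returns no new information.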

Anthropic Trust Center: Brave Search added as a subprocessor (via) Yesterday I was trying to figure out if Anthropic has rolled their own search index for Claude's new web search feature or if they were working with a partner. Here's confirmation that they are using Brave Search:

Anthropic's subprocessor list. As of March 19, 2025, we have made the following changes:

Subprocessors added:

- Brave Search (more info)

That "more info" links to the help page for their new web search feature.

I confirmed this myself by prompting Claude to "Search for pelican facts" - it ran a search for "Interesting pelican facts" and the ten results it showed as citations were an exact match for that search on Brave.

And further evidence: if you poke at it a bit Claude will reveal the definition of its web_search function which looks like this - note the BraveSearchParams property:

{

"description": "Search the web",

"name": "web_search",

"parameters": {

"additionalProperties": false,

"properties": {

"query": {

"description": "Search query",

"title": "Query",

"type": "string"

}

},

"required": [

"query"

],

"title": "BraveSearchParams",

"type": "object"

}

}

New audio models from OpenAI, but how much can we rely on them?

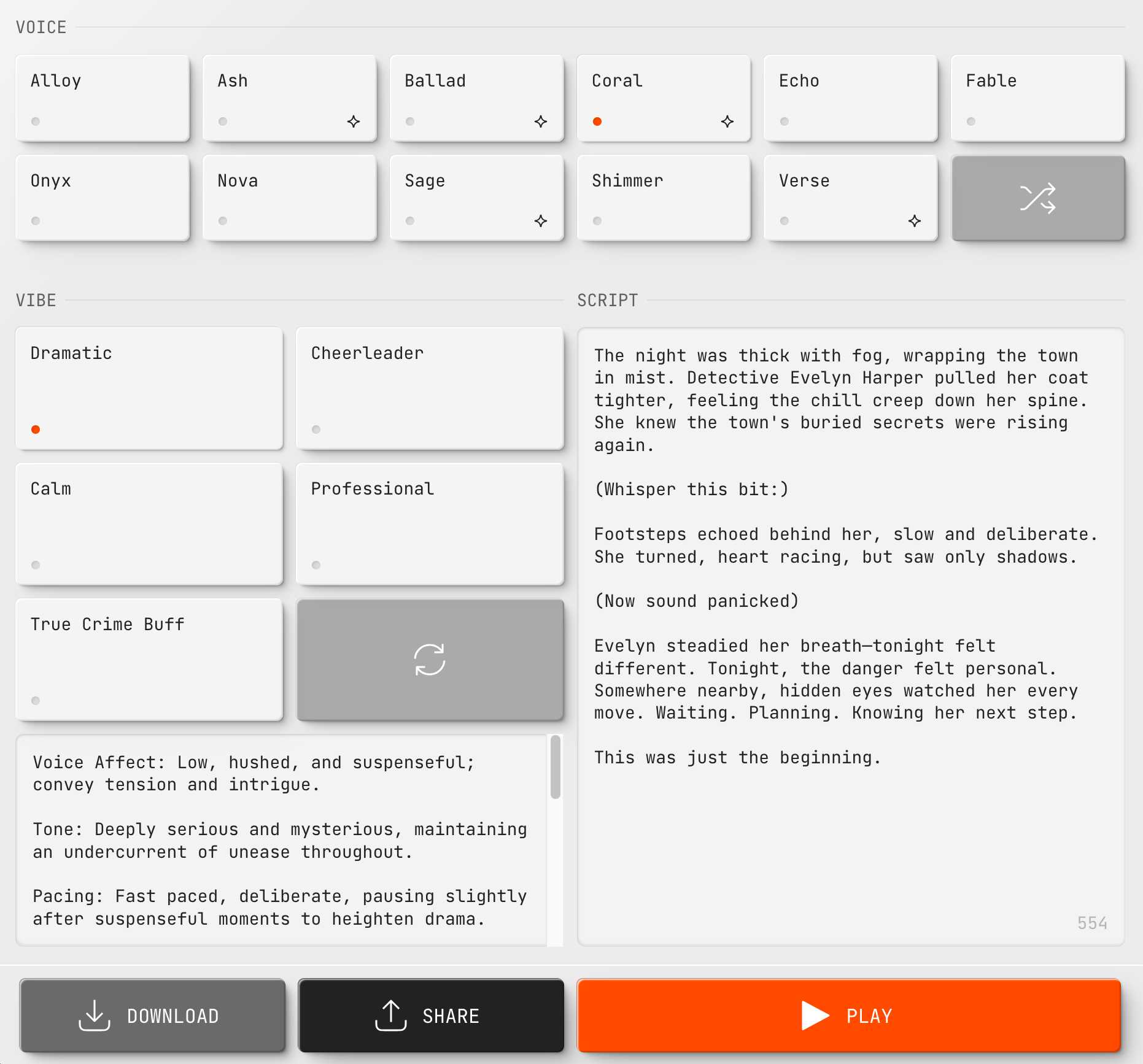

OpenAI announced several new audio-related API features today, for both text-to-speech and speech-to-text. They’re very promising new models, but they appear to suffer from the ever-present risk of accidental (or malicious) instruction following.

[... 866 words]

Claude can now search the web. Claude 3.7 Sonnet on the paid plan now has a web search tool that can be turned on as a global setting.

This was sorely needed. ChatGPT, Gemini and Grok all had this ability already, and despite Anthropic's excellent model quality it was one of the big remaining reasons to keep other models in daily rotation.

For the moment this is purely a product feature - it's available through their consumer applications but there's no indication of whether or not it will be coming to the Anthropic API. (Update: it was added to their API on May 7th 2025.) OpenAI launched the latest version of web search in their API last week.

Surprisingly there are no details on how it works under the hood. Is this a partnership with someone like Bing, or is it Anthropic's own proprietary index populated by their own crawlers?

I think it may be their own infrastructure, but I've been unable to confirm that.

Update: it's confirmed as Brave Search.

Their support site offers some inconclusive hints.

Does Anthropic crawl data from the web, and how can site owners block the crawler? talks about their ClaudeBot crawler but the language indicates it's used for training data, with no mention of a web search index.

Blocking and Removing Content from Claude looks a little more relevant, and has a heading "Blocking or removing websites from Claude web search" which includes this eyebrow-raising tip:

Removing content from your site is the best way to ensure that it won't appear in Claude outputs when Claude searches the web.

And then this bit, which does mention "our partners":

The noindex robots meta tag is a rule that tells our partners not to index your content so that they don’t send it to us in response to your web search query. Your content can still be linked to and visited through other web pages, or directly visited by users with a link, but the content will not appear in Claude outputs that use web search.

Both of those documents were last updated "over a week ago", so it's not clear to me if they reflect the new state of the world given today's feature launch or not.
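For site owners wondering whether a page already carries the noindex tag described in that quote, here is a minimal sketch using Python's standard library `html.parser`. The `has_noindex` helper is hypothetical, and it only inspects `<meta name="robots">` tags. It does not check the `X-Robots-Tag` HTTP header or robots.txt, and it says nothing about how Anthropic's partners actually interpret the tag.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None for bare attributes, so default to "".
        attributes = {key: (value or "") for key, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page has a robots meta tag containing noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))
```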

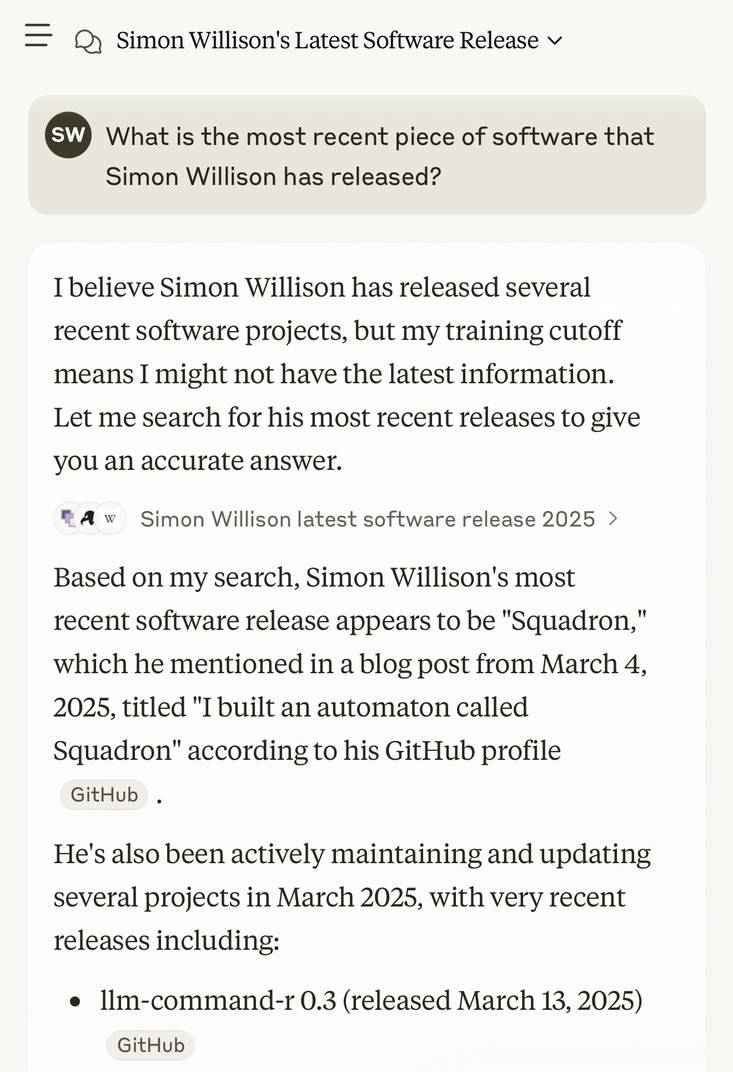

I got this delightful response trying out Claude search where it mistook my recent Squadron automata for a software project: