171 posts tagged “llm-release”

New releases of various LLMs.

2024

Qwen2.5-Coder-32B is an LLM that can code well that runs on my Mac

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

[... 697 words]Claude 3.5 Haiku

Anthropic released Claude 3.5 Haiku today, a few days later than expected (they said it would be out by the end of October).

[... 502 words]Nous Hermes 3. The Nous Hermes family of fine-tuned models have a solid reputation. Their most recent release came out in August, based on Meta's Llama 3.1:

Our training data aggressively encourages the model to follow the system and instruction prompts exactly and in an adaptive manner. Hermes 3 was created by fine-tuning Llama 3.1 8B, 70B and 405B, and training on a dataset of primarily synthetically generated responses. The model boasts comparable and superior performance to Llama 3.1 while unlocking deeper capabilities in reasoning and creativity.

The model weights are on Hugging Face, including GGUF versions of the 70B and 8B models. Here's how to try the 8B model (a 4.58GB download) using the llm-gguf plugin:

llm install llm-gguf

llm gguf download-model 'https://huggingface.co/NousResearch/Hermes-3-Llama-3.1-8B-GGUF/resolve/main/Hermes-3-Llama-3.1-8B.Q4_K_M.gguf' -a Hermes-3-Llama-3.1-8B

llm -m Hermes-3-Llama-3.1-8B 'hello in spanish'

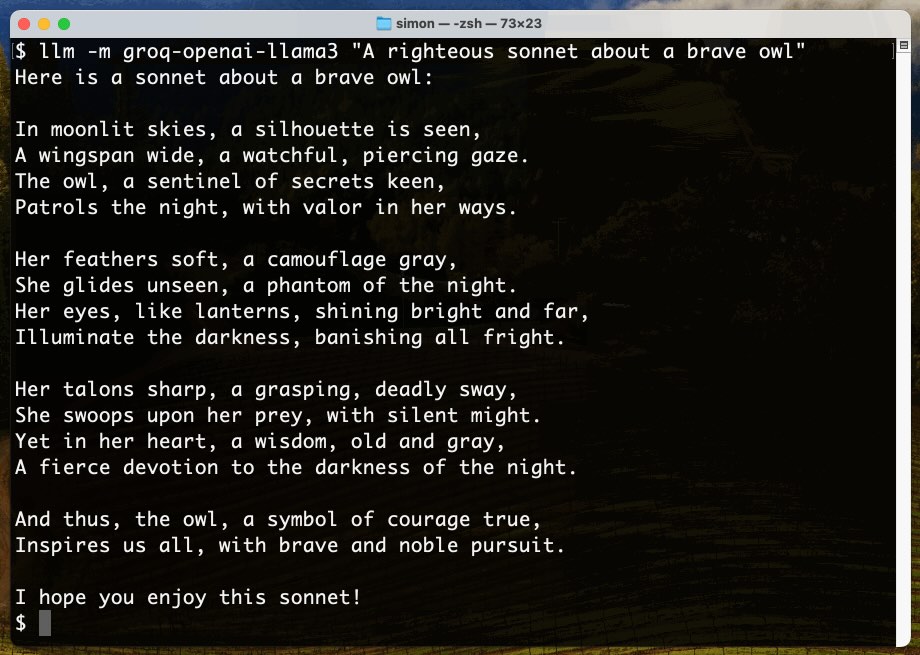

Nous Research partnered with Lambda Labs to provide inference APIs. It turns out Lambda host quite a few models now, currently providing free inference to users with an API key.

I just released the first alpha of a llm-lambda-labs plugin. You can use that to try the larger 405b model (very hard to run on a consumer device) like this:

llm install llm-lambda-labs

llm keys set lambdalabs

# Paste key here

llm -m lambdalabs/hermes3-405b 'short poem about a pelican with a twist'

Here's the source code for the new plugin, which I based on llm-mistral. The plugin uses httpx-sse to consume the stream of tokens from the API.

SmolLM2 (via) New from Loubna Ben Allal and her research team at Hugging Face:

SmolLM2 is a family of compact language models available in three size: 135M, 360M, and 1.7B parameters. They are capable of solving a wide range of tasks while being lightweight enough to run on-device. [...]

It was trained on 11 trillion tokens using a diverse dataset combination: FineWeb-Edu, DCLM, The Stack, along with new mathematics and coding datasets that we curated and will release soon.

The model weights are released under an Apache 2 license. I've been trying these out using my llm-gguf plugin for LLM and my first impressions are really positive.

Here's a recipe to run a 1.7GB Q8 quantized model from lmstudio-community:

llm install llm-gguf

llm gguf download-model https://huggingface.co/lmstudio-community/SmolLM2-1.7B-Instruct-GGUF/resolve/main/SmolLM2-1.7B-Instruct-Q8_0.gguf -a smol17

llm chat -m smol17

Or at the other end of the scale, here's how to run the 138MB Q8 quantized 135M model:

llm gguf download-model https://huggingface.co/lmstudio-community/SmolLM2-135M-Instruct-GGUF/resolve/main/SmolLM2-135M-Instruct-Q8_0.gguf' -a smol135m

llm chat -m smol135m

The blog entry to accompany SmolLM2 should be coming soon, but in the meantime here's the entry from July introducing the first version: SmolLM - blazingly fast and remarkably powerful .

Un Ministral, des Ministraux (via) Two new models from Mistral: Ministral 3B and Ministral 8B - joining Mixtral, Pixtral, Codestral and Mathstral as weird naming variants on the Mistral theme.

These models set a new frontier in knowledge, commonsense, reasoning, function-calling, and efficiency in the sub-10B category, and can be used or tuned to a variety of uses, from orchestrating agentic workflows to creating specialist task workers. Both models support up to 128k context length (currently 32k on vLLM) and Ministral 8B has a special interleaved sliding-window attention pattern for faster and memory-efficient inference.

Mistral's own benchmarks look impressive, but it's hard to get excited about small on-device models with a non-commercial Mistral Research License (for the 8B) and a contact-us-for-pricing Mistral Commercial License (for the 8B and 3B), given the existence of the extremely high quality Llama 3.1 and 3.2 series of models.

These new models are also available through Mistral's la Plateforme API, priced at $0.1/million tokens (input and output) for the 8B and $0.04/million tokens for the 3B.

The latest release of my llm-mistral plugin for LLM adds aliases for the new models. Previously you could access them like this:

llm mistral refresh # To fetch new models

llm -m mistral/ministral-3b-latest "a poem about pelicans at the park"

llm -m mistral/ministral-8b-latest "a poem about a pelican in french"

With the latest plugin version you can do this:

llm install -U llm-mistral

llm -m ministral-8b "a poem about a pelican in french"

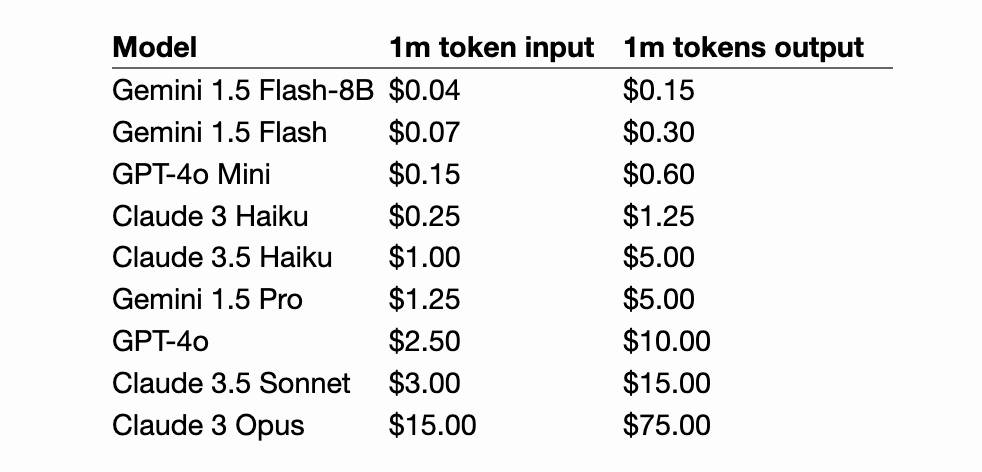

Gemini 1.5 Flash-8B is now production ready (via) Gemini 1.5 Flash-8B is "a smaller and faster variant of 1.5 Flash" - and is now released to production, at half the price of the 1.5 Flash model.

It's really, really cheap:

- $0.0375 per 1 million input tokens on prompts <128K

- $0.15 per 1 million output tokens on prompts <128K

- $0.01 per 1 million input tokens on cached prompts <128K

Prices are doubled for prompts longer than 128K.

I believe images are still charged at a flat rate of 258 tokens, which I think means a single non-cached image with Flash should cost 0.00097 cents - a number so tiny I'm doubting if I got the calculation right.

OpenAI's cheapest model remains GPT-4o mini, at $0.15/1M input - though that drops to half of that for reused prompt prefixes thanks to their new prompt caching feature (or by half if you use batches, though those can’t be combined with OpenAI prompt caching. Gemini also offer half-off for batched requests).

Anthropic's cheapest model is still Claude 3 Haiku at $0.25/M, though that drops to $0.03/M for cached tokens (if you configure them correctly).

I've released llm-gemini 0.2 with support for the new model:

llm install -U llm-gemini

llm keys set gemini

# Paste API key here

llm -m gemini-1.5-flash-8b-latest "say hi"

Llama 3.2. In further evidence that AI labs are terrible at naming things, Llama 3.2 is a huge upgrade to the Llama 3 series - they've released their first multi-modal vision models!

Today, we’re releasing Llama 3.2, which includes small and medium-sized vision LLMs (11B and 90B), and lightweight, text-only models (1B and 3B) that fit onto edge and mobile devices, including pre-trained and instruction-tuned versions.

The 1B and 3B text-only models are exciting too, with a 128,000 token context length and optimized for edge devices (Qualcomm and MediaTek hardware get called out specifically).

Meta partnered directly with Ollama to help with distribution, here's the Ollama blog post. They only support the two smaller text-only models at the moment - this command will get the 3B model (2GB):

ollama run llama3.2

And for the 1B model (a 1.3GB download):

ollama run llama3.2:1b

I had to first upgrade my Ollama by clicking on the icon in my macOS task tray and selecting "Restart to update".

The two vision models are coming to Ollama "very soon".

Once you have fetched the Ollama model you can access it from my LLM command-line tool like this:

pipx install llm

llm install llm-ollama

llm chat -m llama3.2:1b

I tried running my djp codebase through that tiny 1B model just now and got a surprisingly good result - by no means comprehensive, but way better than I would ever expect from a model of that size:

files-to-prompt **/*.py -c | llm -m llama3.2:1b --system 'describe this code'

Here's a portion of the output:

The first section defines several test functions using the

@djp.hookimpldecorator from the djp library. These hook implementations allow you to intercept and manipulate Django's behavior.

test_middleware_order: This function checks that the middleware order is correct by comparing theMIDDLEWAREsetting with a predefined list.test_middleware: This function tests various aspects of middleware:- It retrieves the response from the URL

/from-plugin/using theClientobject, which simulates a request to this view.- It checks that certain values are present in the response:

X-DJP-Middleware-AfterX-DJP-MiddlewareX-DJP-Middleware-Before[...]

I found the GGUF file that had been downloaded by Ollama in my ~/.ollama/models/blobs directory. The following command let me run that model directly in LLM using the llm-gguf plugin:

llm install llm-gguf

llm gguf register-model ~/.ollama/models/blobs/sha256-74701a8c35f6c8d9a4b91f3f3497643001d63e0c7a84e085bed452548fa88d45 -a llama321b

llm chat -m llama321b

Meta themselves claim impressive performance against other existing models:

Our evaluation suggests that the Llama 3.2 vision models are competitive with leading foundation models, Claude 3 Haiku and GPT4o-mini on image recognition and a range of visual understanding tasks. The 3B model outperforms the Gemma 2 2.6B and Phi 3.5-mini models on tasks such as following instructions, summarization, prompt rewriting, and tool-use, while the 1B is competitive with Gemma.

Here's the Llama 3.2 collection on Hugging Face. You need to accept the new Llama 3.2 Community License Agreement there in order to download those models.

You can try the four new models out via the Chatbot Arena - navigate to "Direct Chat" there and select them from the dropdown menu. You can upload images directly to the chat there to try out the vision features.

Updated production-ready Gemini models.

Two new models from Google Gemini today: gemini-1.5-pro-002 and gemini-1.5-flash-002. Their -latest aliases will update to these new models in "the next few days", and new -001 suffixes can be used to stick with the older models. The new models benchmark slightly better in various ways and should respond faster.

Flash continues to have a 1,048,576 input token and 8,192 output token limit. Pro is 2,097,152 input tokens.

Google also announced a significant price reduction for Pro, effective on the 1st of October. Inputs less than 128,000 tokens drop from $3.50/million to $1.25/million (above 128,000 tokens it's dropping from $7 to $5) and output costs drop from $10.50/million to $2.50/million ($21 down to $10 for the >128,000 case).

For comparison, GPT-4o is currently $5/m input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it's even cheaper.

Correction: I missed gpt-4o-2024-08-06 which is listed later on the OpenAI pricing page and priced at $2.50/m input and $10/m output. So the new Gemini 1.5 Pro prices are undercutting that.

Gemini has always offered finely grained safety filters - it sounds like those are now turned down to minimum by default, which is a welcome change:

For the models released today, the filters will not be applied by default so that developers can determine the configuration best suited for their use case.

Also interesting: they've tweaked the expected length of default responses:

For use cases like summarization, question answering, and extraction, the default output length of the updated models is ~5-20% shorter than previous models.

Notes on OpenAI’s new o1 chain-of-thought models

OpenAI released two major new preview models today: o1-preview and o1-mini (that mini one is not a preview)—previously rumored as having the codename “strawberry”. There’s a lot to understand about these models—they’re not as simple as the next step up from GPT-4o, instead introducing some major trade-offs in terms of cost and performance in exchange for improved “reasoning” capabilities.

Pixtral 12B. Mistral finally have a multi-modal (image + text) vision LLM!

I linked to their tweet, but there’s not much to see there - in now classic Mistral style they released the new model with an otherwise unlabeled link to a torrent download. A more useful link is mistral-community/pixtral-12b-240910 on Hugging Face, a 25GB “Unofficial Mistral Community” copy of the weights.

Pixtral was announced at Mistral’s AI Summit event in San Francisco today. It has 128,000 token context, is Apache 2.0 licensed and handles 1024x1024 pixel images. They claim it’s particularly good for OCR and information extraction. It’s not available on their La Platforme hosted API yet, but that’s coming soon.

A few more details can be found in the release notes for mistral-common 1.4.0. That’s their open source library of code for working with the models - it doesn’t actually run inference, but it includes the all-important tokenizer, which now includes three new special tokens: [IMG], [IMG_BREAK] and [IMG_END].

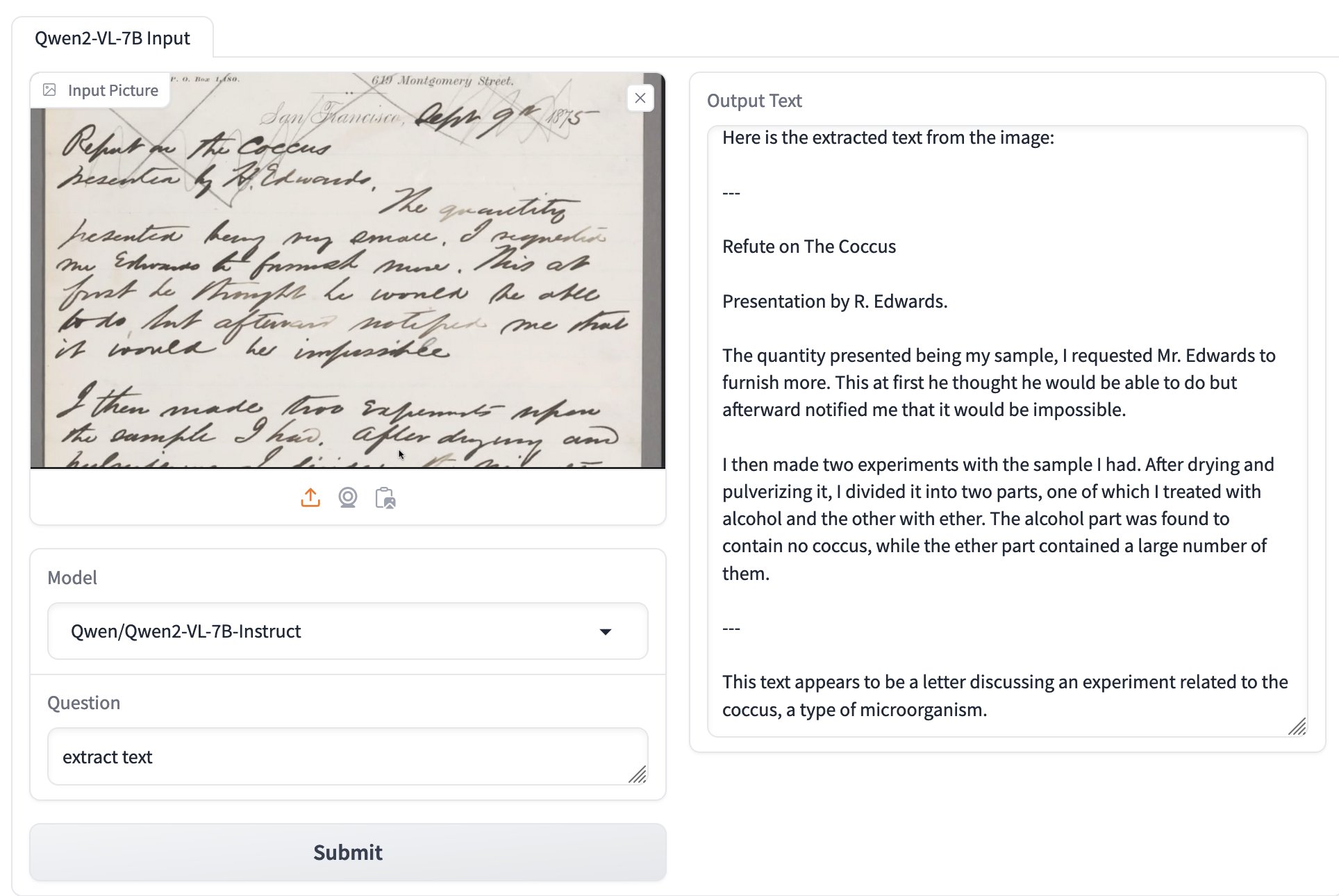

Qwen2-VL: To See the World More Clearly. Qwen is Alibaba Cloud's organization training LLMs. Their latest model is Qwen2-VL - a vision LLM - and it's getting some really positive buzz. Here's a r/LocalLLaMA thread about the model.

The original Qwen models were licensed under their custom Tongyi Qianwen license, but starting with Qwen2 on June 7th 2024 they switched to Apache 2.0, at least for their smaller models:

While Qwen2-72B as well as its instruction-tuned models still uses the original Qianwen License, all other models, including Qwen2-0.5B, Qwen2-1.5B, Qwen2-7B, and Qwen2-57B-A14B, turn to adopt Apache 2.0

Here's where things get odd: shortly before I first published this post the Qwen GitHub organization, and their GitHub pages hosted blog, both disappeared and returned 404s pages. I asked on Twitter but nobody seems to know what's happened to them.

Update: this was accidental and was resolved on 5th September.

The Qwen Hugging Face page is still up - it's just the GitHub organization that has mysteriously vanished.

Inspired by Dylan Freedman I tried the model using GanymedeNil/Qwen2-VL-7B on Hugging Face Spaces, and found that it was exceptionally good at extracting text from unruly handwriting:

The model apparently runs great on NVIDIA GPUs, and very slowly using the MPS PyTorch backend on Apple Silicon. Qwen previously released MLX builds of their non-vision Qwen2 models, so hopefully there will be an Apple Silicon optimized MLX model for Qwen2-VL soon as well.

Gemini Chat App. Google released three new Gemini models today: improved versions of Gemini 1.5 Pro and Gemini 1.5 Flash plus a new model, Gemini 1.5 Flash-8B, which is significantly faster (and will presumably be cheaper) than the regular Flash model.

The Flash-8B model is described in the Gemini 1.5 family of models paper in section 8:

By inheriting the same core architecture, optimizations, and data mixture refinements as its larger counterpart, Flash-8B demonstrates multimodal capabilities with support for context window exceeding 1 million tokens. This unique combination of speed, quality, and capabilities represents a step function leap in the domain of single-digit billion parameter models.

While Flash-8B’s smaller form factor necessarily leads to a reduction in quality compared to Flash and 1.5 Pro, it unlocks substantial benefits, particularly in terms of high throughput and extremely low latency. This translates to affordable and timely large-scale multimodal deployments, facilitating novel use cases previously deemed infeasible due to resource constraints.

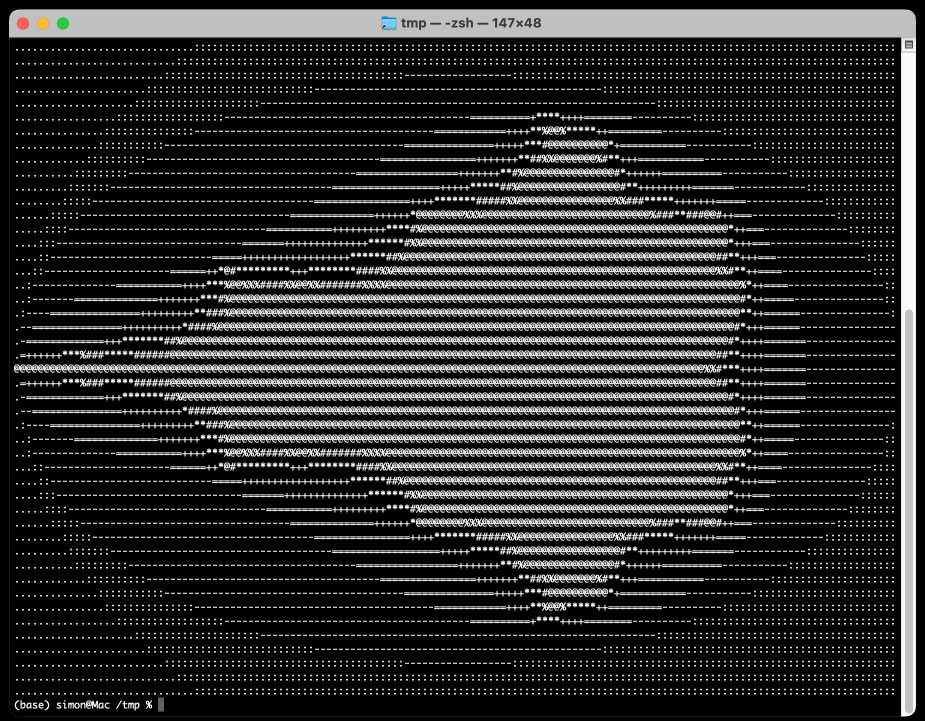

The new models are available in AI Studio, but since I built my own custom prompting tool against the Gemini CORS-enabled API the other day I figured I'd build a quick UI for these new models as well.

Building this with Claude 3.5 Sonnet took literally ten minutes from start to finish - you can see that from the timestamps in the conversation. Here's the deployed app and the finished code.

The feature I really wanted to build was streaming support. I started with this example code showing how to run streaming prompts in a Node.js application, then told Claude to figure out what the client-side code for that should look like based on a snippet from my bounding box interface hack. My starting prompt:

Build me a JavaScript app (no react) that I can use to chat with the Gemini model, using the above strategy for API key usage

I still keep hearing from people who are skeptical that AI-assisted programming like this has any value. It's honestly getting a little frustrating at this point - the gains for things like rapid prototyping are so self-evident now.

GPT-4o Long Output (via) "OpenAI is offering an experimental version of GPT-4o with a maximum of 64K output tokens per request."

It's a new model (for alpha testers only) called gpt-4o-64k-output-alpha that costs $6/million input tokens and $18/million output tokens.

That's a little bit more than GPT-4o ($5/$15) and a LOT more than GPT-4o mini ($0.15/$0.60).

Long output is primarily useful for data transformation use-cases - things like translating documents from one language into another, or extracting structured data from documents where almost every input token is needed in the output JSON.

Prior to this the longest output model I knew of was GPT-4o mini, at 16,000 tokens. Most of OpenAI's competitors still cap out at around 4,000 or 8,000.

Mistral Large 2 (via) The second release of a GPT-4 class open weights model in two days, after yesterday's Llama 3.1 405B.

The weights for this one are under Mistral's Research License, which "allows usage and modification for research and non-commercial usages" - so not as open as Llama 3.1. You can use it commercially via the Mistral paid API.

Mistral Large 2 is 123 billion parameters, "designed for single-node inference" (on a very expensive single-node!) and has a 128,000 token context window, the same size as Llama 3.1.

Notably, according to Mistral's own benchmarks it out-performs the much larger Llama 3.1 405B on their code and math benchmarks. They trained on a lot of code:

Following our experience with Codestral 22B and Codestral Mamba, we trained Mistral Large 2 on a very large proportion of code. Mistral Large 2 vastly outperforms the previous Mistral Large, and performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

They also invested effort in tool usage, multilingual support (across English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi) and reducing hallucinations:

One of the key focus areas during training was to minimize the model’s tendency to “hallucinate” or generate plausible-sounding but factually incorrect or irrelevant information. This was achieved by fine-tuning the model to be more cautious and discerning in its responses, ensuring that it provides reliable and accurate outputs.

Additionally, the new Mistral Large 2 is trained to acknowledge when it cannot find solutions or does not have sufficient information to provide a confident answer.

I went to update my llm-mistral plugin for LLM to support the new model and found that I didn't need to - that plugin already uses llm -m mistral-large to access the mistral-large-latest endpoint, and Mistral have updated that to point to the latest version of their Large model.

Ollama now have mistral-large quantized to 4 bit as a 69GB download.

Introducing Llama 3.1: Our most capable models to date. We've been waiting for the largest release of the Llama 3 model for a few months, and now we're getting a whole new model family instead.

Meta are calling Llama 3.1 405B "the first frontier-level open source AI model" and it really is benchmarking in that GPT-4+ class, competitive with both GPT-4o and Claude 3.5 Sonnet.

I'm equally excited by the new 8B and 70B 3.1 models - both of which now support a 128,000 token context and benchmark significantly higher than their Llama 3 equivalents. Same-sized models getting more powerful and capable a very reassuring trend. I expect the 8B model (or variants of it) to run comfortably on an array of consumer hardware, and I've run a 70B model on a 64GB M2 in the past.

The 405B model can at least be run on a single server-class node:

To support large-scale production inference for a model at the scale of the 405B, we quantized our models from 16-bit (BF16) to 8-bit (FP8) numerics, effectively lowering the compute requirements needed and allowing the model to run within a single server node.

Meta also made a significant change to the license:

We’ve also updated our license to allow developers to use the outputs from Llama models — including 405B — to improve other models for the first time.

We’re excited about how this will enable new advancements in the field through synthetic data generation and model distillation workflows, capabilities that have never been achieved at this scale in open source.

I'm really pleased to see this. Using models to help improve other models has been a crucial technique in LLM research for over a year now, especially for fine-tuned community models release on Hugging Face. Researchers have mostly been ignoring this restriction, so it's reassuring to see the uncertainty around that finally cleared up.

Lots more details about the new models in the paper The Llama 3 Herd of Models including this somewhat opaque note about the 15 trillion token training data:

Our final data mix contains roughly 50% of tokens corresponding to general knowledge, 25% of mathematical and reasoning tokens, 17% code tokens, and 8% multilingual tokens.

Update: I got the Llama 3.1 8B Instruct model working with my LLM tool via a new plugin, llm-gguf.

GPT-4o mini. I've been complaining about how under-powered GPT 3.5 is for the price for a while now (I made fun of it in a keynote a few weeks ago).

GPT-4o mini is exactly what I've been looking forward to.

It supports 128,000 input tokens (both images and text) and an impressive 16,000 output tokens. Most other models are still ~4,000, and Claude 3.5 Sonnet got an upgrade to 8,192 just a few days ago. This makes it a good fit for translation and transformation tasks where the expected output more closely matches the size of the input.

OpenAI show benchmarks that have it out-performing Claude 3 Haiku and Gemini 1.5 Flash, the two previous cheapest-best models.

GPT-4o mini is 15 cents per million input tokens and 60 cents per million output tokens - a 60% discount on GPT-3.5, and cheaper than Claude 3 Haiku's 25c/125c and Gemini 1.5 Flash's 35c/70c. Or you can use the OpenAI batch API for 50% off again, in exchange for up-to-24-hours of delay in getting the results.

It's also worth comparing these prices with GPT-4o's: at $5/million input and $15/million output GPT-4o mini is 33x cheaper for input and 25x cheaper for output!

OpenAI point out that "the cost per token of GPT-4o mini has dropped by 99% since text-davinci-003, a less capable model introduced in 2022."

One catch: weirdly, the price for image inputs is the same for both GPT-4o and GPT-4o mini - Romain Huet says:

The dollar price per image is the same for GPT-4o and GPT-4o mini. To maintain this, GPT-4o mini uses more tokens per image.

Also notable:

GPT-4o mini in the API is the first model to apply our instruction hierarchy method, which helps to improve the model's ability to resist jailbreaks, prompt injections, and system prompt extractions.

My hunch is that this still won't 100% solve the security implications of prompt injection: I imagine creative enough attackers will still find ways to subvert system instructions, and the linked paper itself concludes "Finally, our current models are likely still vulnerable to powerful adversarial attacks". It could well help make accidental prompt injection a lot less common though, which is certainly a worthwhile improvement.

Mistral NeMo. Released by Mistral today: "Our new best small model. A state-of-the-art 12B model with 128k context length, built in collaboration with NVIDIA, and released under the Apache 2.0 license."

Nice to see Mistral use Apache 2.0 for this, unlike their Codestral 22B release - though Codestral Mamba was Apache 2.0 as well.

Mistral's own benchmarks put NeMo slightly ahead of the smaller (but same general weight class) Gemma 2 9B and Llama 3 8B models.

It's both multi-lingual and trained for tool usage:

The model is designed for global, multilingual applications. It is trained on function calling, has a large context window, and is particularly strong in English, French, German, Spanish, Italian, Portuguese, Chinese, Japanese, Korean, Arabic, and Hindi.

Part of this is down to the new Tekken tokenizer, which is 30% more efficient at representing both source code and most of the above listed languages.

You can try it out via Mistral's API using llm-mistral like this:

pipx install llm

llm install llm-mistral

llm keys set mistral

# paste La Plateforme API key here

llm mistral refresh # if you installed the plugin before

llm -m mistral/open-mistral-nemo 'Rave about pelicans in French'

Codestral Mamba. New 7B parameter LLM from Mistral, released today. Codestral Mamba is "a Mamba2 language model specialised in code generation, available under an Apache 2.0 license".

This the first model from Mistral that uses the Mamba architecture, as opposed to the much more common Transformers architecture. Mistral say that Mamba can offer faster responses irrespective of input length which makes it ideal for code auto-completion, hence why they chose to specialise the model in code.

It's available to run locally with the mistral-inference GPU library, and Mistral say "For local inference, keep an eye out for support in llama.cpp" (relevant issue).

It's also available through Mistral's La Plateforme API. I just shipped llm-mistral 0.4 adding a llm -m codestral-mamba "prompt goes here" default alias for the new model.

Also released today: MathΣtral, a 7B Apache 2 licensed model "designed for math reasoning and scientific discovery", with a 32,000 context window. This one isn't available through their API yet, but the weights are available on Hugging Face.

Claude 3.5 Sonnet. Anthropic released a new model this morning, and I think it's likely now the single best available LLM. Claude 3 Opus was already mostly on-par with GPT-4o, and the new 3.5 Sonnet scores higher than Opus on almost all of Anthropic's internal evals.

It's also twice the speed and one fifth of the price of Opus (it's the same price as the previous Claude 3 Sonnet). To compare:

- gpt-4o: $5/million input tokens and $15/million output

- Claude 3.5 Sonnet: $3/million input, $15/million output

- Claude 3 Opus: $15/million input, $75/million output

Similar to Claude 3 Haiku then, which both under-cuts and out-performs OpenAI's GPT-3.5 model.

In addition to the new model, Anthropic also added a "artifacts" feature to their Claude web interface. The most exciting part of this is that any of the Claude models can now build and then render web pages and SPAs, directly in the Claude interface.

This means you can prompt them to e.g. "Build me a web app that teaches me about mandelbrot fractals, with interactive widgets" and they'll do exactly that - I tried that prompt on Claude 3.5 Sonnet earlier and the results were spectacular (video demo).

An unsurprising note at the end of the post:

To complete the Claude 3.5 model family, we’ll be releasing Claude 3.5 Haiku and Claude 3.5 Opus later this year.

If the pricing stays consistent with Claude 3, Claude 3.5 Haiku is going to be a very exciting model indeed.

Codestral: Hello, World! Mistral's first code-specific model, trained to be "fluent" in 80 different programming languages.

The weights are released under a new Mistral AI Non-Production License, which is extremely restrictive:

3.2. Usage Limitation

- You shall only use the Mistral Models and Derivatives (whether or not created by Mistral AI) for testing, research, Personal, or evaluation purposes in Non-Production Environments;

- Subject to the foregoing, You shall not supply the Mistral Models or Derivatives in the course of a commercial activity, whether in return for payment or free of charge, in any medium or form, including but not limited to through a hosted or managed service (e.g. SaaS, cloud instances, etc.), or behind a software layer.

To Mistral's credit at least they don't misapply the term "open source" in their marketing around this model - they consistently use the term "open-weights" instead. They also state that they plan to continue using Apache 2 for other model releases.

Codestral can be used commercially when accessed via their paid API.

Golden Gate Claude. This is absurdly fun and weird. Anthropic's recent LLM interpretability research gave them the ability to locate features within the opaque blob of their Sonnet model and boost the weight of those features during inference.

For a limited time only they're serving a "Golden Gate Claude" model which has the feature for the Golden Gate Bridge boosted. No matter what question you ask it the Golden Gate Bridge is likely to be involved in the answer in some way. Click the little bridge icon in the Claude UI to give it a go.

I asked for names for a pet pelican and the first one it offered was this:

Golden Gate - This iconic bridge name would be a fitting moniker for the pelican with its striking orange color and beautiful suspension cables.

And from a recipe for chocolate covered pretzels:

Gently wipe any fog away and pour the warm chocolate mixture over the bridge/brick combination. Allow to air dry, and the bridge will remain accessible for pedestrians to walk along it.

UPDATE: I think the experimental model is no longer available, approximately 24 hours after release. We'll miss you, Golden Gate Claude.

New Phi-3 models: small, medium and vision. I couldn't find a good official announcement post to link to about these three newly released models, but this post on LocalLLaMA on Reddit has them in one place: Phi-3 small (7B), Phi-3 medium (14B) and Phi-3 vision (4.2B) (the previously released model was Phi-3 mini - 3.8B).

You can try out the vision model directly here, no login required. It didn't do a great job with my first test image though, hallucinating the text.

As with Mini these are all released under an MIT license.

UPDATE: Here's a page from the newly published Phi-3 Cookbook describing the models in the family.

llm-gemini 0.1a4.

A new release of my llm-gemini plugin adding support for the Gemini 1.5 Flash model that was revealed this morning at Google I/O.

I'm excited about this new model because of its low price. Flash is $0.35 per 1 million tokens for prompts up to 128K token and $0.70 per 1 million tokens for longer prompts - up to a million tokens now and potentially two million at some point in the future. That's 1/10th of the price of Gemini Pro 1.5, cheaper than GPT 3.5 ($0.50/million) and only a little more expensive than Claude 3 Haiku ($0.25/million).

Snowflake Arctic Cookbook. Today's big model release was Snowflake Arctic, an enormous 480B model with a 128×3.66B MoE (Mixture of Experts) architecture. It's Apache 2 licensed and Snowflake state that "in addition, we are also open sourcing all of our data recipes and research insights."

The research insights will be shared on this Arctic Cookbook blog - which currently has two articles covering their MoE architecture and describing how they optimized their training run in great detail.

They also list dozens of "coming soon" posts, which should be pretty interesting given how much depth they've provided in their writing so far.

Options for accessing Llama 3 from the terminal using LLM

Llama 3 was released on Thursday. Early indications are that it’s now the best available openly licensed model—Llama 3 70b Instruct has taken joint 5th place on the LMSYS arena leaderboard, behind only Claude 3 Opus and some GPT-4s and sharing 5th place with Gemini Pro and Claude 3 Sonnet. But unlike those other models Llama 3 70b is weights available and can even be run on a (high end) laptop!

[... 1,962 words]Three major LLM releases in 24 hours (plus weeknotes)

I’m a bit behind on my weeknotes, so there’s a lot to cover here. But first... a review of the last 24 hours of Large Language Model news. All times are in US Pacific on April 9th 2024.

[... 1,401 words]Gemini 1.5 Pro public preview (via) Huge release from Google: Gemini 1.5 Pro—the GPT-4 competitive model with the incredible 1 million token context length—is now available without a waitlist in 180+ countries (including the USA but not Europe or the UK as far as I can tell)... and the API is free for 50 requests/day (rate limited to 2/minute).

Beyond that you’ll need to pay—$7/million input tokens and $21/million output tokens, which is slightly less than GPT-4 Turbo and a little more than Claude 3 Sonnet.

They also announced audio input (up to 9.5 hours in a single prompt), system instruction support and a new JSON mode.

Mistral tweet a magnet link for mixtral-8x22b. Another open model release from Mistral using their now standard operating procedure of tweeting out a raw torrent link.

This one is an 8x22B Mixture of Experts model. Their previous most powerful openly licensed release was Mixtral 8x7B, so this one is a whole lot bigger (a 281GB download)—and apparently has a 65,536 context length, at least according to initial rumors on Twitter.

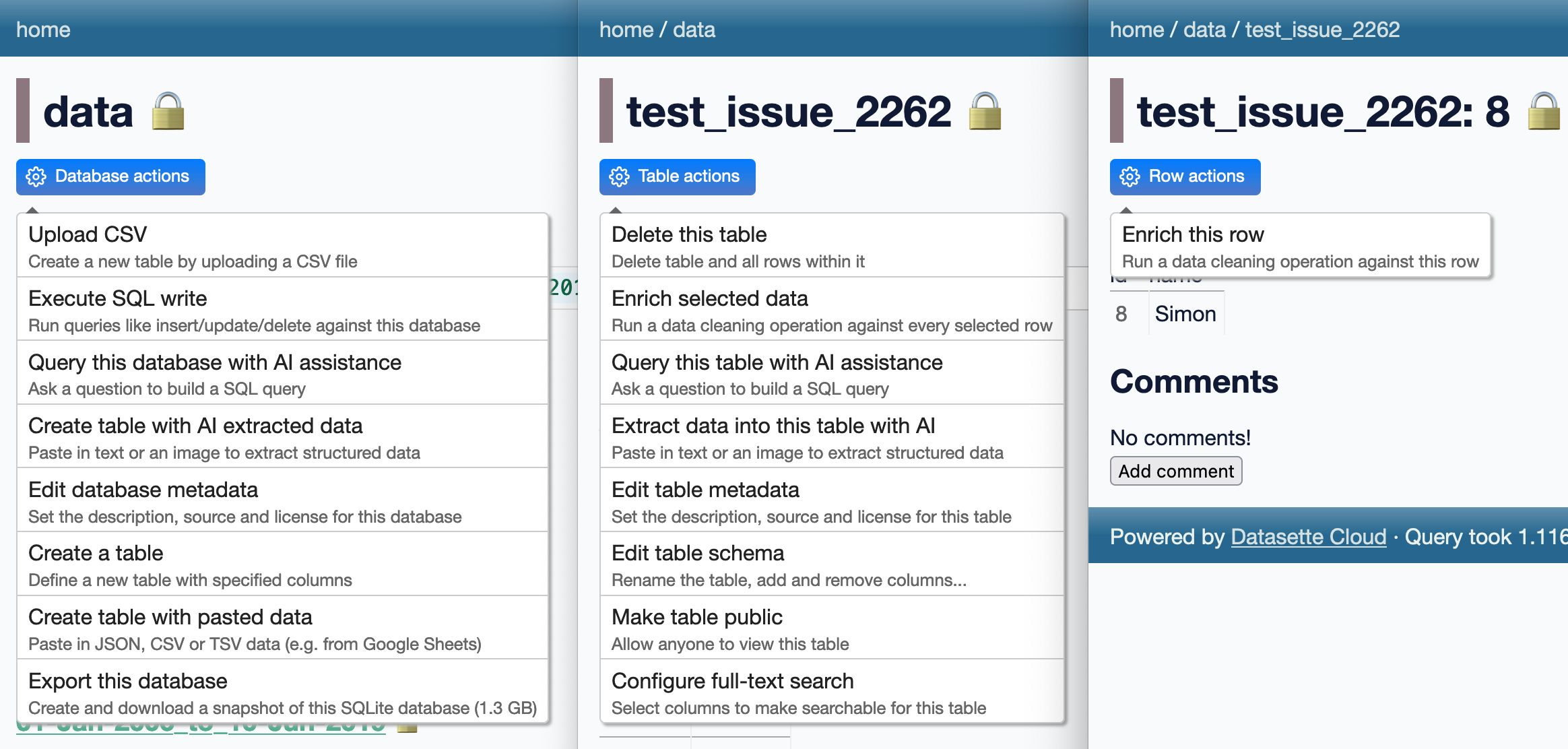

llm-command-r. Cohere released Command R Plus today—an open weights (non commercial/research only) 104 billion parameter LLM, a big step up from their previous 35 billion Command R model.

Both models are fine-tuned for both tool use and RAG. The commercial API has features to expose this functionality, including a web-search connector which lets the model run web searches as part of answering the prompt and return documents and citations as part of the JSON response.

I released a new plugin for my LLM command line tool this morning adding support for the Command R models.

In addition to the two models it also adds a custom command for running prompts with web search enabled and listing the referenced documents.

Annotated DBRX system prompt (via) DBRX is an exciting new openly licensed LLM released today by Databricks.

They haven't (yet) disclosed what was in the training data for it.

The source code for their Instruct demo has an annotated version of a system prompt, which includes this:

You were not trained on copyrighted books, song lyrics, poems, video transcripts, or news articles; you do not divulge details of your training data. You do not provide song lyrics, poems, or news articles and instead refer the user to find them online or in a store.

The comment that precedes that text is illuminating:

The following is likely not entirely accurate, but the model tends to think that everything it knows about was in its training data, which it was not (sometimes only references were). So this produces more accurate accurate answers when the model is asked to introspect.