563 posts tagged “llm”

LLM is my command-line tool for running prompts against Large Language Models.

2025

MiniMax M2 & Agent: Ingenious in Simplicity. MiniMax M2 was released on Monday 27th October by MiniMax, a Chinese AI lab founded in December 2021.

It's a very promising model. Their self-reported benchmark scores show it as comparable to Claude Sonnet 4, and Artificial Analysis are ranking it as the best currently available open weight model according to their intelligence score:

MiniMax’s M2 achieves a new all-time-high Intelligence Index score for an open weights model and offers impressive efficiency with only 10B active parameters (200B total). [...]

The model’s strengths include tool use and instruction following (as shown by Tau2 Bench and IFBench). As such, while M2 likely excels at agentic use cases it may underperform other open weights leaders such as DeepSeek V3.2 and Qwen3 235B at some generalist tasks. This is in line with a number of recent open weights model releases from Chinese AI labs which focus on agentic capabilities, likely pointing to a heavy post-training emphasis on RL.

The size is particularly significant: the model weights are 230GB on Hugging Face, significantly smaller than other high performing open weight models. That's small enough to run on a 256GB Mac Studio, and the MLX community have that working already.

MiniMax offer their own API, and recommend using their Anthropic-compatible endpoint and the official Anthropic SDKs to access it. MiniMax Head of Engineering Skyler Miao provided some background on that:

M2 is a agentic thinking model, it do interleaved thinking like sonnet 4.5, which means every response will contain its thought content. Its very important for M2 to keep the chain of thought. So we must make sure the history thought passed back to the model. Anthropic API support it for sure, as sonnet needs it as well. OpenAI only support it in their new Response API, no support for in ChatCompletion.
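A minimal sketch of what "passing the history thought back" looks like, assuming conversation history in the Anthropic Messages content-block shape. The helper names and the `get_weather` tool are hypothetical and the signature value is a placeholder. The only point is that the assistant turn is stored with its thinking block intact rather than reduced to text.

```python
# Sketch only: block shapes follow the Anthropic Messages content-block
# convention; helper names and the get_weather tool are hypothetical.

def append_assistant_turn(history, content_blocks):
    """Return a new history with the full assistant turn, thinking included."""
    return history + [{"role": "assistant", "content": list(content_blocks)}]


def strip_thinking(content_blocks):
    """What a text-only history would keep: everything except thinking blocks."""
    return [block for block in content_blocks if block["type"] != "thinking"]


history = [{"role": "user", "content": "What's the weather in Paris?"}]

assistant_blocks = [
    # "signature" is a placeholder value here, not a real signature.
    {"type": "thinking", "thinking": "I should call the weather tool.", "signature": "..."},
    {"type": "tool_use", "id": "toolu_1", "name": "get_weather", "input": {"city": "Paris"}},
]

# Interleaved thinking: keep the thought so the model sees it on the next request.
history = append_assistant_turn(history, assistant_blocks)
history.append({
    "role": "user",
    "content": [{"type": "tool_result", "tool_use_id": "toolu_1", "content": "18C, sunny"}],
})

kept = [block["type"] for block in history[1]["content"]]
lost = [block["type"] for block in strip_thinking(assistant_blocks)]
print("kept in history:", kept)
print("after stripping:", lost)
```

An API that only round-trips plain assistant text behaves like `strip_thinking` here, which is the limitation the quote describes.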

MiniMax are offering the new model via their API for free until November 7th, after which the cost will be $0.30/million input tokens and $1.20/million output tokens, similar in price to Gemini 2.5 Flash and GPT-5 Mini. See the price comparison on my llm-prices.com site.

I released a new plugin for LLM called llm-minimax providing support for M2 via the MiniMax API:

llm install llm-minimax

llm keys set minimax

# Paste key here

llm -m m2 -o max_tokens 10000 "Generate an SVG of a pelican riding a bicycle"

Here's the result:

51 input, 4,017 output. At $0.30/m input and $1.20/m output that pelican would cost 0.4836 cents - less than half a cent.
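The arithmetic behind that figure, as a small sketch. The function name `cost_in_cents` is made up for illustration; the token counts and prices are the ones quoted above.

```python
def cost_in_cents(input_tokens, output_tokens, input_price, output_price):
    """Prices are US dollars per million tokens; the result is in cents."""
    dollars = (input_tokens * input_price + output_tokens * output_price) / 1_000_000
    return dollars * 100


pelican = cost_in_cents(51, 4017, input_price=0.30, output_price=1.20)
print(f"{pelican:.4f} cents")
```

Almost all of the cost comes from the 4,017 output tokens; the 51 input tokens contribute well under a hundredth of a cent.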

This is the first plugin I've written for an Anthropic-API-compatible model. I released llm-anthropic 0.21 first adding the ability to customize the base_url parameter when using that model class. This meant the new plugin was less than 30 lines of Python.

Introducing Claude Haiku 4.5 (via) Anthropic released Claude Haiku 4.5 today, the cheapest member of the Claude 4.5 family that started with Sonnet 4.5 a couple of weeks ago.

It's priced at $1/million input tokens and $5/million output tokens, slightly more expensive than Haiku 3.5 ($0.80/$4) and a lot more expensive than the original Claude 3 Haiku ($0.25/$1.25), both of which remain available at those prices.

It's a third of the price of Sonnet 4 and Sonnet 4.5 (both $3/$15) which is notable because Anthropic's benchmarks put it in a similar space to that older Sonnet 4 model. As they put it:

What was recently at the frontier is now cheaper and faster. Five months ago, Claude Sonnet 4 was a state-of-the-art model. Today, Claude Haiku 4.5 gives you similar levels of coding performance but at one-third the cost and more than twice the speed.

I've been hoping to see Anthropic release a fast, inexpensive model that's price competitive with the cheapest models from OpenAI and Gemini, currently $0.05/$0.40 (GPT-5-Nano) and $0.075/$0.30 (Gemini 2.0 Flash Lite). Haiku 4.5 certainly isn't that. It looks like they're continuing to focus squarely on the "great at code" part of the market.

The new Haiku is the first Haiku model to support reasoning. It sports a 200,000 token context window, 64,000 maximum output (up from just 8,192 for Haiku 3.5) and a "reliable knowledge cutoff" of February 2025, one month later than the January 2025 date for Sonnet 4 and 4.5 and Opus 4 and 4.1.

Something that caught my eye in the accompanying system card was this note about context length:

For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much context-window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic “laziness” (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).

I've added the new price to llm-prices.com, released llm-anthropic 0.20 with the new model and updated my Haiku-from-your-webcam demo (source) to use Haiku 4.5 as well.

Here's llm -m claude-haiku-4.5 'Generate an SVG of a pelican riding a bicycle' (transcript).

18 input tokens and 1513 output tokens = 0.7583 cents.
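For a sense of scale, here is a sketch that prices those same token counts at each of the Claude price points quoted earlier in this post. This is hypothetical for every model except Haiku 4.5, since other models would produce a different number of output tokens for the same prompt.

```python
# Dollars per million tokens (input, output), as quoted in this post.
PRICES = {
    "Claude 3 Haiku": (0.25, 1.25),
    "Claude Haiku 3.5": (0.80, 4.00),
    "Claude Haiku 4.5": (1.00, 5.00),
    "Claude Sonnet 4.5": (3.00, 15.00),
}

INPUT_TOKENS, OUTPUT_TOKENS = 18, 1513  # from the Haiku 4.5 pelican run


def cents(input_price, output_price):
    """Cost in cents of the pelican run at the given per-million prices."""
    return (INPUT_TOKENS * input_price + OUTPUT_TOKENS * output_price) / 1_000_000 * 100


costs = {name: cents(*price) for name, price in PRICES.items()}
for name, value in costs.items():
    print(f"{name}: {value:.4f} cents")
```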

Two new models from Chinese AI labs in the past few days. I tried them both out using llm-openrouter:

DeepSeek-V3.2-Exp from DeepSeek. Announcement, Tech Report, Hugging Face (690GB, MIT license).

As an intermediate step toward our next-generation architecture, V3.2-Exp builds upon V3.1-Terminus by introducing DeepSeek Sparse Attention—a sparse attention mechanism designed to explore and validate optimizations for training and inference efficiency in long-context scenarios.

This one felt very slow when I accessed it via OpenRouter - I probably got routed to one of the slower providers. Here's the pelican:

GLM-4.6 from Z.ai. Announcement, Hugging Face (714GB, MIT license).

The context window has been expanded from 128K to 200K tokens [...] higher scores on code benchmarks [...] GLM-4.6 exhibits stronger performance in tool using and search-based agents.

Here's the pelican for that:

Claude Sonnet 4.5 is probably the “best coding model in the world” (at least for now)

Anthropic released Claude Sonnet 4.5 today, with a very bold set of claims:

[... 1,205 words]

Improved Gemini 2.5 Flash and Flash-Lite (via) Two new preview models from Google - updates to their fast and inexpensive Flash and Flash Lite families:

The latest version of Gemini 2.5 Flash-Lite was trained and built based on three key themes:

- Better instruction following: The model is significantly better at following complex instructions and system prompts.

- Reduced verbosity: It now produces more concise answers, a key factor in reducing token costs and latency for high-throughput applications (see charts above).

- Stronger multimodal & translation capabilities: This update features more accurate audio transcription, better image understanding, and improved translation quality.

[...]

This latest 2.5 Flash model comes with improvements in two key areas we heard consistent feedback on:

- Better agentic tool use: We've improved how the model uses tools, leading to better performance in more complex, agentic and multi-step applications. This model shows noticeable improvements on key agentic benchmarks, including a 5% gain on SWE-Bench Verified, compared to our last release (48.9% → 54%).

- More efficient: With thinking on, the model is now significantly more cost-efficient—achieving higher quality outputs while using fewer tokens, reducing latency and cost (see charts above).

They also added two new convenience model IDs: gemini-flash-latest and gemini-flash-lite-latest, which will always resolve to the most recent model in that family.

I released llm-gemini 0.26 adding support for the new models and new aliases. I also used the response.set_resolved_model() method added in LLM 0.27 to ensure that the correct model ID would be recorded for those -latest uses.

llm install -U llm-gemini

Both of these models support optional reasoning tokens. I had them draw me pelicans riding bicycles in both thinking and non-thinking mode, using commands that looked like this:

llm -m gemini-2.5-flash-preview-09-2025 -o thinking_budget 4000 "Generate an SVG of a pelican riding a bicycle"

I then got each model to describe the image it had drawn using commands like this:

llm -a https://static.simonwillison.net/static/2025/gemini-2.5-flash-preview-09-2025-thinking.png -m gemini-2.5-flash-preview-09-2025 -o thinking_budget 2000 'Detailed single line alt text for this image'

gemini-2.5-flash-preview-09-2025-thinking

A minimalist stick figure graphic depicts a person with a white oval body and a dot head cycling a gray bicycle, carrying a large, bright yellow rectangular box resting high on their back.

gemini-2.5-flash-preview-09-2025

A simple cartoon drawing of a pelican riding a bicycle, with the text "A Pelican Riding a Bicycle" above it.

gemini-2.5-flash-lite-preview-09-2025-thinking

A quirky, simplified cartoon illustration of a white bird with a round body, black eye, and bright yellow beak, sitting astride a dark gray, two-wheeled vehicle with its peach-colored feet dangling below.

gemini-2.5-flash-lite-preview-09-2025

A minimalist, side-profile illustration of a stylized yellow chick or bird character riding a dark-wheeled vehicle on a green strip against a white background.

Artificial Analysis posted a detailed review, including these interesting notes about reasoning efficiency and speed:

- In reasoning mode, Gemini 2.5 Flash and Flash-Lite Preview 09-2025 are more token-efficient, using fewer output tokens than their predecessors to run the Artificial Analysis Intelligence Index. Gemini 2.5 Flash-Lite Preview 09-2025 uses 50% fewer output tokens than its predecessor, while Gemini 2.5 Flash Preview 09-2025 uses 24% fewer output tokens.

- Google Gemini 2.5 Flash-Lite Preview 09-2025 (Reasoning) is ~40% faster than the prior July release, delivering ~887 output tokens/s on Google AI Studio in our API endpoint performance benchmarking. This makes the new Gemini 2.5 Flash-Lite the fastest proprietary model we have benchmarked on the Artificial Analysis website

llm-openrouter 0.5. New release of my LLM plugin for accessing models made available via OpenRouter. The release notes in full:

- Support for tool calling. Thanks, James Sanford. #43

- Support for reasoning options, for example llm -m openrouter/openai/gpt-5 'prove dogs exist' -o reasoning_effort medium. #45

Tool calling is a really big deal, as it means you can now use the plugin to try out tools (and build agents, if you like) against any of the 179 tool-enabled models on that platform:

llm install llm-openrouter

llm keys set openrouter

# Paste key here

llm models --tools | grep 'OpenRouter:' | wc -l

# Outputs 179

Quite a few of the models hosted on OpenRouter can be accessed for free. Here's a tool-usage example using the llm-tools-datasette plugin against the new Grok 4 Fast model:

llm install llm-tools-datasette

llm -m openrouter/x-ai/grok-4-fast:free -T 'Datasette("https://datasette.io/content")' 'Count available plugins'

Outputs:

There are 154 available plugins.

The output of llm logs -cu shows the tool calls and SQL queries it executed to get that result.

Grok 4 Fast. New hosted vision-enabled reasoning model from xAI that's designed to be fast and extremely competitive on price. It has a 2 million token context window and "was trained end-to-end with tool-use reinforcement learning".

It's priced at $0.20/million input tokens and $0.50/million output tokens. That's 15x less than Grok 4 for input and 30x less for output (Grok 4 is $3/million input and $15/million output). That puts it cheaper than GPT-5 mini and Gemini 2.5 Flash on llm-prices.com.
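The price multiples can be checked directly from the quoted figures. A quick sketch, which shows that the input and output ratios are not the same:

```python
# Dollars per million tokens, as quoted above.
grok_4 = {"input": 3.00, "output": 15.00}
grok_4_fast = {"input": 0.20, "output": 0.50}

# How many times cheaper Grok 4 Fast is, per token type.
ratios = {kind: round(grok_4[kind] / grok_4_fast[kind], 2) for kind in grok_4}
for kind, ratio in ratios.items():
    print(f"{kind}: {ratio:g}x cheaper")
```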

The same model weights handle reasoning and non-reasoning based on a parameter passed to the model.

I've been trying it out via my updated llm-openrouter plugin, since Grok 4 Fast is available for free on OpenRouter for a limited period.

Here's output from the non-reasoning model. This actually output an invalid SVG - I had to make a tiny manual tweak to the XML to get it to render.

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled false

(I initially ran this without that -o reasoning_enabled false flag, but then I saw that OpenRouter enable reasoning by default for that model. Here's my previous invalid result.)

And the reasoning model:

llm -m openrouter/x-ai/grok-4-fast:free "Generate an SVG of a pelican riding a bicycle" -o reasoning_enabled true

In related news, the New York Times had a story a couple of days ago about Elon's recent focus on xAI: Since Leaving Washington, Elon Musk Has Been All In on His A.I. Company.

Qwen3-Next-80B-A3B. Qwen announced two new models via their Twitter account (and here's their blog): Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking.

They make some big claims on performance:

- Qwen3-Next-80B-A3B-Instruct approaches our 235B flagship.

- Qwen3-Next-80B-A3B-Thinking outperforms Gemini-2.5-Flash-Thinking.

The name "80B-A3B" indicates 80 billion parameters of which only 3 billion are active at a time. You still need to have enough GPU-accessible RAM to hold all 80 billion in memory at once but only 3 billion will be used for each round of inference, which provides a significant speedup in responding to prompts.

More details from their tweet:

- 80B params, but only 3B activated per token → 10x cheaper training, 10x faster inference than Qwen3-32B.(esp. @ 32K+ context!)

- Hybrid Architecture: Gated DeltaNet + Gated Attention → best of speed & recall

- Ultra-sparse MoE: 512 experts, 10 routed + 1 shared

- Multi-Token Prediction → turbo-charged speculative decoding

- Beats Qwen3-32B in perf, rivals Qwen3-235B in reasoning & long-context
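To put numbers on "only 3B activated per token", here is a toy sketch. The parameter and expert counts come from the post and tweet above; the router is a generic top-k selection over random scores, which is an assumption for illustration and not Qwen's actual routing code.

```python
import heapq
import random

TOTAL_PARAMS_B, ACTIVE_PARAMS_B = 80, 3
NUM_EXPERTS, ROUTED_PER_TOKEN = 512, 10

print(f"Active parameters per token: {ACTIVE_PARAMS_B / TOTAL_PARAMS_B:.2%}")
print(f"Routed experts used per token: {ROUTED_PER_TOKEN / NUM_EXPERTS:.2%}")


def route(scores, k=ROUTED_PER_TOKEN):
    """Toy top-k router: return the indices of the k highest-scoring experts."""
    return sorted(heapq.nlargest(k, range(len(scores)), key=scores.__getitem__))


random.seed(0)  # fixed seed so the toy example is repeatable
scores = [random.random() for _ in range(NUM_EXPERTS)]  # stand-in for router logits
chosen = route(scores)
print(len(chosen), "of", NUM_EXPERTS, "routed experts selected for this token")
```

Every expert still has to sit in memory because a different token may pick a different set, which is why the full 80 billion parameters need to be loaded even though each token touches a small fraction of them.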

The models on Hugging Face are around 150GB each so I decided to try them out via OpenRouter rather than on my own laptop (Thinking, Instruct).

I used my llm-openrouter plugin. I installed it like this:

llm install llm-openrouter

llm keys set openrouter

# paste key here

Then found the model IDs with this command:

llm models -q next

Which output:

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-thinking

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-instruct

I have an LLM prompt template saved called pelican-svg which I created like this:

llm "Generate an SVG of a pelican riding a bicycle" --save pelican-svg

This means I can run my pelican benchmark like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-thinking

Or like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-instruct

Here's the thinking model output (exported with llm logs -c | pbcopy after I ran the prompt):

I enjoyed the "Whimsical style with smooth curves and friendly proportions (no anatomical accuracy needed for bicycle riding!)" note in the transcript.

The instruct (non-reasoning) model gave me this:

"🐧🦩 Who needs legs!?" indeed! I like that penguin-flamingo emoji sequence it's decided on for pelicans.

Google Gemini URL Context (via) New feature in the Gemini API: you can now enable a url_context tool which the models can use to request the contents of URLs as part of replying to a prompt.

I released llm-gemini 0.25 with a new -o url_context 1 option adding support for this feature. You can try it out like this:

llm install -U llm-gemini

llm keys set gemini # If you need to set an API key

llm -m gemini-2.5-flash -o url_context 1 'Latest headline on simonwillison.net'

Tokens from the fetched content are charged as input tokens. Use llm logs -c --usage to see that token count:

# 2025-08-18T23:52:46 conversation: 01k2zsk86pyp8p5v7py38pg3ge id: 01k2zsk17k1d03veax49532zs2

Model: **gemini/gemini-2.5-flash**

## Prompt

Latest headline on simonwillison.net

## Response

The latest headline on simonwillison.net as of August 17, 2025, is "TIL: Running a gpt-oss eval suite against LM Studio on a Mac.".

## Token usage

9,613 input, 87 output, {"candidatesTokenCount": 57, "promptTokensDetails": [{"modality": "TEXT", "tokenCount": 10}], "toolUsePromptTokenCount": 9603, "toolUsePromptTokensDetails": [{"modality": "TEXT", "tokenCount": 9603}], "thoughtsTokenCount": 30}
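The totals in that usage line appear to break down as follows. This is an observation from this one log entry, not a documented formula: the prompt itself is 10 tokens, the fetched page accounts for the rest of the input, and the output count includes the thinking tokens.

```python
import json

# The JSON fragment from the usage line above.
details = json.loads(
    '{"candidatesTokenCount": 57, '
    '"promptTokensDetails": [{"modality": "TEXT", "tokenCount": 10}], '
    '"toolUsePromptTokenCount": 9603, '
    '"toolUsePromptTokensDetails": [{"modality": "TEXT", "tokenCount": 9603}], '
    '"thoughtsTokenCount": 30}'
)

prompt_tokens = sum(item["tokenCount"] for item in details["promptTokensDetails"])
input_total = prompt_tokens + details["toolUsePromptTokenCount"]
output_total = details["candidatesTokenCount"] + details["thoughtsTokenCount"]

print(f"{input_total:,} input, {output_total} output")
```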

I intercepted a request from it using django-http-debug and saw the following request headers:

Accept: */*

User-Agent: Google

Accept-Encoding: gzip, br

The request came from 192.178.9.35, a Google IP. It did not appear to execute JavaScript on the page, instead feeding the original raw HTML to the model.

Introducing Gemma 3 270M: The compact model for hyper-efficient AI (via) New from Google:

Gemma 3 270M, a compact, 270-million parameter model designed from the ground up for task-specific fine-tuning with strong instruction-following and text structuring capabilities already trained in.

This model is tiny. The version I tried was the LM Studio GGUF one, a 241MB download.

It works! You can say "hi" to it and ask it very basic questions like "What is the capital of France".

I tried "Generate an SVG of a pelican riding a bicycle" about a dozen times and didn't once get back an SVG that was more than just a blank square... but at one point it did decide to write me this poem instead, which was nice:

+-----------------------+

| Pelican Riding Bike |

+-----------------------+

| This is the cat! |

| He's got big wings and a happy tail. |

| He loves to ride his bike! |

+-----------------------+

| Bike lights are shining bright. |

| He's got a shiny top, too! |

| He's ready for adventure! |

+-----------------------+

That's not really the point though. The Gemma 3 team make it very clear that the goal of this model is to support fine-tuning: a model this tiny is never going to be useful for general purpose LLM tasks, but given the right fine-tuning data it should be able to specialize for all sorts of things:

In engineering, success is defined by efficiency, not just raw power. You wouldn't use a sledgehammer to hang a picture frame. The same principle applies to building with AI.

Gemma 3 270M embodies this "right tool for the job" philosophy. It's a high-quality foundation model that follows instructions well out of the box, and its true power is unlocked through fine-tuning. Once specialized, it can execute tasks like text classification and data extraction with remarkable accuracy, speed, and cost-effectiveness. By starting with a compact, capable model, you can build production systems that are lean, fast, and dramatically cheaper to operate.

Here's their tutorial on Full Model Fine-Tune using Hugging Face Transformers, which I have not yet attempted to follow.

I imagine this model will be particularly fun to play with directly in a browser using transformers.js.

Update: It is! Here's a bedtime story generator using Transformers.js (requires WebGPU, so Chrome-like browsers only). Here's the source code for that demo.

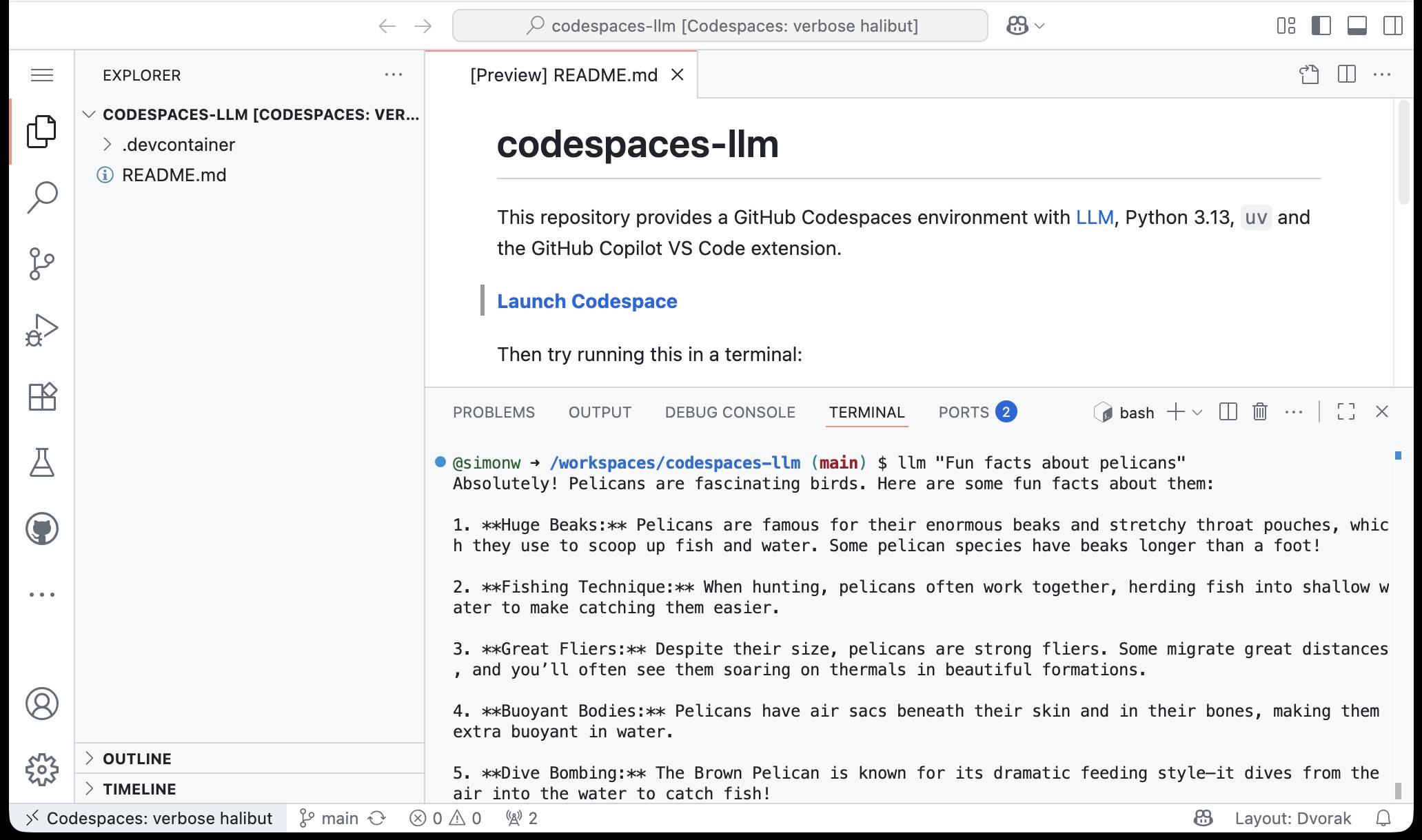

simonw/codespaces-llm. GitHub Codespaces provides full development environments in your browser, and is free to use for anyone with a GitHub account. Each environment has a full Linux container and a browser-based UI using VS Code.

I found out today that GitHub Codespaces come with a GITHUB_TOKEN environment variable... and that token works as an API key for accessing LLMs in the GitHub Models collection, which includes dozens of models from OpenAI, Microsoft, Mistral, xAI, DeepSeek, Meta and more.

Anthony Shaw's llm-github-models plugin for my LLM tool allows it to talk directly to GitHub Models. I filed a suggestion that it could pick up that GITHUB_TOKEN variable automatically and Anthony shipped v0.18.0 with that feature a few hours later.

... which means you can now run the following in any Python-enabled Codespaces container and get a working llm command:

pip install llm

llm install llm-github-models

llm models default github/gpt-4.1

llm "Fun facts about pelicans"

Setting the default model to github/gpt-4.1 means you get free (albeit rate-limited) access to that OpenAI model.

To save you from needing to even run that sequence of commands I've created a new GitHub repository, simonw/codespaces-llm, which pre-installs and runs those commands for you.

Anyone with a GitHub account can use this URL to launch a new Codespaces instance with a configured llm terminal command ready to use:

codespaces.new/simonw/codespaces-llm?quickstart=1

While putting this together I wrote up what I've learned about devcontainers so far as a TIL: Configuring GitHub Codespaces using devcontainers.

LLM 0.27, the annotated release notes: GPT-5 and improved tool calling

I shipped LLM 0.27 today (followed by a 0.27.1 with minor bug fixes), adding support for the new GPT-5 family of models from OpenAI plus a flurry of improvements to the tool calling features introduced in LLM 0.26. Here are the annotated release notes.

[... 1,174 words]

OpenAI’s new open weight (Apache 2) models are really good

The long promised OpenAI open weight models are here, and they are very impressive. They’re available under proper open source licenses—Apache 2.0—and come in two sizes, 120B and 20B.

[... 2,771 words]

Claude Opus 4.1. Surprise new model from Anthropic today - Claude Opus 4.1, which they describe as "a drop-in replacement for Opus 4".

My favorite thing about this model is the version number - treating this as a .1 version increment looks like it's an accurate depiction of the model's capabilities.

Anthropic's own benchmarks show very small incremental gains.

Comparing Opus 4 and Opus 4.1 (I got 4.1 to extract this information from a screenshot of Anthropic's own benchmark scores, then asked it to look up the links, then verified the links myself and fixed a few):

- Agentic coding (SWE-bench Verified): From 72.5% to 74.5%

- Agentic terminal coding (Terminal-Bench): From 39.2% to 43.3%

- Graduate-level reasoning (GPQA Diamond): From 79.6% to 80.9%

- Agentic tool use (TAU-bench):

  - Retail: From 81.4% to 82.4%

  - Airline: From 59.6% to 56.0% (decreased)

- Multilingual Q&A (MMMLU): From 88.8% to 89.5%

- Visual reasoning (MMMU validation): From 76.5% to 77.1%

- High school math competition (AIME 2025): From 75.5% to 78.0%
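A short sketch that turns the list above into percentage-point changes, which makes the size of the increments easier to compare. The scores are copied from the list; the short labels are just for display.

```python
# (Opus 4, Opus 4.1) scores in percent, copied from the list above.
SCORES = {
    "SWE-bench Verified": (72.5, 74.5),
    "Terminal-Bench": (39.2, 43.3),
    "GPQA Diamond": (79.6, 80.9),
    "TAU-bench Retail": (81.4, 82.4),
    "TAU-bench Airline": (59.6, 56.0),
    "MMMLU": (88.8, 89.5),
    "MMMU validation": (76.5, 77.1),
    "AIME 2025": (75.5, 78.0),
}

# Change in percentage points, rounded to hide floating point noise.
deltas = {name: round(new - old, 1) for name, (old, new) in SCORES.items()}

for name, delta in sorted(deltas.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<20} {delta:+.1f}")
```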

Likewise, the model card shows only tiny changes to the various safety metrics that Anthropic track.

It's priced the same as Opus 4 - $15/million for input and $75/million for output, making it one of the most expensive models on the market today.

I had it draw me this pelican riding a bicycle:

For comparison I got a fresh new pelican out of Opus 4 which I actually like a little more:

I shipped llm-anthropic 0.18 with support for the new model.