41 posts tagged “ollama”

Ollama is a tool for downloading and running Large Language Models.

2024

I can now run a GPT-4 class model on my laptop

Meta’s new Llama 3.3 70B is a genuinely GPT-4 class Large Language Model that runs on my laptop.

[... 2,905 words]Meta AI release Llama 3.3. This new Llama-3.3-70B-Instruct model from Meta AI makes some bold claims:

This model delivers similar performance to Llama 3.1 405B with cost effective inference that’s feasible to run locally on common developer workstations.

I have 64GB of RAM in my M2 MacBook Pro, so I'm looking forward to trying a slightly quantized GGUF of this model to see if I can run it while still leaving some memory free for other applications.

Update: Ollama have a 43GB GGUF available now. And here's an MLX 8bit version and other MLX quantizations.

Llama 3.3 has 70B parameters, a 128,000 token context length and was trained to support English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

The model card says that the training data was "A new mix of publicly available online data" - 15 trillion tokens with a December 2023 cut-off.

They used "39.3M GPU hours of computation on H100-80GB (TDP of 700W) type hardware" which they calculate as 11,390 tons CO2eq. I believe that's equivalent to around 20 fully loaded passenger flights from New York to London (at ~550 tons per flight).

Update 19th January 2025: On further consideration I no longer trust my estimate here: it's surprisingly hard to track down reliable numbers but I think the total CO2 used by those flights may be more in the order of 200-400 tons, so my estimate for Llama 3.3 70B should have been more in the order of between 28 and 56 flights. Don't trust those numbers either though!

QwQ: Reflect Deeply on the Boundaries of the Unknown. Brand new openly licensed (Apache 2) model from Alibaba Cloud's Qwen team, this time clearly inspired by OpenAI's work on reasoning in o1.

I love the flowery language they use to introduce the new model:

Through deep exploration and countless trials, we discovered something profound: when given time to ponder, to question, and to reflect, the model’s understanding of mathematics and programming blossoms like a flower opening to the sun. Just as a student grows wiser by carefully examining their work and learning from mistakes, our model achieves deeper insight through patient, thoughtful analysis.

It's already available through Ollama as a 20GB download. I initially ran it like this:

ollama run qwq

This downloaded the model and started an interactive chat session. I tried the classic "how many rs in strawberry?" and got this lengthy but correct answer, which concluded:

Wait, but maybe I miscounted. Let's list them: 1. s 2. t 3. r 4. a 5. w 6. b 7. e 8. r 9. r 10. y Yes, definitely three "r"s. So, the word "strawberry" contains three "r"s.

Then I switched to using LLM and the llm-ollama plugin. I tried prompting it for Python that imports CSV into SQLite:

Write a Python function import_csv(conn, url, table_name) which acceopts a connection to a SQLite databse and a URL to a CSV file and the name of a table - it then creates that table with the right columns and imports the CSV data from that URL

It thought through the different steps in detail and produced some decent looking code.

Finally, I tried this:

llm -m qwq 'Generate an SVG of a pelican riding a bicycle'

For some reason it answered in Simplified Chinese. It opened with this:

生成一个SVG图像,内容是一只鹈鹕骑着一辆自行车。这听起来挺有趣的!我需要先了解一下什么是SVG,以及如何创建这样的图像。

Which translates (using Google Translate) to:

Generate an SVG image of a pelican riding a bicycle. This sounds interesting! I need to first understand what SVG is and how to create an image like this.

It then produced a lengthy essay discussing the many aspects that go into constructing a pelican on a bicycle - full transcript here. After a full 227 seconds of constant output it produced this as the final result.

I think that's pretty good!

Quantization matters (via) What impact does quantization have on the performance of an LLM? been wondering about this for quite a while, now here are numbers from Paul Gauthier.

He ran differently quantized versions of Qwen 2.5 32B Instruct through his Aider code editing benchmark and saw a range of scores.

The original released weights (BF16) scored highest at 71.4%, with Ollama's qwen2.5-coder:32b-instruct-fp16 (a 66GB download) achieving the same score.

The quantized Ollama qwen2.5-coder:32b-instruct-q4_K_M (a 20GB download) saw a massive drop in quality, scoring just 53.4% on the same benchmark.

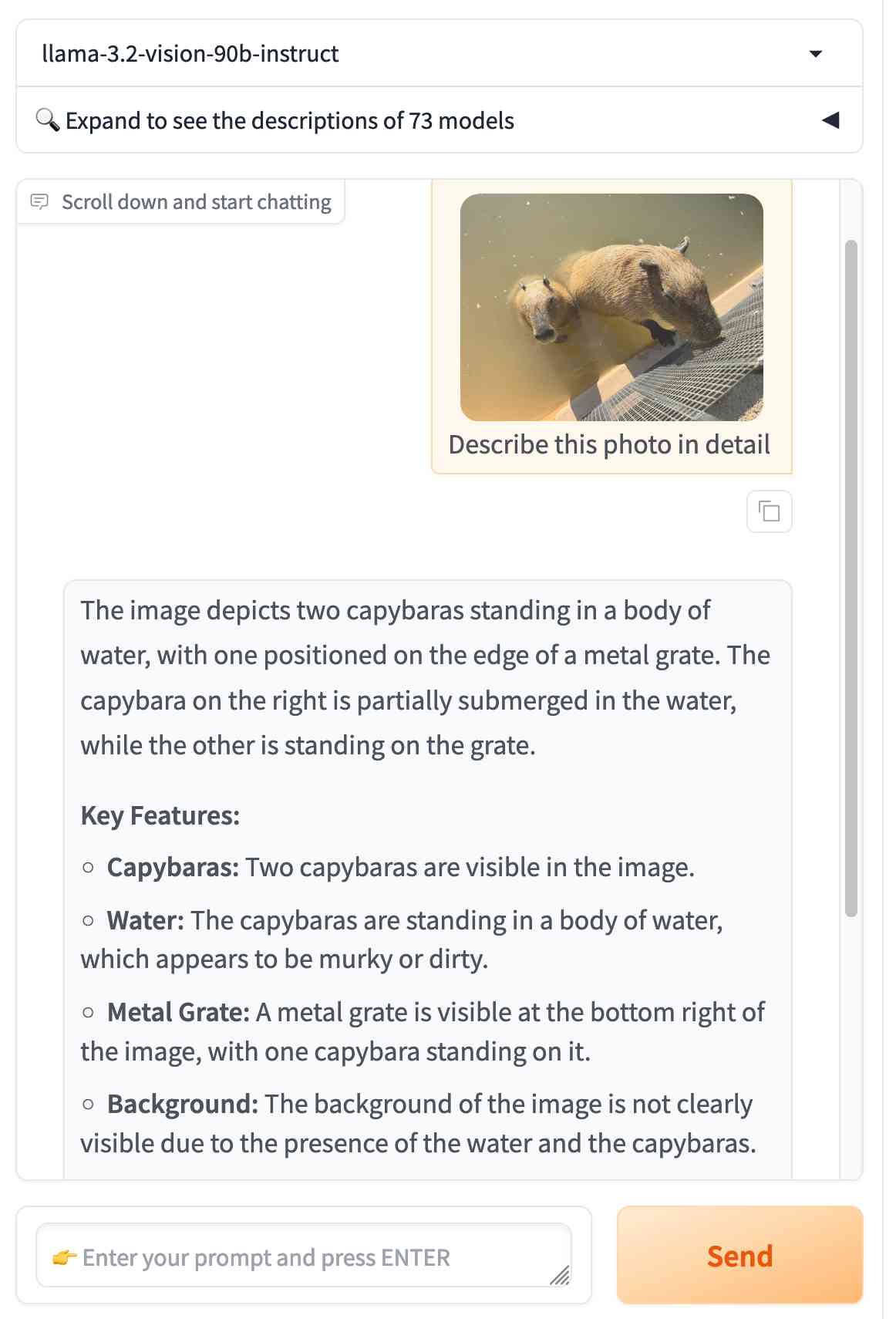

Ollama: Llama 3.2 Vision. Ollama released version 0.4 last week with support for Meta's first Llama vision model, Llama 3.2.

If you have Ollama installed you can fetch the 11B model (7.9 GB) like this:

ollama pull llama3.2-vision

Or the larger 90B model (55GB download, likely needs ~88GB of RAM) like this:

ollama pull llama3.2-vision:90b

I was delighted to learn that Sukhbinder Singh had already contributed support for LLM attachments to Sergey Alexandrov's llm-ollama plugin, which means the following works once you've pulled the models:

llm install --upgrade llm-ollama

llm -m llama3.2-vision:latest 'describe' \

-a https://static.simonwillison.net/static/2024/pelican.jpg

This image features a brown pelican standing on rocks, facing the camera and positioned to the left of center. The bird's long beak is a light brown color with a darker tip, while its white neck is adorned with gray feathers that continue down to its body. Its legs are also gray.

In the background, out-of-focus boats and water are visible, providing context for the pelican's environment.

That's not a bad description of this image, especially for a 7.9GB model that runs happily on my MacBook Pro.

Qwen2.5-Coder-32B is an LLM that can code well that runs on my Mac

There’s a whole lot of buzz around the new Qwen2.5-Coder Series of open source (Apache 2.0 licensed) LLM releases from Alibaba’s Qwen research team. On first impression it looks like the buzz is well deserved.

[... 697 words]Conflating Overture Places Using DuckDB, Ollama, Embeddings, and More.

Drew Breunig's detailed tutorial on "conflation" - combining different geospatial data sources by de-duplicating address strings such as RESTAURANT LOS ARCOS,3359 FOOTHILL BLVD,OAKLAND,94601 and LOS ARCOS TAQUERIA,3359 FOOTHILL BLVD,OAKLAND,94601.

Drew uses an entirely offline stack based around Python, DuckDB and Ollama and finds that a combination of H3 geospatial tiles and mxbai-embed-large embeddings (though other embedding models should work equally well) gets really good results.

Llama 3.2. In further evidence that AI labs are terrible at naming things, Llama 3.2 is a huge upgrade to the Llama 3 series - they've released their first multi-modal vision models!

Today, we’re releasing Llama 3.2, which includes small and medium-sized vision LLMs (11B and 90B), and lightweight, text-only models (1B and 3B) that fit onto edge and mobile devices, including pre-trained and instruction-tuned versions.

The 1B and 3B text-only models are exciting too, with a 128,000 token context length and optimized for edge devices (Qualcomm and MediaTek hardware get called out specifically).

Meta partnered directly with Ollama to help with distribution, here's the Ollama blog post. They only support the two smaller text-only models at the moment - this command will get the 3B model (2GB):

ollama run llama3.2

And for the 1B model (a 1.3GB download):

ollama run llama3.2:1b

I had to first upgrade my Ollama by clicking on the icon in my macOS task tray and selecting "Restart to update".

The two vision models are coming to Ollama "very soon".

Once you have fetched the Ollama model you can access it from my LLM command-line tool like this:

pipx install llm

llm install llm-ollama

llm chat -m llama3.2:1b

I tried running my djp codebase through that tiny 1B model just now and got a surprisingly good result - by no means comprehensive, but way better than I would ever expect from a model of that size:

files-to-prompt **/*.py -c | llm -m llama3.2:1b --system 'describe this code'

Here's a portion of the output:

The first section defines several test functions using the

@djp.hookimpldecorator from the djp library. These hook implementations allow you to intercept and manipulate Django's behavior.

test_middleware_order: This function checks that the middleware order is correct by comparing theMIDDLEWAREsetting with a predefined list.test_middleware: This function tests various aspects of middleware:- It retrieves the response from the URL

/from-plugin/using theClientobject, which simulates a request to this view.- It checks that certain values are present in the response:

X-DJP-Middleware-AfterX-DJP-MiddlewareX-DJP-Middleware-Before[...]

I found the GGUF file that had been downloaded by Ollama in my ~/.ollama/models/blobs directory. The following command let me run that model directly in LLM using the llm-gguf plugin:

llm install llm-gguf

llm gguf register-model ~/.ollama/models/blobs/sha256-74701a8c35f6c8d9a4b91f3f3497643001d63e0c7a84e085bed452548fa88d45 -a llama321b

llm chat -m llama321b

Meta themselves claim impressive performance against other existing models:

Our evaluation suggests that the Llama 3.2 vision models are competitive with leading foundation models, Claude 3 Haiku and GPT4o-mini on image recognition and a range of visual understanding tasks. The 3B model outperforms the Gemma 2 2.6B and Phi 3.5-mini models on tasks such as following instructions, summarization, prompt rewriting, and tool-use, while the 1B is competitive with Gemma.

Here's the Llama 3.2 collection on Hugging Face. You need to accept the new Llama 3.2 Community License Agreement there in order to download those models.

You can try the four new models out via the Chatbot Arena - navigate to "Direct Chat" there and select them from the dropdown menu. You can upload images directly to the chat there to try out the vision features.

Mistral Large 2 (via) The second release of a GPT-4 class open weights model in two days, after yesterday's Llama 3.1 405B.

The weights for this one are under Mistral's Research License, which "allows usage and modification for research and non-commercial usages" - so not as open as Llama 3.1. You can use it commercially via the Mistral paid API.

Mistral Large 2 is 123 billion parameters, "designed for single-node inference" (on a very expensive single-node!) and has a 128,000 token context window, the same size as Llama 3.1.

Notably, according to Mistral's own benchmarks it out-performs the much larger Llama 3.1 405B on their code and math benchmarks. They trained on a lot of code:

Following our experience with Codestral 22B and Codestral Mamba, we trained Mistral Large 2 on a very large proportion of code. Mistral Large 2 vastly outperforms the previous Mistral Large, and performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

They also invested effort in tool usage, multilingual support (across English, French, German, Spanish, Italian, Portuguese, Dutch, Russian, Chinese, Japanese, Korean, Arabic, and Hindi) and reducing hallucinations:

One of the key focus areas during training was to minimize the model’s tendency to “hallucinate” or generate plausible-sounding but factually incorrect or irrelevant information. This was achieved by fine-tuning the model to be more cautious and discerning in its responses, ensuring that it provides reliable and accurate outputs.

Additionally, the new Mistral Large 2 is trained to acknowledge when it cannot find solutions or does not have sufficient information to provide a confident answer.

I went to update my llm-mistral plugin for LLM to support the new model and found that I didn't need to - that plugin already uses llm -m mistral-large to access the mistral-large-latest endpoint, and Mistral have updated that to point to the latest version of their Large model.

Ollama now have mistral-large quantized to 4 bit as a 69GB download.

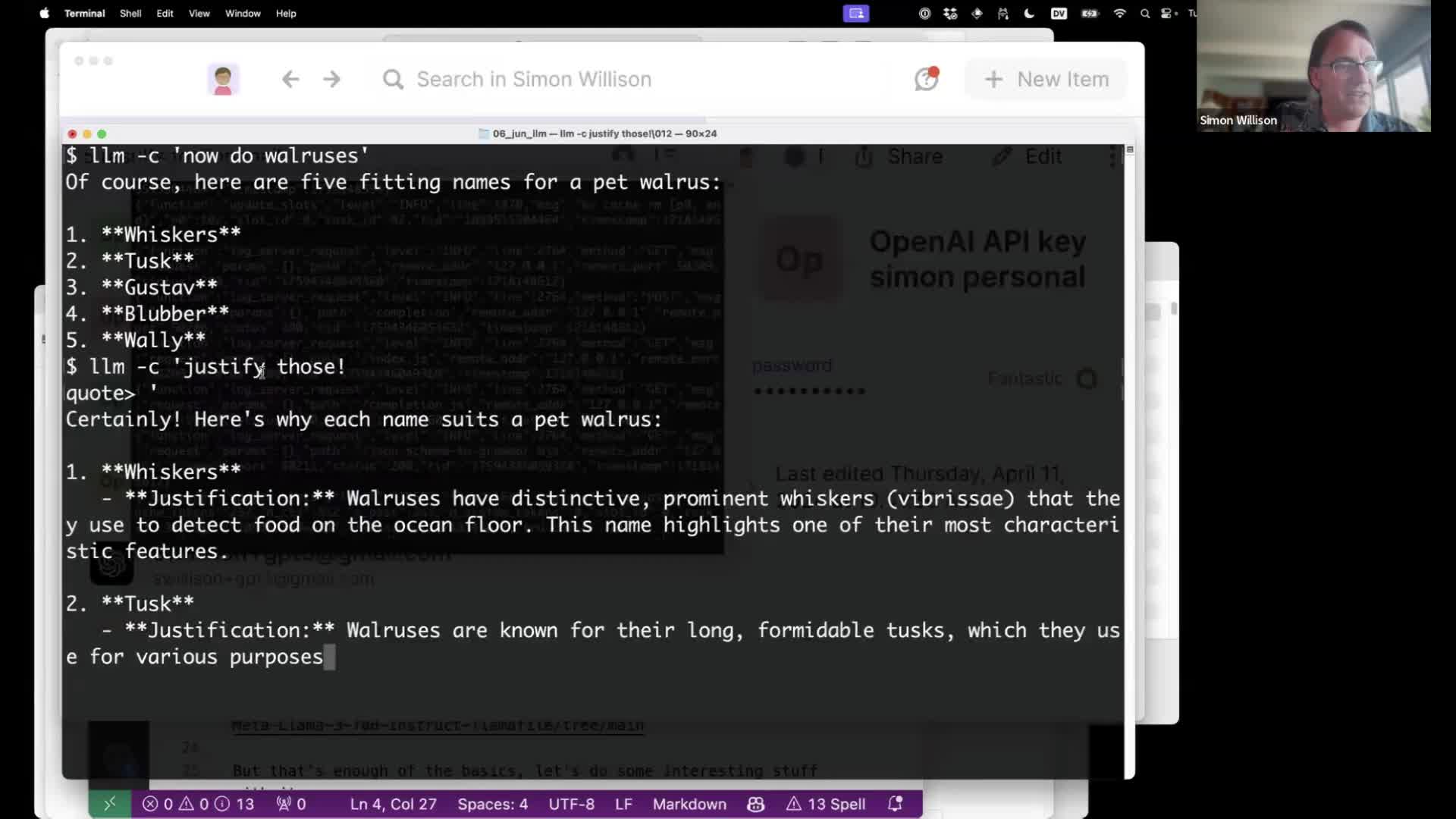

Language models on the command-line

I gave a talk about accessing Large Language Models from the command-line last week as part of the Mastering LLMs: A Conference For Developers & Data Scientists six week long online conference. The talk focused on my LLM Python command-line utility and ways you can use it (and its plugins) to explore LLMs and use them for useful tasks.

[... 4,992 words]2023

Ollama (via) This tool for running LLMs on your own laptop directly includes an installer for macOS (Apple Silicon) and provides a terminal chat interface for interacting with models. They already have Llama 2 support working, with a model that downloads directly from their own registry service without need to register for an account or work your way through a waiting list.